by Scott Muniz | Aug 25, 2020 | Uncategorized

This article is contributed. See the original author and article here.

We recently released an update to Microsoft.Data.SqlClient, version 2.0.1. The update addresses several issues that are important to our customers.

Updates in Microsoft.Data.SqlClient 2.0.1 include:

Added

- Added support for a new Configuration Section, SqlClientAuthenticationProviders (duplicate of existing SqlAuthenticationProviders), to allow co-existence of configurations for both drivers, “System.Data.SqlClient” and “Microsoft.Data.SqlClient” #701

Fixed

- Fixed pooled connection re-use on access token expiry issue when using Active Directory authentication modes #639

- Fixed transient fault handling for Pooled connections #638

- Fixed Enclave session cache issue with Azure Database #628

- Reverted changes to return empty DataTable from GetSchemaTable to return null as before. #697

- Fixed configuration section collision issue with System.Data.SqlClient type #701

- Fixed blank error message [HTTP Provider] issues due to unexpected pre-login failures when using Native SNI. Fixed with Microsoft.Data.SqlClient.SNI v2.0.1 and Microsoft.Data.SqlClient.SNI.runtime v2.0.1 release versions.

To get the new package, add a NuGet reference to Microsoft.Data.SqlClient in your application.

For the list of changes in Microsoft.Data.SqlClient 2.0.1, you can also see the Release Notes.

If you encounter any issues or have any feedback, head over to the SqlClient GitHub repository and submit an issue.

David Engel

by Scott Muniz | Aug 25, 2020 | Azure, Microsoft, Technology, Uncategorized

This article is contributed. See the original author and article here.

We are thankful for all the encouragement and positive feedback you’ve shared with us since our recent feature releases enhancing the Azure CLI’s user experience. Since then, we have doubled down our effort and are excited to share with you some more progress in this space. This month, the spotlight will be on the new experimental feature az config alongside its unique capability to dynamically install extensions

Transforming az configure to az config

If you are one of our typical users who script and automate on a regular basis, then you must have tried az configure to configure basic settings. We heard from you that you felt limited by its defaulting capabilities or the lack thereof in this command (since you can only set “defaults” in tool) and therefore have put together a simpler, more familiar version of the command, with more configuration options for you to use.

Az config is the transformed version of the original az configure command. Its subcommands come in the form of positional arguments which makes it more git-like and more syntactically intuitive to use. Our team intended to experiment new ideas with a similar command name, while preserving the current state of az configure — thereby the birth of az config. With az config, you can now config various settings across all sections in az that were previously only configurable by directly editing the configuration file.

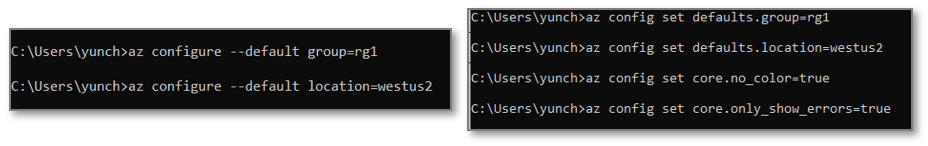

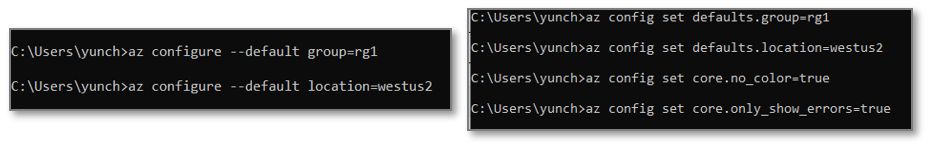

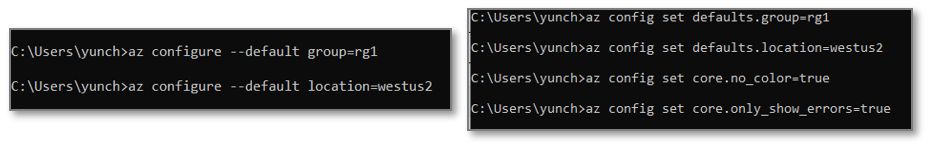

Figure1: Comparison between az configure vs. az config

It also enables you to unset and clear configs in tool, which was previously unavailable. This further equip you to smoothly complete your end to end jobs to be done, without ever having to leave the tool

Note: az config is in the experimental state to get more feedback from users like you. Hence, we are currently supporting both this and az configure. We do plan to merge the two and eventually support only one command across all in tool settings so if you have specific preferences/feedback, please do share them with us. We’d love to incorporate your feedback in the final product.

Installing extensions dynamically with az config

Did you know that we have over 80 Azure CLI extensions available for you to use?

If not, we highly encourage you to explore and try them out — Azure service teams have invested tremendous amount of effort to bridge the feature gaps so you can perform all kinds of tricks within az, irrespective of whether you are a newbie or an experienced power user of the Azure CLI (extensions). If you have been frustrated at some point in time with the lack of discoverability and errors around extensions, then the following feature is for you.

Dynamic extension installer is Azure CLI’s intelligent and interactive way to install extensions on your behalf, after you’d attempted to use extension commands when the extension has yet to be installed. It’s now part of Azure CLI core and you can configure the settings via the new az config command.

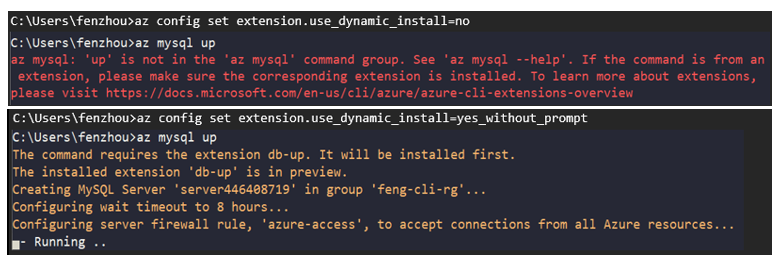

By default, it’s set to no because we’d like you to be in control with the settings; this means you will receive the command_not_found error as usual if you attempt to use an extension command without the extension being installed. However when it’s set to yes_without_prompt, the tool will automatically install the extensions and rerun your extension command. Consider the following comparison when spinning up a MySQL database using az mysql up:

Figure 2: Comparison of dynamic extension installer settings, no vs. yes_without_prompt

We can see that the previously unavoidable error is now out of the picture with this capability

This setting is especially handy in automation use cases – imagine your page long script leverages multiple extension commands that have frequent updates. With the dynamic installer in place, the hassle around extension management is conveniently eliminated

If you prefer using your CLI interactively in a terminal or shell, there are a couple other options for you to choose from. For instance, with az config set extension.use_dynamic_install=yes_prompt, the tool will first prompt you a reminder prior to installing the any extension on your behalf

Here’s where you can learn more about all the settings. Please feel free to try them out and let us know what you think about this feature!

Call to action

We’d love for you to try out these new experiences and share us your feedback on their usability and applicability for your day-to-day use cases.

Similar to last time, some of these improvements are early in the preview or experimental stage but we certainly do look forward to improving them to serve you better. If you’re interested, here is where you can learn more about new features in the ever improving Azure CLI.

Thank you for reading! We’re excited to share with you more delightful features in upcoming releases!

by Scott Muniz | Aug 25, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Due to performance and locking reasons, change datatype column can be a long-running operation.

Supose table PRU with bigserial id column and column A, with integer data saved as Text that you want to change to Integer type:

SELECT * FROM pg_stat_statements_reset();

CREATE TABLE PRU (id bigserial, A TEXT);

INSERT INTO PRU (A) VALUES ('111');

INSERT INTO PRU (A) VALUES ('111');

Generate rows until 2M, repeating this sentence:

INSERT INTO PRU SELECT * FROM PRU;

We want to change the column datatype:

ALTER /*optionA*/ TABLE PRU ALTER COLUMN A TYPE INTEGER USING A::INTEGER;

We could review stats from this command with following command:

select * from pg_stat_statements where query like '%optionA%';

This method is the easiest one, but could generate high contention due to required exclusive lock for the table that could generate errors in applications, most of them will require stop applications to perform this type of long running operations.

Another approach to change the datatype of the column could be to add some extra columns, migrate the data to those columns and finally drop old column and rename new one.

The advantages of doing in this way is that the customer has more control about the process, it can be executed during multiples hours/days.

//

// Add 2 auxiliary columns

//

ALTER TABLE PRU ADD COLUMN A1 INTEGER, ADD COLUMN A1_CHANGED BOOLEAN;

//

// Trigger to take care of ongoing changes from the applications

//

CREATE OR REPLACE FUNCTION set_a1()

RETURNS TRIGGER AS

$func$

BEGIN

IF (TG_OP='INSERT') THEN

NEW.a1:=NEW.a::integer;

ELSEIF (TG_OP='UPDATE') THEN

IF (NEW.a <> OLD.a) THEN

NEW.a1:=NEW.a::integer;

ELSEIF (NEW.a is null and OLD.a is not null) THEN

NEW.a1:=null;

ELSEIF (NEW.a is not null and OLD.a is null) THEN

NEW.a1:=NEW.a::integer;

END IF;

END IF;

NEW.a1_changed:=true;

RETURN NEW;

END

$func$ LANGUAGE plpgsql;

DROP TRIGGER IF EXISTS set_a1 ON PRU;

CREATE TRIGGER set_a1

BEFORE INSERT OR UPDATE ON pru

FOR EACH ROW EXECUTE PROCEDURE set_a1();

//

// Update sentence with limit number or fows to update in single transaction

//

// This sentence must be repeated multiple times until all rows were updated

//

UPDATE /*optionB*/ PRU SET A1=A::INTEGER, A1_CHANGED=true WHERE id IN (SELECT id FROM PRU WHERE A1_CHANGED is null limit 100000);

//

// Check the process

//

// Current changed rows:

SELECT COUNT(1) FROM PRU WHERE A1_CHANGED=true;

// Current pending rows:

SELECT COUNT(1) FROM PRU WHERE A1_CHANGED is null;

//

// Final work

//

// After no rows need changes, we can switch the columns

BEGIN WORK;

LOCK TABLE PRU IN SHARE MODE;

ALTER /*optionB*/ TABLE PRU DROP COLUMN A;

ALTER /*optionB*/ TABLE PRU DROP COLUMN A1_CHANGED;

ALTER /*optionB*/ TABLE PRU RENAME A1 TO A;

DROP TRIGGER set_a1 ON PRU;

DROP FUNCTION set_a1();

COMMIT WORK;

We could review stats from this command with following command:

select * from pg_stat_statements where query like '%optionB%';

Here we could observe more resources used, more space allocated in table, but application has been working most of the time without high contention locking.

by Scott Muniz | Aug 25, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Use of COPY command as an alternative way to PostgreSQL data migration

Using pg_dump, psql, recover data structure in new server

Generate COPY command pipes

Copy real data through COPY command pipes

Advantages

- COPY command through pipe, no space required in client side

- Increased performance with parallelism

Disadvantages

- COPY method is not taking care of indexing, foreign keys, additional steps will be required to have a complete migration mechanism using this approach

Preparation

pgsource.sh = bash script with psql and connection string to source tests database

pgdestination.sh = bash script with psql and connection string to destination tests database

cat <<EOF > pgsource.sh

PGPASSWORD=<sourcePassword> psql -t -h <sourceServerName>.postgres.database.azure.com -U <username>@<sourceServerName> tests

EOF

chmod +x pgsource.sh

cat <<EOF > pgdestination.sh

PGPASSWORD=<destinationPassword> psql -t -h <destinationServerName>.postgres.database.azure.com -U <username>@<destinationServerName> tests

EOF

chmod +x pgdestination.sh

Migrating Basic Azure Database for PostgreSQL server to Azure Database for PostgreSQL server GeneralPurpose

Data preparation

pgsource has 1 single table “pru” with 1 million rows, will generate some other table to test with, there are no indexes or foreign keys in this database

for j in {1..20}

do

echo "create table pru$j as select * from pru;"|./pgsource.sh

done

Test full restoration with pg_dump/pg_restore (no parallel)

Internally, pg_dump and pg_restore uses the COPY command

time PGPASSWORD=<sourcePassword> pg_dump -C -Fc -h <sourceServerName>.postgres.database.azure.com -U <username>@<sourceServerName> tests|PGPASSWORD=<destinationPassword> pg_restore -h <destinationServerName>.postgres.database.azure.com -U <username>@<destinationServerName> -C -c -d postgres

real 6m15.310s

user 0m22.976s

sys 0m9.393s

– Total Time: 6m15sec

Test full restoration with pg_dump/pg_restore (parallel)

Need to use the directory format export, dump to regular files (see: https://www.postgresql.org/docs/10/app-pgrestore.html, https://www.postgresql.org/docs/10/app-pgdump.html)

time PGPASSWORD=<sourcePassword> pg_dump -C -Fd -h <sourceServerName>.postgres.database.azure.com -U <username>@<sourceServerName> tests -j 4 -f testsdir

real 3m23.004s

user 0m20.291s

sys 0m13.888s

time PGPASSWORD=<destinationPassword> pg_restore -h <destinationServerName>.postgres.database.azure.com -U <username>@<destinationServerName> -C -c -d postgres -j 4 testsdir

real 0m56.175s

user 0m1.671s

sys 0m0.478s

– Total Time: 4m19sec

Test alternative restoration with COPY (no parallel)

time PGPASSWORD=<sourcePassword> pg_dump -C -Fc --schema-only -h <sourceServerName>.postgres.database.azure.com -U <username>@<sourceServerName> tests|PGPASSWORD=<destinationPassword> pg_restore -h <destinationServerName>.postgres.database.azure.com -U <username>@<destinationServerName> --schema-only -C -c -d postgres

real 0m15.288s

user 0m0.144s

sys 0m0.054s

time for j in `echo "SELECT table_name FROM information_schema.tables WHERE table_schema = 'public' and table_type='BASE TABLE' ORDER BY table_name"|./pgsource.sh`

do

echo "copy $j FROM PROGRAM 'echo copy binary $j to STDOUT|./pgsource.sh' WITH BINARY;"|./pgdestination.sh

done

real 7m47.179s

user 0m19.518s

sys 0m23.467s

– Total Time: 8m2sec

Test alternative restoration with COPY (parallel)

No need to use dump to regular file, still with pipe mechanism

export maxParal=4

>curParal.tmp

time for j in `echo "SELECT table_name FROM information_schema.tables WHERE table_schema = 'public' and table_type='BASE TABLE' ORDER BY table_name"|./pgsource.sh`

do

echo $j >>curParal.tmp

nohup $SHELL <<EOF &

echo "copy $j FROM PROGRAM 'echo copy binary $j to STDOUT|./pgsource.sh' WITH BINARY;"|./pgdestination.sh; sed -i '/^$j$/d' curParal.tmp

EOF

while [ `wc -l curParal.tmp|cut -f 1 -d " "` -ge $maxParal ]

do

sleep 1

done

echo $curParal $maxParal

done

real 2m59.491s

user 0m24.388s

sys 0m40.200s

– Total Time: 3m14sec

Results

Without paralellism:

pg_dump/pg_restore won with 6m15sec vs 8m2sec of COPY approach

With paralelism:

COPY approach won with 3m14sec vs 4m19sec of pg_dump/pg_restore

Although pg_dump/pg_restore uses COPY command internally, when we use a manual parallelism mechanism we can obtain better execution times due to the fact that we can create Pipes without need to save data to staging area as pg_dump/pg_restore require when using parallelism.

Using COPY command to ingest from http datasources

Using COPY command we can ingest not only .csv data or copy from another database through pipes, we can create authentic ETL like processes with a single command

This type of pipeline should be executed with the “copy” psql instruction, that is different from the “copy” command, because resources should be accessible from psql client side, not the database server. https://www.postgresql.org/docs/11/sql-copy.html

In this case we are going to ingest the open data dataset “COVID-19 cases worldwide” csv from https://data.europa.eu/euodp/en/data/dataset/covid-19-coronavirus-data

First create the table covid19casesww

echo "create table covid19casesww (dateRep date,day integer,month integer,year integer,cases integer,deaths integer,countriesAndTerritories text,geoId text,countryterritoryCode text,popData2019 integer,continentExp text);"|PGPASSWORD=<destinationPassword> psql -t -h <destinationServerName>.postgres.database.azure.com -U <username>@<destinationServerName> tests

Pipe from wget to copy command

echo "set datestyle to SQL,DMY;COPY covid19casesww FROM PROGRAM 'wget -q -O - "$@" "https://opendata.ecdc.europa.eu/covid19/casedistribution/csv"|tail -n +2' CSV DELIMITER ',';"|PGPASSWORD=<destinationPassword> psql -t -h <destinationServerName>.postgres.database.azure.com -U <username>@<destinationServerName> tests

Now we can access the data and calculate, by example, the 10th territories with low number of cases

echo "select sum(cases) as cases, countriesAndTerritories, continentexp from covid19casesww group by countriesAndTerritories,continentExp order by 1 asc limit 10;"|PGPASSWORD=<destinationPassword> psql -h <destinationServerName>.postgres.database.azure.com -U <username>@<destinationServerName> tests

cases | countriesandterritories | continentexp

-------+-----------------------------------+--------------

3 | Anguilla | America

7 | Bonaire, Saint Eustatius and Saba | America

8 | British_Virgin_Islands | America

11 | Montserrat | America

11 | Papua_New_Guinea | Oceania

11 | Seychelles | Africa

12 | Holy_See | Europe

13 | Falkland_Islands_(Malvinas) | America

13 | Greenland | America

15 | Saint_Kitts_and_Nevis | America

(10 rows)

Using COPY command to ingest from other databases

Suppose we want migrate data from a Azure Database for MySQL server database to a Azure Database for PostgreSQL server database

First create the table prumysql

echo "create table prumysql (a text, b integer);"|PGPASSWORD=<destinationPassword> psql -t -h <destinationServerName>.postgres.database.azure.com -U <username>@<destinationServerName> tests

Pipe from mysql to copy command

echo "COPY prumysql FROM PROGRAM 'echo select a,b from prumysql|mysql -h <sourceServerName>.mysql.database.azure.com -u <username>@<sourceServerName> -p<sourcePassword> -sN --ssl tests -B' ;"|PGPASSWORD=<destinationPassword> psql -t -h <destinationServerName>.postgres.database.azure.com -U <username>@<destinationServerName> tests

Recent Comments