by Scott Muniz | Aug 18, 2020 | Azure, Microsoft, Technology, Uncategorized

This article is contributed. See the original author and article here.

Within Azure SQL, maintenance tasks such as backups and integrity checks are handled for you. While you may be able to get away with automatic updates keeping your statistics up to date, sometimes that is not enough. Proactively managing statistics in Azure SQL requires some extra steps, and in this Data Exposed episode with MVP Erin Stellato, we’ll step through how you can schedule and automate regular updates to statistics.
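To make the idea of proactive statistics maintenance concrete, here is a minimal sketch (not the approach shown in the episode) that decides which statistics look stale and builds the matching `UPDATE STATISTICS` statements. It assumes you have already read `rows` and `modification_counter` for each statistic from `sys.dm_db_stats_properties`. The `StatInfo` type, the `build_update_statements` helper, and the 10% threshold are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class StatInfo:
    """One statistics object, as read from sys.dm_db_stats_properties (hypothetical shape)."""
    schema: str
    table: str
    stat_name: str
    rows: int
    modification_counter: int


def quote_name(name: str) -> str:
    """Bracket-quote a T-SQL identifier, doubling any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def build_update_statements(stats: Iterable[StatInfo], threshold: float = 0.10) -> List[str]:
    """Return UPDATE STATISTICS statements for statistics whose share of
    modified rows meets or exceeds `threshold` (an illustrative value)."""
    statements = []
    for s in stats:
        if s.rows <= 0:
            continue  # empty table: ratio is undefined, skip it
        if s.modification_counter / s.rows >= threshold:
            statements.append(
                f"UPDATE STATISTICS {quote_name(s.schema)}.{quote_name(s.table)} "
                f"({quote_name(s.stat_name)});"
            )
    return statements
```

The generated statements would still need to be executed on a schedule by whatever job mechanism you use. The SQL Server Maintenance Solution listed under Additional Resources covers this far more thoroughly than this sketch does.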

Watch on Data Exposed

Additional Resources:

SQL Server Maintenance Solution

SQL Server Index and Statistics Maintenance

View/share our latest episodes on Channel 9 and YouTube!

by Scott Muniz | Aug 18, 2020 | Uncategorized

Co-authored with @Itamar Falcon

Microsoft Cloud App Security is removing non-secure cipher suites to provide best-in-class encryption and to ensure our service is more secure by default. As of Oct 1, 2020, Microsoft Cloud App Security will no longer support the following cipher suites. From this date forward, any connection using these cipher suites will no longer work as expected, and no support will be provided.

Non-secure cipher suites:

- ECDHE-RSA-AES256-SHA

- ECDHE-RSA-AES128-SHA

- AES256-GCM-SHA384

- AES128-GCM-SHA256

- AES256-SHA256

- AES128-SHA256

- AES256-SHA

- AES128-SHA

Support will continue for the following suites:

- ECDHE-ECDSA-AES256-GCM-SHA384

- ECDHE-ECDSA-AES128-GCM-SHA256

- ECDHE-RSA-AES256-GCM-SHA384

- ECDHE-RSA-AES128-GCM-SHA256

- ECDHE-ECDSA-AES256-SHA384

- ECDHE-ECDSA-AES128-SHA256

- ECDHE-RSA-AES256-SHA384

- ECDHE-RSA-AES128-SHA256

What do I need to do to prepare for this change?

Customers should ensure that all client-server and browser-server combinations are using supported suites in order to maintain the connection to Microsoft Cloud App Security.

Components that may be affected by this change include:

- SIEM Agent – Customers can use any supported cipher suite as described above.

- Microsoft Cloud App Security API – Custom applications and code that are utilizing the Microsoft Cloud App Security API must utilize supported suites to continue functioning. If unsure whether applications function with a supported suite, customers can test by authenticating to our dedicated API endpoint: https://tlsv12.portal-rs.cloudappsecurity.com.

- Apps configured with Conditional Access App Control – If customers are using Conditional Access App Control for any web or native client applications, they must verify that these applications are not using the deprecated suites; access to apps that use non-secure cipher suites and relevant controls will no longer work.

- Log collector – No changes are needed if the provided Docker image has not been modified.
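As a rough way to check a client before the cutoff, the sketch below compares the cipher suite a Python client negotiates against the supported list above. It is only an approximation under stated assumptions: it tests the TLS handshake, not API authentication; it reflects Python's own OpenSSL build rather than the TLS stack of your other applications; and capping the connection at TLS 1.2 is an assumption based on the endpoint's name. The helper names `is_supported` and `negotiated_cipher` are hypothetical.

```python
import socket
import ssl

# Suites listed in this post as remaining supported (OpenSSL-style names).
SUPPORTED_SUITES = frozenset({
    "ECDHE-ECDSA-AES256-GCM-SHA384",
    "ECDHE-ECDSA-AES128-GCM-SHA256",
    "ECDHE-RSA-AES256-GCM-SHA384",
    "ECDHE-RSA-AES128-GCM-SHA256",
    "ECDHE-ECDSA-AES256-SHA384",
    "ECDHE-ECDSA-AES128-SHA256",
    "ECDHE-RSA-AES256-SHA384",
    "ECDHE-RSA-AES128-SHA256",
})


def is_supported(cipher_name: str) -> bool:
    """Return True if the cipher suite name is in the supported list."""
    return cipher_name.strip().upper() in SUPPORTED_SUITES


def negotiated_cipher(host: str, port: int = 443, timeout: float = 10.0) -> str:
    """Open a TLS connection and return the negotiated cipher suite name."""
    context = ssl.create_default_context()
    # Assumption: cap at TLS 1.2 so the result is one of the TLS 1.2-style
    # suite names used in this post.
    context.maximum_version = ssl.TLSVersion.TLSv1_2
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            name, _protocol, _secret_bits = tls.cipher()
            return name
```

For example, `is_supported(negotiated_cipher("tlsv12.portal-rs.cloudappsecurity.com"))` would report whether this particular client lands on a supported suite when connecting to the dedicated test endpoint.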

For additional inquiries please contact support.

– Microsoft Cloud App Security team

by Scott Muniz | Aug 18, 2020 | Uncategorized

Summary: In March 2020 we introduced short inline descriptions of fixes in the master Cumulative Update KB articles. We call those descriptions “blurbs”, and they replace many of the individual KB articles that existed for each product fix and contained only a brief description. In other words, the same one- or two-sentence description that previously existed in a separate article now appears directly in the master CU KB article. For fixes that require a longer explanation, additional information, workarounds, or troubleshooting steps, a full KB article is still created.

Now, let’s look at the details behind this. SQL Server used the Incremental Servicing Model for a long time to deliver updates to the product. This model used consistent and predictable delivery of Cumulative Updates and Service Packs. A couple of years ago, we announced the Modern Servicing Model to increase the frequency and improve the quality of update delivery. This model relies heavily on Cumulative Updates as the primary mechanism to release product fixes and updates. The new model also makes it possible to introduce new capabilities and features in Cumulative Updates. As you are aware, every Cumulative Update comes with a Knowledge Base article. You can take a look at some of these articles in the update table available in our latest updates section of the docs.

Each one of these Cumulative Update articles has a well-defined and consistent format. It starts off with metadata about the update, followed by the mechanisms to acquire and install the update and a listing of all the fixes and changes in the update. On rare occasions, there might be a section that gives users a heads-up about any known problems encountered after applying the update. In this post, we will focus on the changes introduced to the listing of the fixes and changes in the update. Usually what you will find is a table that contains a list of fixes with bug numbers, a link to an article that describes each fix, and the area of the product to which the fix applies. Imagine a Cumulative Update with a payload of 50 fixes. What that means to a user reading the Cumulative Update article is 50 context switches in and out of that article (not including other links you might end up clicking and reading). We all agree that frequent context switching is not efficient – be it a computer processing code or a human brain reading things. So how do you optimize this experience and still maintain the details provided in the individual articles you click and read? Here comes the concept of “article inlining” to the rescue! Yes, we borrowed the same concept we use in SQL Server (e.g. scalar UDF inlining) and in computer programming in general (e.g. inline functions).

We will take this opportunity to explain the thought process that went into this change and the data points and decision points we used to drive it.

Justification for Using a Blurb

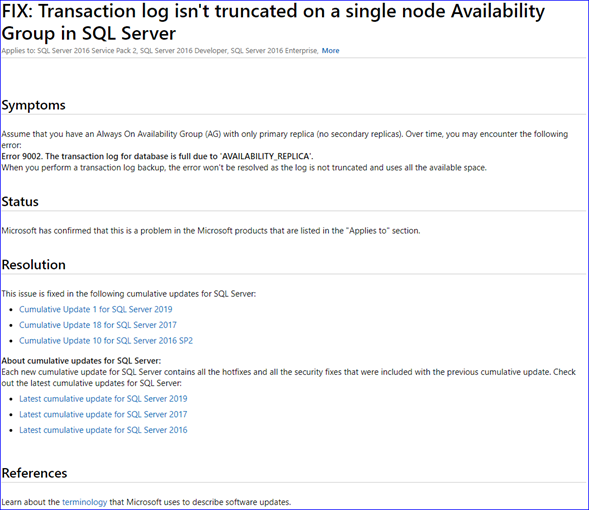

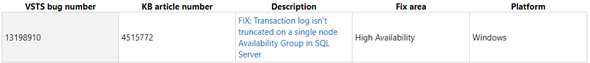

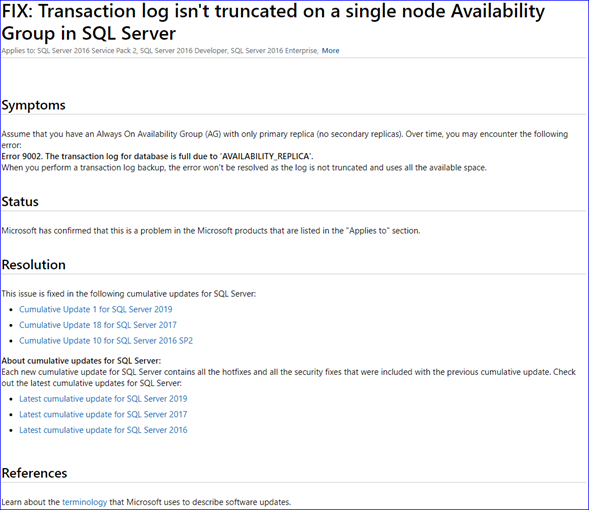

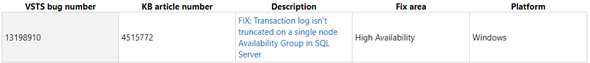

- The normal format used for the individual fix articles includes: Title, Symptoms, Status, Resolution, References. For example, let us look at the SQL Server 2019 CU1 article. The first entry in the fix list table is “FIX: Transaction log isn’t truncated on a single node Availability Group in SQL Server”.

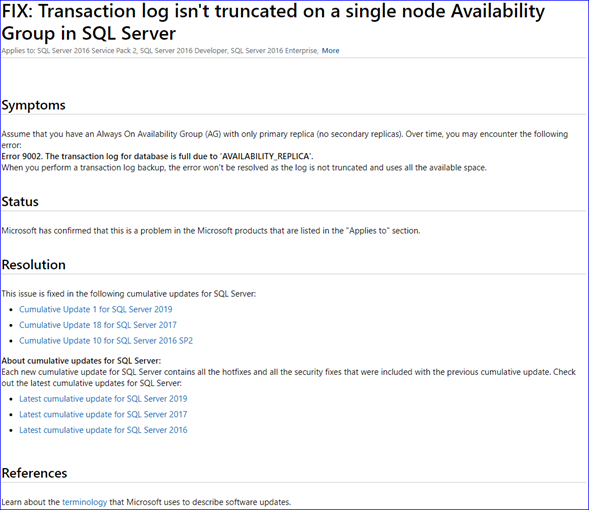

Let us click on the link and open this article to understand more details about this fix. Here is what we see:

The only useful information you get from this lengthy article is the symptoms section. Usually the title of the article provides a summary of the symptoms section. Now look at the other sections. Of course, we know this is a problem in the software; otherwise, why would we be fixing it? Isn’t the resolution here obvious: that you need to apply this cumulative update to fix this problem? If we moved the two lines from the symptoms section of this individual fix article to the main cumulative update article, you could avoid all these round trips and context switches.

You can go down the list of fix articles in that KB and you will find many articles that fit this pattern. Our content management team studied several past cumulative updates and found that the majority of the individual fix articles (about 85%) follow this pattern and are good candidates for “inlining” the symptom description in the main cumulative update article table.

Criteria for Creating a Full KB Article

In such scenarios, we carefully evaluate if we can perform inlining and still manage to represent this additional information in the main cumulative update article. These are good candidates to have an individual fix article.

We started piloting this approach around March 2020. The initial releases in which we tried this style were SQL Server 2016 SP2 CU12 and SQL Server 2019 CU3. Our friends and colleagues called it the “Attack of the blurbs!”. Some folks called it the “TL;DR version” of knowledge base articles. The reactions have been predominantly positive and encouraging. Of course, we will keep fine-tuning these approaches based on user feedback. As part of this change, we are also improving the content reviews done for the CU articles to ensure they contain relevant and actionable information for users.

One important callout we would like to make sure users understand is about the quality of these fixes. Irrespective of whether a fix has an individual article or an inline reference in the main cumulative update article, it goes through the same rigorous quality checks established for cumulative updates in general. In fact, the article writing and documentation process is the last step in the cumulative update release cycle. Only after a fix passes the quality gates and is included in the cumulative update does the documentation step happen. And we have already discussed how a fix gets described: in an individual article or in an inline blurb.

We hope this explains the changes you notice around the KB articles for Cumulative Updates.

From the support team: Joseph, Suresh, Ramu

by Scott Muniz | Aug 18, 2020 | Uncategorized

Got a problem that needs solving? WWBD, AKA “What would Bob do?” – a question I often ask myself. And often the answer lies in Bob’s blog, where this episode has its roots. We invite you to figure it out with Bob – Bob German, that is.

Bob German is a Cloud developer advocate at Microsoft and a friend of the community who consistently puts complex concepts into simple, meaningful terms. He is also a contributor of samples and ideas via GitHub / PnP. Using a few of his recent blog posts as a guide, we cover the evolution of SharePoint as a modern development platform, alongside the approach to branding and customizing SharePoint: developing once and deploying into both SharePoint and Microsoft Teams. You’ll gain clarity and numerous tips and tricks along the way.

Listen to podcast below.

Subscribe to The Intrazone podcast! And listen to episode 55 now + show links and more below.

Intrazone guest Bob German (Cloud developer advocate, focused on Teams and Microsoft Graph development – Microsoft).

Links to important on-demand recordings and articles mentioned in this episode:

- Articles and sites

- Bob German articles mentioned in this episode:

Subscribe today!

Listen to the show! If you like what you hear, we’d love for you to Subscribe, Rate and Review it on iTunes or wherever you get your podcasts.

Be sure to visit our show page to hear all the episodes, access the show notes, and get bonus content. And stay connected to the SharePoint community blog, where we’ll share more information per episode, guest insights, and take any questions from our listeners and SharePoint users (TheIntrazone@microsoft.com). We also welcome your ideas for future episode topics and segments. Keep the discussion going in the comments below; we’re here to listen and grow.

Thanks for listening!

The SharePoint team wants you to unleash your creativity and productivity. And we will do this, together, one ‘Bob advice nugget’ at a time.

The Intrazone links

+ Listen to other Microsoft podcasts at aka.ms/microsoft/podcasts.

![Mark Kashman_0-1585068611977.jpeg Left to right [The Intrazone co-hosts]: Chris McNulty, senior product manager (SharePoint, #ProjectCortex – Microsoft) and Mark Kashman, senior product manager (SharePoint – Microsoft).](https://www.drware.com/wp-content/uploads/2020/08/large-758) Left to right [The Intrazone co-hosts]: Chris McNulty, senior product manager (SharePoint, #ProjectCortex – Microsoft) and Mark Kashman, senior product manager (SharePoint – Microsoft).

The Intrazone, a show about the SharePoint intelligent intranet (aka.ms/TheIntrazone)

by Scott Muniz | Aug 18, 2020 | Uncategorized

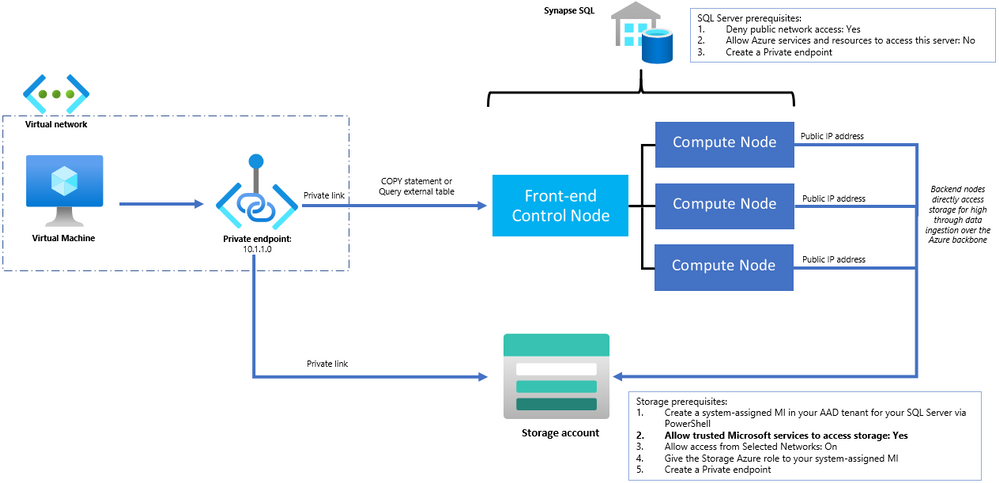

Azure Synapse Analytics supports Private Link, enabling you to securely connect to SQL pools via a private endpoint. This quick how-to guide provides a high-level overview and walks you through how to set up Private Link when you’re using the COPY statement for high-throughput data ingestion. Using the COPY statement is a best practice for data loading because it is simple, flexible, and fast.

The following diagram illustrates a simple set-up and the interactions happening across various components when Private Link is enabled for a SQL pool with a single VM within a VNet accessing the SQL endpoint:

The following settings are required on your SQL Server when securing your SQL pool:

- Deny public network access: Yes

- Allow Azure services and resources to access this server: No

- Create a Private endpoint

These steps can all be easily done in the Azure portal. After you configure your SQL Server, the SQL pool can be accessed only via the private endpoint in your VNet.

The following settings are required on your storage account that you are loading from:

- Allow access from Selected Networks: On

- Create a Private endpoint

- Create a system-assigned MI in your AAD tenant for your SQL Server via PowerShell

- Give the required Storage Azure role (Storage Blob Data Reader or higher) to your system-assigned MI

- Allow trusted Microsoft services to access storage: Yes

- This configuration allows the SQL pool backend compute nodes to bypass the storage network configurations using the system-assigned MI. The COPY statement can then access the storage account directly for high-throughput data ingestion over the Azure backbone.
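With the settings above in place, the load itself is a COPY statement that authenticates with the server's managed identity. The following is a minimal sketch that only builds the T-SQL text. The `build_copy_statement` helper, the table name, and the storage URL are hypothetical placeholders, and the statement would still need to be run against the SQL pool from a client inside the VNet.

```python
ALLOWED_FILE_TYPES = {"CSV", "PARQUET", "ORC"}


def build_copy_statement(table: str, source_url: str, file_type: str = "CSV") -> str:
    """Build a COPY INTO statement that uses the managed identity credential.

    `table` is inserted as-is, so pass only a trusted, already-quoted name.
    """
    normalized_type = file_type.upper()
    if normalized_type not in ALLOWED_FILE_TYPES:
        raise ValueError(f"Unsupported file type: {file_type!r}")
    # Escape single quotes so the URL stays a valid T-SQL string literal.
    safe_url = source_url.replace("'", "''")
    return (
        f"COPY INTO {table}\n"
        f"FROM '{safe_url}'\n"
        "WITH (\n"
        f"    FILE_TYPE = '{normalized_type}',\n"
        "    CREDENTIAL = (IDENTITY = 'Managed Identity')\n"
        ");"
    )
```

For example, `build_copy_statement("dbo.Trips", "https://<account>.blob.core.windows.net/<container>/trips/")` produces a statement that relies on the Storage Blob Data Reader role granted to the managed identity rather than on a storage key or SAS token.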

For more details on setting up your storage account for COPY access, you can visit the following documentation. You can visit the following links to learn how Azure Synapse provides secure network access for your analytics platform:
