by Contributed | Sep 30, 2020 | Uncategorized

This article is contributed. See the original author and article here.

Dear IT Pros,

I would like to continue with Part 2 of Windows Defender ATP operations, covering the tasks handled by ATP operators and ATP administrators.

_________________________________________________

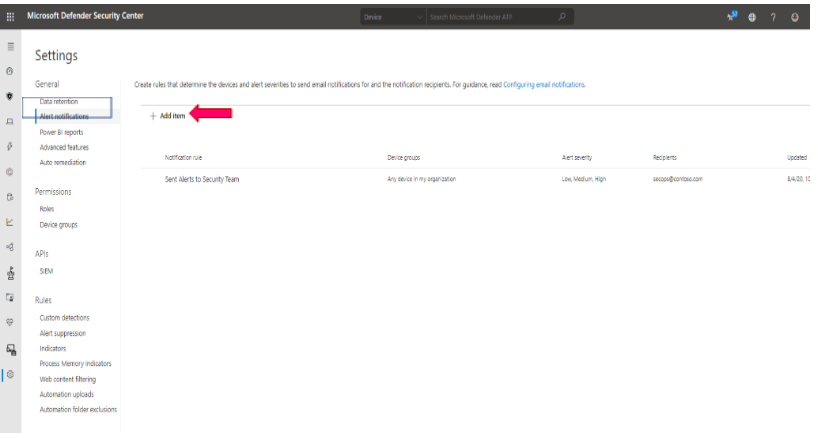

Creating Alert Notification

Alert notification settings are configured to send alert email messages to the security team and other teams.

To setup Alert Notification:

-

In ATP Portal, go to SettingsGeneralAlert notification

-

Add Item

-

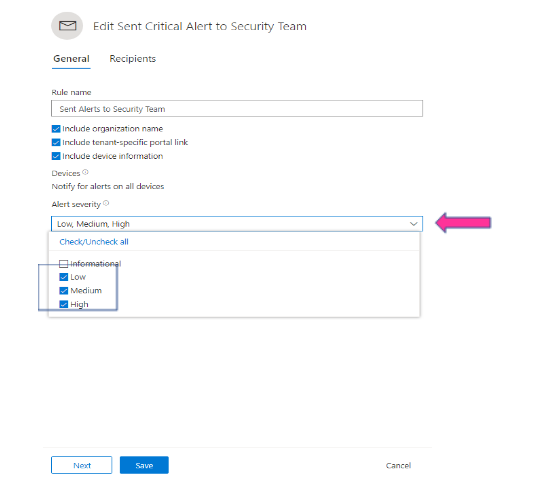

Enter Rule name, eg: Sent High Severity Alert to Secops Team

-

Choose options: include organization name, include tenant-specific portal link, include device information

-

Choose alert severity: High, Medium, Low

-

Next,

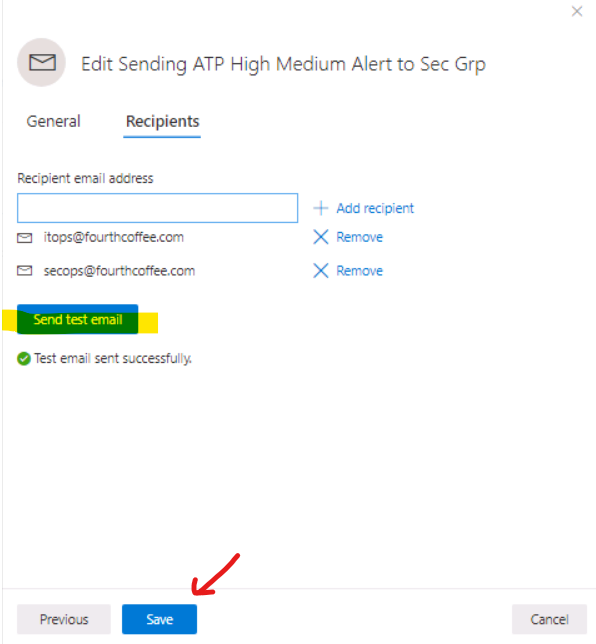

- Enter Group’s email address

- Send test email and Save

Live Response to remote device

Live response gives you remote access to a target device through a remote shell connection. It enables security admins to run commands and scripts, collect forensic data, send suspicious entities for analysis, remediate threats, and proactively hunt for emerging threats on the remote device.

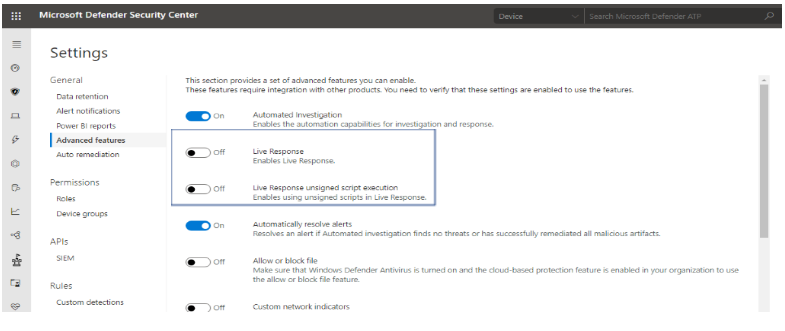

To enable Live Response for ATP devices:

- In the ATP portal, go to Settings > General > Advanced features.

- Turn on Live Response.

- (Optional) Turn on Live Response unsigned script execution.

- Save preferences.

To Run Live Response Remote Access to Device:

- Client Prerequisite:

-

Windows 10 version 1909 or later.

-

For other Windows 10 versions: Make sure to install appropriate updates (live response feature included in these updates).

Windows 10-1903: KB4515384

Windows 10-1809 (RS5): KB4537818

Windows 10-1803 (RS4): KB4537795

Windows 10-1709 (RS3): KB4537816

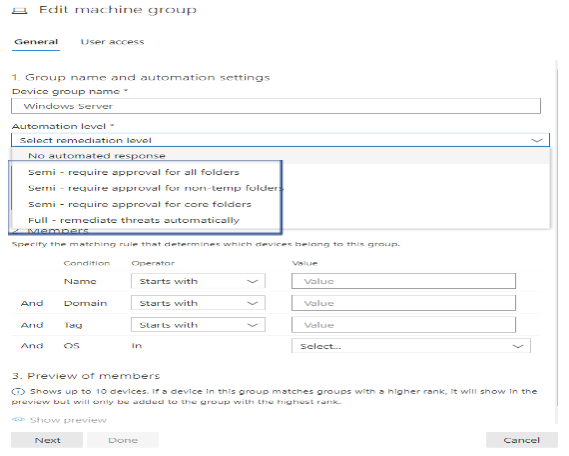

- The Target Machine is member of a Device Group with Semi or Full Remediation of Automation Level as shown :

For Dynamic Device Group, please refer to “ATP Daily Operation – Part 1″ for more detail.

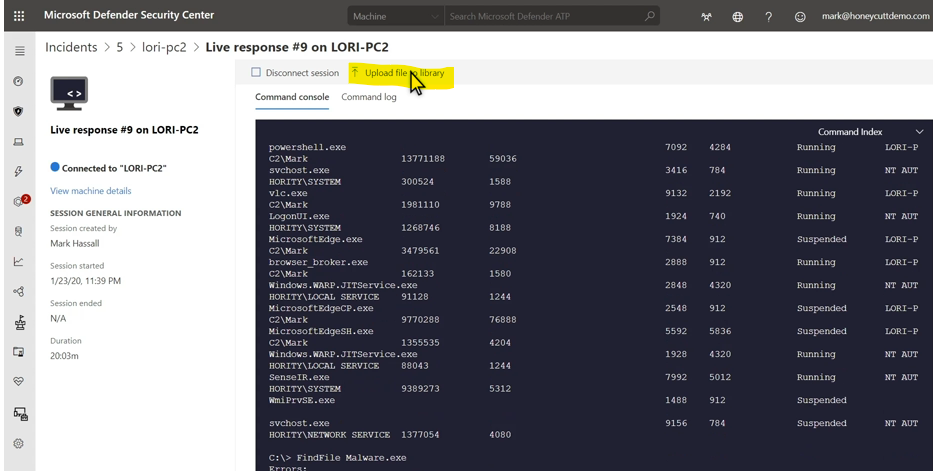
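The Windows 10 prerequisites above can be sketched as a small lookup. This is only an illustration: the function name `live_response_requirement` and the returned wording are hypothetical, and the table contains nothing beyond the version and KB pairs listed in this article.

```python
# Hypothetical helper: the version -> KB pairs are copied from the
# prerequisite list in this article; nothing else is assumed.
REQUIRED_KB = {
    "1903": "KB4515384",
    "1809": "KB4537818",
    "1803": "KB4537795",
    "1709": "KB4537816",
}


def live_response_requirement(version: str) -> str:
    """Describe what a four-digit Windows 10 version needs for live response."""
    if not (version.isdigit() and len(version) == 4):
        raise ValueError(f"expected a four-digit version such as '1909', got {version!r}")
    if int(version) >= 1909:
        # Version 1909 or later: no extra update is listed as required.
        return "supported without an extra update"
    kb = REQUIRED_KB.get(version)
    if kb is None:
        # Older versions that the article does not mention.
        return "not listed in the prerequisites"
    return f"install {kb}"


if __name__ == "__main__":
    for v in ("1909", "1903", "1809", "1607"):
        print(v, "->", live_response_requirement(v))
```

A lookup like this could be combined with an inventory export to flag devices that still need an update before a live response session can be started.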

To run a command or script in a live response session:

In a live response session, you can run any of the commands in the following table:

| Command | Description |
| --- | --- |
| cd | Changes the current directory. |
| cls | Clears the console screen. |
| connect | Initiates a live response session to the device. |
| connections | Shows all the active connections. |
| dir | Shows a list of files and subdirectories in a directory. |
| download <file_path> & | Downloads a file in the background. |
| drivers | Shows all drivers installed on the device. |
| fg <command ID> | Returns a file download to the foreground. |
| fileinfo | Get information about a file. (10GB max size limit) |
| findfile | Locates files by a given name on the device. |
| help | Provides help information for live response commands. |
| persistence | Shows all known persistence methods on the device. |
| processes | Shows all processes running on the device. |
| registry | Shows registry values. |
| scheduledtasks | Shows all scheduled tasks on the device. |
| services | Shows all services on the device. |
| trace | Sets the terminal’s logging mode to debug. |

Advanced commands

The following advanced commands are available to user roles that have been granted the ability to run advanced live response commands, such as the ATP Administrator role:

| Command | Description |
| --- | --- |
| analyze | Analyses the entity with various incrimination engines to reach a verdict. |
| getfile | Gets a file from the device. (3GB max size limit) NOTE: This command has a prerequisite command. You can use the -auto command in conjunction with getfile to automatically run the prerequisite command. |
| run | Runs a PowerShell script from the library on the device. |
| library | Lists files that were uploaded to the live response library. (250MB max size limit) |
| putfile | Puts a file from the library to the device. Files are saved in a working folder and are deleted when the device restarts by default. |
| remediate | Remediates an entity on the device. The remediation action will vary depending on the entity type. File: delete; Process: stop, delete image file; Service: stop, delete image file; Registry entry: delete; Scheduled task: remove; Startup folder item: delete file. NOTE: This command has a prerequisite command. You can use the -auto command in conjunction with remediate to automatically run the prerequisite command. |
| undo | Restores an entity that was remediated. |

To run a PowerShell script in live response:

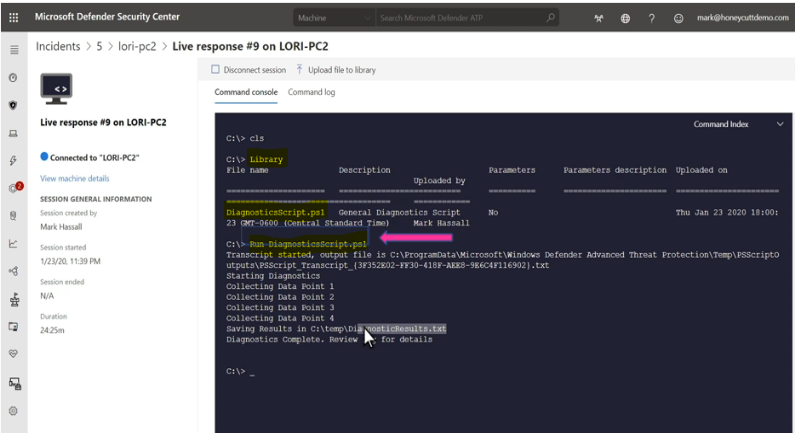

The library stores files (such as scripts) that can be run in a live response session at the tenant level. PowerShell scripts must first be placed in the library before you can run them.

Upload the script file to the library and run the script:

- Click Upload file to library.

- Click Browse and select the file.

- Provide a brief description.

- Specify whether you’d like to overwrite a file with the same name.

- If you’d like to document which parameters the script needs, select the script parameters check box. In the text field, enter an example and a description.

- (Optional) To verify that the file was uploaded to the library, run the library command.

- Run the script with the command: run scriptname.ps1

Cancel a command

Anytime during a session, you can cancel a command by pressing CTRL + C.

Using this shortcut will not stop the command on the agent side. It only cancels the command in the portal.

Automatically run prerequisite commands

Some commands have prerequisite commands that must be run first. If you don’t run the prerequisite command, you will get an error. For example, running the download command without fileinfo will return an error.

You can use the -auto flag to automatically run prerequisite commands, for example:

getfile c:\Users\user\Desktop\work.txt -auto

Apply command parameters

When using commands that have prerequisite commands, you can use flags:

<command name> -type file -id <file path> -auto

Supported output types

Live response supports table and JSON format output types. For each command, there’s a default output behavior. You can change the output to your preferred format using the following flags:

- -output json

- -output table

Note

Fewer fields are shown in table format due to the limited space. To see more details in the output, you can use the JSON output command so that more details are shown.
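The flags described in the last few sections (-type, -id, -auto, -output) can be assembled programmatically, for example when preparing a runbook of commands to paste into a session. The following is a minimal sketch: `build_command` and `quote_if_needed` are hypothetical helper names, and the order in which the flags are emitted is an assumption based on the template shown above, not a documented requirement.

```python
from typing import Optional

# Output formats named in this article.
ALLOWED_OUTPUTS = ("json", "table")


def quote_if_needed(value: str) -> str:
    """Wrap a value in straight double quotes when it contains whitespace."""
    if any(ch.isspace() for ch in value) and not value.startswith('"'):
        return f'"{value}"'
    return value


def build_command(
    name: str,
    entity_type: Optional[str] = None,
    entity_id: Optional[str] = None,
    auto: bool = False,
    output: Optional[str] = None,
) -> str:
    """Build a command line of the form '<name> -type <t> -id <id> -auto -output <fmt>'.

    Flag order is an assumption; only flags mentioned in the article are used.
    """
    parts = [name]
    if entity_type:
        parts += ["-type", entity_type]
    if entity_id:
        parts += ["-id", quote_if_needed(entity_id)]
    if auto:
        parts.append("-auto")
    if output:
        if output not in ALLOWED_OUTPUTS:
            raise ValueError(f"output must be one of {ALLOWED_OUTPUTS}")
        parts += ["-output", output]
    return " ".join(parts)


if __name__ == "__main__":
    print(build_command("remediate", "file", r"C:\Temp\FreeVideo.exe", auto=True))
    print(build_command("processes", output="json"))
```

Using straight double quotes for paths that contain spaces avoids the typographic quotes that word processors tend to insert, which a shell would not treat as quoting characters.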

View the command log

Select the Command log tab to see the commands used on the device during a session. Each command is tracked with full details: ID, command line, duration, status, and an input or output side bar.

Examples:

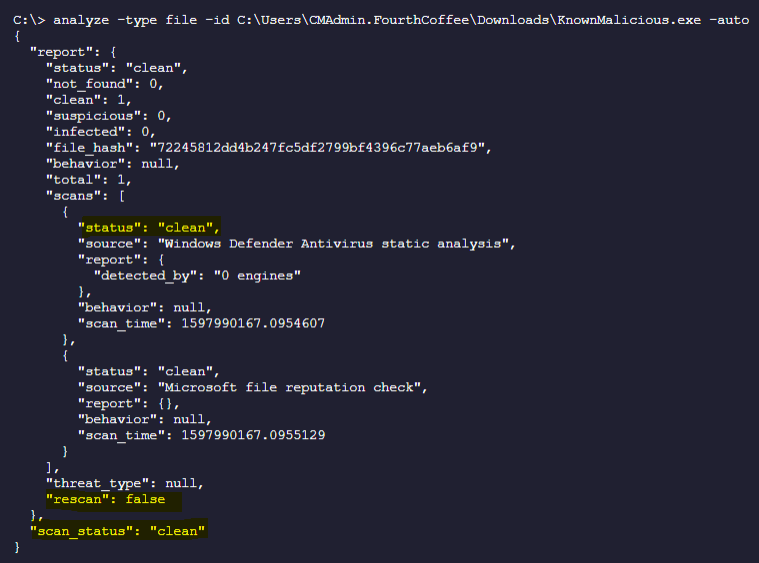

analyze -type file -id C:UsersCMAdmin.FourthCoffeeDownloadsKnownMalicious.exe –auto

-

Analyze File in remote machine and Auto Download to local Workstation in the “Downloads” Folder:

analyze -type file -id C:UsersCMAdmin.FourthCoffeeDownloadsKnownMalicious.exe -auto > AnalyzedKnownMalicious.txt

-

Remediating a file (delete file)

C:>remediate -type file -id C:UsersCMAdmin.FourthCoffeeDownloadsFreeVideo.exe –auto

or

C:>remediate file C:UsersCMAdmin.FourthCoffeeDownloadsFreeVideo.exe –auto

-

To download file from the remote target device to your local workstation

C:getfile “C:UsersCMAdmin.FourthCoffeeDownloadsFreeVideo.exe” -auto

or

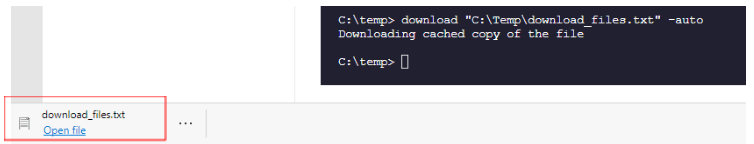

C:> download “C:UsersCMAdmin.FourthCoffeeDownloadsFreeVideo.exe” -auto

-

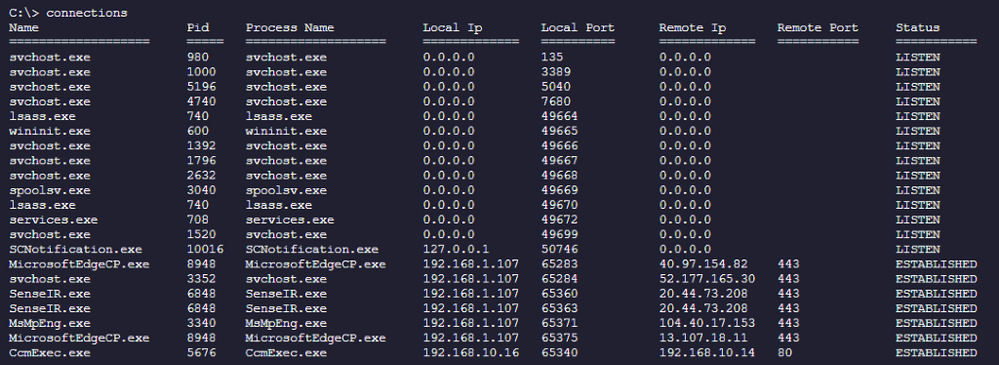

To list on connection of the remote target device

C:> connections

-

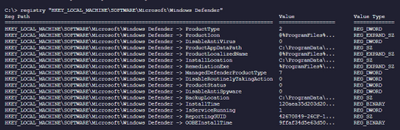

To list the registry key and value of the remote target device

C:> registry “HKEY_LOCAL_MACHINESOFTWAREMicrosoftWindows Defender”

-

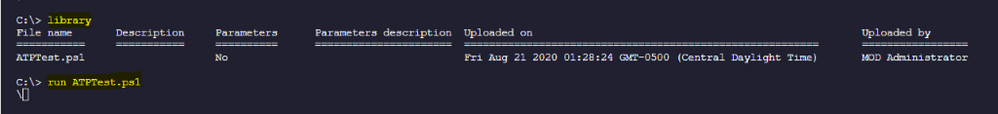

Creating a test script, upload to Library and run script:

Example: creating ATPTest.ps1 with the following content:

Dir c:usersCMAdmin.Contoso.comdownloads > C:tempdowload_files.txt

Upload script named “ATPTest.ps1” to Library and run the script

Download result of run content to your local workstation under “downloads” folder

Download “C:Tempdownload_files.txt” -auto

I hope this information is useful for your daily ATP operations and monitoring.

Cheers!

References:

Live Response Investigation:

https://docs.microsoft.com/en-us/windows/security/threat-protection/microsoft-defender-atp/live-response

https://docs.microsoft.com/en-us/windows/security/threat-protection/microsoft-defender-atp/live-response-command-examples

Video about Live Response

https://www.bing.com/videos/search?q=microsoft+live+response+advanced+threat+protection+video&docid=608005478874219990&mid=593DC3A568771CBCEF01593DC3A568771CBCEF01&view=detail&FORM=VIRE

__________________________

Disclaimer

The sample scripts are not supported under any Microsoft standard support program or service. The sample scripts are provided AS IS without warranty of any kind. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall Microsoft, its authors, or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the sample scripts or documentation, even if Microsoft has been advised of the possibility of such damages.

by Contributed | Sep 30, 2020 | Uncategorized


Happy #OpenSourceOctober everyone!

Do you remember your first encounter with an open source project? Some of the most popular developer tools, frameworks, and experiences in the world are built around open communities. Most coders can recall the first time they ran into an open source project on GitHub, SourceForge, CodePlex, Google Code, or just downloaded one from someone’s blog and asked themselves “what is this open source thing, anyway?”. While consuming, contributing to, and talking about open source feels normal today, the term “open source” was only coined in 1998, yet it has made an immense impact over the last 22 years!

So, now it’s time to test your open source project knowledge! How many of these did you already know about? Share your ‘score’ in the comments below!

MVVM Light Toolkit

One of the first open source breakouts was Laurent Bugnion’s MVVM Light Toolkit. Laurent was a Microsoft MVP at the time and is currently a Senior Cloud Advocate for Microsoft. For enterprise developers especially, it was a shock in 2009 to find someone creating a great software package and not charging for it. But it clearly also wasn’t freeware because it didn’t force you to watch ads or download potential malware ;). It also happened to be the best tool for data binding and lightweight messaging in XAML apps, and everybody was using it. Free, useful software with no strings attached changed how many of us understood enterprise development from that point forward.

Visual Studio Code

Open source isn’t just about free distribution; it’s also about having a decentralized developer base. The current GitHub project with the most contributors (19.1K according to Octoverse) is VS Code, Microsoft’s super extensible code editor. Since 2015, developers around the world have been continuously adding new features, visual schemes, and language integrations.

WriteableBitmapEx

WriteableBitmapEx was a CodePlex project that took the .NET WriteableBitmap class and made it better by adding tons of helpers as .NET extension methods. All this code effectively turned a basic component of most platforms, the common bitmap, into a powerful drawing surface to brighten and animate the battleship-gray user interfaces of the time.

Blender

Instead of trying to improve something already out there, Blender is a great open source example of looking at something already dominant in the marketplace and trying to replace it. Blender is a free 3D modeling tool that competes with the best of the best. The proof of its success lies in just how many creative professionals are now using it in their daily workflows. In order to support this important free tool for the creation of 3D models for AI research, Microsoft along with many other software companies contribute to the Blender Development Fund.

DotNetNuke

Going a little further back in time, DotNetNuke (DNN) began its life as a 2001 Microsoft reference application, IBuySpy (familiar to anyone who might remember what Northwind and AdventureWorks were). Shaun Walker added features to the reference application and released it as an open source project. It was an early .NET-based content management system best known for its extensibility and quickly formed a community of developers who built and sold plugin solutions for it. Shawn Wildermuth recently did an amazing interview with Shaun Walker about what it’s like to maintain a project for 14 years!

OpenVR

The purpose of OpenVR is to emphasize the “open” part of the open source movement. It provides an API that developers can program against while ignoring the actual hardware that runs it. This means the underlying hardware (HTC Vive) can change without directly affecting your app. It also means your app can potentially run on other hardware devices (like Oculus, Windows Mixed Reality, etc.) without major changes. It’s also the bridge technology that allowed Windows Mixed Reality headsets to automatically play the large catalog of Steam VR games when WMR was first released.

TypeScript

TypeScript came out in 2012, after two years of internal development at Microsoft, as a way to formalize the way JavaScript is written without breaking compatibility with it. TypeScript is probably best known for introducing static typing to web programming, but it also extended JavaScript with other programming features like classes, interfaces, and generics.

JSON.NET

At some point in software history, developers migrated from using XML to JSON as the ubiquitous, cross-platform serialization format. It turns out, however, that a cross-platform format still needs translation software to talk to specific platforms. In the .NET world, James Newton-King’s JSON.NET fills that role. We all use it. What more is there to say?

xUnit

2007 was a watershed year for formal software processes and paradigm inversions. We also learned that we needed tools to test first, mock up our classes, and implement continuous integration. For many software architects, xUnit was the way to go and we’re still using it 13 years later. Shawn Wildermuth did a great interview with Brad Wilson on maintaining the xUnit repo over such a long period of time.

OpenCV

OpenCV is everything you want an open source project to be. OpenCV is a computer vision library that uses machine learning techniques to analyze images. It has applications that run from interpreting handwritten letters to segmenting, classifying, and identifying objects in images. Intel started sharing their magic in 2001 with the release of the first OpenCV beta. The library has since been used by developers for many projects including many early Microsoft Kinect applications. It is the basis for almost all smart phone and tablet computer vision apps including modern UWP apps and provides the foundation for machine learning on Microsoft Azure as well as all the other major AI services providers. The moment in 2001 when OpenCV became open source marks the start of the current AI revolution.

And that’s a wrap! Hopefully you enjoyed this trip down memory lane and we want to know what you learned! If you already knew about all of them, make sure to share your personal favorite open source project in the comments below or on Twitter using the #OpenSourceOctober hashtag.

If you are interested in other open source projects and initiatives that Microsoft is investing in, please check out Microsoft’s Open Source page!

by Contributed | Sep 30, 2020 | Azure, Technology, Uncategorized


Initial Update: Thursday, 01 October 2020 01:47 UTC

We are aware of issues within Log Analytics and are actively investigating. Some customers in the West Central US and Australia Southeast regions may experience data latency in AzureDiagnostics and AzureMetrics, which may cause alerts to misfire.

- Workaround: None

- Next Update: Before 10/01 04:00 UTC

We are working hard to resolve this issue and apologize for any inconvenience.

-Vincent

by Contributed | Sep 30, 2020 | Uncategorized


Hi everyone!

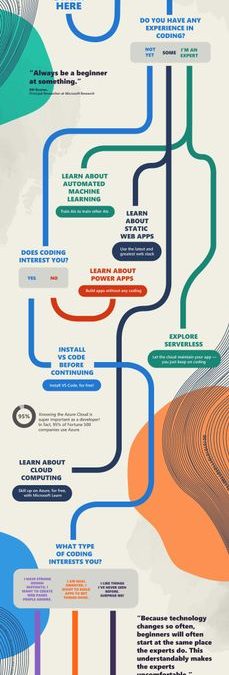

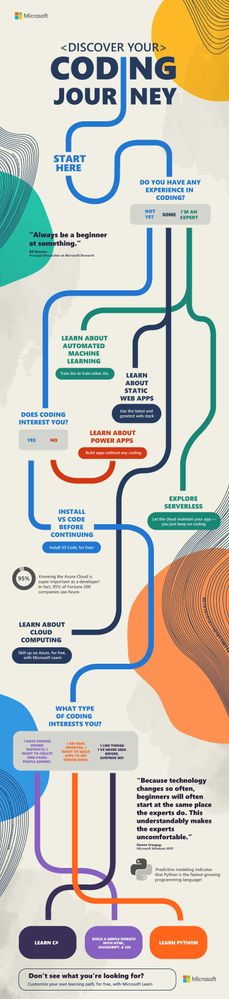

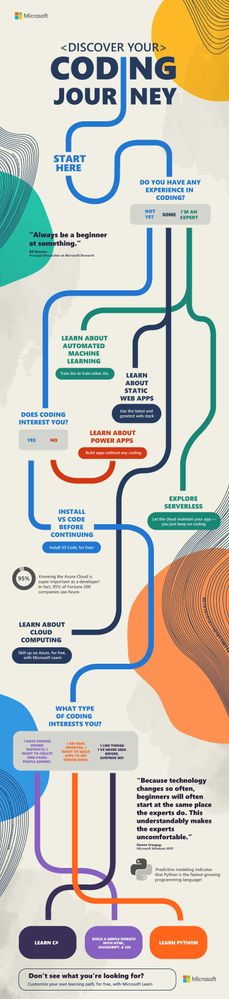

Thanks for joining us for #SkillUpSeptember. We can’t wait to hear what you dove into and what you learned. Just because the month has come to a close, doesn’t mean that your learning stops here. So, we made a fun ‘choose your coding adventure’ infographic so you can easily figure out what you want to learn about next! It will lead you to additional learning resources on topics such as building apps without coding, exploring serverless, creating a simple website, and so much more!

Once you decide what you want to become an expert on next, make sure to check out all of the amazing free tutorials, videos, and online coding environments available on Microsoft Learn and Microsoft Docs.

Discover your coding journey infographic


You can download the full resolution infographic (.pdf) below.

Please post what you learned this month in the comments below or share on Twitter using the #SkillUpSeptember hashtag!

Download the infographic here

by Contributed | Sep 30, 2020 | Uncategorized


Today, I worked on a service request in which our customer has an internal process that, every night, transfers data from one table (iot_table1) to another (iot_table2) based on several filters. Because the number of rows in the source table grows every day, this process consumes all of the transaction log resources due to the amount of data it transfers.

In this type of situation, our best recommendation is to use Business Critical or Premium, because their IO capacity is greater than that of General Purpose or Standard. However, our customer wants to find an alternative that lets them stay on Standard/General Purpose, without moving to Premium/Business Critical, in order to reduce cost.

Let’s assume that our customer has two tables: IOT_Table1 (source) and IOT_Table2 (destination).

CREATE TABLE [dbo].[iot_table1](

[id] [int] NOT NULL,

[text] [nchar](10) NULL,

[Date] [datetime] NULL,

CONSTRAINT [PK_iot_table1] PRIMARY KEY CLUSTERED

(

[id] ASC

)WITH (STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF) ON [PRIMARY]

) ON [PRIMARY]

GO

CREATE TABLE [dbo].[iot_table2](

[id] [int] NOT NULL,

[text] [nchar](10) NULL,

CONSTRAINT [PK_iot_table2] PRIMARY KEY CLUSTERED

(

[id] ASC

)WITH (STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF) ON [PRIMARY]

) ON [PRIMARY]

GO

We suggested different workarounds to avoid running this nightly bulk process, for example, an incremental process using Azure Data Factory, or the following alternatives using the SQL Server engine:

- Alternative 1) Create a trigger so that every row inserted into iot_table1 is also transferred to iot_table2. Here is an example:

CREATE TRIGGER [dbo].[inserted]

ON [dbo].[iot_table1]

AFTER INSERT

AS

BEGIN

SET NOCOUNT ON;

INSERT INTO IOT_table2(ID,[TEXT]) SELECT ID,[TEXT] from inserted

END
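To see the effect of Alternative 1 without an Azure SQL database at hand, the idea can be reproduced locally. The sketch below is an illustration only and swaps in SQLite (through Python's built-in `sqlite3` module) for SQL Server. SQLite triggers fire once per row and use `NEW.column`, whereas the T-SQL trigger above fires once per statement and reads the `inserted` pseudo-table. The trigger name `copy_to_table2` and the sample rows are hypothetical.

```python
import sqlite3


def demo_trigger_copy():
    """Insert rows into iot_table1 and return what the trigger copied to iot_table2."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE iot_table1 (id INTEGER PRIMARY KEY, "text" TEXT, "date" TEXT);
        CREATE TABLE iot_table2 (id INTEGER PRIMARY KEY, "text" TEXT);

        -- SQLite stand-in for the AFTER INSERT trigger shown above.
        CREATE TRIGGER copy_to_table2 AFTER INSERT ON iot_table1
        BEGIN
            INSERT INTO iot_table2 (id, "text") VALUES (NEW.id, NEW."text");
        END;
        """
    )
    # Sample rows (hypothetical data) inserted only into the source table.
    conn.executemany(
        'INSERT INTO iot_table1 (id, "text", "date") VALUES (?, ?, ?)',
        [(1, "a", "2020-09-29"), (2, "b", "2020-09-29")],
    )
    conn.commit()
    rows = conn.execute('SELECT id, "text" FROM iot_table2 ORDER BY id').fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    print(demo_trigger_copy())
```

Because each insert carries its own small copy, the work is spread across the day instead of being concentrated in one large nightly transaction, which is the point of this alternative.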

- Alternative 2) Reduce the number of rows to be transferred, for example, by creating a table per day.

CREATE TRIGGER [dbo].[inserted]

ON [dbo].[iot_table1]

AFTER INSERT

AS

BEGIN

DECLARE @DAY AS VARCHAR(2)

DECLARE @MONTH AS VARCHAR(2)

DECLARE @YEAR AS VARCHAR(4)

SET NOCOUNT ON;

SET @DAY = CONVERT(VARCHAR(2),DAY(GETDATE()))

SET @MONTH = CONVERT(VARCHAR(2),MONTH(GETDATE()))

SET @YEAR = CONVERT(VARCHAR(4),YEAR(GETDATE()))

IF @MONTH=9 AND @DAY =25 AND @YEAR=2020

INSERT INTO IOT_table2_2020_09_25(ID,[TEXT]) SELECT ID,[TEXT] from inserted

IF @MONTH=9 AND @DAY =26 AND @YEAR=2020

INSERT INTO IOT_table2_2020_09_26(ID,[TEXT]) SELECT ID,[TEXT] from inserted

IF @MONTH=9 AND @DAY =27 AND @YEAR=2020

INSERT INTO IOT_table2_2020_09_27(ID,[TEXT]) SELECT ID,[TEXT] from inserted

IF @MONTH=9 AND @DAY =28 AND @YEAR=2020

INSERT INTO IOT_table2_2020_09_28(ID,[TEXT]) SELECT ID,[TEXT] from inserted

IF @MONTH=9 AND @DAY =29 AND @YEAR=2020

INSERT INTO IOT_table2_2020_09_29(ID,[TEXT]) SELECT ID,[TEXT] from inserted

END
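The chain of IF statements above encodes a simple naming rule: the destination table is the prefix IOT_table2 followed by the year, month, and day. A short sketch of that rule is shown here in Python, purely to illustrate the mapping. The function names are hypothetical, and a T-SQL version would probably need dynamic SQL or a partitioned table, neither of which is covered in this article.

```python
from datetime import date
from typing import Optional


def daily_table_name(day: date, prefix: str = "IOT_table2") -> str:
    """Return the per-day table name, e.g. IOT_table2_2020_09_25."""
    return f"{prefix}_{day:%Y_%m_%d}"


def target_table_for_insert(today: Optional[date] = None) -> str:
    """Pick the destination table for rows inserted today.

    Mirrors the trigger above, which routes by the current date
    (GETDATE()) rather than by the row's own Date column.
    """
    return daily_table_name(today or date.today())


if __name__ == "__main__":
    print(daily_table_name(date(2020, 9, 25)))
    print(target_table_for_insert(date(2020, 9, 29)))
```

Deriving the name from the date avoids having to edit the trigger every time a new day's table is added, which the hard-coded version would require.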

- Alternative 3) Create an indexed view whose definition could be, for example:

CREATE OR ALTER VIEW Data_Per_Day_29_09_2020

WITH SCHEMABINDING

AS

SELECT ID, [TEXT] from dbo.iot_table1 where DAY([DATE])=29 and MONTH([DATE])=9 AND YEAR([DATE])=2020

GO

-- The unique clustered index is what materializes the view; it must run in its own batch.

CREATE UNIQUE CLUSTERED Index Data_Per_Day_29_09_2020_X1 ON Data_Per_Day_29_09_2020(ID)

- With an indexed view, the data is automatically stored as materialized data, so every row added to the table is reflected in the view according to the value of its DATE field. If you query the view with SELECT * FROM Data_Per_Day_29_09_2020 WITH (NOEXPAND), the result comes from the materialized data and is not retrieved from the base table. If you use SELECT * FROM Data_Per_Day_29_09_2020 without the hint, the data may be retrieved from the base table instead.

Enjoy!!
