by Contributed | Oct 29, 2020 | Technology

This article is contributed. See the original author and article here.

This is the final part of my blog series on performance metrics and tuning for ADF Data Flows. The complete set of slides is available to download. The previous two posts focused on tuning and performance for data flows with the Azure IR and with sources and sinks. In this post, I’ll focus on performance profiles for data flow transformations.

Schema Modifiers

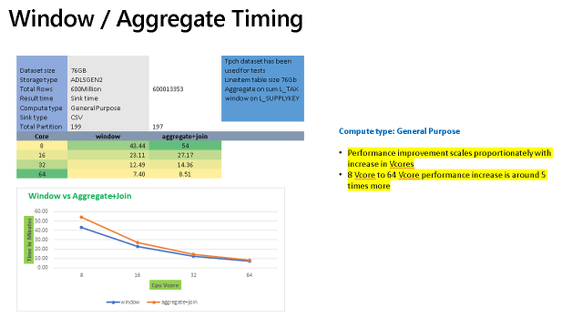

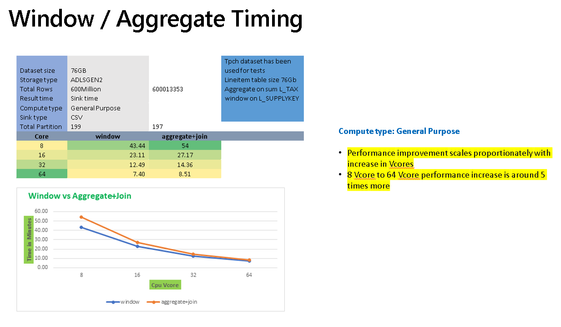

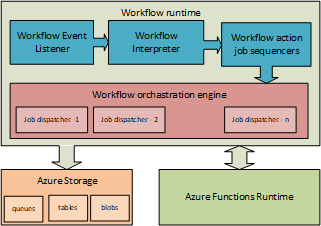

Some of the more “expensive” transformation operations in ADF are those that require large portions of your data to be grouped together. Schema modifiers like the Aggregate transformation and the Window transformation collect data into groups or windows for analytics and aggregations that require the merging of data.

The chart above shows sample timings when using the Aggregate transformation vs. the Window transformation. In both cases, you’ll see similar performance profiles that both gain processing speed as you add more cores to the Azure IR compute settings for data flows. They will also perform better using Memory Optimized or General Purpose options since the VMs for those Spark clusters will have a higher RAM-per-core ratio.

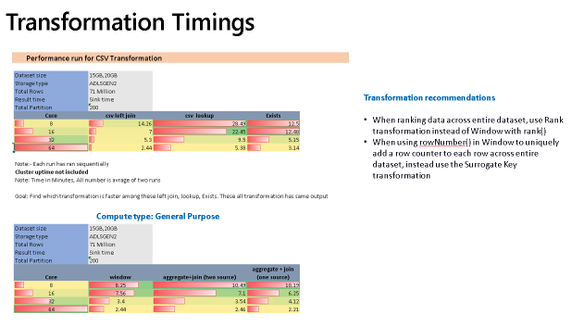

In many situations, you may just need to generate unique keys across all source data or rank an entire data set. If you are performing those common operations in Aggregate or Window with functions such as rowNumber(), rank(), or denseRank(), and you are applying them across the entire data set rather than within groups or windows, you will see better performance by using the Surrogate Key transformation for unique keys and row numbers, and the Rank transformation for rank or dense rank across the entire data set.

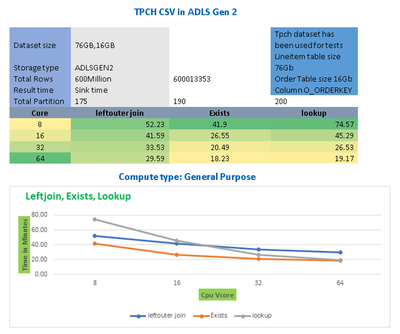

Multiple Inputs/Outputs

The chart above shows that Joins, Lookups, and Exists can all generally scale proportionally as you add more cores to your data flow compute IRs. In many cases, you will need to use transformations that require multiple inputs, such as Join, Exists, and Lookup. Lookup is essentially a left outer join with a number of additional features for choosing single-row or multiple-row outputs. Exists is similar to the SQL EXISTS operator: it looks for the existence, or non-existence, of values in another data stream. As the chart above shows, Lookup carries a bit more overhead than the other similar transformations in ADF Data Flows.
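To make the semantics described above concrete, here is a minimal sketch in plain Python. It is not ADF code and says nothing about how ADF or Spark implement these transformations; the function names `lookup_any_row` and `exists` and the sample rows are hypothetical, chosen only for illustration.

```python
from typing import Any

Row = dict[str, Any]


def lookup_any_row(left: list[Row], right: list[Row], key: str) -> list[Row]:
    """Left outer join that keeps a single matching right-hand row per left row."""
    # Index the right stream by key, keeping only one row per key value.
    index: dict[Any, Row] = {}
    for row in right:
        index.setdefault(row[key], row)
    # Every left row survives. Unmatched rows simply carry no right-hand
    # columns here (a real engine would emit NULLs for them).
    return [{**index.get(row[key], {}), **row} for row in left]


def exists(left: list[Row], right: list[Row], key: str, negate: bool = False) -> list[Row]:
    """Keep left rows whose key is present in (or, if negate, absent from) the right stream."""
    right_keys = {row[key] for row in right}
    return [row for row in left if (row[key] in right_keys) != negate]


orders = [{"id": 1, "cust": "a"}, {"id": 2, "cust": "b"}, {"id": 3, "cust": "z"}]
customers = [
    {"cust": "a", "name": "Ann"},
    {"cust": "a", "name": "Ann (duplicate)"},
    {"cust": "b", "name": "Bob"},
]

matched = lookup_any_row(orders, customers, "cust")
with_customer = exists(orders, customers, "cust")
without_customer = exists(orders, customers, "cust", negate=True)
```

In this sketch the lookup returns one output row per order even though customer "a" appears twice, while `exists` only filters the left stream and never adds columns.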

When deduping data, it is recommended to use Aggregate instead of Join. Use Lookup when you need to reduce left outer joins to a single operation, and choose “Any Row”, which is the fastest option.

Row Modifiers

Row modifiers like Filter, Alter Row, and Sort have very different performance profiles. Data flows execute on Spark big data compute clusters, so in most cases it is not recommended to use Sort: it can be an expensive compute operation that forces Spark to shuffle data and reduces the benefit of a distributed data environment.

Alter Row is used to simply tag rows for different database profiles (update, insert, upsert, delete) and Filter will reduce the number of rows coming out of the transformation. Both are very low-cost transformations.

by Contributed | Oct 29, 2020 | Technology

As our team assists customers in adopting Microsoft Cloud App Security, and continues to encourage customers to leverage the best of its capabilities, we often see that a number of our customers are not aware of how simple and beneficial it can be to connect their other apps (in addition to O365 and Azure) to Cloud App Security.

To help you in that journey, we’ve compiled a short series of videos to help you with key points of integration. To begin, we start with connecting to your favorite apps. Right after connecting these apps, without any additional configuration, you will start benefiting from the threat detection policies built into Cloud App Security. Any policy that you have created (that is not explicitly targeting an app) will also apply (in alert mode only at first, with no risk of accidental governance action).

Of course, much more can be done with the addition of a few simple policies, and we are preparing more blogs on how to protect each of these apps. We have detailed below the connections for GitHub, Salesforce and Box, but there are more to come. Let us know in the comments which app you would like to see next. Stay tuned!

GitHub app connection:

Box app connection:

Salesforce app connection:

We look forward to sharing more important details with you as the weeks progress.

- The Cloud App Security CxE team

by Contributed | Oct 29, 2020 | Technology

For the recently released Azure Logic Apps (Preview) extension for Visual Studio Code, the Logic Apps team redesigned the Azure Logic Apps runtime architecture for portability and performance. This new design lets you locally build and run logic apps in Visual Studio Code and then deploy to various hosting environments such as Logic Apps Preview in Azure and Docker containers.

The redesigned Logic Apps runtime uses Azure Functions extensibility and is hosted as an extension on the Azure Functions runtime, which means you can run logic apps anywhere that Azure Functions runs. You can host the Logic Apps runtime on almost any network topology that you want, and choose any available compute size to handle the workload that your workflow needs. Based on the resiliency that you want your app to have, you can also choose an Azure storage account type that best supports your app’s needs. For example, if you don’t need data replication for recovery or failover scenarios, you can use an LRS or ZRS storage account. If you need failover capability, you can use GRS or RA-GRS storage accounts instead. The new runtime also uses the Azure Functions request processing pipeline for request and webhook triggers as well as management APIs, which lets you use many of App Service’s HTTP capabilities, such as custom domains and private endpoints, when you deploy to Azure.

With this new approach, the Logic Apps runtime and your workflows become part of your app that you can package together. This capability lets you deploy and run your workflows by simply copying artifacts to the hosting environment and starting your app. This approach also provides a more standardized experience for building dev-ops pipelines around the workflow projects for running the required tests and validations before you deploy changes to production environments.

Azure Logic Apps on Azure Functions runtime

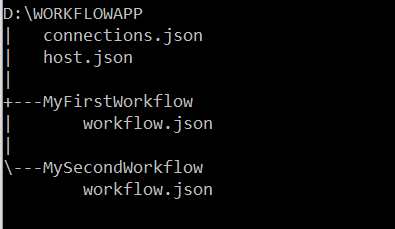

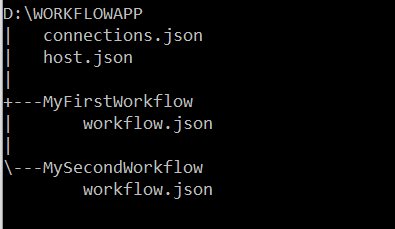

The artifacts for all the workflows in a specific workflow app are in a root project folder that contains a host configuration file, connection definition file, and one or more subfolders. Each subfolder contains the definition for a separate workflow. Here is a representation that shows the folder structure:

Workflow definition file

Each workflow in your project has a workflow.json file in its own folder. This file is the entry point for your logic app’s workflow definition, which describes the workflow by using a Logic Apps domain-specific language (DSL) that consists of these main parts:

- The trigger definition that describes the event that starts a workflow run.

- Action definitions that describe the subsequent sequence of steps that run when the trigger event happens.
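As a rough illustration of these two parts, the sketch below builds a small workflow definition as a Python dictionary and lists its triggers and actions. The field names (`definition`, `triggers`, `actions`, `runAfter`, `kind`) follow my understanding of the public Logic Apps workflow definition language and are assumptions here, not something this post specifies; the action names and the `summarize` helper are hypothetical.

```python
import json

# Illustrative only: a trimmed-down definition with one trigger and two actions.
# A real workflow.json carries more fields (schema URL, content version, etc.).
workflow = {
    "definition": {
        "triggers": {
            # The event that starts a workflow run.
            "manual": {"type": "Request", "kind": "Http"},
        },
        "actions": {
            # Steps that run after the trigger fires, ordered via runAfter.
            "Compose_greeting": {"type": "Compose", "inputs": "Hello", "runAfter": {}},
            "Response": {
                "type": "Response",
                "inputs": {"statusCode": 200},
                "runAfter": {"Compose_greeting": ["Succeeded"]},
            },
        },
    },
    "kind": "Stateful",
}


def summarize(workflow: dict) -> dict:
    """Return the names of the triggers and actions in a workflow definition."""
    definition = workflow["definition"]
    return {
        "triggers": sorted(definition["triggers"]),
        "actions": sorted(definition["actions"]),
    }


print(json.dumps(summarize(workflow), indent=2))
```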

During startup, the Azure Functions runtime loads the Logic Apps extension, which then enumerates all folders in your workflow project’s root folder and attempts to load any workflow definition (workflow.json) files before starting the Logic Apps runtime. When a workflow loads, the Logic Apps runtime performs the work that’s required to prepare for handling the events that the workflow trigger specifies, such as creating an HTTP endpoint that can receive inbound requests for a workflow that starts with the Request trigger, or creating an Azure Service Bus listener that receives Service Bus messages. If a workflow has syntactic or semantic errors, the workflow isn’t loaded, and the corresponding errors are output as error logs.
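The startup scan described above can be pictured with a small sketch. This is not the Logic Apps extension's code: `discover_workflows` is a hypothetical helper, and it only checks that each workflow.json parses as JSON, whereas the real runtime also validates the definition semantically.

```python
import json
from pathlib import Path


def discover_workflows(root: Path) -> tuple[dict[str, dict], dict[str, str]]:
    """Scan each subfolder of root for workflow.json; return (loaded, errors)."""
    loaded: dict[str, dict] = {}
    errors: dict[str, str] = {}
    for folder in sorted(p for p in root.iterdir() if p.is_dir()):
        definition_file = folder / "workflow.json"
        if not definition_file.is_file():
            continue  # not a workflow folder
        try:
            loaded[folder.name] = json.loads(definition_file.read_text(encoding="utf-8"))
        except json.JSONDecodeError as exc:
            # A broken definition is skipped and reported, not loaded.
            errors[folder.name] = str(exc)
    return loaded, errors
```

Calling `discover_workflows(Path("."))` from a project root would return the parsed definitions keyed by folder name, plus a message for every definition that failed to parse.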

Connection definition file

Connectors provide quick access from Logic Apps to events, data, and actions across other apps, services, systems, protocols, and platforms. By using connectors in your logic apps, you expand the capabilities for your cloud and on-premises apps to perform tasks with the data that you create and already have. Azure Logic Apps connectors are powered by the connector infrastructure that runs in Azure. A workflow running on the new runtime can use these connectors by creating a connection, an Azure resource that provides access to these connectors. The connection definition (connections.json) file is located at the root folder of your project and contains the required configurations such as endpoint details and app settings that describe the authentication details for communicating with the connector endpoints. The Logic Apps runtime uses this information when running these connector actions.

Another capability that the redesigned Logic Apps runtime introduces is the extensibility to add built-in connectors. These built-in connectors are hosted in the same process as the Logic Apps runtime and provide higher throughput, low latency, and local connectivity. The connection definition file also contains the required configuration information for connecting through these built-in connectors. The preview release comes with built-in connectors for Azure Service Bus, Azure Event Hubs, and SQL Server. The extensibility framework that these connectors are built on can be used to build custom connectors to any other service that you need.

Host configuration file

The host configuration (host.json) file contains runtime-specific configurations and is located in the root folder of the workflow app.

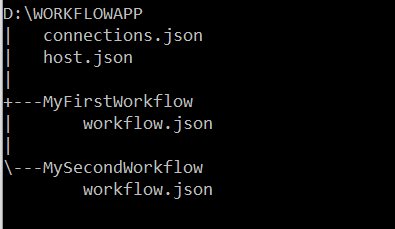

Workflow event handling

The Logic Apps runtime implements each action type in a workflow definition as a job that is run by the underlying Logic Apps job orchestration engine. For example, the HTTP action type is implemented as a job that evaluates the inputs to the HTTP action, sends the outbound request, handles the returned response, and records the result from the action.

Every workflow definition contains a sequence of actions that can be mapped to a directed acyclic graph (DAG) of jobs with various complexity. When a workflow is triggered, the Logic Apps runtime looks up the workflow definition and generates the corresponding jobs that are organized as a DAG, which Logic Apps calls a job-sequencer. Each workflow definition is compiled into a job-sequencer that orchestrates the running of jobs for that workflow definition. The job orchestration engine is a horizontally scalable distributed service that can run DAGs with large numbers of jobs.
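To show how a sequence of actions maps to a DAG of jobs, here is a minimal sketch that derives a valid run order from each action's dependencies. It assumes dependencies are expressed through a `runAfter` property on each action (my assumption about the definition format, not stated in this post), and it uses Python's standard `graphlib` module rather than anything resembling the actual job-sequencer. The action names are hypothetical.

```python
from graphlib import TopologicalSorter


def job_order(actions: dict[str, dict]) -> list[str]:
    """Return one valid execution order for actions linked by runAfter."""
    # Map each action to the set of actions it must wait for.
    graph = {name: set(action.get("runAfter", {})) for name, action in actions.items()}
    # static_order() yields predecessors before dependents and raises
    # graphlib.CycleError if the dependencies are not acyclic.
    return list(TopologicalSorter(graph).static_order())


actions = {
    "Parse": {"runAfter": {}},
    "Call_API": {"runAfter": {"Parse": ["Succeeded"]}},
    "Log": {"runAfter": {"Parse": ["Succeeded"]}},
    "Respond": {"runAfter": {"Call_API": ["Succeeded"], "Log": ["Succeeded"]}},
}

order = job_order(actions)
```

Here `Call_API` and `Log` depend only on `Parse`, so a distributed engine could run them in parallel; the sketch only produces one sequential order that respects the edges.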

For stateful workflows, the orchestration engine schedules the jobs in the sequencers by using Azure Storage queue messages. Behind the scenes, multiple dispatcher worker instances (job dispatchers), which can run on multiple compute nodes, monitor the Logic Apps job queues. By default, the orchestration engine is set up with a single message queue and a single partition on the configured storage account. However, the orchestration engine can also be configured with multiple job queues in multiple storage partitions. For stateless workflows, the orchestration engine keeps their states completely in memory.

A job can run one or multiple times. For example, an HTTP action job can finish after a single run, or it might have to run several times, such as when retrying after an “HTTP 500 Internal Server Error” response. After each run, the action job’s state is saved in storage as a checkpoint, which is where the workflow’s actions inherit their “at least once” execution semantics. The job’s checkpoint state includes the action’s result along with the action’s inputs and outputs. Smaller input and output values are stored inline as part of the job’s state in table storage, while larger input and output values are saved in blob storage.
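The run-then-checkpoint loop can be sketched as follows. This is an illustration of the general pattern only: `JobState`, `run_with_checkpoints`, the `max_runs` limit, and the rule that only 5xx responses are retried are all hypothetical choices, not the Logic Apps retry policy.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class JobState:
    attempts: int = 0
    status: str = "Pending"
    history: list[int] = field(default_factory=list)


def run_with_checkpoints(
    send: Callable[[], int],
    save: Callable[[JobState], None],
    max_runs: int = 4,
) -> JobState:
    """Run a job until it reaches a final status, checkpointing after every run."""
    state = JobState()
    while state.attempts < max_runs:
        code = send()  # one run of the job, returning an HTTP status code
        state.attempts += 1
        state.history.append(code)
        if code >= 500:
            state.status = "Retrying"
        else:
            state.status = "Succeeded" if code < 400 else "Failed"
        save(state)  # checkpoint the state after each run
        if state.status != "Retrying":
            return state
    state.status = "Failed"  # retries exhausted
    save(state)
    return state


responses = iter([500, 500, 200])
checkpoints: list[tuple[int, str]] = []
final = run_with_checkpoints(
    send=lambda: next(responses),
    save=lambda s: checkpoints.append((s.attempts, s.status)),
)
```

Because the checkpoint is written after the run, a crash between `send()` and `save()` would cause the same run to be repeated on recovery, which is the usual reason such designs give "at least once" rather than "exactly once" guarantees.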

You can change the lifetime for a sequencer up to a 1-year maximum limit. When a sequencer reaches the end of life without finishing, the orchestration engine cancels any remaining jobs from the sequencer.

Wrapping up

The redesigned Logic Apps runtime brings new capabilities that let you use most of your dev-ops toolbox to build and deploy logic apps, and to set up your logic apps so that they run in different compute and network configurations. The Logic Apps runtime also brings a new extensibility framework that provides more flexibility for writing custom code and building your own custom connectors.

This blog post is the first in a series that the team plans to provide so that you can get in-depth views into various aspects of the redesigned runtime. Stay tuned for more posts about logging, built-in connector extensibility, and performance benchmarking.

by Contributed | Oct 29, 2020 | Technology

Final Update: Thursday, 29 October 2020 22:48 UTC

Our logs indicate that the incident started on 10/29, 21:30 UTC, and that during the 1 hour it took to resolve the issue, no customers experienced an “internal server error” when trying to create a new workspace.

- Root Cause: The failure was due to a DNS limit being reached in the China region.

- Incident Timeline: 1 hour & 0 minutes – 10/29, 21:30 UTC through 10/29, 22:30 UTC

We understand that customers rely on Azure Log Analytics as a critical service and apologize for any impact this incident caused.

-Jeff

Initial Update: Thursday, 29 October 2020 22:04 UTC

We are aware of issues within Log Analytics and are actively investigating. Some customers in the China region may experience an “internal server error” when trying to create a new workspace.

- Next Update: Before 10/30 00:30 UTC

We are working hard to resolve this issue and apologize for any inconvenience.

-Jeff

![[Guest Blog] A Trip to the Amazon Forest – Vision of an RD | MVP Microsoft](https://www.drware.com/wp-content/uploads/2020/10/large-1346)

by Contributed | Oct 29, 2020 | Technology

This article was written by Andre Ruschel, a Cloud and Datacenter Management MVP and Regional Director from Brazil. He shares an interesting Tech for Good project in which his team leveraged technology in the Amazon Rainforest and helped upskill people in the indigenous villages.

Today I am excited to share with you the work we’re doing within the Amazon Rainforest, bringing technology and learning to the indigenous villages.

In 2018 we held the MVP CONF event, a large conference organized by passionate Microsoft MVPs, where all proceeds from the sale of conference tickets are donated to nonprofits.

We decided to donate part of this money to FAS – Amazonas Sustainable Foundation so that they could bring technology to the indigenous villages within the Amazon Rainforest to help preserve nature and culture.

The Amazonas Sustainable Foundation is a civil society organization founded in 2008, with the mission of “contributing to the environmental conservation of the Amazon through the valorization of the standing forest, its biodiversity and the improvement of the quality of life of riverside communities associated with the implementation and dissemination of knowledge about sustainable development”.

Based in Manaus (AM), it carries out environmental, social and economic projects aimed at the conservation of the Amazon Rainforest. It is a non-profit, public-utility welfare charity with no political-party ties.

The rainforest is responsible for something even more important: according to National Geographic, it “contributes about 20% of the oxygen produced by photosynthesis on land.” Most of this oxygen is consumed by the trees, plants and microorganisms of the Amazon itself, but the forest is also home to approximately 10% of all species worldwide, including millions of insect types and fish species, among others.

Recent research has found that “disturbed” forest areas have experienced annual temperature increases 0.44 degrees Celsius higher than those in neighboring intact forests, which “equates to approximately half of the warming seen in the region in the last 60 years”.

I was particularly impressed with the Amazon Rainforest Chief as he shared his concern for the use of technology and education.

The Amazon Rainforest Chief said, “We want to have education and technology so that our family members can study and stay here to take care of the Forest.”

It is very important that new technologies reach the indigenous communities so that they can learn new ways of seeing life. The Internet is the best way to expand the areas of knowledge, both to bring indigenous culture to the world and to bring innovations to the communities.

The Indians, despite living in isolation from the rest of the world, are brimming with creativity and a genuine concern for quality education that many of us who live with privilege do not have. Bringing this immense creativity together with advantages in this digital age will be a turning point in helping improve villagers’ lives and enable them to learn valuable skills.

Working with the Chief and the villagers in the Amazon Rainforest was a fantastic experience and a true honor for me personally. I recommend that everyone who visits the Amazon Rainforest immerse themselves in it to get an idea of the importance of preserving the Forest and its cultural practices, while infusing technology to help improve the quality of education and upskill villagers.

I leave here an invitation for everyone to come and see the largest tropical forest in the world, the Amazon. Please feel free to reach out to me on Twitter if this is something you might be interested in doing the next time you’re in Brazil, and perhaps we can brainstorm suitable tech solutions together!

#HumansofIT

#TechforGood
