by Contributed | Dec 14, 2020 | Technology

This article is contributed. See the original author and article here.

Databases are essential workers in nearly all applications. They form the bedrock of a data architecture—handling transactions, record-keeping, data manipulation, and other crucial tasks on which modern apps rely. But for as long as databases have existed, people have looked for ways to speed them up. With databases so central to data architecture, even small reductions in throughput or latency performance can cause ripple effects that make the rest of the application sluggish and create a disappointing user experience. And there are financial repercussions too—one study found that the probability of a web site visitor bouncing rose by 90% if the page load time increased from one second to five seconds. This problem will likely become even more pronounced as web and mobile traffic increase. The solution isn’t always simple—scaling up databases can be expensive, and may not solve throughput or latency issues.

Caching Can Improve Application Performance

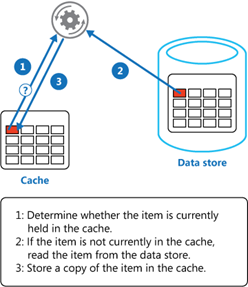

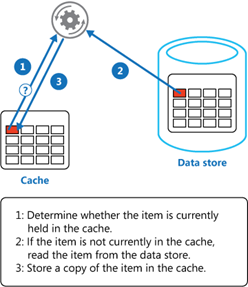

One way you can improve the performance of your data architecture is by implementing caching. In common setups like a cache-aside architecture, the most used data is stored in a fast and easy-to-access cache. When a user requests data, the cache is checked first before querying a database.

Combined with a relational database, a cache can store the results of the most common database queries and return them much faster than the database can when the application requests them. Not only can this result in significant reductions in latency, but it also reduces the load on the database, lowering the need to overprovision. Additionally, caches are typically better than databases at handling a high throughput of requests, which enables the application to handle more simultaneous users.

Caches are typically most beneficial for read-heavy workloads where the same data is being accessed again and again. Caching pricing, inventory, session state, or financial data are some examples of common use-cases. It’s important to note that caching won’t speed up operations internal to a database (like joins). Instead, caching lessens the need for those operations to occur by returning the results of a query before it reaches the database.
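The cache-aside read path described above can be sketched in a few lines. This is only a minimal illustration under stated assumptions: an in-process dictionary stands in for Redis, and `CacheAside`, `fake_inventory_query`, and the 60-second time-to-live are hypothetical names and values chosen for the example, not part of Azure Cache for Redis or the benchmark application.

```python
import time
from typing import Callable, Dict, Tuple


class CacheAside:
    """Cache-aside read path: check the cache first, fall back to the database."""

    def __init__(self, load_from_db: Callable[[str], str], ttl_seconds: float = 60.0):
        self._load_from_db = load_from_db  # hypothetical database query function
        self._ttl = ttl_seconds            # assumed expiry; tune per workload
        self._store: Dict[str, Tuple[float, str]] = {}  # key -> (expiry time, value)
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        entry = self._store.get(key)
        if entry is not None and entry[0] > time.monotonic():
            # Cache hit: the database is never touched.
            self.hits += 1
            return entry[1]
        # Cache miss (or expired entry): query the database, then populate the cache.
        self.misses += 1
        value = self._load_from_db(key)
        self._store[key] = (time.monotonic() + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        # Call this after a write so the next read fetches fresh data.
        self._store.pop(key, None)


db_calls = []


def fake_inventory_query(sku: str) -> str:
    # Stand-in for a real (slow) database query; records each call.
    db_calls.append(sku)
    return f"stock-for-{sku}"


cache = CacheAside(fake_inventory_query)
first = cache.get("sku-1")   # miss: goes to the "database"
second = cache.get("sku-1")  # hit: served from the cache
print(first, second, "database calls:", len(db_calls))
```

The second read never reaches the database, which is the effect the article attributes to caching read-heavy data such as inventory. In a real deployment the dictionary would be replaced by calls to a Redis client, and the invalidation step matters as soon as the cached data can change.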

Redis Cache

Redis is one of the most popular caching solutions in the market. It is a key-value datastore that runs in-memory, rather than on disk like most databases. Running in-memory makes it lightning-fast, and a terrific complement to more deliberate and consistent primary databases like Azure SQL Database or PostgreSQL. Redis is available as a fully-managed service on Azure through Azure Cache for Redis, offering automatic patching and updates, high-availability deployment options, and the latest Redis features. Azure Cache for Redis can neatly plug into your Azure data infrastructure as a cache, allowing you to boost data performance. But by how much? We decided to put it to the test.

Performance Benchmark Test

To measure the performance boost from using Redis in conjunction with a database, we turned to GigaOm to run benchmarks on the performance of Azure SQL Database with and without caching. Azure SQL Database is a good point of reference—it’s a highly advanced and full-featured database that is heavily utilized by the most demanding customers. While it already has great price-performance, adding Redis can help accelerate that performance from great to outstanding. To measure this, GigaOm created a sample application based on a real-world example: users viewing inventory on an e-commerce site and placing items into their shopping cart. This test was designed to be realistic while also featuring the benefits of caching. It was run twice: once with just Azure SQL Database handling the inventory and shopping cart data, and once with Azure SQL Database plus Azure Cache for Redis.

Azure App Service was used to host the sample application, and JMeter was used to provide simulated traffic. For each test, additional simultaneous users were periodically added until the database or cache returned errors. GigaOm looked at two performance metrics: throughput and latency.

Throughput Performance Increased by over 800%

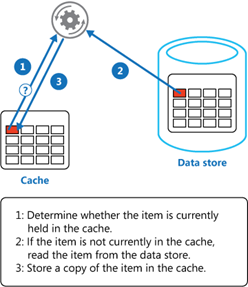

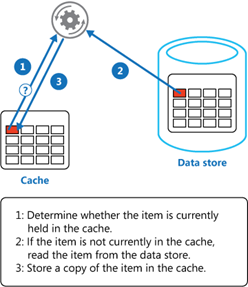

Throughput is a measurement of how much traffic the application can handle simultaneously. High throughput capability is essential to businesses that handle a lot of users or data—especially if demand tends to spike periodically. Azure Cache for Redis can handle millions of simultaneous requests with virtually no slowdown, making it highly suited for enhancing throughput performance. The test results showed this clearly:

Source: GigaOm

Source: GigaOm

Scaling up Azure SQL Database from 8 vCores to 32 vCores produced a 50% increase in throughput performance. While this is useful, adding vCores has limited impact on IOPS performance, which was the bottleneck here. Adding a cache was even more effective: maximum throughput was over 800% higher with Azure Cache for Redis, even when using a smaller database instance (2 vCores vs 8 vCores). That means more users, higher scalability, and better peak traffic performance, all without changing the core database infrastructure. Plus, scaling throughput with Azure Cache for Redis is typically much cheaper than scaling up the database.

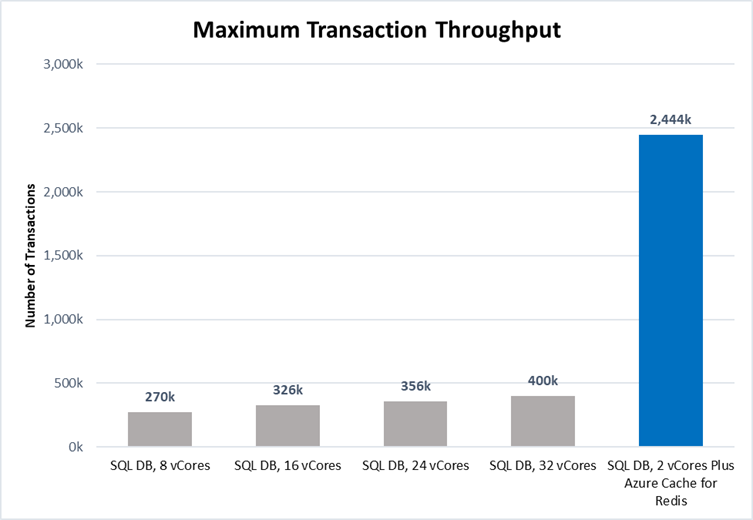

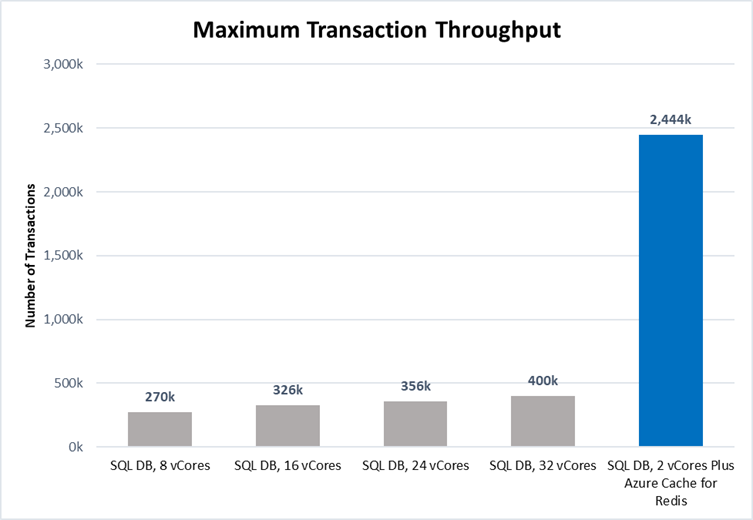

Latency Performance Improved by Over 1000%

Latency measures the time between when the application sends a request for data and when it receives the response. The lower the latency, the faster data is returned and the snappier the user experience. Azure Cache for Redis is particularly effective at operating with low latency because it runs in-memory. The benchmarking demonstrated this strongly:

Source: GigaOm

Source: GigaOm

Latency is typically measured at the 95th percentile level or above because delays tend to stack up. This is the “drive-thru” effect. If an order in front of you takes ten minutes, you do not care if your order takes only a few seconds: you had to wait for their order to be completed first! In our test, adding Azure Cache for Redis decreased the 95th percentile latency from over 3s down to 271ms. That is a reduction of roughly 91%, or responses more than 11 times faster, which is where the “over 1000%” improvement figure comes from. At the 99th and 99.9th percentile levels, the difference was even greater. Lower latency means faster applications and happier users, and Azure Cache for Redis is a great way for you to achieve the latency you need.
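To make the percentile idea concrete, here is a small sketch that computes tail latencies with the nearest-rank method and then works through the arithmetic behind the 3s to 271ms comparison. The sample latencies are invented for illustration and are not GigaOm's data; `percentile_nearest_rank` is a hypothetical helper, and 3000 ms is used as a lower bound for "over 3s".

```python
import math
from typing import Sequence


def percentile_nearest_rank(samples: Sequence[float], pct: float) -> float:
    """Return the smallest sample such that at least pct% of samples are <= it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]


# 100 invented request latencies in milliseconds: mostly fast, with a slow tail.
latencies_ms = [20.0] * 94 + [40.0, 60.0, 80.0, 100.0, 500.0, 3000.0]

mean_ms = sum(latencies_ms) / len(latencies_ms)
p50 = percentile_nearest_rank(latencies_ms, 50)
p95 = percentile_nearest_rank(latencies_ms, 95)
p99 = percentile_nearest_rank(latencies_ms, 99)
print(f"mean={mean_ms:.1f} ms  p50={p50} ms  p95={p95} ms  p99={p99} ms")

# Arithmetic behind the headline comparison, using 3000 ms as a lower bound.
before_ms, after_ms = 3000.0, 271.0
speedup = before_ms / after_ms        # how many times faster
reduction = 1 - after_ms / before_ms  # fraction of latency removed
print(f"speedup={speedup:.2f}x  reduction={reduction:.1%}")
```

The median hides the slow tail entirely, while the high percentiles expose it, which is why tail percentiles are the usual reporting level for latency.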

Try Azure Cache for Redis Today

Azure SQL Database is already a great database with excellent price-performance. Coupled with Azure Cache for Redis, this price-performance edge is amplified even further with a powerful solution for accelerating the throughput and latency performance of your database. Even better, Azure Cache for Redis fits into your existing data architecture and can often be bolted on without requiring huge application changes. Read the full benchmarking report, explore our free online training, and access our documentation to learn more.

Performance claims based on data from a study commissioned by Microsoft and conducted by GigaOm in October 2020. The study compared the performance of a test application using an Azure database with and without implementing Azure Cache for Redis as a caching solution. Azure SQL Database and Azure Database for PostgreSQL were used as the database element in the study. A 2 vCore Gen 5 General Purpose instance of Azure SQL Database and a 2 vCore General Purpose instance of Azure Database for PostgreSQL were used with a 6 GB P1 Premium instance of Azure Cache for Redis. These results were compared with 8, 16, 24, and 32 vCore Gen 5 General Purpose instances of Azure SQL Database and 8, 16, 24, and 32 vCore General Purpose instances of Azure Database for PostgreSQL without Azure Cache for Redis. The benchmark data is taken from the GigaOm Web Application Database Load Test, which simulates a common web application and backend database barraged by increasing HTTP requests. Actual results may vary based on configuration and region.


by Contributed | Dec 14, 2020 | Technology


Claire Bonaci

On this episode, I welcome Kelly Robke and Toni Thomas, the newest members of our Microsoft health and life sciences industry team. Toni is our US chief patient experience officer, and Kelly is our US chief nursing officer. Both women bring an extensive clinical background, as well as innovative ideas on how to transform care delivery and transform healthcare. Hi, Toni, welcome to the podcast. And welcome to Microsoft.

Toni Thomas

Hello, Claire. Thank you for having me on the podcast. And I’m very excited to be at Microsoft and be the first chief patient experience officer.

Claire Bonaci

Yeah, no, that leads me into my first question. You are the first chief patient experience officer that we have ever had at Microsoft. Do you mind telling our listeners just a little bit more about yourself?

Toni Thomas

Sure. So just a little background on myself: I actually grew up in a very small rural community in Northwest Ohio. And I always loved solving problems and helping people. I loved science, especially biology, anatomy, and physiology, and that led me to choose nursing as a career path and as how I wanted to get my education. I started at a community college, so I could actually get a two-year degree in nursing, and then went on and finished my bachelor’s degree, and then got a master’s degree as I was working. But right after nursing school, I went out and worked in pediatric ICU. And I’m actually still in touch with the same nurse manager that hired me. So we still talk. I spent 17 years in pediatric ICU. And I really have a passion for children and families and ICU care. I think it takes a unique type of person to work in a critical care environment. But I’m an adrenaline junkie, and it’s definitely adrenaline for 12 hours a day. But that environment actually is very rich with technology: a lot of machines, a lot of data. So that’s really kind of what got me on the path that led me into healthcare IT. And then on another personal note, I’ve been married for 29 years. My husband Michael is not in health care, and he’s not in technology, which is good. And we have two grown adult daughters.

Claire Bonaci

And why did you make the move to a tech company from the bedside? Was there anything that you saw where you felt, okay, I can be making a bigger difference, or this needs to be changed, that made you want to go that way?

Toni Thomas

Yeah, it was always just that constant curiosity. And I will say, you know, for those 17 years in pediatric ICU, I specialized in pediatric cardiothoracic surgery, so children with congenital heart disease. In the last part of my clinical career, I was working about 100 hours a week. I was the lead nurse practitioner on the cardiothoracic surgery service for pediatrics, so I managed the team and I worked side by side with the surgeon in the OR, as well as in the ICU. So number one, I was working a lot of hours. And it is an area in the specialty where there’s a high emotional burnout. But part of the job was also really being part of committees that were looking at or selecting different technologies or software. Even at the beginning of my career, I was always that nurse who was really interested in the technical or technology aspects of nursing, especially kind of thinking to myself, we’re using all these tools, and there’s data, like we’re putting information in. Where is the information going, and how is it going to help us and help our patients? So there was a critical moment in my career where I was like, okay, I’m either going to go back to school to get a PhD, or I even thought about becoming a nurse anesthetist. And then I was like, you know what, do I really want to go back to school? My kids are approaching junior high. Do I really want to go back to school and make that commitment, or do I just want to try to go out and get into the healthcare IT side of things? So I decided to take the healthcare IT route. And I’m now 12, almost 13 years into it. And I love every minute of it. I still practice nursing, I have all of this rich experience in patient experience and patient engagement, and I now get to help thousands of people at a time. And that really is what gets me excited every morning when I wake up.

Claire Bonaci

Yeah, I love that you mentioned the scale of that, that really, we can scale up. And that is the most exciting part. Really, I believe that as well. And do you mind touching a little bit more on your patient experience or clinician experience background? You mentioned it briefly.

Toni Thomas

Sure. So, you know, when you’re a bedside nurse, you’re always about patients and families, not just treating the patient. And so much of patient experience has to do with the clinician’s experience. So there’s that piece of it, that actual hands-on experience: dealing with patients and families, dealing with your colleagues. How do we do our work, not just to provide the clinical care that’s needed for the patients, the patient care side of it, but how do we make their day better in all aspects? And then, you know, as you get out into healthcare IT and you see that you’re touching thousands of people at a time, there’s a lot of power in that. There’s good power in that. So your mind is always turning on how do you design solutions for the healthcare systems to solve these critical problems around patient care and their business problems? And how do you help them provide value to patients and value to families? And then prior to coming to Microsoft, I was at a company that’s actually a Microsoft partner, a company that provides inpatient bedside digital technologies. So it’s all about the patient experience. It’s all about engaging the patients and the families and empowering them with data. So a lot of it is about manageable, consumable data and how that is democratized for the patients, how we provide education, and then also the consumer aspects of healthcare delivery. So this is one area that I’m really passionate about. Healthcare is always going to be healthcare, and it’s going to be that niche market. And there is that aspect of seriousness about, you know, you are helping someone at the most vulnerable time in their lives. So we all understand that. However, patients are consumers, and they come to the experience expecting some of those consumer aspects. And I feel strongly about helping healthcare systems be able to provide that for patients. And I think with Microsoft, you know, and what we’re doing here, there’s never been a better time to do that, especially during this period that we’re in, this unprecedented time of a global pandemic, where healthcare has really been rapidly forced to implement significant changes. And a lot of those changes are very consumer centric.

Claire Bonaci

That’s very true. I love that your background is very extensive. You have all of those experiences to pull from whether that’s bedside or your work at a partner company. What are you most excited about? I know you’re only a few weeks in at Microsoft so far, but what are you looking forward to or most excited about?

Toni Thomas

Well, first of all, let me tell you, it is so exciting to be a part of Microsoft. I’ve actually been a fan girl for a very, very long time. I am a huge fan of Bill Gates. And historically, the company, if you think about it, it’s an American company and it changed the world. And so to be able to be a part of that legacy, now with what Satya Nadella is doing here and the culture. And you know how Microsoft has always been a part of healthcare, but now we’re really focused and driven on how we’re going to provide a more longitudinal experience in the form of the Microsoft Cloud for Healthcare. So that’s exciting. Being the first chief patient experience officer is very exciting. It also bears great responsibility. I’m ready for it. I’m excited to be able to educate and inform the colleagues that are going out and evangelizing Microsoft, as well as our healthcare customers and our plans. On another note, I’m really excited about the potential that the pandemic is bringing. I know that sounds really strange. But I always look at opportunity as the upside to something so disruptive, and we’re in a very disruptive period. So the ability to have patients be able to have access to digital health tools on a scale that we’ve not seen before, specifically around virtual visits. I know artificial intelligence has really turned into a buzzword. But the opportunity that AI can bring for good, not just for patients and families, but also for clinicians, because we need to do a better job of helping our clinicians have a better day when they go to work. We all know what the nursing profession is going through right now. And I think that, you know, machine learning and AI can help take some of the task burden off of their plate and allow them to really focus on the knowledge capital that they have, which is all of their education and their experience, and how they can use that to treat their patients and spend more time with their patients, versus checking boxes, documenting and, you know, running around doing tasks. So those things really, really excite me.

Claire Bonaci

That’s great. I’m so excited to have you on the team. I’m excited to work more closely with you and to see what happens in the future.

Toni Thomas

Thank you so much for having me and have a great day.

Claire Bonaci

Hi, Kelly, welcome to the podcast.

Kelly Robke

Hi. Glad to be here. Thanks for having me, Claire.

Claire Bonaci

So Kelly, you are brand new to Microsoft, and you are our new chief nursing officer. Do you mind telling us a little bit about yourself and your background?

Kelly Robke

Sure. So I have been a registered nurse for 30 years. My nursing career is a little bit unique. I have had clinical practice experience, but I’ve really focused on areas of improving patient population health through innovation and technology. I started off my career working in labor and delivery with high-risk infants. I worked at Piedmont Hospital and Memorial Hermann in Houston, Texas. But what’s really cool about that clinical experience is you’re taking care of two patients, the mom and the baby. And you have a lot of opportunity to work with not only the EMR, but also the electronic devices that help monitor the vital signs of both mom and baby, as well as some opportunities to work with really cool stuff in the OR for cesarean sections. Around that time I also started working in clinical research, and through clinical research I was really exposed to data and the relevance of data and how important evidence is in helping to drive improvements in care. And I think part of innovation, when you talk about health care and improving the quality of care delivery, has to involve a conversation around not only how you introduce it, but what the environment looks like as well, and also whether you are implementing in a way that really makes it meaningful and beneficial, not only to the patients, but also to the folks that use the technology in that space. Beyond care delivery, I’ve worked in the CIO’s office at MD Anderson Cancer Center, helping the clinicians at one of the largest cancer institutions in the country adopt and utilize technology to the benefit of their patients. I’ve also worked at a number of technology companies. And most recently, at BD, I helped to bring Pyxis Enterprise to market. So I’m looking forward to continuing these endeavors with the team at Microsoft.

Claire Bonaci

Yeah, you have a very extensive background, what made you jump from being bedside and really being with the patients to more of that data side more focused on the analytics and really focusing on innovation?

Kelly Robke

Yeah, I think there is so much meaningful benefit to caring for patients and their families, for individuals or patient populations. But there’s something super exciting about being able to influence and impact positive change in healthcare, when you’re talking about groups of people in a certain specialty area, or even around the world. And I think anything you do has to be informed by evidence. There’s also the experience of clinical practice. But it’s super exciting to be involved in this endeavor these days, simply in that there are many different sources of data. And we focus on the inpatient care model, because that’s where the most critical care is delivered, and organize to structure, health. But as we continue in healthcare transformation and efforts to achieve the quadruple aim, we’re really looking outside the four walls of the hospital. And it’s super exciting because care strategies and workflows are different if you’re looking at outpatient settings or procedural settings. But now, particularly in the time we’re in with the pandemic, we really need to focus on other areas where healthcare is consumed, such as retail clinics and public health entities. And it’s super exciting to be a part of that, and also of how data is gathered, interpreted, and applied towards change in healthcare.

Claire Bonaci

That’s great. And I love that you do bring up kind of that shift towards making this scalable and really making this more of a larger impact. So you obviously made that big move from a med device company to a technology company. What do you hope to accomplish with this move? I guess, what are your dreams? And what are your goals, either short term or long term?

Kelly Robke

Sure, I’m excited to work with the folks providing health care, both from a provider standpoint and from the clinical investigators, and that’s an entire community, as well as the systems that are providing health. Like I just mentioned, I’m excited to be a part of the team that’s really driving the use of analytics, whether it’s descriptive or predictive, to help address some common challenges and some formidable challenges we’re experiencing out in health, not just from the pandemic, but how we can improve the care delivered to chronic condition patients who may have individual needs that can significantly impact the quadruple aim, again, in terms of cost and quality, but also in improving the health along the continuum of patient populations. How can we make sure that folks are doing preventative activities and are able to stay in their home while they’re recovering, in a way that’s both meaningful and beneficial to global health, and working with partners to achieve that goal? And also, the team here at Microsoft is really something I’m looking forward to and proud to be a part of.

Claire Bonaci

Great. Well, I am so happy that you’re on the team. I’m really looking forward to working with you more. And one quick last question for you: is there something you want to share that our listeners probably don’t know about you? Something fun, just to get to know you a little bit better.

Kelly Robke

Sure. I love to golf; you can find me on a golf course most weekends. I also like to cycle in races that raise money for charity. So there are several races that I compete in. And you might see me on the road if I’m not on the golf course sometime.

Claire Bonaci

That is great. Thank you so much for being on the podcast and I’m looking forward to having you back next time.

Kelly Robke

Thank you.

Claire Bonaci

Thank you all for watching. Please feel free to leave us questions or comments below and check back soon for more content from the HLS industry team.

Follow Kelly and Toni on LinkedIn

by Contributed | Dec 14, 2020 | Technology

This article is contributed. See the original author and article here.

Enterprise data continues to grow in volume, variety, and velocity, but much of this data is not analyzed. As analytics, BI, AI, and ML continue to gain momentum, giving data consumers easy access to meaningful data is needed now more than ever.

Chief Data Officers can now give business and technical analysts, data scientists, and data engineers access to trustworthy, valuable data.

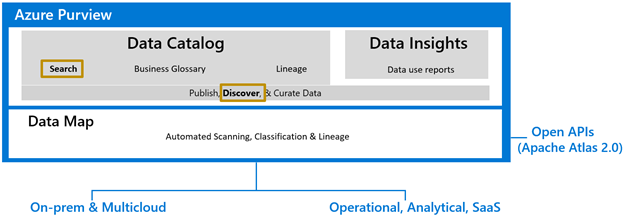

Figure 1: Search & Browse is at the core of Azure Purview that powers the discovery of business & technical metadata to drive data governance.

Intuitive search and browse experience:

The Search & Browse experience in Azure Purview Data Catalog makes finding the right data easy. Users specify what they are looking for in terms they are familiar with, and the search index created in Azure Purview does the rest. Drawing on the relationships between technical and business metadata gathered in the Purview Data Map, the search looks up the keyword in the index. Matches on attributes such as name, description, owner, and annotations return the corresponding assets. The search results are ranked for relevance using Azure Purview scoring profiles that build on the Azure Purview Data Map.

As an example, suppose a table carries the glossary term “Promotion” and a Power BI report has the description “Product promotion percent”. Searching for the keyword “promotion” returns both the table and the report, based on the keyword lookup on the term annotation and the description.
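The keyword lookup in this example can be illustrated with a toy inverted index. This is only a sketch of the general idea, not how Azure Purview is implemented: the asset records, the field names (`name`, `description`, `terms`), and the `build_index` and `search` helpers are all hypothetical, and no relevance scoring is attempted.

```python
import re
from collections import defaultdict

# Hypothetical asset records; the field names are illustrative only
# and do not reflect the Azure Purview metadata schema.
ASSETS = [
    {"name": "FactSales", "description": "", "terms": ["Promotion"]},
    {"name": "Campaign dashboard",
     "description": "Product promotion percent", "terms": []},
    {"name": "DimCustomer",
     "description": "Customer master data", "terms": ["Customer"]},
]

SEARCHABLE_FIELDS = ("name", "description", "terms")


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(assets):
    """Map each token to the (asset position, field) pairs it occurs in."""
    index = defaultdict(set)
    for pos, asset in enumerate(assets):
        for field in SEARCHABLE_FIELDS:
            value = asset[field]
            text = " ".join(value) if isinstance(value, list) else value
            for token in tokenize(text):
                index[token].add((pos, field))
    return index


def search(index, assets, keyword):
    """Return (asset name, matching fields) for every asset hit by the keyword."""
    hits = defaultdict(set)
    for token in tokenize(keyword):
        for pos, field in index.get(token, ()):
            hits[pos].add(field)
    return [(assets[pos]["name"], sorted(fields))
            for pos, fields in sorted(hits.items())]


index = build_index(ASSETS)
for name, fields in search(index, ASSETS, "promotion"):
    print(name, "matched on", fields)
```

Here the table matches through its glossary term and the report through its description, mirroring the example above. A production search service would add ranking, stemming, and access control on top of a lookup like this.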

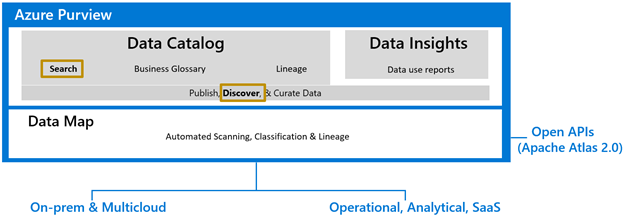

Figure 2: Azure Purview global search box for auto complete, recent search history, auto suggestion and so on.

Search techniques employed include semantics, wildcard search, auto complete, and handling of special characters. As soon as the user starts typing, the search shows three sections.

- Recent search history

- Search suggestions based on a lookup of the index. This shows matching text from attributes like descriptions, owners, classifications, labels and so on.

- Asset suggestions powered by a wildcard lookup.

The user can take up one of the recommendations or proceed as-is.
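The three suggestion sections listed above can be sketched as a single type-ahead function. This is a minimal illustration under stated assumptions: `suggest`, its parameters, and the sample data are hypothetical, the "index lookup" is reduced to a substring match, and the wildcard lookup uses Python's standard `fnmatch` module rather than anything Purview-specific.

```python
from fnmatch import fnmatchcase


def suggest(typed, recent_searches, indexed_phrases, asset_names, limit=3):
    """Return the three suggestion sections for the text typed so far."""
    text = typed.strip().lower()
    if not text:
        # Nothing typed yet: only recent history is meaningful.
        return {"recent": recent_searches[:limit],
                "search_suggestions": [],
                "asset_suggestions": []}

    # Section 1: recent searches that start with the typed text.
    recent = [q for q in recent_searches if q.lower().startswith(text)]

    # Section 2: indexed attribute text (descriptions, owners, labels, ...)
    # containing the typed text. A substring test stands in for the index.
    phrases = [p for p in indexed_phrases if text in p.lower()]

    # Section 3: asset names matched with a wildcard pattern.
    # Note: characters such as "[" or "?" in the typed text would be
    # interpreted as wildcard syntax in this simplified version.
    pattern = f"*{text}*"
    assets = [n for n in asset_names if fnmatchcase(n.lower(), pattern)]

    return {"recent": recent[:limit],
            "search_suggestions": phrases[:limit],
            "asset_suggestions": assets[:limit]}


result = suggest(
    "promo",
    recent_searches=["promotion 2020", "customer churn"],
    indexed_phrases=["Product promotion percent", "Customer master data"],
    asset_names=["promo_codes.csv", "DimCustomer", "sample_submission.csv"],
)
for section, items in result.items():
    print(section, items)
```

Keeping the three sections separate in the return value lets a user interface render them independently, which matches the way the search box presents them.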

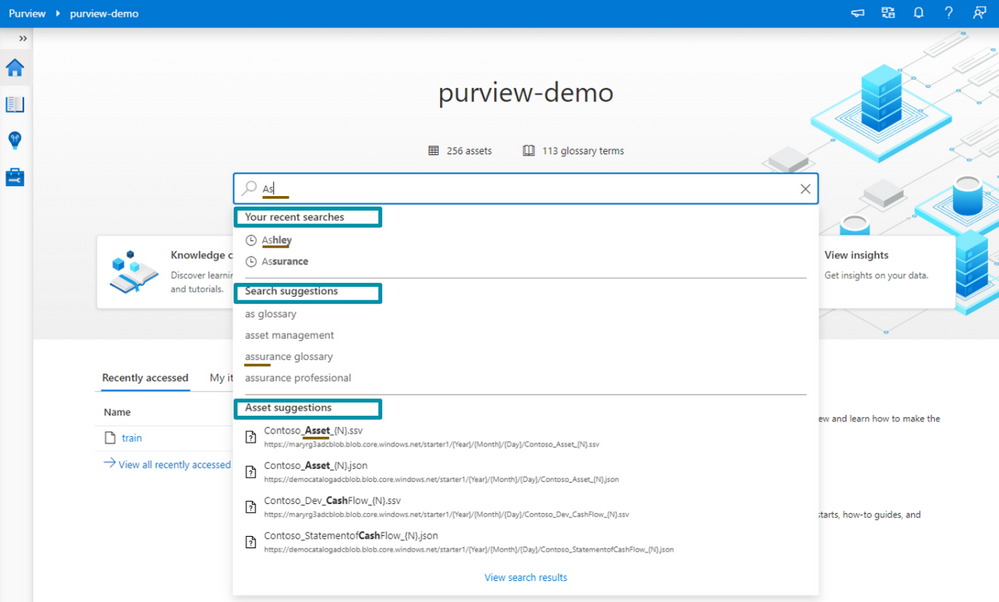

Hierarchical browsing by data source

Another way to discover data is via the Browse asset experience. Here users can navigate through a hierarchy of data systems to discover relevant data assets. For example, a user wants to get to a specific customer dataset that she knows resides in a folder called “Dimensions” in a data lake, but she does not know the actual name of the dataset. With Azure Purview, she can simply open the Browse asset experience and navigate the folder structure of the data lake to find the dataset details herself.

The hierarchical Browse asset experience is available for all types of data sources including SQL Server, Power BI, Storage account, Azure Data Factory, Teradata and so on.
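Conceptually, hierarchical browsing amounts to walking a tree of parent and child assets. The sketch below builds such a tree from a flat list of asset paths and lists the children at each level. The paths, the `build_hierarchy` and `browse` helpers, and the `/`-separated naming are assumptions made for illustration and are not taken from Azure Purview.

```python
from collections import defaultdict


def build_hierarchy(paths):
    """Map each node to the set of its direct children, given full asset paths."""
    children = defaultdict(set)
    for path in paths:
        parent = ""
        for part in path.strip("/").split("/"):
            node = f"{parent}/{part}"
            children[parent or "/"].add(node)
            parent = node
    return children


def browse(children, node="/"):
    """List the direct children of a node in sorted order."""
    return sorted(children.get(node, ()))


# Hypothetical data lake layout used only for this example.
PATHS = [
    "/datalake/Dimensions/customer_2020.parquet",
    "/datalake/Dimensions/product.parquet",
    "/datalake/Facts/sales.parquet",
]

tree = build_hierarchy(PATHS)
print(browse(tree))                          # top level
print(browse(tree, "/datalake"))             # folders in the lake
print(browse(tree, "/datalake/Dimensions"))  # datasets in "Dimensions"
```

A user who knows only the folder name “Dimensions” can reach the customer dataset by descending one level at a time, without ever typing the dataset name.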

Figure 3: Browse your organization’s data using the data source hierarchical namespace.

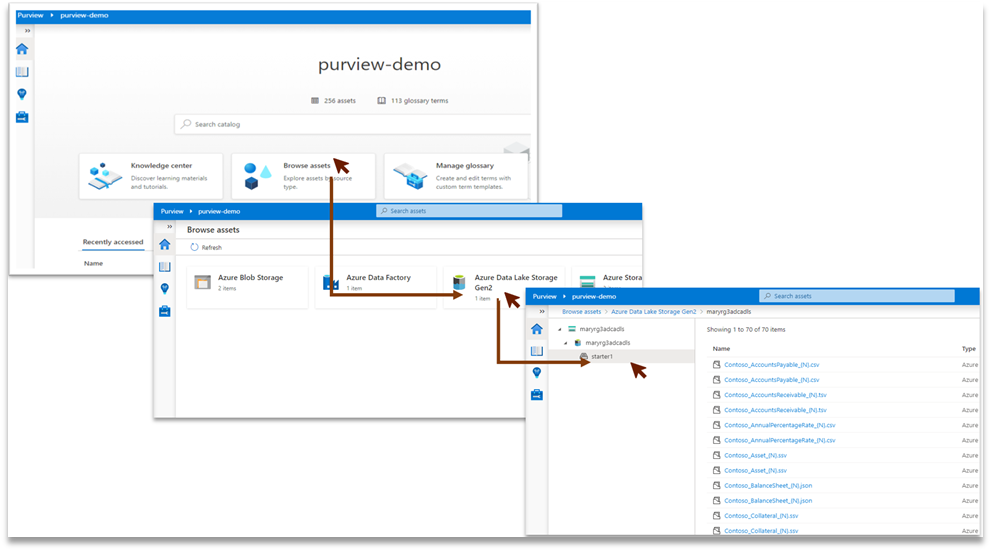

Related assets

Azure Purview combines the search and browse experience to enhance data discovery of structured and unstructured data. The discovery can start with a keyword search to get the list of assets ranked by search relevance. On asset selection, the overview of an asset and additional details like Schema, Lineage, Contacts, and Related tabs are displayed. Users can go to “Related” to see the technical hierarchy for the selected asset. Users can then navigate the hierarchy to see the child assets at each level.

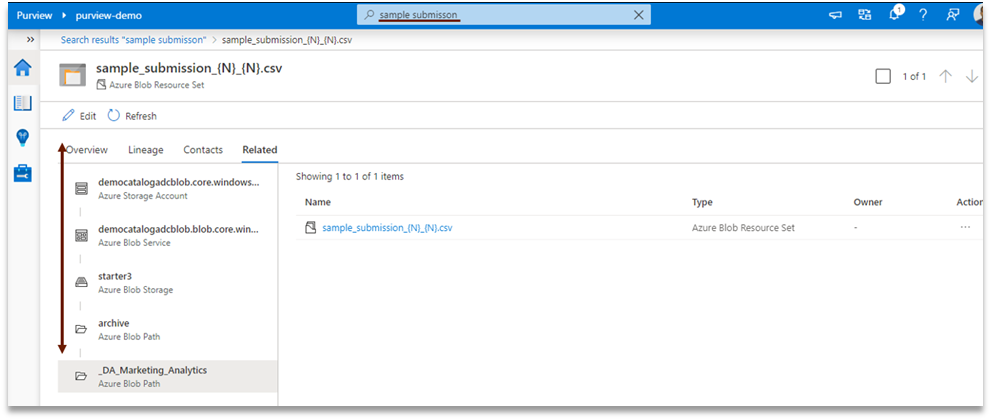

Figure 4: Navigate the data source hierarchy from the Related tab

In the screenshot above, data discovery started with a search for “sample submission”. From the search results, the blob “sample_submission.csv” is selected. In the Related tab, you can navigate the hierarchy of the Blob store to see related datasets in each folder.

Get started now!

We look forward to hearing how Azure Purview’s Search & Browse experience has helped unlock the potential of your organization’s data.

- Create an Azure Purview account now and start understanding your data supply chain from raw data to business insights with free scanning for all your SQL Server on-premises and Power BI online

- Use the tutorials to scan and catalog your organization’s data

- Start discovering your organization’s data using Search & Browse
