by Contributed | Dec 11, 2020 | Technology

This article is contributed. See the original author and article here.

To connect to Azure SQL Database, we can use many tools and programming languages, such as C#, PHP, and Java. In some situations, we need to test our application: for example, to measure how long a single connection takes, to review our retry logic, or to check the connection itself.

At this URL, you can find an example of several operations that you can perform using Java.

This application was designed around one main idea: measuring the elapsed time of the connection process and of query execution against an Azure SQL Database, using Java and the Microsoft JDBC Driver for SQL Server. This Java console application runs on both Windows and Linux.

The Java console application has the following main features:

- Connects using the Microsoft JDBC Driver for SQL Server, measuring the time spent.

- Once the connection is established, runs the query SELECT 1 multiple times, measuring the time spent.
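The post does not reproduce the application's code, so the following is only a minimal sketch of the two measurements listed above, not the author's implementation. It assumes the Microsoft JDBC Driver for SQL Server (mssql-jdbc) is on the classpath and that a complete JDBC URL such as `jdbc:sqlserver://<server>.database.windows.net:1433;database=<db>;user=<user>;password=<password>;encrypt=true` is supplied in a hypothetical `AZURE_SQL_URL` environment variable. The class name `SqlLatencyProbe` and the default of 10 iterations are also illustrative choices.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

// Minimal sketch (not the original application): time one connection and
// N executions of SELECT 1 against Azure SQL Database.
// Assumes mssql-jdbc is on the classpath and that AZURE_SQL_URL (a hypothetical
// environment variable) holds a complete JDBC URL.
class SqlLatencyProbe {

    /** Returns {min, avg, max} in milliseconds for elapsed times given in nanoseconds. */
    static double[] summarizeMillis(long[] nanos) {
        if (nanos.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        long min = Long.MAX_VALUE;
        long max = Long.MIN_VALUE;
        long sum = 0;
        for (long n : nanos) {
            min = Math.min(min, n);
            max = Math.max(max, n);
            sum += n;
        }
        return new double[] {min / 1e6, (sum / (double) nanos.length) / 1e6, max / 1e6};
    }

    public static void main(String[] args) throws SQLException {
        String url = System.getenv("AZURE_SQL_URL");
        if (url == null) {
            System.err.println("Set AZURE_SQL_URL to a JDBC connection URL first.");
            return;
        }
        // Number of SELECT 1 executions; 10 is an arbitrary illustrative default.
        int iterations = args.length > 0 ? Math.max(1, Integer.parseInt(args[0])) : 10;

        long start = System.nanoTime();
        try (Connection conn = DriverManager.getConnection(url)) {
            // Elapsed time for DriverManager.getConnection, login included.
            System.out.printf("Connect: %.2f ms%n", (System.nanoTime() - start) / 1e6);

            long[] samples = new long[iterations];
            try (Statement stmt = conn.createStatement()) {
                for (int i = 0; i < iterations; i++) {
                    long t0 = System.nanoTime();
                    try (ResultSet rs = stmt.executeQuery("SELECT 1")) {
                        rs.next(); // read the single row so the round trip is complete
                    }
                    samples[i] = System.nanoTime() - t0;
                }
            }
            double[] s = summarizeMillis(samples);
            System.out.printf("SELECT 1 x%d: min %.2f ms, avg %.2f ms, max %.2f ms%n",
                    iterations, s[0], s[1], s[2]);
        }
    }
}
```

Running the class with an argument of 50 would time the connection and then 50 executions of `SELECT 1`. `System.nanoTime()` is used instead of `System.currentTimeMillis()` because it is a monotonic clock intended for measuring elapsed time.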

You can find the instructions in the readme file.

As always, all feedback and contributions are very welcome.

Enjoy!

by Contributed | Dec 11, 2020 | Technology


The Develop Hub in Azure Synapse Analytics enables you to write code and define business logic using a combination of notebooks, SQL scripts, and data flows. This gives us a development experience in which we can query, analyze, and model data in multiple languages, with Intellisense support for each of them. It provides a rich interface for authoring code, and in this post we will see how to use the Knowledge Center to jump-start our development experience.

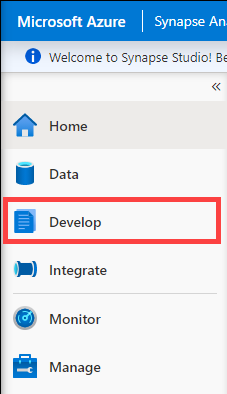

The first thing we will do is open our Synapse workspace. From there, choose the Develop option to open the Develop Hub.

The Develop Hub option is selected

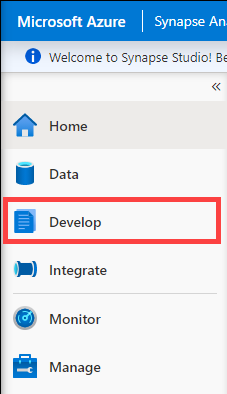

Once inside the Develop Hub, we can create new SQL scripts, notebooks, data flows, and more. In the list of options is the Browse gallery menu item. Select this to open up the Knowledge Center gallery.

The new Develop item option is selected first, followed by the Browse gallery option

Load a notebook from the Knowledge Center

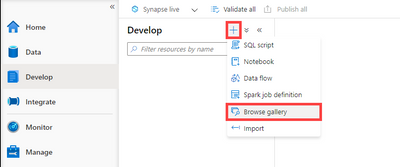

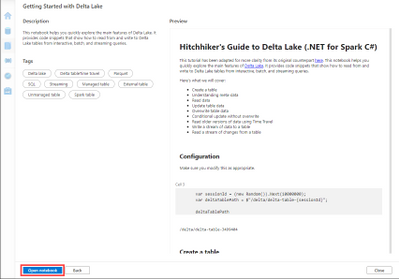

In the Knowledge Center gallery, choose the Notebooks tab. This will bring up the set of pre-created notebooks in the gallery. These notebooks cover a variety of languages, including PySpark, Scala, and C# (with Spark.NET). For this post, we will use the Getting Started with Delta Lake example in Spark.NET C#.

The Getting Started with Delta Lake notebook is selected

This brings up a preview, giving us context around what the notebook has to offer.

The Getting Started with Delta Lake notebook preview tells us what to expect in the notebook

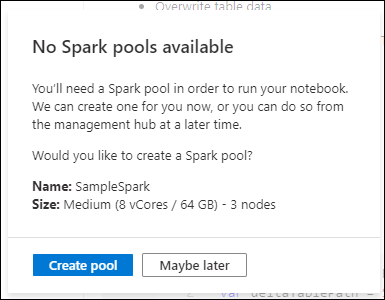

If we do not have an Apache Spark pool already, we will be prompted to create one. Apache Spark pool creation may take a few minutes, and we will receive a notification when it is ready to go.

Create an Apache Spark pool named SampleSpark

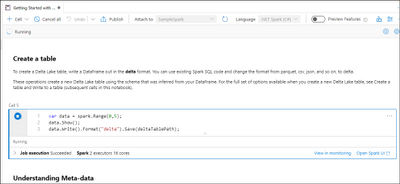

From here, we can execute the cells in the notebook and try out some of the capabilities of the Apache Spark pools. We can make use of Intellisense to simplify our development experience, as well.

A C# notebook execution is in progress

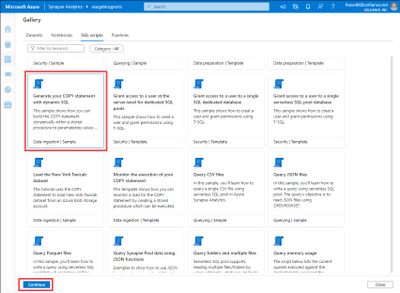

Notebooks are not the only element of the Knowledge Center gallery, however: we can also work with serverless SQL pools and dedicated SQL pools by way of SQL scripts.

Generate a SQL script from the Knowledge Center

Returning to the Knowledge Center gallery, we can choose the SQL scripts tab, which brings up a set of samples and templates for use. We will take a look at the sample SQL script entitled Generate your COPY statement with dynamic SQL. Just as with the notebook from above, we will select the script and then Continue.

The SQL script entitled Generate your COPY statement with dynamic SQL is selected

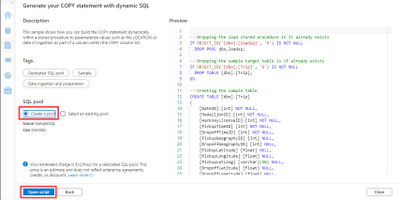

This brings us to a preview page for the script which enables you to create a new dedicated SQL pool or choose an existing one. We will create a new dedicated SQL pool named SampleSQL and select Open script to continue.

The Generate your COPY statement with dynamic SQL preview tells us what to expect in the SQL script

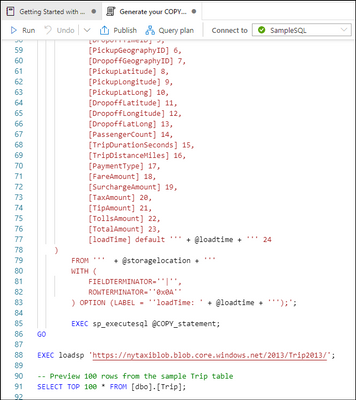

Dedicated SQL pool deployment may take a few minutes, and we will receive a notification when it is ready to go. At this point, we can run the query and see the results. Running this query may take several minutes, depending on the size of your dedicated SQL pool, as the script loads 170,261,325 records into the newly created dbo.Trip table.

The SQL script is ready to be executed

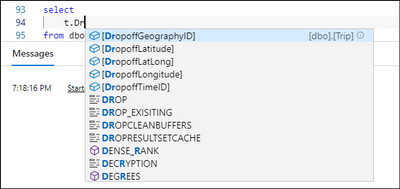

This particular script shows how to use the COPY command in T-SQL to load data from Azure Data Lake Storage into the dedicated SQL pool. Just as with notebooks, the Develop Hub editor for SQL scripts includes Intellisense.

Intellisense provides a list of column names and operators, simplifying the development process
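The gallery script builds its COPY statement with dynamic T-SQL. As a rough illustration of the same idea, the hypothetical Java helper below assembles a `COPY INTO` statement string from a table name, a source location, and a few CSV options (`FILE_TYPE`, `FIELDTERMINATOR`, `FIRSTROW`). It is not the gallery script: the storage URL is a placeholder, and the sketch assumes the source is readable without a `CREDENTIAL` clause and that the table name comes from trusted input (only the quoted literals are escaped).

```java
// Hypothetical helper, not the gallery script: assembles a COPY INTO statement
// for a dedicated SQL pool. Quoted literals are escaped; the table name is
// assumed to come from trusted input and is inserted as-is.
class CopyStatementBuilder {

    /** Doubles single quotes so a value can sit inside a T-SQL string literal. */
    static String quote(String value) {
        return "'" + value.replace("'", "''") + "'";
    }

    static String buildCopy(String table, String location, String fileType,
                            String fieldTerminator, int firstRow) {
        return "COPY INTO " + table + "\n"
                + "FROM " + quote(location) + "\n"
                + "WITH (\n"
                + "    FILE_TYPE = " + quote(fileType) + ",\n"
                + "    FIELDTERMINATOR = " + quote(fieldTerminator) + ",\n"
                + "    FIRSTROW = " + firstRow + "\n"
                + ")";
    }

    public static void main(String[] args) {
        // Placeholder storage URL; substitute your own container and path.
        System.out.println(buildCopy("dbo.Trip",
                "https://example.blob.core.windows.net/data/trip/*.txt", "CSV", "|", 1));
    }
}
```

The generated text could then be run from the SQL script editor or passed to `Statement.execute` over a JDBC connection to the dedicated SQL pool.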

Clean up generated resources

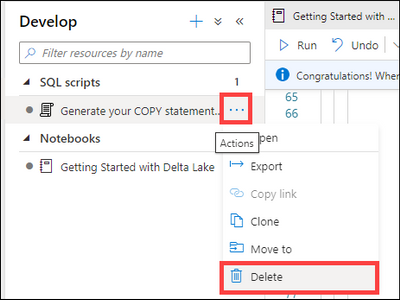

When we’ve finished learning about the Develop Hub, teardown is easy. In the Develop Hub, find the SQL scripts and notebooks we created and for each, select the … menu and then choose the Delete option. We will receive a confirmation prompt before deleting to avoid accidental deletion of the wrong script.

Delete a notebook or a SQL script

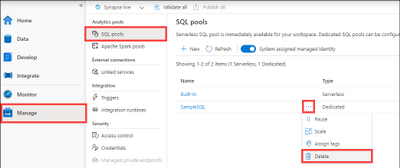

Then, we want to navigate to the Manage Hub and tear down the dedicated SQL pool and the Apache Spark pool that we have created. In the SQL pools menu option, select the … menu and then Delete the pool. We will need to type in the name of the dedicated SQL pool (SampleSQL) as a confirmation.

Delete the dedicated SQL pool

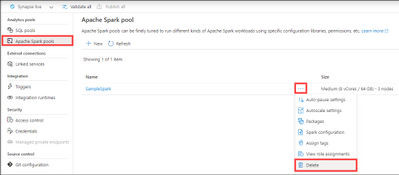

After that, navigate to the Apache Spark pools menu option, select the … menu, and then Delete the pool. We will again need to type in the name of the Apache Spark pool (SampleSpark) to confirm our intent.

Delete the Apache Spark pool

That takes care of all of the resources we created in this blog post.

Conclusion

In this blog post, we learned how we can use the Knowledge Center to gain an understanding of what is possible in the Develop Hub.

Get started quickly with Azure Synapse and try this tutorial with these resources:

by Contributed | Dec 11, 2020 | Technology


We are excited to introduce adutil in public preview. adutil is a CLI-based utility developed to ease Active Directory (AD) authentication configuration for both SQL Server on Linux and SQL Server Linux containers. AD authentication enables domain-joined clients on either Windows or Linux to authenticate to SQL Server using their domain credentials and the Kerberos protocol.

Until now, when configuring AD authentication for SQL Server on Linux, you needed to switch from the Linux machine to a Windows machine to create the AD user for SQL Server and set its SPNs, and then switch back to the Linux machine to complete the remaining steps. With adutil, we aim to make this experience seamless: you can interact with and manage Active Directory domains through the CLI from the Linux machine itself.

Overall, adutil is a utility for interacting with and managing Active Directory domains through the CLI. It is designed as a series of commands and subcommands, with additional flags that can be specified for further input. Each top-level command represents a category of administrative functions, and each subcommand is an operation within that category. Using adutil, you can manage users, SPNs, keytabs, groups, and more.
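adutil handles the server-side setup (the AD user, its SPNs, and the keytab). For context, the sketch below shows what a Java client on a domain-joined machine might use afterwards: an SPN in the conventional `MSSQLSvc/<fqdn>:<port>` form and a Microsoft JDBC Driver for SQL Server URL that requests Kerberos through `integratedSecurity=true;authenticationScheme=JavaKerberos`. The class and method names, host name, and database are hypothetical, and the sketch assumes the client already has a Kerberos ticket (for example from `kinit`) and a working krb5 configuration. It does not call adutil itself.

```java
// Hypothetical client-side sketch: assumes the AD user, SPN, and keytab were already
// set up (for example with adutil) and that this machine has a Kerberos ticket
// (e.g. from kinit) plus a valid krb5 configuration.
class KerberosJdbcExample {

    /** SPN in the conventional SQL Server form MSSQLSvc/<fqdn>:<port>. */
    static String sqlServerSpn(String fqdn, int port) {
        return "MSSQLSvc/" + fqdn + ":" + port;
    }

    /** Microsoft JDBC Driver URL requesting Kerberos authentication via the Java GSS-API. */
    static String kerberosJdbcUrl(String fqdn, int port, String database) {
        return "jdbc:sqlserver://" + fqdn + ":" + port
                + ";databaseName=" + database
                + ";integratedSecurity=true"
                + ";authenticationScheme=JavaKerberos";
    }

    public static void main(String[] args) {
        // Placeholder host and database names.
        System.out.println(sqlServerSpn("sqlhost.contoso.com", 1433));
        System.out.println(kerberosJdbcUrl("sqlhost.contoso.com", 1433, "master"));
    }
}
```

Passing such a URL to `DriverManager.getConnection` would authenticate with the domain identity instead of a SQL login. The SPN helper only illustrates the string format, which should match what was registered for the SQL Server service account.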

To start using adutil, see adutil installation for the install steps.

For details on how to configure AD authentication with adutil for SQL Server on Linux and for SQL Server Linux containers, refer to the following:

Configure Active Directory authentication for SQL Server on Linux using adutil

Configure Active Directory authentication for SQL Server on Linux containers using adutil

Thanks,

Engineering lead: Mike Habben

Engineering: Dylan Gray; Dyllon (Owen) Gagnier; Ethan Moffat; Madeline MacDonald

Amit Khandelwal, Senior Program Manager

by Contributed | Dec 11, 2020 | Technology


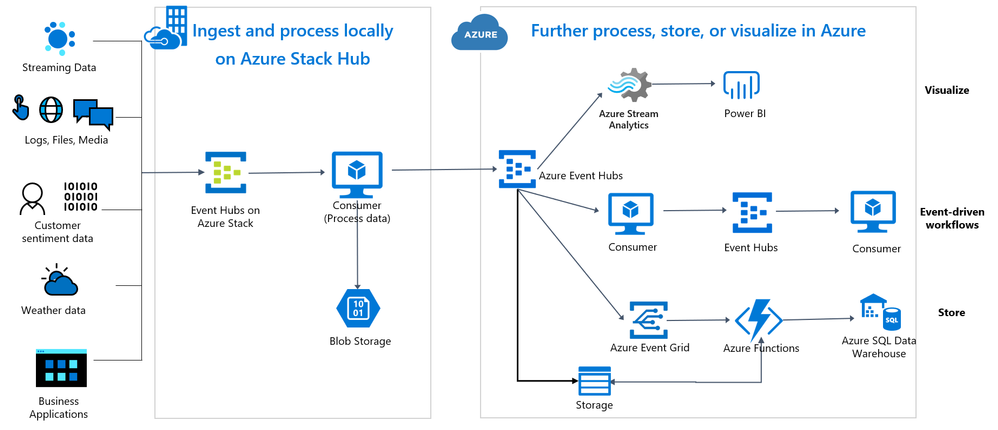

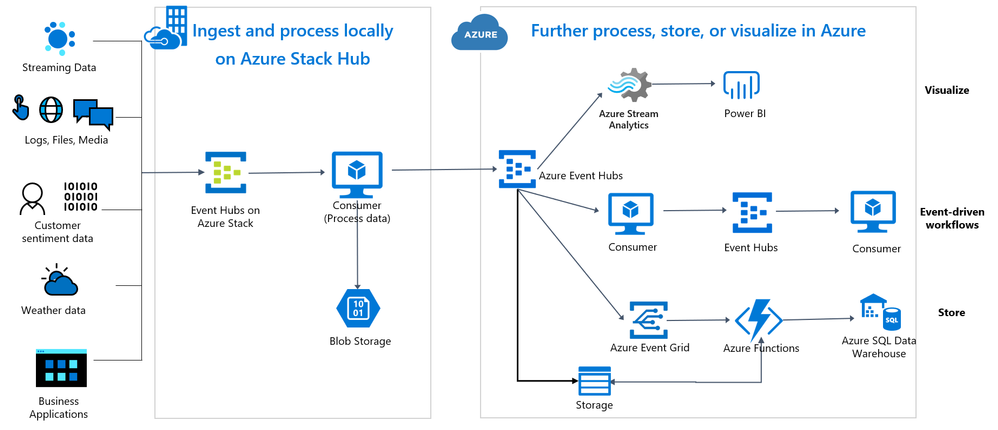

Event Hubs is the popular, highly reliable, and extremely scalable event streaming engine that backs thousands of applications across every kind of industry in Microsoft Azure. We are now announcing the general availability of Event Hubs on Azure Stack Hub, which allows you to realize cloud and on-premises scenarios that use streaming architectures. With this GA release, you can use the same features as Event Hubs on Azure, such as Kafka protocol support, a rich set of client SDKs, and the same Azure operational model. Whether you use Event Hubs in a hybrid (connected) scenario or a disconnected one, you can build solutions that support large-scale stream processing, bounded only by the size of the Event Hubs cluster that you provision according to your needs.
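Because Event Hubs supports the Kafka protocol, an existing Kafka client can often be pointed at it through configuration alone. The sketch below builds standard Apache Kafka client properties using the SASL PLAIN pattern documented for Event Hubs on Azure, where the username is the literal `$ConnectionString` and the password is the namespace connection string. The bootstrap address and connection string are placeholders: this post does not state the endpoint format for Azure Stack Hub (on Azure, Kafka clients connect on port 9093), so take those values from your own namespace. The class name is hypothetical, and the resulting `Properties` would be passed to a `KafkaProducer` or `KafkaConsumer` from the `kafka-clients` library, which the sketch itself does not depend on.

```java
import java.util.Properties;

// Hypothetical sketch: Kafka client settings for an Event Hubs namespace, following
// the SASL PLAIN pattern documented for Event Hubs on Azure. The bootstrap address
// and connection string are placeholders taken from your own namespace.
class EventHubsKafkaConfig {

    static Properties kafkaProps(String bootstrapServers, String connectionString) {
        Properties p = new Properties();
        p.put("bootstrap.servers", bootstrapServers);
        p.put("security.protocol", "SASL_SSL"); // TLS transport plus SASL authentication
        p.put("sasl.mechanism", "PLAIN");
        // Username is the literal "$ConnectionString"; the password is the connection string.
        p.put("sasl.jaas.config",
                "org.apache.kafka.common.security.plain.PlainLoginModule required "
                        + "username=\"$ConnectionString\" password=\"" + connectionString + "\";");
        return p;
    }
}
```

Keeping the settings in a plain `Properties` object means the same producer or consumer code can target Event Hubs on Azure or on Azure Stack Hub by changing only these values.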

Run event processing tasks and build event-driven applications on site

With Event Hubs available on your Azure Stack Hub, you will be able to implement business scenarios like the following:

- AI and machine learning workloads where Event Hubs is the streaming engine.

- Implement streaming architectures in your own sites outside the Azure datacenters.

- Clickstream analytics for your web application(s) deployed on-prem.

- Device telemetry analysis.

- Stream processing with open source frameworks that use Apache Kafka such as Apache Spark, Flink, Storm, and Samza.

- Consume Compute guest OS metrics and events.

Build Hybrid solutions

Build solutions on Azure that complement or leverage the data processed in your Azure Stack Hub. Send aggregated data to Azure for further processing, visualization, and/or storage. If appropriate, leverage serverless computing on Azure.

Feature Parity

Event Hubs offers the same features on Azure and on Azure Stack Hub with very few feature deviations between both platforms. Please consult our documentation on features supported by Event Hubs for more information.

Pricing

Use Event Hubs on Azure Stack Hub for free through December 31st, 2020. Starting on January 1st, 2021, you will be charged for the Event Hubs clusters you create. For more information on pricing, please consult the Azure Stack Hub Pricing page later in December.

New to Event Hubs or not sure if it is the right message broker for your needs?

Learn about Event Hubs by consulting our documentation which is applicable to Event Hubs on Azure and on Azure Stack Hub.

If you are unsure whether Event Hubs meets your requirements, please consult this article to understand the different message brokers that Azure provides and their applicability to different use cases. While only Event Hubs is currently available on Azure Stack Hub, knowing the messaging requirements that each of our message brokers seeks to meet will give you a clear idea of whether Event Hubs is the right solution for you.

Questions?

We are here to help you through your hybrid computing journey. Following are the ways you can get your issues or questions answered by our teams:

- Problems with Event Hubs? Please create a support request using the Azure Portal. Please make sure to select “Event Hubs on Azure Stack Hub” as the value for Service in the Basics (first step) tab.

- Problems with any of our SDK clients? Just submit a GitHub issue in any of the following repositories: Java, Python, JavaScript, and .NET.

- 300+ level questions about the use of Event Hubs? Please send your questions to askeventhubs@microsoft.com or reach out to the community discussion group. You can also leave a message on this blog.

Next Steps
