by Contributed | Jan 26, 2021 | Technology

This article is contributed. See the original author and article here.

Learn to develop real-world Internet of Things solutions built with Microsoft Azure services from experts from around the world!

On January 19, the IoT Advocacy team partnered with 9 amazing IoT focused MVPs from around the world plus our very own Olivier Bloch and CVP of Azure IoT Sam George to deliver a live event across Asia Pacific, Europe Middle East, and the Americas in three unique time zones. This event served as a flagship demonstration of the Internet of Things – Event Learning Path, an open-source collection of IoT focused sessions designed for IoT Solution Architects, Business Decision Makers, and Development teams.

Each session begins with a 15 minute Introductory presentation and is supported by a 45 minute video recording which deep dives into the topics introduced. We encourage partners, field teams, and general IoT enthusiasts to reuse any portions of the content as they see fit for example: user group presentations, third party conferences, or internal training systems!

That is exactly what we did for the All Around Azure – A Developer’s Guide to IoT Event, with the help of a worldwide team of experts from the Azure IoT Community, we adapted the 15 minute introductory sessions for delivery to a global audience. The sessions drew 1,800+ live viewers over three local-specific timeslots.

There really is no better time than now to leverage our IoT skilling content as you can now officialize as an official Azure Certified IoT Developer with the availability of the AZ-220 IoT Developer Exam. And even better, we are currently running a “30 Days to Learn It – IoT Challenge” which challenges you to complete a collection of IoT focused modules on Microsoft Learn, and rewards you with a 50% off voucher to sit for the AZ-220 IoT Developer Exam!

You can catch up on all the excitement and learning from the All Around Azure IoT Event on Channel 9 or right here on the #IoTTechCommunity!

Keynote with Sam George and Olivier Bloch with SketchNote by @nityan

Live Sessions and Associated Deep Dives

With 80% of the world’s data collected in the last 2 years, it is estimated that there are currently 32 billion connected devices generating said data. Many organizations are looking to capitalize on this for the purposes of automation or estimation and require a starting point to do so. This session will share an IoT real world adoption scenario and how the team went about incorporating IoT Azure services.

Data collection by itself does not provide business values. IoT solutions must ingest, process, make decisions, and take actions to create value. This module focuses on data acquisition, data ingestion, and the data processing aspect of IoT solutions to maximize value from data.

As a device developer, you will learn about message types, approaches to serializing messages, the value of metadata and IoT Plug and Play to streamline data processing on the edge or in the cloud.

As a solution architect, you will learn about approaches to stream processing on the edge or in the cloud with Azure Stream Analytics, selecting the right storage based on the volume and value of data to balance performance and costs, as well as an introduction to IoT reporting with PowerBI.

For many scenarios, the cloud is used as a way to process data and apply business logic with nearly limitless scale. However, processing data in the cloud is not always the optimal way to run computational workloads: either because of connectivity issues, legal concerns, or because you need to respond in near-real time with processing at the Edge.

In this session we dive into how Azure IoT Edge can help in this scenario. We will train a machine learning model in the cloud using the Microsoft AI Platform and deploy this model to an IoT Edge device using Azure IoT Hub.

At the end, you will understand how to develop and deploy AI & Machine Learning workloads at the Edge.

A large part of value provided from IoT deployments comes from data. However, getting this data into the existing data landscape is often overlooked. In this session, we will start by introducing what are the existing Big Data Solutions that can be part of your data landscape. We will then look at how you can easily ingest IoT Data within traditional BI systems like Data warehouses or in Big Data stores like data lakes. When our data is ingested, we see how your data analysts can gain new insights on your existing data by augmenting your PowerBI reports with IoT Data. Looking back at historical data with a new angle is a common scenario. Finally, we’ll see how to run real-time analytics on IoT Data to power real time dashboards or take actions with Azure Stream Analytics and Logic Apps. By the end of the presentation, you’ll have an understanding of all the related data components of the IoT reference architecture.

In this session we will explore strategies for secure IoT device connectivity in real-world edge environments, specifically how use of the Azure IoT Edge Gateway can accommodate offline, intermittent, legacy environments by means of Gateway configuration patterns. We will then look at implementations of Artificial Intelligence at the Edge in a variety of business verticals, by adapting a common IoT reference architecture to accommodate specific business needs. Finally, we will conclude with techniques for implementing artificial intelligence at the edge to support an Intelligent Video Analytics solution, by walking through a project which integrates Azure IoT Edge with an NVIDIA DeepStream SDK module and a custom object detection model built using CustomVision.AI to create an end-to-end solution that allows for visualization of object detection telemetry in Azure services like Time Series Insights and PowerBI.

by Contributed | Jan 26, 2021 | Technology

This article is contributed. See the original author and article here.

In this installment of the weekly discussion revolving around the latest news and topics on Microsoft 365, hosts – Vesa Juvonen (Microsoft) | @vesajuvonen, Waldek Mastykarz (Microsoft) | @waldekm, are joined by MVP, “Stickerpreneur”, conference speaker, and engineering lead Elio Struyf (Valo Intranet) | @eliostruyf.

Their discussion focuses on building products on the Microsoft 365 platform, from a partner perspective. Angles explored – platform control, product ownership, communications, a marriage, importance of roadmap, areas for improvement, communications, and value. Considerations behind product development and distribution strategy including on-Prem, SAAS offering or delivery to customer to host in their own cloud with assistance from partners. Valo is effectively an ISV that delivers solutions like an SI.

This episode was recorded on Monday, January 25, 2020.

Did we miss your article? Please use #PnPWeekly hashtag in the Twitter for letting us know the content which you have created.

As always, if you need help on an issue, want to share a discovery, or just want to say: “Job well done”, please reach out to Vesa, to Waldek or to your Microsoft 365 PnP Community.

Sharing is caring!

by Contributed | Jan 26, 2021 | Technology

This article is contributed. See the original author and article here.

Authors: Lu Han, Emil Almazov, Dr Dean Mohamedally, University College London (Lead Academic Supervisor) and Lee Stott, Microsoft (Mentor)

Both Lu Han and Emil Almazov, are the current UCL student team working on the first version of the MotionInput supporting DirectX project in partnership with UCL and Microsoft UCL Industry Exchange Network (UCL IXN).

Examples of MotionInput

Running on the spot

Cycling on an exercise bike

Introduction

This is a work in progress preview, the intent is this solution will become a Open Source community based project.

During COVID-19 it has been increasingly important for the general population’s wellbeing to keep active at home, especially in regions with lockdowns such as in the UK. Over the years, we have all been adjusting to new ways of working and managing time, with tools like MS Teams. It is especially the case for presenters, like teachers and clinicians who have to give audiences instructions, that they do so with regular breaks.

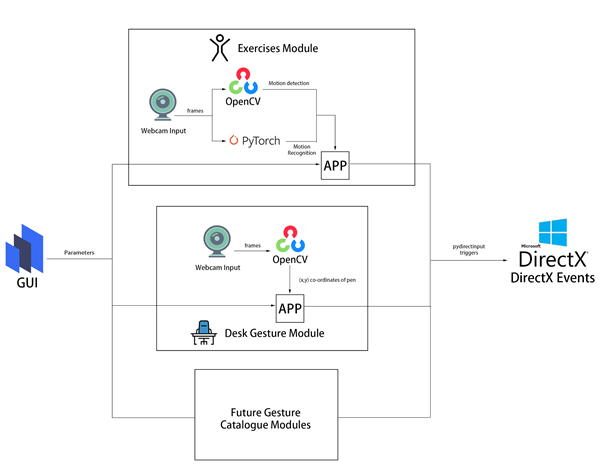

UCL’s MotionInput supporting DirectX is a modular framework to bring together catalogues of gesture inputs for Windows based interactions. This preview shows several Visual Studio based modules that use a regular webcam (e.g. on a laptop) and open-source computer vision libraries to deliver low-latency input on existing DirectX accelerated games and applications on Windows 10.

The current preview focuses on two MotionInput catalogues – gestures from at-home exercises, and desk-based gestures with in-air pen navigation. For desk-based gestures, in addition to being made operable with as many possible Windows based games, preliminary work has been made towards control in windows apps such as PowerPoint, Teams and Edge browser, focusing on the work from home era that uses are currently in.

The key ideas behind the prototype projects are to “use the tech and tools you have already” and “keep active”, providing touchless interactive interfaces to existing Windows software with a webcam. Of course, Sony’s EyeToy and Microsoft Kinect for Xbox have done this before and there are other dedicated applications that have gesture technologies embedded. However, many of these are no longer available or supported on the market and previously only worked with dedicated software titles that they are intended for. The general population’s fitness, the potential for physiotherapy and rehabilitation, and use of motion gestures for teaching purposes is something we intend to explore with these works. Also, we hope the open-source community will revisit older software titles and make selections of them become more “actionable” with further catalogue entries of gestures to control games and other software. Waving your arms outreached in front of your laptop to fly in Microsoft Flight Simulator is now possible!

The key investigation is in the creation of catalogues of motion-based gestures styles for interfacing with Windows 10, and potentially catalogues for different games and interaction genres for use industries, like teaching, healthcare and manufacturing.

The teams and projects development roadmap includes trialing at Great Ormond Street’s DRIVE unit and several clinical teams who have expressed interest for rehabilitation and healthcare systems interaction.

Key technical advantages

- Computer vision on RGB cameras on Windows 10 based laptops and tablets is now efficient enough to replace previous depth-camera only gestures for the specific user tasks we are examining.

- A library of categories for gestures will enable many uses for existing software to be controllable through gesture catalogue profiles.

- Bringing it as close as possible to the Windows 10 interfaces layer via DirectX and making it as efficient as possible on multi-threaded processes reduces the latency so that gestures are responsive replacements to their corresponding assigned interaction events.

Architecture

All modules are connected by a common windows based GUI configuration panel that exposes the parameters available to each gesture catalogue module. This allows a user to set the gesture types and customise the responses.

The Exercise module in this preview examines repetitious at-home based exercises, such as running on the spot, squatting, cycling on an exercise bike, rowing on a rowing machine etc. It uses the OpenCV library to decide whether the user is moving by calculating the pixel difference between two frames.

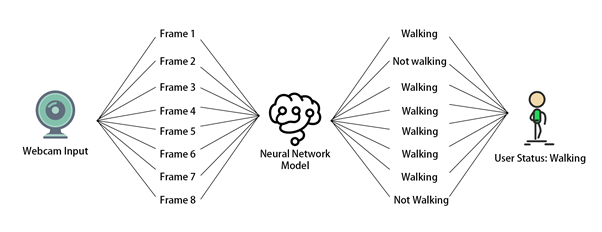

The PyTorch exercise recognition model is responsible for checking the status of the user every 8 frames. Only when the module decides the user is moving and the exercise he/she is performing is recognized to be the specified exercise chosen in the GUI, DirectX events (e.g. A keypress of “W” which is moving forward in many PC games) will be triggered via the PyDIrectInput’s functions.

The Desk Gestures module tracks the x and y coordinates of the pen each frame, using the parameters from the GUI. These coordinates are then mapped to the user’s screen resolution and fed into several PyDirectInput’s functions that trigger DirectX events, depending on whether we want to move the mouse, or press keys on the keyboard and click with the mouse.

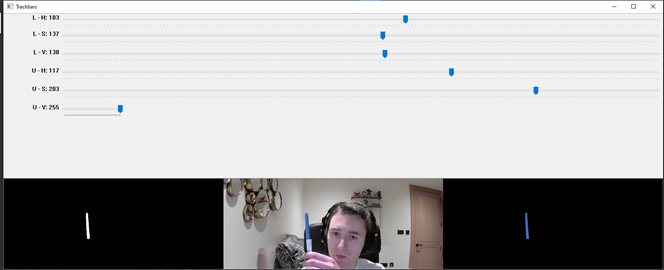

Fig 1 – HSV colour range values for the blue of the pen

From then the current challenge and limitation is having other objects with the same colour range in the camera frame. When this happens, the program detects the wrong objects and therefore, produces inaccurate tracking results. The only viable solution is to make sure that no objects with similar colour range are present in the camera view. This is usually easy to achieve and if not, a simple green screen (or another screen of a singular colour) can be used to replace the background.

In the exercises module, we use OpenCV to do motion detection. This involves subtracting the current frame from the last frame and taking the absolute value to get the pixel intensity difference. Regions of high pixel intensity difference indicate motion is taking place. We then do a contour detection to find the outlines of the region with motion detected. Fig 2 shows how it looks in the module.

Technical challenges

OpenCV

In the desk gestures module, to track the pen, we had to provide an HSV (Hue, Saturation, Value) colour range to OpenCV so that it only detected the blue part of the pen. We needed to find a way to calculate this range as accurately as possible.

The solution involves running a program where the hue, saturation, and value channels of the image could be adjusted so that only the blue of the pen was visible(see Fig 1). Those values were then stored in a .npy file and loaded into the main program.

Fig 2 – Contour of the motion detected

Multithreading

Videos captured by the webcam can be seen as a collection of images. In the program, OpenCV keeps reading the input from the webcam frame by frame, then each frame is processed to get the data which is used to categorize the user into a status (exercising or not exercising in the exercise module, moving the pen to different directions in the desk gesture module). The status change will then trigger different DirectX events.

Initially, we tried to check the status of the user after every time the data is ready, however, this is not possible because most webcams are able to provide a frame rate of 30 frames per second, which means the data processing part is performed 30 times every second. If we check the status of the user and trigger DirectX events at this rate, it will cause the program to run slow.

The solution to this problem is multithreading, which allows multiple tasks to be executed at the same time. In our program, the main thread handles the work of reading input from webcam and data processing, and the status check is executed every 0.1 seconds in another thread. This reduces the execution time of the program and ensures real-time motion tracking.

Human Activity Recognition

In the exercise module, DirectX events are only triggered if the module decides the user is doing a particular exercise, therefore our program needs to be able to classify the input video frames into an exercise category. This then belongs to a broader field of study called Human Activity Recognition, or HAR for short.

Recognizing human activities from video frames is a challenging task because the input videos are very different in aspects like viewpoint, lighting and background. Machine learning is the most widely used solution to this task because it is an effective technique in extracting and learning knowledge from given activity datasets. Also, transfer learning makes it easy to increase the number of recognized activity types based on the pre-trained model. Because the input video can be viewed as a sequence of images, in our program, we used deep learning, convolutional neural networks and PyTorch to train a Human Activity Recognition model that can output the action category given an input image. Fig 3 shows the change of loss and accuracy during the training process, in the end, the accuracy of the prediction reached over 90% on the validation dataset.

Fig 3 – Loss and accuracy diagram of the training

Besides training the model, we used additional methods to increase the accuracy of exercise classification. For example, rather than changing the user status right after the model gives a prediction of the current frame, the status is decided based on 8 frames, this ensures the overall recognition accuracy won’t be influenced by one or two incorrect model predictions [Fig 4].

Fig 4 – Exercise recognition process

Another method we use to improve the accuracy is to ensure the shot size is similar in each input image. Images are a matrix of pixels, the closer the subject is to the webcam, the greater the number of pixels representing the user, that’s why recognition is sensitive to how much of the subject is displayed within the frame.

To resolve this problem, in the exercise module, we ask the user to select the region of interest in advance, the images are then cropped to fit the selection [Fig 5]. The selection will be stored as a config file and can be reused in the future.

Fig 5 – Region of interest selection

DirectX

The open-source libraries used for computer vision are all in Python so the library ‘PyDirectInput’ was found to be most suitable for passing the data stream. PyDirectInput is highly efficient at translating to DirectX.

Our Future Plan

For the future, we plan to add a way for the user to record gestures to a profile and store it in a catalogue. From there on the configuration panel they will be able to assign mouse clicks, any keyboard button presses and sequences of button presses, for the user to map to their specific gesture. This will be saved as gesture catalogue files and can be reused in different devices.

We are also benchmarking and testing the latency between gestures performed and DirectX events triggered to further evaluate efficiency markers, hardware limits and exposing timing figures for the users configuration panel.

We will be posting more videos on our progress of this work on our YouTube channels (so stay tuned!), and we look forward to submitting our final year dissertation project work at which point we will have our open-source release candidate published for users to try out.

We would like to build a community interest group around this. If would like to know more and join our MotionInput supporting DirectX community effort, please get in touch – d.mohamedally@ucl.ac.uk

Bonus clip for fun – Goat Simulator

by Contributed | Jan 26, 2021 | Technology

This article is contributed. See the original author and article here.

Targets are locked. We’re flooding the torpedo tubes so we’re ready to dive into battle for this Feb 2nd event. I believe it’s time to share some freshly declassified details about things we have planned, so let’s kick this off with the event flow and info about our Keynote Speaker for the event.

On Feb 2nd, 7:30 AM PST ITOps Talks: All Things Hybrid will be LIVE on Microsoft Learn TV to kickstart the festivities. I’ll be there chatting with folks from my team about the logistics of the event, why we’re doing this, how you can get involved in hallway conversations and how to participate throughout the coming days. After we’ve gotten the logistical stuff out of the way – we’ll be introducing our featured keynote speaker.

Who is that you ask? (drum roll please)

It’s none other than Chief Technology Officer for Microsoft Azure – Mark Russinovich! After debating with the team about possible candidates – Mark was an obvious choice to ask. As a Technical Fellow and CTO of Azure paired with his deep technical expertise in the Microsoft ecosystem – Mark brings a unique perspective to the table. He’s put together this exclusive session about Microsoft Hybrid Solutions and has agreed to join us for a brief interview and live Q&A following the keynote. I’ve had a quick peek at what he has instore for us and I’m happy to report: it’s really cool.

After the Keynote and Live Q&A on February 2nd, we will be releasing the full breadth of content I introduced to you in my previous blogpost. All sessions will be live for your on-demand viewing at a time and cadence that meets your schedule. You can binge watch them all in a row or pick and choose selective ones to watch when you have time in your busy life / work schedule – It’s YOUR choice. We will be publishing one blog post for each session on the teams ITOpsTalk.com blog with the embedded video so they will be easy to find as well as having all supporting reference documentation, links to additional resources as well as optional Microsoft Learn modules to learn even more about their related technologies.

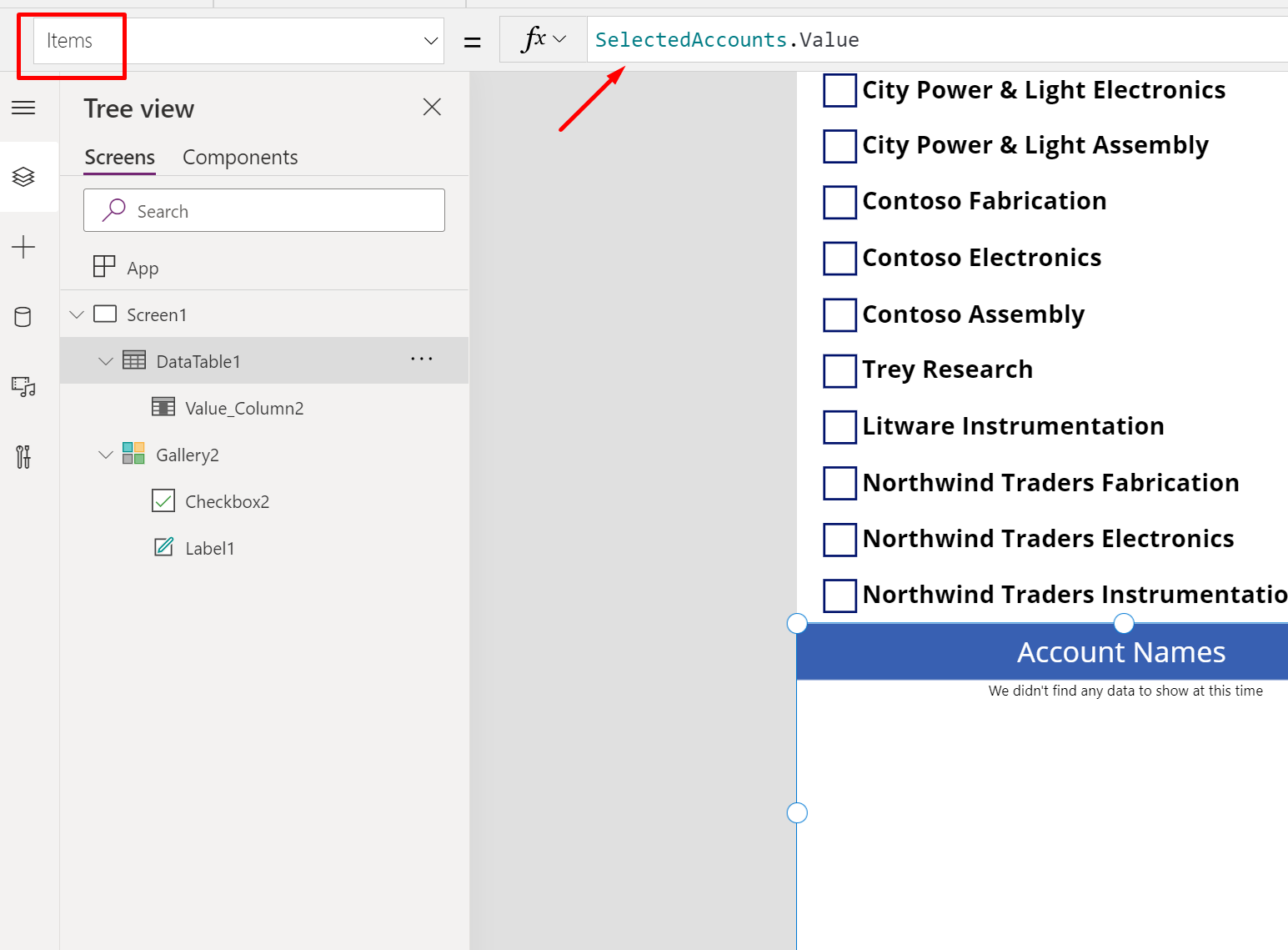

What about the connectedness you would feel during a real event? Where are the hallway conversations? We’re trying something out using our community Discord server. After the keynote and the release of the session content, you will want to login to Discord (have you agreed to the Code of Conduct / Server Rules?) where you will see a category of channels that looks something like this:

The first channel is just a placeholder with descriptions of each talk and a link to that “chat” channel. It’s really just for logistics and announcements. The second channel “itops-talks-main-channel” is where our broad chat area is with no real topic focus, other than supporting the event and connecting with you. The rest of the channels (this graphic is just a sample) identify the session code and title of each session. THIS is where you can post questions, share your observations, answer other folks questions and otherwise engage with the speakers / local experts at ANY point of the day or night. The responses may not be in real-time if the speaker is asleep or if the team is not available at the time you ask – but don’t worry – we’ll be there to connect once we’re up and functioning.

Oh Yeah! Remember – there is no need for registration to attend this event. You may want to block the time in your calendar though…. just in case. Here’s a quick and handy landing page where you can quickly/conveniently download an iCal reminder for the Europe/Eastern NorthAmerica livestream OR Asia Pacific/Western NorthAmerica livestream.

Anything else you’d like to know? Hit me up here in the comments or can ask us in the Discord server. Heck – you can even ping us with a tweet using the #AzOps hashtag on Twitter.

Recent Comments