by Contributed | Jan 22, 2021 | Technology

This article is contributed. See the original author and article here.

I needed to go through this subject this week, so I thought it would be a good opportunity to share some SQL Serverless architecture concepts.

1) What is the difference between SQLOD and the former SQLDW?

Unlike SQL Serverless or SQL On-demand, a dedicated SQL pool (the former SQL DW) lets you:

- Grow or shrink compute power, within a dedicated SQL pool, without moving data.

- Pause compute capacity while leaving data intact, so you only pay for storage.

- Resume compute capacity during operational hours.

With SQL DW you can pause and resume compute. You can insert, update, and delete data. Your data lives in storage that SQL DW is responsible for.

What is SQLOD?

SQLOD is a query service over the data in your data lake. You do not need to pause or resume it. It is a consumption-based, on-demand service. The service is resilient to failure and elastic.

Note: Serverless SQL pool has no local storage; only metadata objects are stored in databases. It is essentially for reading data only.

What does resilient to failure and elastic mean?

It means the engine automatically scales the nodes’ resources if needed while querying your files, and if any node fails, the service recovers without user intervention.

What is supported on Serverless?

Supported T-SQL:

- Full SELECT surface area is supported, including a majority of SQL functions

- CETAS – CREATE EXTERNAL TABLE AS SELECT

- DDL statements related to views and security only

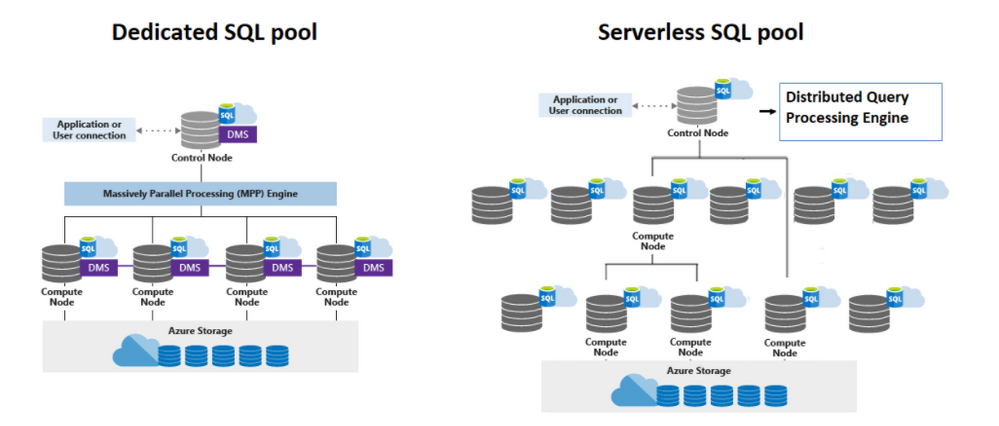

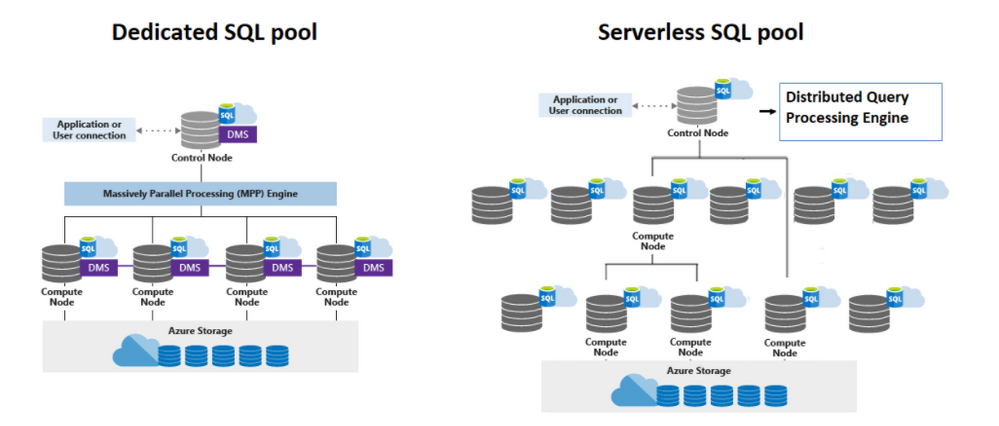

How does it work?

- DQP or Distributed Query Processing.

- Compute Node

DQP is responsible for optimizing and orchestrating the distributed execution of user queries by splitting them into smaller queries that are executed on Compute nodes.

The Compute nodes execute the tasks created by the DQP. The tasks are essentially the query logic broken up over chunks of data to be processed. Those chunks of data are the files, organized in data cells. How many data cells and tasks are executed depends on the plan optimization.

Plan optimization also depends on statistics. The more serverless SQL pool knows about your data, the faster it can execute queries against it; in the end, the plan chosen is the one with the lowest estimated cost. Note: Automatic creation of statistics is turned on for Parquet files. For CSV files, you need to create statistics manually.
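To make the split between the DQP and the Compute nodes more concrete, here is a toy Python sketch. It is not how the Synapse engine is implemented: the file names, the `plan_tasks` and `run_task` helpers, and the round-robin assignment are all assumptions made for illustration. It only shows the general idea of turning one aggregate query over many files into per-node tasks and then merging the partial results.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical "data lake": file name -> rows (each row is a sale amount).
FILES = {
    "sales_2021_01.parquet": [10, 20, 30],
    "sales_2021_02.parquet": [5, 15],
    "sales_2021_03.parquet": [40],
    "sales_2021_04.parquet": [1, 2, 3, 4],
}


def plan_tasks(file_names, node_count):
    """Toy 'DQP' step: assign files to nodes round-robin, one task per node."""
    tasks = [[] for _ in range(node_count)]
    for index, name in enumerate(sorted(file_names)):
        tasks[index % node_count].append(name)
    # Drop empty tasks when there are more nodes than files.
    return [task for task in tasks if task]


def run_task(file_batch):
    """Toy 'compute node' step: partial SUM and COUNT over its own files."""
    rows = [value for name in file_batch for value in FILES[name]]
    return sum(rows), len(rows)


def distributed_sum_count(node_count=2):
    """Run the per-node tasks in parallel, then merge the partial aggregates."""
    tasks = plan_tasks(FILES, node_count)
    with ThreadPoolExecutor(max_workers=node_count) as pool:
        partials = list(pool.map(run_task, tasks))
    total = sum(partial[0] for partial in partials)
    count = sum(partial[1] for partial in partials)
    return total, count
```

Calling `distributed_sum_count()` returns the same total and row count whatever `node_count` is; only the way the work is divided changes. A real optimizer would pick the division based on statistics and cost rather than a fixed round-robin.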

Ref: Synapse SQL architecture – Azure Synapse Analytics | Microsoft Docs

Serverless SQL pool – Azure Synapse Analytics | Microsoft Docs

https://www.microsoft.com/en-us/research/publication/polaris-the-distributed-sql-engine-in-azure-synapse/

Create and update statistics using Azure Synapse SQL resources – Azure Synapse Analytics | Microsoft Docs

That is it!

Liliam Leme

UK Engineer.

by Scott Muniz | Jan 22, 2021 | Security

This article was originally posted by the FTC. See the original article here.

For most of us, it’s been a long time since we’ve been able to attend a live event. Think back, if you can, to the last time you tried to buy tickets online to go to a concert, a game, or a play. Were you shut out because tickets sold out before you got yours? You’re not alone.

So what happened? Sometimes there just aren’t enough tickets available for everyone who wants to attend an event, especially if promoters save tickets for artists and other VIPs. Ticket bots may also be a factor. People may use software to buy tickets quicker than the average consumer. They also might use bots to cheat the ticketing system and bypass ticket limits or to buy tickets using fake names and addresses. Then they resell the tickets for higher prices. Congress passed the Better Online Ticket Sales (BOTS) Act to address these problems.

We (the Federal Trade Commission) settled three cases with companies that violated the BOTS Act. The companies circumvented Ticketmaster’s security measures to buy thousands of tickets that they later re-sold at a profit. The court orders require the companies to stop their illegal ticket-buying practices and impose civil penalties. (Read the business blog post to learn more about the case.)

The next time you’re looking to score tickets to a must-see event:

- Look for opportunities to buy tickets before they go on sale to the public. Sign up for newsletters or alerts from ticket sellers, artists, or venues, or follow them on social media. And check with your credit card company about promotions.

- Set up an account with the ticket seller. That way you’ll be ready to buy as soon as tickets go on sale.

- Check back. The promoters might make more tickets available after the initial release or add another show.


by Contributed | Jan 22, 2021 | Technology

When designing any solution, we often look for common practices or patterns that can be re-used. Think about this from a software development perspective. You’ve probably heard about the Factory Method, Builder, or Singleton patterns.

What about design patterns for the cloud? Fortunately, a number of well-known patterns already exist and are documented! You can find them on the Azure Architecture Center, in the Cloud Design Patterns section.

In the following video, Chris Reddington and Peter Piper explore the Gatekeeper Pattern and the Valet Key Pattern.

https://www.youtube-nocookie.com/embed/zM3hJBZu2vA

What is the Gatekeeper Pattern?

The Gatekeeper Pattern helps you to protect your application and services by exposing your application or service through a dedicated instance. This dedicated instance (the gatekeeper) is a type of Façade layer that decouples clients from your trusted hosts. The gatekeeper may perform tasks like authentication or authorization, or other sanitization steps such as rate limiting or checking for specific metadata in requests.

It may be useful in scenarios where you have a distributed application (e.g. a set of microservices), and want to centralize your validation steps for simplicity. Alternatively, if your application has requirements for a high level of protection from malicious threats, then you may want to consider reviewing this pattern.

What should you consider before implementing the Gatekeeper pattern?

- The Gatekeeper should be kept lightweight, and typically focuses upon validation/sanitization. Try not to get pulled into a trap of any processing related to your applications, which would introduce coupling between services!

- As the Gatekeeper is “less trusted” than your trusted hosts, the Gatekeeper and the trusted hosts are typically hosted in separate environments.

- As the Gatekeeper is a Façade-based pattern, you are introducing an extra step in your application’s routing which means this may increase latency.

- Given that the Gatekeeper is a type of Façade, be careful not to introduce a single point of failure into your architecture. Implement scaling of your Gatekeeper component as needed.

This is just a brief summary of the pattern, and some key considerations. For the full detail, check out The Gatekeeper Pattern on the Azure Architecture Center.
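As a rough illustration of the Gatekeeper idea, the sketch below puts a small validation layer in front of a stand-in for a trusted host. Everything in it is hypothetical: the `Request` and `Gatekeeper` classes, the hard-coded API key, the `/api/` path rule, and the rate-limit numbers are assumptions chosen to keep the example short, not part of any Azure service.

```python
from dataclasses import dataclass, field
from typing import Callable
import time

# Assumption: a hard-coded key set stands in for real authentication.
VALID_API_KEYS = {"demo-key"}
MAX_REQUESTS_PER_WINDOW = 3
WINDOW_SECONDS = 60.0


@dataclass
class Request:
    api_key: str
    path: str
    body: str = ""


@dataclass
class Gatekeeper:
    """Validates requests, then forwards them to the trusted host."""
    forward: Callable
    _hits: dict = field(default_factory=dict)

    def handle(self, request, now=None):
        now = time.monotonic() if now is None else now
        if request.api_key not in VALID_API_KEYS:
            return 401, "unauthorized"
        if not request.path.startswith("/api/") or ".." in request.path:
            return 400, "bad path"
        # Sliding-window rate limit per API key.
        recent = [t for t in self._hits.get(request.api_key, [])
                  if now - t < WINDOW_SECONDS]
        if len(recent) >= MAX_REQUESTS_PER_WINDOW:
            return 429, "too many requests"
        self._hits[request.api_key] = recent + [now]
        # Only validated requests reach the trusted host; no business logic here.
        return self.forward(request)


def trusted_host(request):
    """Stand-in for the protected service."""
    return 200, f"handled {request.path}"
```

A caller would create `Gatekeeper(forward=trusted_host)` and send every request through `handle`. The gatekeeper only validates and forwards, which keeps it lightweight and avoids coupling it to the application logic behind it.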

What is the Valet Key Pattern?

The Valet Key pattern could also be considered if security is important. At a high level, the Valet Key Pattern is an approach to prevent direct access to resources and instead uses keys or tokens to restrict access to those resources.

Consider an Azure Storage Account with blobs in a private container. You could provide access to the account using the Storage Account Key, but that would grant overall direct access to the storage account and pose a security risk. Instead, you could generate time-bound permissions-restricted access to a set of files in the Storage Account using Shared Access Signatures. A Shared Access Signature is an example implementation of the Valet Key pattern.

What should you consider before implementing the Valet Key pattern?

- As a token/key is required to provide restricted access, how do you provide that secret material to the user in the first place? Make sure to send it to the user securely.

- Ensure that you have a key rotation strategy in place ahead of time. Don’t wait until a token is compromised to test your operational process of rotating keys!

The Valet Key is a separate architectural pattern in its own right, but it is worth noting that it is commonly used in combination with the Gatekeeper pattern.

This is just a brief rundown of the pattern, and some common considerations. For the full detail, check out The Valet Key Pattern on the Azure Architecture Center.
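The core of the Valet Key idea, a time-bound and permission-restricted token that can be checked without handing out the master key, can be sketched in a few lines of Python. This is not the Shared Access Signature format: the token layout, the `issue_token` and `check_token` helpers, and the `SECRET_KEY` value are assumptions made purely for illustration.

```python
import base64
import hashlib
import hmac
import time

# Assumption: this secret never leaves the server that issues tokens.
SECRET_KEY = b"server-side-secret"


def _sign(payload: str) -> str:
    digest = hmac.new(SECRET_KEY, payload.encode("utf-8"), hashlib.sha256).digest()
    return base64.urlsafe_b64encode(digest).decode("ascii")


def issue_token(resource: str, permissions: str, expires_at: int) -> str:
    """Return a token granting `permissions` on `resource` until `expires_at`."""
    payload = f"{resource}|{permissions}|{expires_at}"
    return f"{payload}|{_sign(payload)}"


def check_token(token: str, resource: str, permission: str, now=None) -> bool:
    """Accept the token only if it is untampered, unexpired, and in scope."""
    now = int(time.time()) if now is None else now
    try:
        tok_resource, tok_permissions, tok_expiry, signature = token.split("|")
        expiry = int(tok_expiry)
    except ValueError:
        return False
    payload = f"{tok_resource}|{tok_permissions}|{tok_expiry}"
    expected = _sign(payload).encode("utf-8")
    if not hmac.compare_digest(signature.encode("utf-8"), expected):
        return False
    return (tok_resource == resource
            and permission in tok_permissions
            and now < expiry)
```

The client only ever sees the token, never `SECRET_KEY`, so a leaked token exposes one resource with limited permissions for a limited time. Rotating `SECRET_KEY` invalidates every outstanding token, which is why the rotation process is worth rehearsing ahead of time.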

Remember, there are many more cloud design patterns that you can use in your own solutions! Check them out on the Azure Architecture Center. If you prefer video/audio content, then take a look at the Architecting in the Cloud, One Pattern at a Time series on Cloud With Chris.

by Contributed | Jan 22, 2021 | Technology

Cars Island On Azure

Daniel Krzyczkowski is a Microsoft Azure MVP from Poland. Passionate about Microsoft technologies, Daniel loves learning new things about cloud and mobile development. His main tech interests include Microsoft Azure cloud services, IoT, Azure DevOps and Universal Windows Platform app development. For more, check out his Twitter @dkrzyczkowski and his blog.

CI/CD for Azure Data Factory: Adding a production deployment stage

Craig Porteous is a Principal Consultant with Advancing Analytics and a Microsoft Data Platform MVP. Craig has more than 12 years of experience working with the Microsoft Data Platform, from database administration through analytics and data engineering. Craig founded Scotland’s data community conference, DATA:Scotland, co-organises the Glasgow Data User Group, and enjoys talking data at conferences across the UK and Europe. For more, check out his Twitter @cporteous

Introducing the LogAnalytics.Client NuGet for .NET Core

Tobias Zimmergren is a Microsoft Azure MVP from Sweden. As the Head of Technical Operations at Rencore, Tobias designs and builds distributed cloud solutions. He has been the co-founder and co-host of the Ctrl+Alt+Azure Podcast since 2019, and was co-founder and organizer of the Sweden SharePoint User Group from 2007 to 2017. For more, check out his blog, newsletter, and Twitter @zimmergren

Building micro services through Event Driven Architecture part13: Consume events from Apache KAFKA and project streams into ElasticSearch.

Gora Leye is a Solutions Architect, Technical Expert and Developer based in Paris. He works predominantly in Microsoft stacks: Dotnet, Dotnet Core, Azure, Azure Active Directory/Graph, VSTS, Docker, Kubernetes, and software quality. Gora has a mastery of technical tests (unit tests, integration tests, acceptance tests, and user interface tests). Follow him on Twitter @logcorner.

Teams Real Simple with Pictures: New Calendar View in Lists Web App + Teams

Chris Hoard is a Microsoft Certified Trainer Regional Lead (MCT RL), Educator (MCEd) and Teams MVP. With over 10 years of cloud computing experience, he is currently building an education practice for Vuzion (Tier 2 UK CSP). His focus areas are Microsoft Teams, Microsoft 365 and entry-level Azure. Follow Chris on Twitter at @Microsoft365Pro and check out his blog here.

by Contributed | Jan 22, 2021 | Technology

This article introduces the difference between the control channel and the rendezvous channel, and walks through a simple Python application to illustrate how they work.

Prerequisite: an understanding of Azure Relay https://docs.microsoft.com/en-us/azure/azure-relay/relay-what-is-it

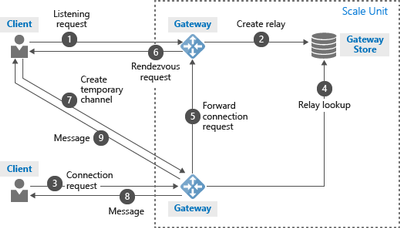

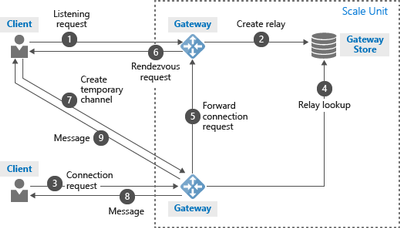

The control channel is a WebSocket that the listener sets up to listen for incoming connections. One hybrid connection allows up to 25 concurrent listeners. The control channel is responsible for steps #1 to #5 in the architecture diagram.

When a sender opens a new connection, the relay service picks one active listener and notifies it over the control channel. That listener then sets up a new WebSocket as the rendezvous channel, and the messages are transferred over this rendezvous channel. The rendezvous channel is responsible for steps #6 to #9 in the architecture diagram.

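Below is a simple Python application that implements the control channel and the rendezvous channel.

- The code below creates a control channel.

```python
from urllib.parse import quote  # Python 3 replacement for urllib.quote
from websocket import WebSocketApp  # websocket-client package

# service_namespace, entity_path, token and the callbacks are defined elsewhere.
wss_uri = 'wss://{0}/$hc/{1}?sb-hc-action=listen'.format(service_namespace, entity_path) + '&sb-hc-token=' + quote(token)
ws = WebSocketApp(wss_uri,
                  on_message=on_message,
                  on_error=on_error,
                  on_close=on_close)
```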

- The following is the control channel’s on_message callback function. When a sender connects, the message on the control channel contains a URL. This is the rendezvous URL on which the new channel must be established.

The callback uses create_connection to open a new WebSocket on this rendezvous URL. Once the rendezvous channel is created, the WebSocket calls recv() to receive messages.

```python
import json
from websocket import create_connection  # websocket-client package

def on_message(ws, message):
    # The control-channel message carries the rendezvous address.
    data = json.loads(message)
    url = data["accept"]["address"]
    # Separate name so the control-channel socket `ws` is not shadowed.
    rendezvous_ws = None
    try:
        rendezvous_ws = create_connection(url)
        connected = rendezvous_ws.connected
        if connected:
            receive_msg = rendezvous_ws.recv()
            # Process message
            ...
            rendezvous_ws.send(message_process_result)
        else:
            pass
    except Exception as ex:
        ...
    finally:
        ...
```

Sometimes the callback function is triggered even when the sender does not send any message. These are control channel notifications. For example, if the relay server waits a long time without getting a response from the listener, it sends a “listener not response immediately” notification to the listener side when the listener recovers.
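Because not every control channel message asks for a rendezvous connection, a callback may want to check the message shape before opening a new WebSocket. The helper below is a minimal sketch of that check. The function name `rendezvous_address` is hypothetical, and it rests on one assumption: that only messages carrying an "accept" object with an "address" field ask for a rendezvous channel, while anything else is treated as a notification.

```python
import json


def rendezvous_address(message):
    """Return the rendezvous URL from a control-channel message, or None.

    Assumption: only messages with an "accept" object ask the listener to
    open a rendezvous channel; anything else is treated as a notification.
    """
    try:
        data = json.loads(message)
    except (TypeError, ValueError):
        # Not JSON at all, so there is nothing to connect to.
        return None
    if not isinstance(data, dict):
        return None
    accept = data.get("accept")
    if isinstance(accept, dict):
        return accept.get("address")
    return None
```

An on_message callback could call this first and only go on to create_connection when the result is not None, logging or ignoring the other messages.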
