by Contributed | Jan 21, 2021 | Technology

This article is contributed. See the original author and article here.

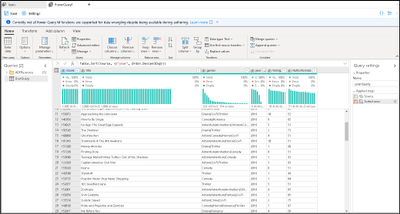

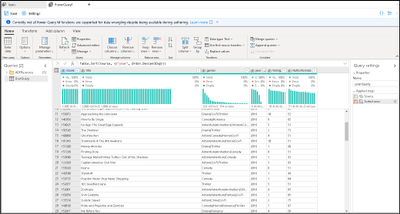

The Azure Data Factory team is excited to announce a new update to the ADF data wrangling feature, currently in public preview. Wrangling in ADF empowers users to build code-free data prep and wrangling at cloud scale using the familiar Power Query data-first interface, natively embedded into ADF. Power Query provides a visual interface for data preparation and is used across many products and services. With Power Query embedded in ADF, you can use the PQ editor to explore and profile data as well as turn your M queries into scaled-out data prep pipeline activities. Data Flows in ADF and Synapse Analytics will now focus on Mapping Data Flows with a logic-first design paradigm, while the Power Query interface will enable the data-first wrangling scenario.

With Power Query in ADF, you now have a powerful tool to use in your ADF ETL projects for data profiling, data prep, and data wrangling. You have immediate feedback from introspection of your Lake and database data with the Power Query M language available for your data exploration. You can then take your resulting mash-up and save it as a first-class ADF object and orchestrate a data pipeline with that same M Power Query executing on Spark.

When you have completed your data exploration, save your work as a Power Query object and then add it as a Power Query activity on the ADF pipeline canvas. With your Power Query activity inside of a pipeline, ADF will execute your M query on Spark so that your activity will automatically scale with your data by leveraging the ADF data flow infrastructure.

In the example above, I added a Power Query activity to my pipeline to clean addresses from my ingested Lake data folders. The activity hands its results off to a Data Flow via ADLS Gen2, where I perform data deduplication, and the ADF pipeline then sends emails when the process completes.

Because you are in the context of an ADF pipeline, you can define destination sinks for your Power Query mash-up so that you can persist the results of your transformations to data stores such as ADLS Gen2 storage or Synapse Analytics SQL pools. Leverage the power of ADF to define source and destination mappings, database table settings, file and folder options, and other important data pipeline properties that data engineers need when building scalable data pipelines in ADF.

Click here to learn more about Azure Data Factory and the power of data wrangling at cloud scale with the new updated Power Query public preview feature in ADF.

Update: Thursday, 21 January 2021 22:59 UTC

The root cause has been isolated to a backend component scale issue that was impacting customers with workspace-enabled Application Insights resources in the West US 2 and West US regions. To address this issue, we are investigating scaling options in the backend components.

- Work Around: None

- Next Update: Before 01/22 01:00 UTC

-Jeff

Initial Update: Thursday, 21 January 2021 22:33 UTC

We are aware of issues within Application Insights and are actively investigating. Some customers may experience delayed or missed Log Search Alerts, latency, and data loss.

- Next Update: Before 01/22 01:00 UTC

We are working hard to resolve this issue and apologize for any inconvenience.

-Jeff

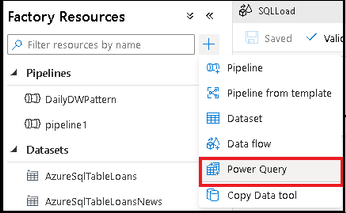

Continuing on our path to release the new EAC, we wanted to tell you that the Groups feature is now available. The experience is modern, improved, and fast. Administrators can now create and manage all 4 types of groups (Microsoft 365 group, Distribution list, Mail-enabled Security group and Dynamic distribution list) from the new portal.

We wanted to call out some improvements in Groups experience in the new EAC when compared with classic EAC.

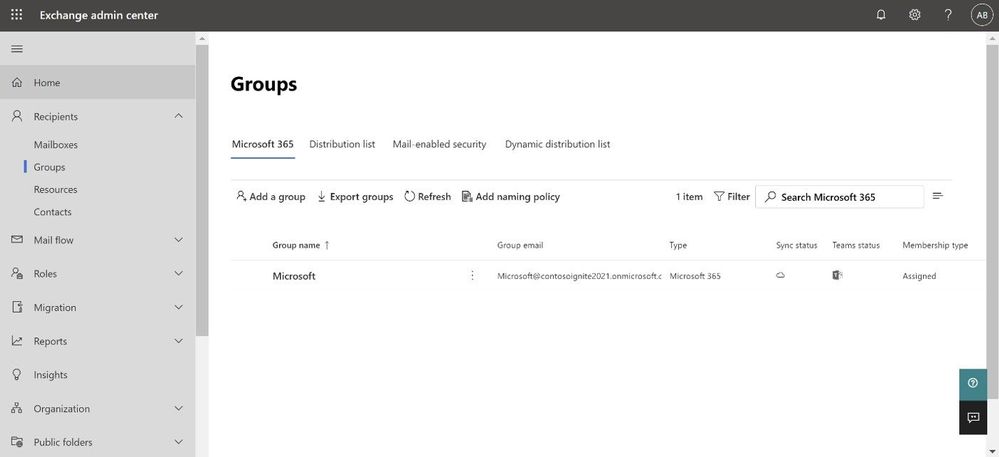

Ease of discoverability: With a new tabbed design approach, groups are now separated out into 4 different pivots. Admins no longer need to use filter or sort functions to find group types. You can click on the pivot of the group type you want to manage, and all such groups will be populated in that view.

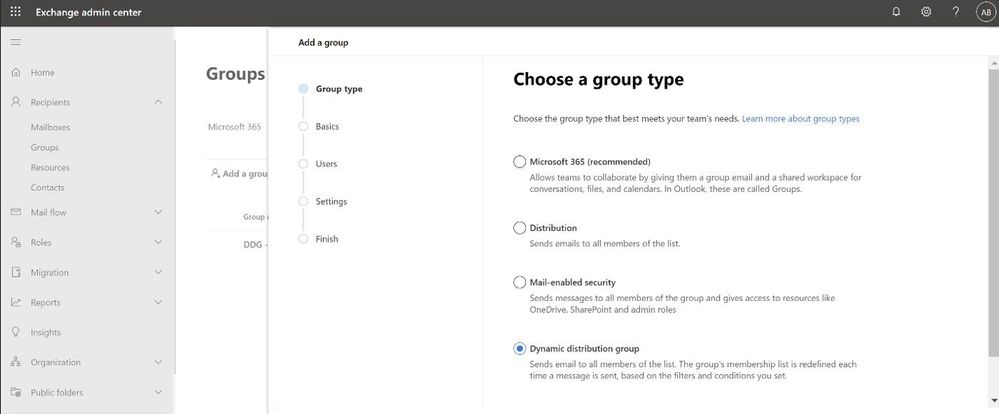

More controls during group creation: The new group creation wizard (the flyout panel that opens from the right) guides you through creation and is consistent with the other parts of the portal. Creating a group no longer involves pop-ups, and you do not have to leave the page that you are on. You simply click on the Add a group button and a guided flyout opens to assist you with group creation.

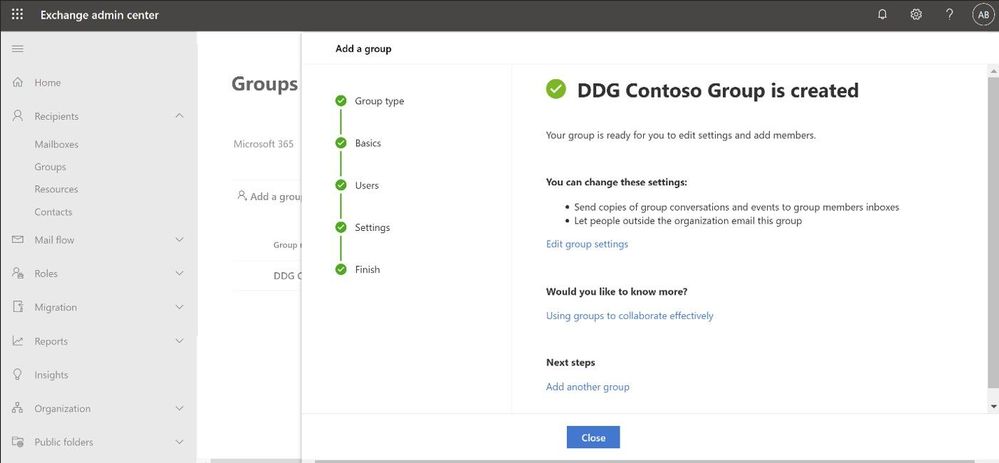

Performance: The new design is faster. It takes very little time for you to see the groups that are already created. After you create a new group, you can also edit the group settings directly from the completion page (see below).

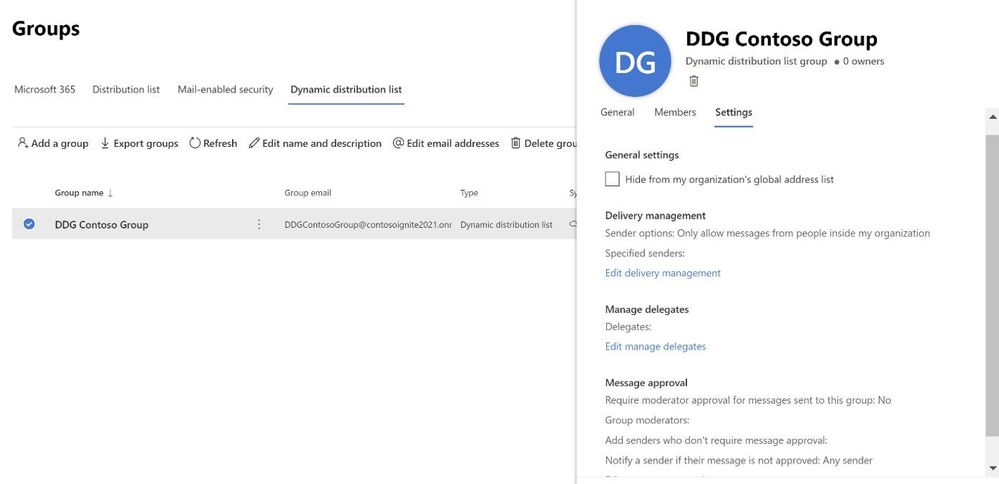

Settings management

All group settings including general settings, delivery management, manage delegates, message approval, etc. are also now available in the new EAC.

Happy groups management!

If you have feedback on the new EAC, please use the Give feedback floating button and let us know what you think.

If you share your email address with us while providing feedback, we will try to reach out to you to get more information, if needed.

Learn more

To learn more, check the updated documentation. To experience groups in the new EAC, click here (this will take you to the new EAC).

The Exchange Online Admin Team

I was recently troubleshooting an issue with using Bot Composer 1.2 to create and publish a bot on the Azure Government cloud. Even after performing all the steps correctly, we ended up getting a 401 Unauthorized error when the bot was tested in web chat.

The following link lists what needs to be added to a bot to make it work on the Azure Government cloud.

https://docs.microsoft.com/en-us/azure/bot-service/bot-service-resources-faq-ecosystem?view=azure-bot-service-4.0#how-do-i-create-a-bot-that-uses-the-us-government-data-center

To isolate the problem further, we deployed the exact same bot from Composer to a regular App Service (not an Azure Government one), and everything worked smoothly there. This told us that the issue only happens when the bot is deployed to the Azure Government cloud.

We eventually figured out the following steps that are needed to make it work properly on the Azure Government cloud.

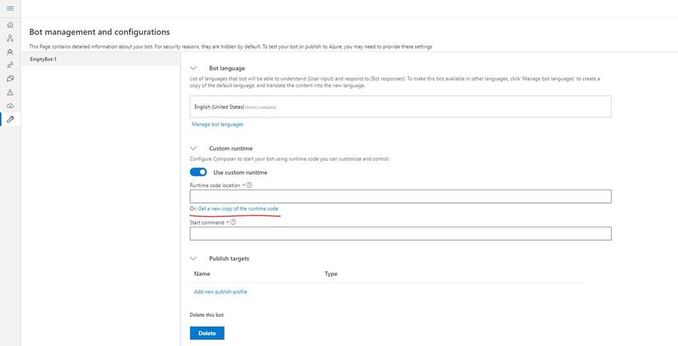

Note: This requires a code change, and to make that change we have to eject the runtime; otherwise Composer will never be able to incorporate it.

Create the bot using Composer.

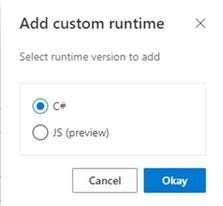

Click on “Project Settings”, make sure “Custom Runtime” is selected, and then click on “get a new copy of runtime code”.

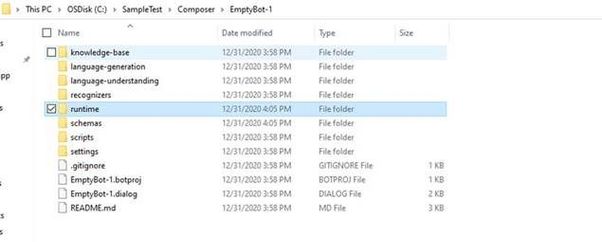

Once you do that, a folder called “runtime” will be created in the project location for this Composer bot.

In the runtime folder, go to the azurewebapp folder, open the .csproj file in Visual Studio, and make the following changes (they are required for the Azure Government cloud):

- Add the line below in Startup.cs:

services.AddSingleton<IChannelProvider, ConfigurationChannelProvider>();

- Modify BotFrameworkHttpAdapter creation to take channelProvider in startup.cs as follows.

var adapter = IsSkill(settings) ? new BotFrameworkHttpAdapter(new ConfigurationCredentialProvider(this.Configuration), s.GetService<AuthenticationConfiguration>(), channelProvider: s.GetService<IChannelProvider>()) : new BotFrameworkHttpAdapter(new ConfigurationCredentialProvider(this.Configuration), channelProvider: s.GetService<IChannelProvider>());

- Add the following setting to your appsettings.json (this can also be added from Composer):

"ChannelService": "https://botframework.azure.us"

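The appsettings.json change above can also be scripted. The following is a minimal Python sketch, not part of the original steps: the helper name `set_channel_service` is hypothetical, and it assumes the settings file is strict JSON (no comments) with "ChannelService" as a top-level key, as shown above.

```python
import json
from pathlib import Path

# Value taken from the article; the helper name and defaults are assumptions.
GOV_CHANNEL_SERVICE = "https://botframework.azure.us"


def set_channel_service(settings_path, value=GOV_CHANNEL_SERVICE):
    """Add or overwrite the top-level "ChannelService" key in a JSON settings file."""
    path = Path(settings_path)
    if path.exists():
        # utf-8-sig tolerates the byte order mark that some editors write.
        settings = json.loads(path.read_text(encoding="utf-8-sig"))
    else:
        # Start from an empty object if the file does not exist yet.
        settings = {}
    settings["ChannelService"] = value
    path.write_text(json.dumps(settings, indent=2) + "\n", encoding="utf-8")
    return settings
```

Calling `set_channel_service("appsettings.json")` from the azurewebapp folder would leave every other key untouched and only add or update the one setting.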
Build the ejected web app by running the following command from the runtime\azurewebapp folder of the bot project:

dotnet build

Now we need to rebuild the schema by running the provided script from PowerShell in the schemas folder of the bot project:

.\update-schema.ps1 -runtime azurewebapp

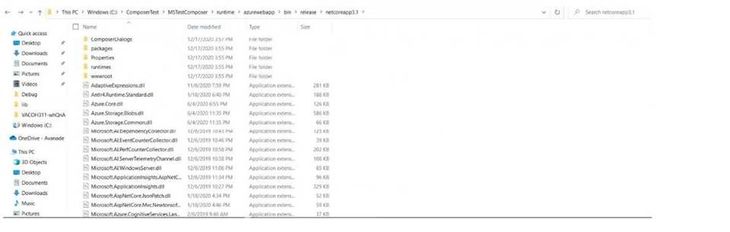

Next, we need to publish this bot to Azure App Service using the az webapp deployment command. First, create a zip of the contents of the “.netcoreapp3.1” folder (not the folder itself), which is inside runtime/azurewebapp/bin/release.

Then run the command as follows:

az webapp deployment source config-zip --resource-group "resource group name" --name "app service name" --src "the zip file you created"

This will publish the bot correctly to Azure and you can go ahead and do a test in web chat.
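The zip step is easy to get wrong, because the archive must contain the contents of the build output folder rather than the folder itself. Below is a minimal Python sketch of that step. The function names are hypothetical, and `build_deploy_command` only assembles the az CLI arguments shown above without running them.

```python
import zipfile
from pathlib import Path


def zip_folder_contents(source_dir, zip_path):
    """Zip everything inside source_dir, storing paths relative to it."""
    source = Path(source_dir)
    target = Path(zip_path).resolve()
    with zipfile.ZipFile(target, "w", zipfile.ZIP_DEFLATED) as archive:
        for item in sorted(source.rglob("*")):
            # Skip directories, and the archive itself if it lives in source_dir.
            if item.is_file() and item.resolve() != target:
                # A relative arcname keeps the parent folder out of the archive.
                archive.write(item, arcname=item.relative_to(source).as_posix())
    return target


def build_deploy_command(resource_group, app_name, zip_path):
    """Return the az CLI arguments from the article as a list (not executed)."""
    return [
        "az", "webapp", "deployment", "source", "config-zip",
        "--resource-group", resource_group,
        "--name", app_name,
        "--src", str(zip_path),
    ]
```

The returned list could be passed to `subprocess.run` once you are signed in with the Azure CLI; that part is left out here because it depends on your environment.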

In short, we had to make a few code changes to get things working in the Azure Government cloud. To make those changes to a bot created via Composer, we need to eject the runtime, make the code changes, build, update the schema, and finally publish.

References :

Pre-requisites for Bot on Azure government cloud : Bot Framework Frequently Asked Questions Ecosystem – Bot Service | Microsoft Docs

Exporting runtime in Composer : Customize actions – Bot Composer | Microsoft Docs

As you’re probably aware, Microsoft is in the process of updating Azure services to use TLS certificates from a different set of root certificate authorities (root CAs). Azure TLS Certificate Changes provides details about these updates. Some of these changes affect Azure Sphere, but in most cases no action is required for Azure Sphere customers.

This post provides a primer about the Azure Sphere certificate “landscape”: the types of certificates that the various Azure Sphere components use, where they come from, where they’re stored, how they’re updated, and how to access them when necessary. It also describes how the Azure Sphere OS, SDK, and services make certificate management easier for you. We assume you have a basic familiarity with certificate authorities and the chain of trust. If this is all new to you, we suggest starting with Certificate authority – Wikipedia or other internet sources.

Azure Sphere Devices

Every Azure Sphere device relies on the Trusted Root Store, which is part of the Azure Sphere OS. The Trusted Root Store contains a list of root certificates that are used to validate the identity of the Azure Sphere Security Service when the device connects for device authentication and attestation (DAA), over-the-air (OTA) update, or error reporting. These certificates are provided with the OS.

When daily attestation succeeds, the device receives two certificates: an update certificate and a customer certificate. The update certificate enables the device to connect to the Azure Sphere Update Service to get software updates and to upload error reports; it is not accessible to applications or through the command line. The customer certificate, sometimes called the DAA certificate, can be used by applications to connect to third-party services that use transport layer security (TLS), for example through a library such as wolfSSL. This certificate is valid for about 25 hours. Applications can retrieve it programmatically by calling the DeviceAuth_GetCertificatePath function.

Devices that connect to Azure-based services such as Azure IoT Hub, IoT Central, and IoT Edge must present their Azure Sphere tenant CA certificate to authenticate their Azure Sphere tenant. The azsphere ca-certificate download command in the CLI returns the tenant CA certificate for such uses.

EAP-TLS network connections

Devices that connect to an EAP-TLS network need certificates to authenticate with the network’s RADIUS server. To authenticate as a client, the device must pass a client certificate to the RADIUS server. To perform mutual authentication, the device must also have a root CA certificate for the RADIUS server so that it can authenticate the server. Microsoft does not supply either of these certificates; you or your network administrator is responsible for ascertaining the correct certificate authority for your network’s RADIUS server and then acquiring the necessary certificates from the issuer.

To obtain the certificates for the RADIUS server, you’ll need to authenticate to the certificate authority. You can use the DAA certificate, as previously mentioned, for this purpose. After acquiring the certificates for the RADIUS server, you should store them in the device certificate store. The device certificate store is available only for use in authenticating to a secured network with EAP-TLS. (The DAA certificate is not kept in the device certificate store; it is kept securely in the OS.) The azsphere device certificate command in the CLI lets you manage the certificate store from the command line. Azure Sphere applications can use the CertStore API to store, retrieve, and manage certificates in the device certificate store. The CertStore API also includes functions to return information about individual certificates, so that apps can prepare for certificate expiration and renewal.
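Preparing for expiration comes down to comparing a certificate's not-after time with the current time and renewing with some margin to spare. The Python sketch below illustrates only that decision logic. It is not Azure Sphere code (the CertStore API is a C API), and the function name and the 30-day default margin are assumptions made for illustration.

```python
from datetime import datetime, timedelta, timezone


def needs_renewal(not_after, now=None, margin=timedelta(days=30)):
    """Return True when the certificate expires within `margin` of `now`.

    `not_after` and `now` must be timezone-aware datetimes.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    # Remaining lifetime at or below the margin means it is time to renew.
    return not_after - now <= margin
```

A device application would read the not-after date from its certificate store, run a check like this periodically, and start acquiring a replacement certificate as soon as the check returns true.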

See Use EAP-TLS in the online documentation for a full description of the certificates used in EAP-TLS networking, and see Secure enterprise Wi-Fi access: EAP-TLS on Azure Sphere on Microsoft Tech Community for additional information.

Azure Sphere Applications

Azure Sphere applications need certificates to authenticate to web services and some networks. Depending on the requirements of the service or endpoint, an app may use either the DAA certificate or a certificate from an external certificate authority.

Apps that connect to a third-party service using wolfSSL or a similar library can call the DeviceAuth_GetCertificatePath function to get the DAA certificate for authentication. This function was introduced in the deviceauth.h header in the 20.10 SDK.

The Azure IoT library that is built into Azure Sphere already trusts the necessary Root CA, so apps that use this library to access Azure IoT services (IoT Hub, IoT Central, DPS) do not require any additional certificates.

If your apps use other Azure services, check with the documentation for those services to determine which certificates are required.

Azure Sphere Public API

The Azure Sphere Public API (PAPI) communicates with the Azure Sphere Security Service to request and retrieve information about deployed devices. The Security Service uses a TLS certificate to authenticate such connections. This means that any code or scripts that use the Public API, along with any other Security Service clients such as the Azure Sphere SDK (including both the v1 and v2 azsphere CLI), must trust this certificate to be able to connect to the Security Service. The SDK uses the certificates in the host machine’s system certificate store for Azure Sphere Security Service validation, as do many Public API applications.

On October 13, 2020, the Security Service updated its Public API TLS certificate to one issued from the DigiCert Global Root G2 certificate. Both Windows and Linux systems include the DigiCert Global Root G2 certificate, so the required certificate is readily available. As we described in an earlier blog post, only customer scenarios that involved subject, name, or issuer (SNI) pinning required changes to accommodate this update.
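If you want to confirm that a host used for Public API scripts trusts this root, one option is to inspect the certificates that Python's standard `ssl` module loads by default. This is a rough sketch with hypothetical helper names. Note that `get_ca_certs()` does not list certificates from a capath directory until they have been used, so a negative result is not conclusive.

```python
import ssl

ROOT_NAME = "DigiCert Global Root G2"  # root name taken from the article


def common_names(ca_certs):
    """Collect subject commonName values from SSLContext.get_ca_certs() output."""
    names = set()
    for cert in ca_certs:
        # 'subject' is a tuple of RDNs, each a tuple of (key, value) pairs.
        for rdn in cert.get("subject", ()):
            for key, value in rdn:
                if key == "commonName":
                    names.add(value)
    return names


def store_has_root(ca_certs, name=ROOT_NAME):
    """Return True if a certificate with the given common name is present."""
    return name in common_names(ca_certs)


def check_default_store():
    """Check the default trust store that Python loads on this machine."""
    context = ssl.create_default_context()
    return store_has_root(context.get_ca_certs())
```

Matching on the common name alone is a convenience check; a stricter script would compare the certificate fingerprint published by the issuer.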

Azure Sphere Security Service

Azure Sphere cloud services in general, and the Security Service in particular, manage numerous certificates that are used in secure service-to-service communication. Most of these certificates are internal to the services and their clients, so Microsoft coordinates updates as required. For example, in addition to updating the Public API TLS certificate in October, the Azure Sphere Security Service also updated its TLS certificates for the DAA service and Update service. Prior to the update, devices received an OTA Update to the Trusted Root Store which included the new required root certificate. No customer action was necessary to maintain device communication with the Security Service.

How does Azure Sphere make certificate changes easier for customers?

Certificate expiration is a common cause of failure for IoT devices, and one that Azure Sphere can prevent.

Because the Azure Sphere product includes both the OS and the Security Service, the certificates used by both these components are managed by Microsoft. Devices receive updated certificates through the DAA process, OS and application updates, and error reporting without requiring changes in applications. When Microsoft added the DigiCert Global Root G2 certificate, no customer changes were required to continue DAA, updates, or error reporting. Devices that were offline at the time of the update received the update as soon as they reconnected to the internet.

The Azure Sphere OS also includes the Azure IoT library, so if Microsoft makes further changes to certificates that the Azure IoT libraries use, we will update the library in the OS so that your applications won’t need to be changed. We’ll also let you know through additional blog posts about any edge cases or special circumstances that might require modifications to your apps or scripts.

Both of these cases show how Azure Sphere simplifies application management by removing the need for maintenance updates of applications to handle certificate changes. Because every device receives an update certificate as part of its daily attestation, you can easily manage the update of any locally-managed certificates your devices and applications use. For example, if your application validates the identity of your line-of-business server (as it should), you can deploy an updated application image package that includes updated certificates. The application update service provided by the Azure Sphere platform delivers those updates, removing the worry that the update service itself will incur a certificate expiry issue.
