by Contributed | Jan 21, 2021 | Technology

This article is contributed. See the original author and article here.

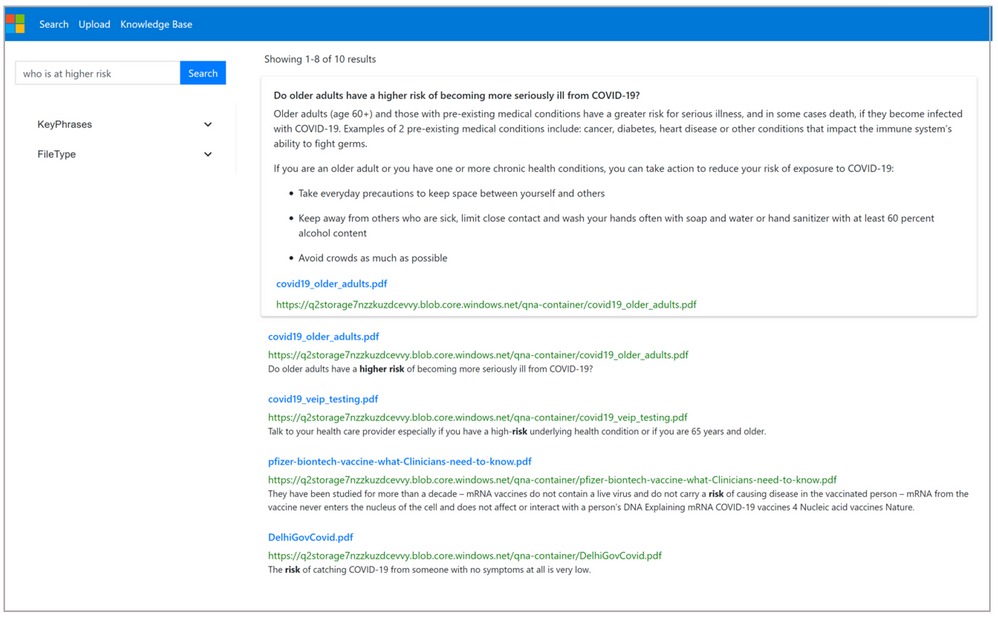

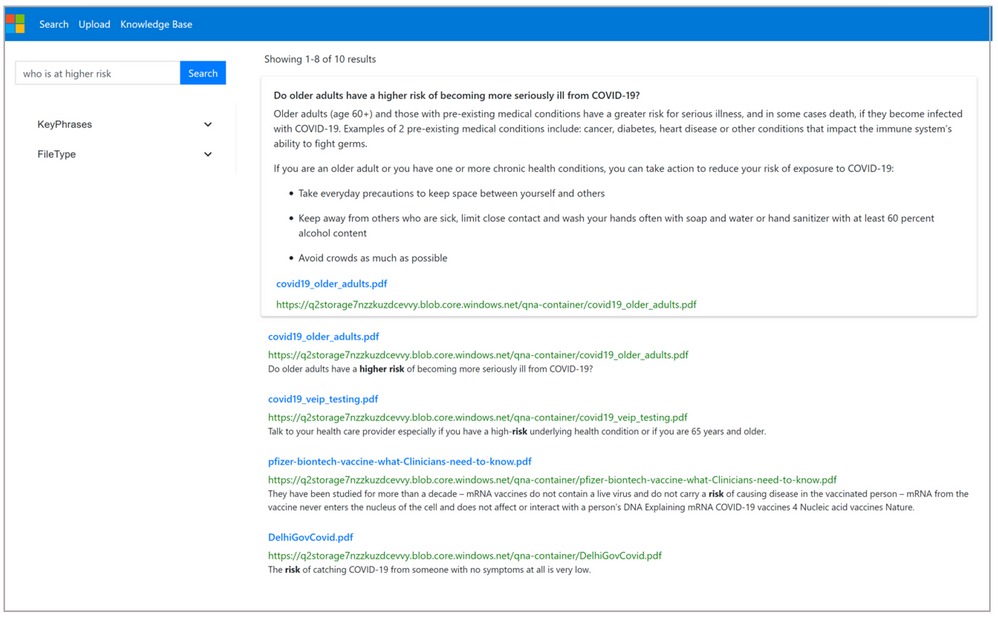

QnA + Azure Cognitive Search enables instant answers over your search results. Now, you do not need to spend time looking through your pile of documents to find the exact answer to your query. There will be an instant answer coming up for the user query from the most relevant documents present in your system. A solution where you can ingest your pile of documents and query over them to get the answer as well as related relevant documents to get more information.

Benefits:

- Converged search experience powering instant answer and relevant documents.

- Search using natural language queries.

- One-click deployment.

- Saves end user time during search.

- Flexibility to enhance and edit instant answers.

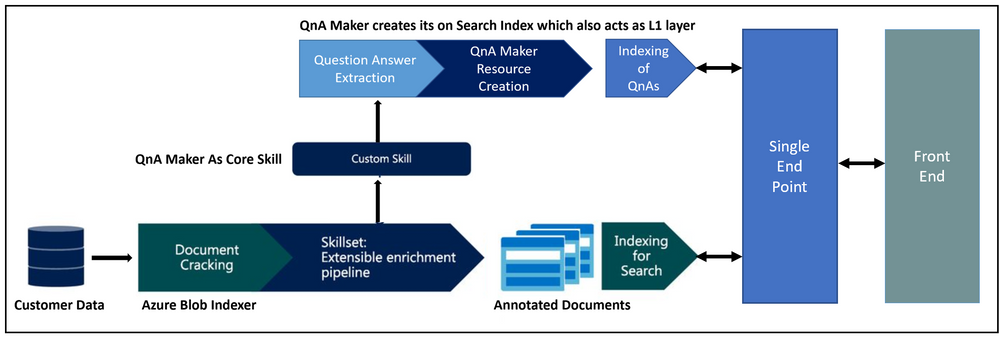

The solution combines the power of both Azure Cognitive Search and QnA Maker to extract question-answer pairs from your documents before storing them in the index. Once you deploy the solution, you get a single endpoint where for each end user query both the services will be called parallelly and you will get a combined result with an instant answer powered by QnA Maker along with the relevant documents coming from Azure Cognitive Search.

Architecture:

This solution accelerator contains the following artifacts:

- ARM template to set up the solution.

- Custom skill in Azure Cognitive Search, which ingests the data into QnA Maker.

- User interface to view the results.

Live Demo Link:

You can view a live demo of this repo at the following link: https://aka.ms/qnaWithAzureSearchDemo

File Type Supported:

Currently instant answers will only be available for the file types supported by QnA Maker. By default, the logic in the Azure Cognitive Search service indexer also ingests only the following file types: .pdf,.docx,.doc,.xlsx,.xls,.html,.rtf,.txt,.tsv. You can change this by modifying the indexedFileNameExtensions property in the Indexer.json.

Tutorial:

NOTE: You need to have a GitHub account to try out this solution.

Resource creation and deployment:

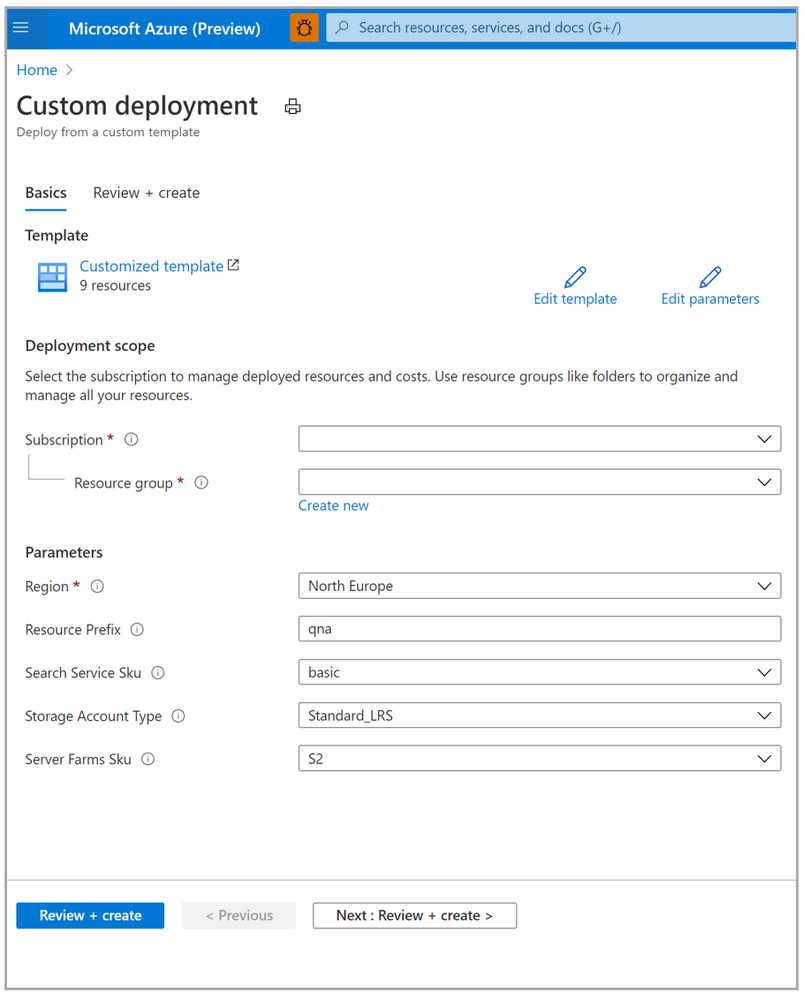

- Click here to Deploy to Azure.

- This will take you to the create blade where all the information will be pre-filled, as shown below. Verify all the information and click Review+ Create button to proceed.

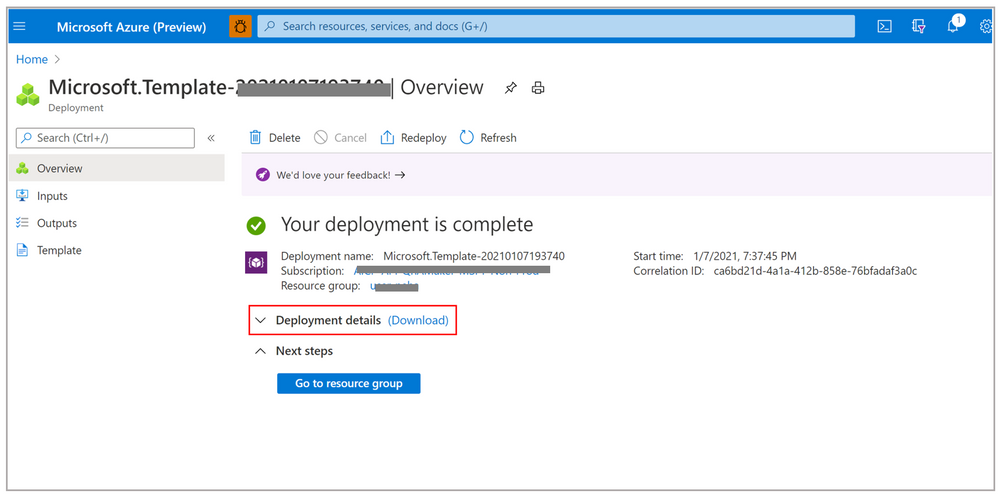

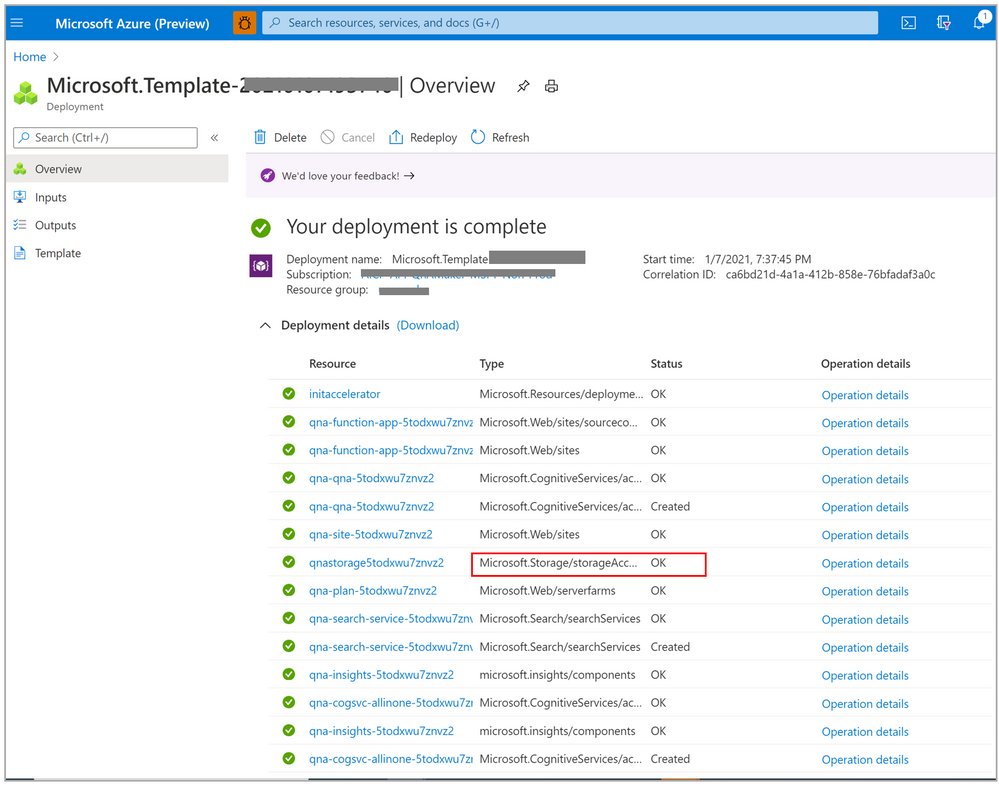

- Your deployment process will take 4-5 minutes to complete. Once completed you will land up on the following page:

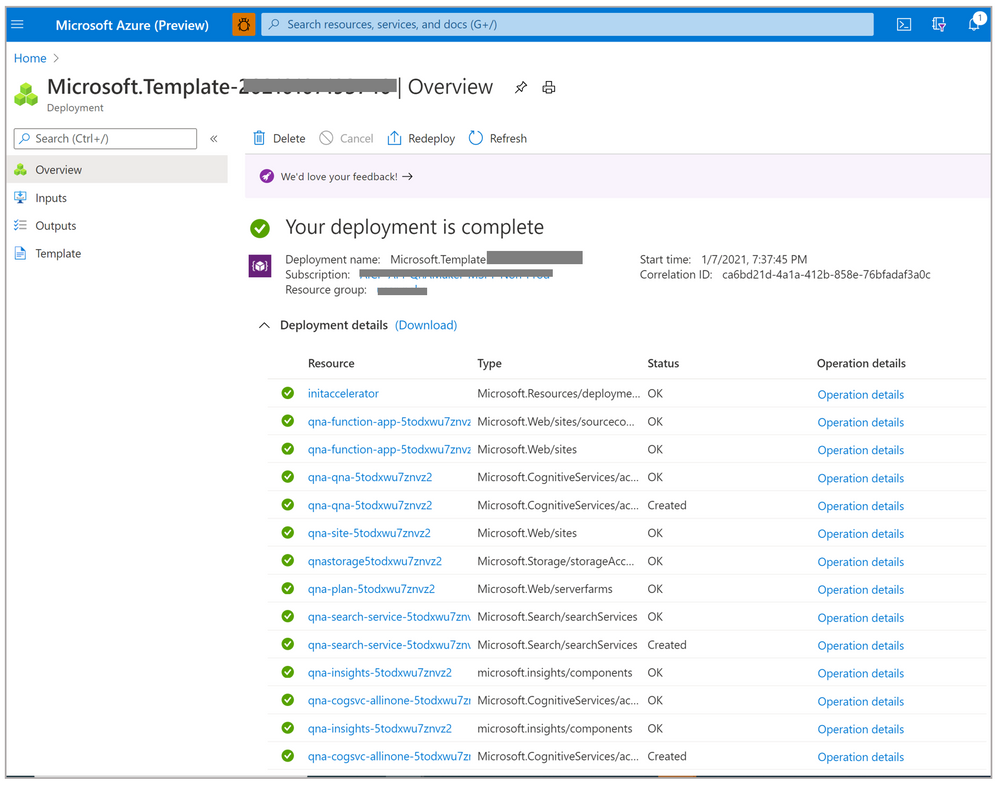

- Click on Deployment details to check all the resources that have been created.

Initialization:

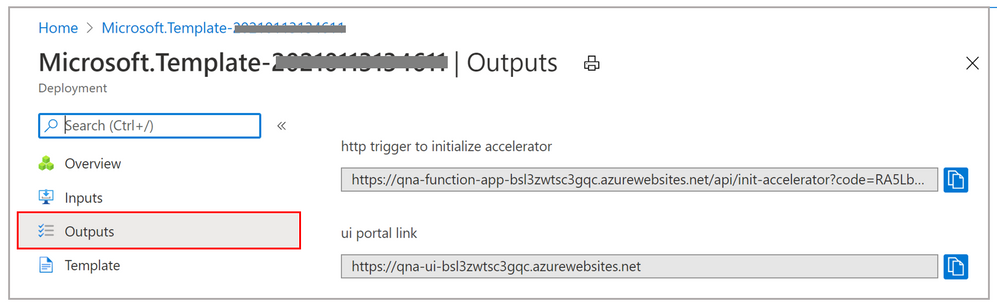

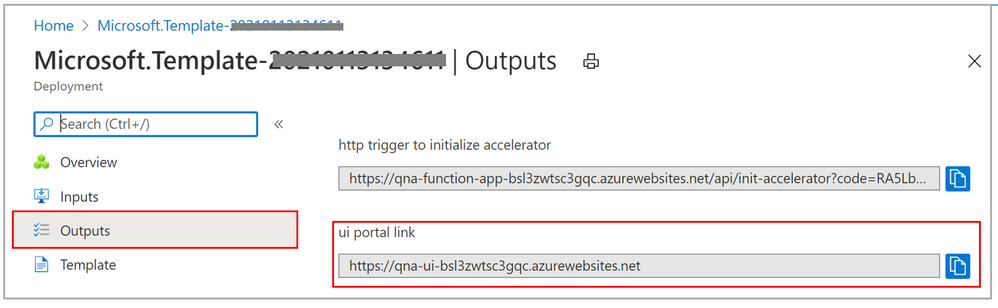

- To initialize the solution, click on the “Outputs” button on the left. Copy the “http trigger to initialize accelerator” value. Open a new browser tab and paste this URL into the browser. This will run for about a minute, and then you’ll see a message indicating success or failure.

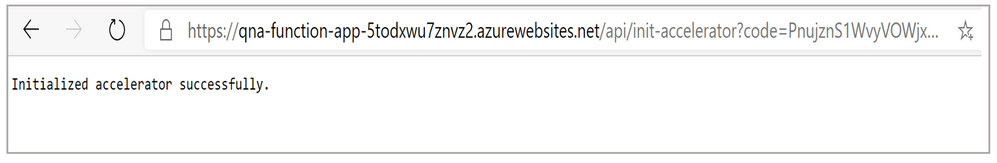

- If the initialization is successful, then following message will appear:

- Once the resources are initialized, you can access the portal through the “UI portal link” value in the Output tab.

Upload Documents:

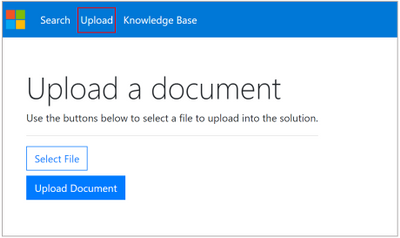

- You can upload the documents one by one through the UI portal, by going to the Upload tab.

- You can also upload the documents in bulk, through a container.

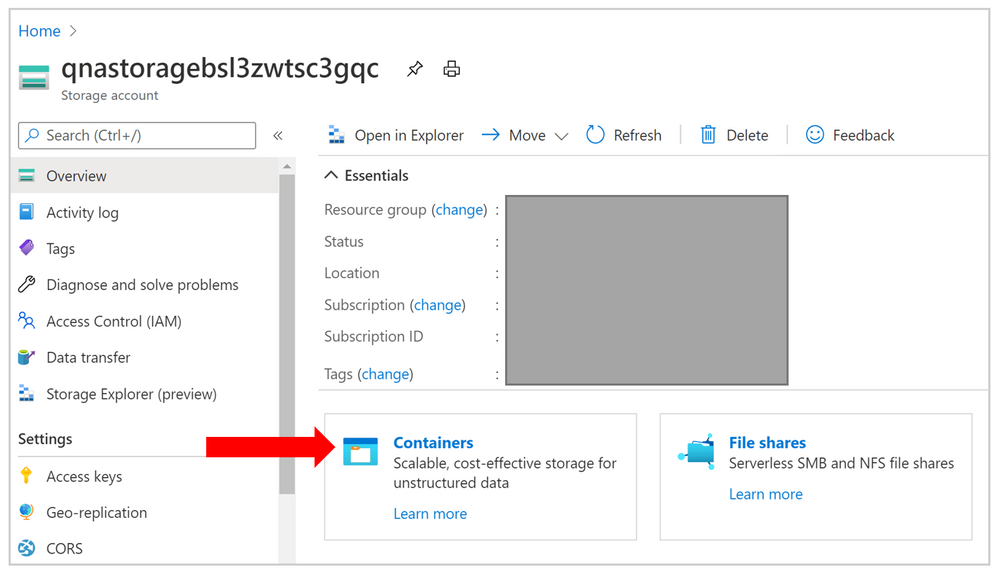

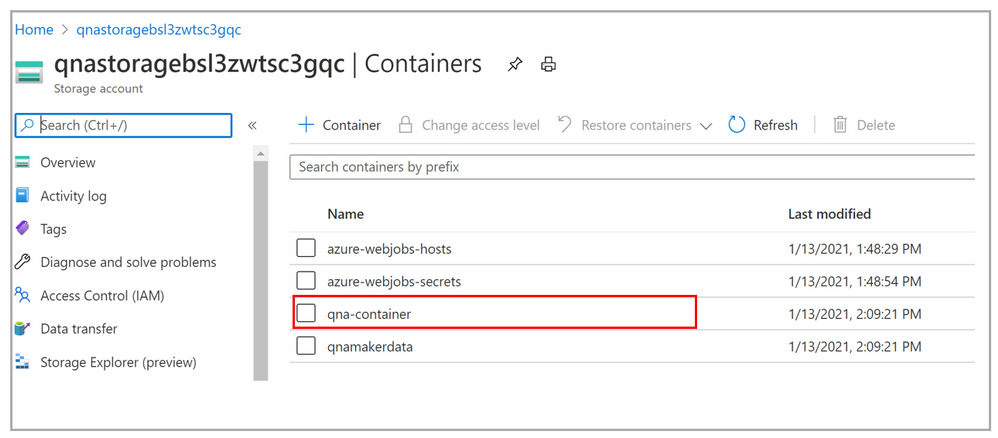

- Go to your storage account.

- Click on Containers and select qna-container to upload the documents in bulk.

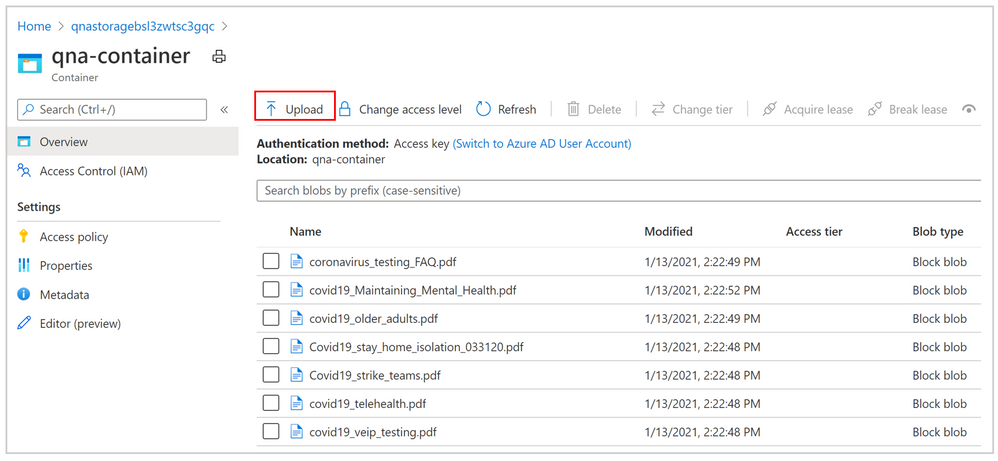

- Use the Upload tab and select the multiple files you want to ingest. It will take some time to index the documents and to extract the Question Answer pairs out of the documents.

Question Answer Enhancement:

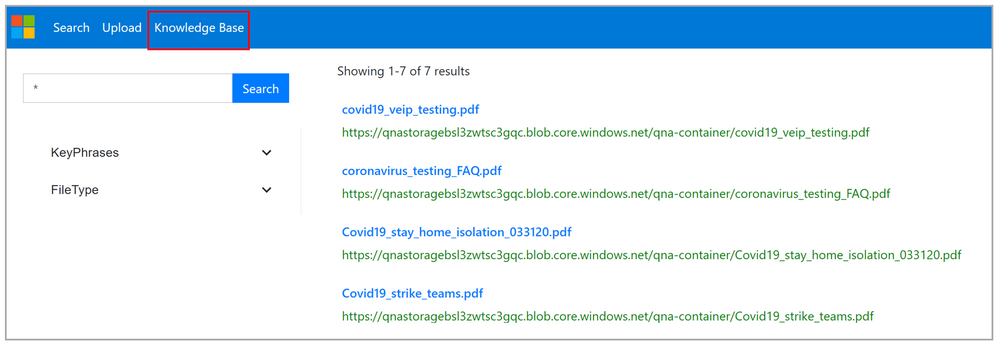

- Once the ingestion is complete, you can view all the Question Answer pairs extracted from the documents by clicking on “Knowledge Base”.

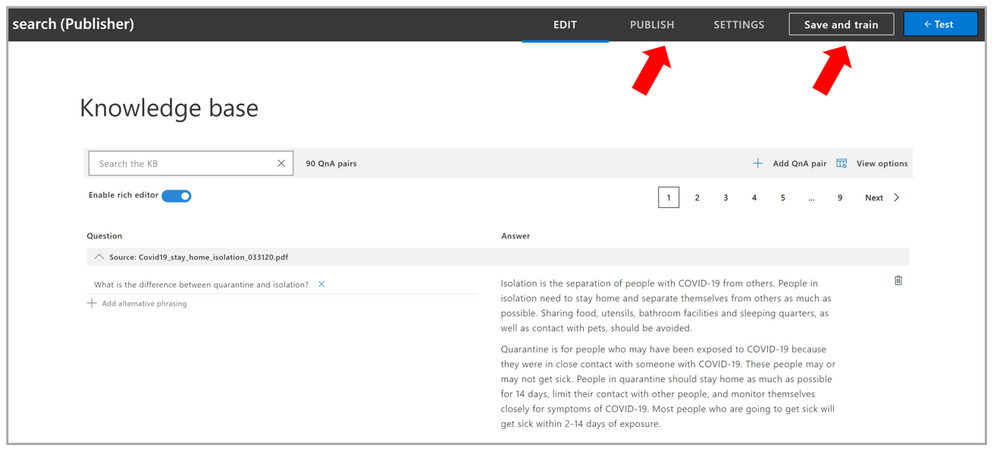

- Play with your knowledge base!, You can also test for different queries using the Test Pane. Once you are satisfied with the experience, click on “Save and Train” and then “Publish” the changes to get these changes reflected on your main portal.

Links:

by Contributed | Jan 20, 2021 | Technology

This article is contributed. See the original author and article here.

If you are a frequent user of Azure CLI or Azure PowerShell, then chances are that you have experienced one of the AI-powered features our service provides for the Azure command line tools. These features include generating up-to-date command usage examples with each new Azure CLI and Azure PowerShell releases to ensure the documentation is always up to date (“–help” or “-h” in the command line), enabling natural language search of a command in the Azure CLI (az find), assisting with failure recovery, and the recently released AZ Predictor (for Azure PowerShell). Our AI service for Azure tools serves thousands of user requests per second. In order to handle such high scale application workloads we use an Azure App Service tier called App Service Environments (ASE). While ensuring our Azure App Service is scalable and performant, we had to address several key challenges like being able to stress test the service with a high volume of traffic, running the service in fully isolated environments without affecting other Azure subscriptions, and throttling bad actors beyond reasonable levels of requests. We will share our journey of discovery, surprises, and learnings that led us to arrive at an enterprise-grade, cloud-scale AI service that powers our own client tools.

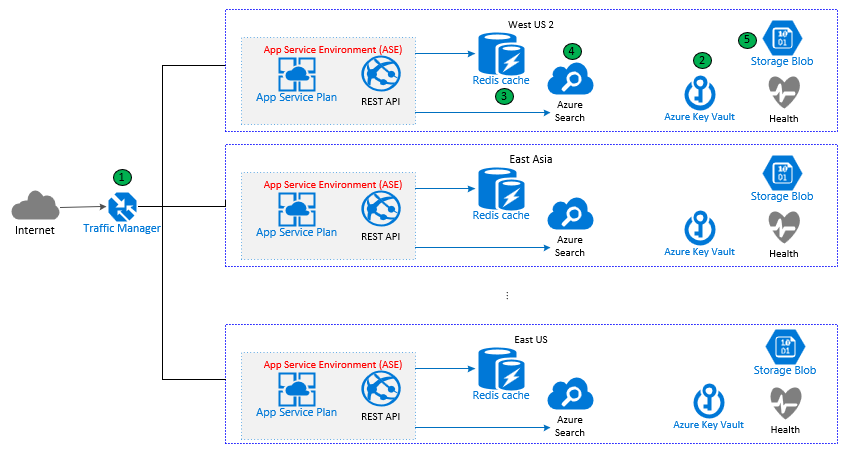

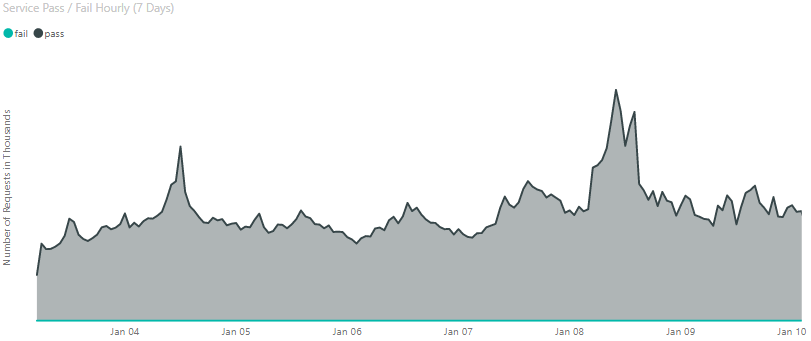

Architecture

When a user interacts with one of our integrations in Azure CLI or Azure PowerShell, a request is made to our App Service. To fulfil the request, the App Service gets the result from the relevant AI model. Note that we use different resources for different types of models. For example, the model that generates examples when a user tries to find a command is powered by Azure Search with natural language search features. The failure recovery and AZ Predictor models are more suited to be stored in Azure Storage and loaded into memory to achieve high performance.

Considering the geo-diversity of our users and the high volume of traffic, the service runs in multiple regions to achieve quick response time and high availability. Each request is routed to the nearest region by Azure Traffic Manager (1). In each region, the REST API might talk to:

- Azure Key Vault (2) to retrieve secrets.

- Azure Redis Cache (3) to get or set cached response for improved performance.

- Azure Search (4) to get some of the model results when they are not cached.

- Azure Storage (5) to load some of the models into memory at service startup or when models need to be refreshed.

Figure 1: App Service Architecture

Extensibility and Scalability

As we continue to enable more features in the service, it is important to design the App Service in a scalable way. We develop common libraries for accessing Azure resources like Azure Key Vault, Azure Search, and Azure Redis Cache. Those libraries can even be shared with other services as well.

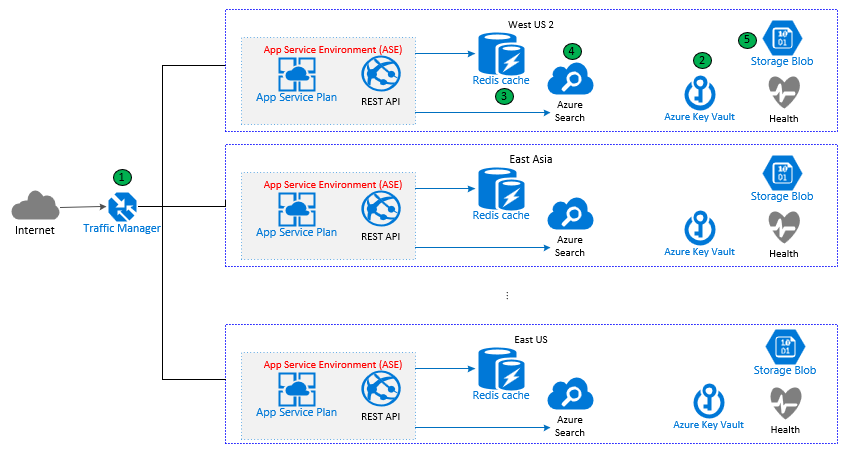

Scalability and performance are critical for the service. A user typing on the command line typically expects a response in less than a second. This requires the service to respond to requests quickly, in the order of milliseconds. We receive thousands of requests per second (RPS), so we need to make sure the service scales fast enough to meet our changing demand. For example, we might experience a peak in the usage pattern when a new version of the Azure CLI comes out. To configure the scaling properly, we started by analyzing Azure CLI usage patterns to understand the expected traffic trends. Figure 2 shows the hourly traffic for the service in the order of 1000s.

Figure 2: Service Hourly Traffic

Based on the extremes from the usage data, the RPS estimation we used for stress testing was in the order of several 1000s of requests. Note that the estimation we used to stress test is higher than the traffic showed in this figure. This is in part because not all Azure CLI or Azure PowerShell users have upgraded to versions with our service integrations. We decided to play it safe with stress testing and prepare for the maximum number of requests we might receive plus some additional margin.

As it turned out, being able to stress test the service with thousands of requests per second was a huge challenge.

Stress Test Journey

We explored a variety of stress testing tools including loader.io and JMeter. At the end we decided to use JMeter because it offers a few key features: the ability to control requests per second, it is fully supported in Azure DevOps, and it is actively maintained. However, using JMeter involved its own challenges:

- RPS limit from one machine. We quickly found that because of the amount of TCP connections created by our JMeter script, we could only send around 1,000 RPS from one machine.

- Configuration needed to maintain multiple VMs in VMSS. Using multiple machines was necessary to overcome the above RPS limit from one machine.

We then considered running Azure Container Instance (ACI) to overcome these limitations. ACI is Azure CLI-friendly and we found it was quite easy to prepare and run a container in Azure. We were able to easily and quickly launch our images in multiple ACIs to reach our target RPS without the pain of having to individually configure and maintain multiple VMs.

App Service Environments

This led us to an unexpected challenge. Hitting our service with 1000s of RPS brought down our service, because it was overloading the load balancing front ends for that stamp. A stamp is a cluster of servers. You can think of it as groups of racks with shared resources like traffic routing within an Azure Data Center. To avoid this issue, we moved to App Service Environments (ASE) for our infrastructure. ASE provides a fully-isolated and dedicated environment for securely running App Services on a high scale. This includes isolated load balancing front ends for network routing.

With the right architecture in place, we were able to scale both horizontally and vertically. Horizontal scaling is done by increasing the number of routing front ends and App instances in proportion to actual traffic. Vertical scaling is done through choosing the right tiers for Azure Redis Cache, Azure Search Service, and App Service plan.

Throttling

Bad actors and broken scripts could bombard the service beyond reasonable levels of requests. Instead of allowing these clients to potentially bring the service down, we would ideally start blocking individual bad actors. The service implements both Client- and IP-based rate limiting. Having both these options allow us to be flexible, minimize slowdowns, and keeps the service running even when hit by bad actors.

Reliability

As shown in the architecture diagram, we deploy the service to multiple geographic regions which are aligned with the distribution of Azure CLI users to make sure every user’s request can be served from a deployment with low latency. This helps us ensure we meet our service health targets. Our reliability goal is that 99.99% of requests in the preceding 5 minutes were successful. Our latency goal is that for the requests in the preceding 15 minutes are served in under 700 milliseconds for the 99.9th percentile.

All traffic for the services is routed by Azure Traffic Manager. When Traffic Manager detects that a region is unhealthy (which is checked via a monitor API defined in the service) the traffic is then routed to the next closest region.

Deployment

We use Azure DevOps and Azure Resource Manager templates (ARM) to deploy the service. Deployment is first tested in Dev and PPE (pre-production environments) environments. An interesting learning is that the first ASE deployment takes 1.5 – 2 hours, though subsequent deployments are much shorter.

Our release pipeline follows the ring-based deployment process with the goal of being able to limit failures to the minimum number of customers.

Monitoring

Monitoring is important to make sure we know right away when something goes wrong. This is essential for us to meet our reliability and availability requirements. The service logs various metrics which are then used in a variety of alerts to the team: when an API call fails, when the App Service performance is degraded, when some resource in a region fails, when a region goes down etc. The metrics data is processed in near-real-time alerts (60s ingestion latency).

Help us make the tools that you love and use every day even better. You can provide feedback by creating an issue in Azure CLI GitHub page and Azure PowerShell GitHub Page.

by Contributed | Jan 20, 2021 | Technology

This article is contributed. See the original author and article here.

Automating Azure Synapse and Azure Analysis Services or Power BI Dataset

Writer: Derek Daniels, Senior Business Intelligence Consultant

Technical Reviewers:

- Mark Kromer, Principal Program Manager, Azure Data Factory (Microsoft)

- Patrick LeBlanc, Principal Program Manager, Power BI Customer Account Team (Microsoft)

- Phil Bennett, IT Software Development Engineer (Microsoft)

Published: January 2021

Applies to: SQL Server Integration Services (on premise), Azure Data Factory, Azure Synapse Analytics workspace (formerly Azure SQL DW), Azure Analysis Services and Azure Logic Apps

Summary:

This article describes an example of how to automate an ELT (extract, load, transform) for your data warehouse and tabular model reporting solutions (AAS (Azure Analysis Services) or Power BI (PBI) dataset) within Azure Synapse Analytics workspace (/studio). The key differentiator of this paper is that the data sits on-premise, and Azure Data Factory is prohibited (i.e. blocked) from accessing the data but, SSIS on-premise can access the data.

Introduction

If you’re looking at moving your on-premises BI solution to Azure to leverage the power of Azure Synapse Analytics, but your data is hidden behind a firewall that won’t allow Azure Data Factory access, then this solution could help you. This article will provide you with a possible Azure solution architecture design to assist you.

This article was created when I began my journey of moving my clients data from an on-premise 2016 SQL Server to Azure. At that time there was no one article that showed a possible end-to-end solution. Here I’ve captured a lot the steps I took to craft my final Automated Azure self-serve BI Solution.

This article assumes you’ve already successfully setup and configured the following:

- A self-hosted IR (integration runtime)

- Azure Synapse Analytics workspace. If not, you can start by going to Microsoft Docs Get Started with Azure Synapse Analytics. Make sure database permissions are setup correctly under Manage > Security > Access Control (Secure your Synapse workspace (preview)).

- If you prefer YouTube videos, Advancing Analytics – The Synapse Sessions might be a good start too.

- Have a working SSIS (SQL Server Integration Services) environment already setup for deployment.

- Built out and deployed a tabular model. If not, there are numerous resources to assist you with this, including Microsoft Documents Lesson 1: Create a New Tabular Model Project.

Setup

First (if you haven’t already), download and install Azure Feature Pack for Integration Services (SSIS) for your version of SSIS. Within this feature pack you’ll want to leverage the Flexible File Destination component to save off the data as Parquet file format in ADLS Gen. 2 (Azure Data Lake Storage Gen. 2/ Blob). The caveat with Parquet files is that they have a major dependence on Java. This created quite the hiccup for me. While I used Oracle’s Java SE Runtime Environment, I still had to make some changes to the System Properties where the SSIS Environment sits. I found the 11 minute YouTube video How to Install Java JDK on Windows 10 (with JAVA_HOME) (March 2020) quite helpful in setting up and validating the setup.

Within your new SSIS Project, you’ll need to create connections to your source system(s) and Azure Storage connection manager.

Extract Load Transform (ELT)

The advantage of placing the data directly from the source into Blob Storage is that it strips away any metadata type mismatch issues. Once completed the data is transformed into a star schema to be later consumed by AAS.

There are three primary ways to connect to a server (database engine):

- Windows Authentication

- SQL Server Authentication

- Azure Active Directory – Integrated

If your Data Factory can create a linked service to your data source via Windows Authentication or SQL Server Authentication, then you should be able to leverage Azure Data Factory Managed Virtual Network (preview). If your data sources requires you to use a Service Principal, and you can use Azure Databricks (Azure Synapse Analytics workspace Notebook), then you should consider PySpark.

Unfortunately, my situation only allows for Azure Active Directory – Integrated, and at the time of publication of this paper, ADF doesn’t support Active Directory Integrated Authentication. Which is why I used SSIS to pull my fact table. However, my dimension tables are located in ADLS Gen. 2, and so I’m able to leverage PySpark.

Extract Load

My Source dataset is a destructive load, so my dataset must be a destructive load too. This step pulls from two different data sources (on-prem & Azure) and allows me to call two activities simultaneously (General – Stored procedure, and Synapse – Notebook).

Extract Load with SSIS

I chose to use SSIS Sequence Containers for each of my data sources, and then create the Data Flow Tasks within (please see image on the right). That way, if there was an issue with the source system it’s easier to identify.

The General – Stored procedure activity leverages an on-premises SSIS 2016 Data Flow Task to copy data from on-premise SQL Server (doesn’t accommodate Azure Data Factory) and paste it into a secure ADLS Gen. 2 repository.

Here you have to explicitly state that you want the Flexible File Destination task to save the data as a Parquet file. If this isn’t setup correctly (System Settings) then you won’t be able to perform this task successfully.

- Location

- Folder Path – this is Hadoop (/Linux) based, therefore case sensitive

- File Name – needs to contain both file name, and file type extension (this case “.parquet”)

- Format

- File Format – select Parquet from the drop down

Once I deployed the package I used Tim Mitchell’s article A Better Way To Execute SSIS Packages With T-SQL (November 2016) (GitHub) to create a SP (Stored Procedure) to call and wait for completion of the SSIS Package. This will be used in the automated data orchestration.

Extract Load with PySpark

Appendix A – PySpark (Python) Destructive Load contains most of the code I used to pull data from ADLS Gen 2 via PySpark. Connecting to ADLS Gen 2 has two options depending how source data has been configured:

The next part stumped for several weeks. Since each time the PySpark notebook is ran, the Parquet file name keep changing in the folder path. Thus, a dynamic naming convention needs to be leveraged. However, once I realized I could create an external table to a folder location (not a file path) that solved my issue. Here’s how I created my external table for DIM Currency.

CREATE EXTERNAL TABLE ext.DIM_Currency (

[DIM_CurrencyId] int,

[CurrencyCode] varchar(8000),

[CurrencyName] varchar(8000)

)

WITH (

LOCATION = ‘<file path>/DIM_Currency/’,

DATA_SOURCE = [dfs_core_windows_net],

FILE_FORMAT = [SynapseParquetFormat],

REJECT_TYPE = VALUE,

REJECT_VALUE = 0

)

GO

|

Extract data with Scala

Appendix B – Spark (Scala) Destructive Load contains most of the code I used to pull data from Azure SQL Database (SQL script with table joins and filtering), and wrote the results into the ADLS Gen 2 environment. I connected the new parquet file the same way I did with the PySpark script.

Transform

This part requires some more setup in the form of mapping the Parquet files in ADLS as External Tables within Synapse (Microsoft Docs Use external tables with Synapse SQL). This needs to be done for both Synapse SQL pool, and SQL on-demand. A short cut to doing this is to go to the Data section, click on the “Linked” icon. Drill down to where one of your Parquet files is located, right click on the file, select New SQL Script > Create external table, select the SQL pool, select the database, type in the table schema and table name in the text box (I created a “stg” schema for all of my staging tables).

Once I created all of the necessary external tables, I wrote two destructive load stored procedures: one for the fact tables and one for the dimension tables. At this time, all of my tables are destructive loads (fact and dimensions) through Stored Procedures in the SQL Pool. In my environment I loaded the transformed (star schematized) tables into the “dbo” schema. From there I wrote SQL Views in the “bi” schema to perform “(NOLOCK)” on the dbo tables. If you’re not familiar with NOLOCK table hint, MS SQL Tips wrote an article explaining it, Understanding the SQL Server NOLOCK hint (April 2019). Having the “bi” schema also helps me limit the access I grant to users, as I may not want to always give them access to the source table.

Publish

Create Tabular Model (AAS or PBI)

Once your data is properly prepped in Synapse Analytics, you’ll need to build out a tabular model either in SSDT (SQL Server Data Tools/ Visual Studios) or Tabular Editor, and deploy it to your AAS Server. Or you can use Power BI Desktop and publish it.

Both options have the ability to leverage DirectQuery but, there are some caveats to be mindful of. They can be found in the DirectQuery model guidance in Power BI. This is because at the time of writing this, this is a requirement for Materialized Views.

In addition, you may also want to point that as of writing of this document, that Materialized Views cannot be created on Existing Views (which you are creating in your bi schema) and they don’t support RLS.

Automation with Synapse Data Factory (Orchestration)

My Synapse Data Factory solution has several parts largely divided up into 3 segments. The first is ELT, the second cleans the tabular model, and the third performs a full refresh on the tabular model. A constraint I have is that all my tables are a destructive load (non-incremental). I would prefer I have the option to perform an incremental (partition) refresh.

Using the SQL BI Vertipaq Analyzer, I quickly discovered my model was just over 10 GB.. Upon reviewing the AAS Pricing Tiers, one would expect the S1 tier to be sufficient to perform a full refresh. Unfortunately, my experience proved otherwise giving me an ambiguous Azure Data Factory Error Code 2108, which meant insufficient memory. I discovered this when I chose to “clearValues”, then perform a full refresh. This workaround also prevented me from spending more money than I needed to.

Microsoft’s Mark Kromer helped me automate the AAS portion by showing me James Coulter article’s Process Azure Analysis Services Models with Azure Data Factory v2 (March 2020), it also shows how to refresh table partitions. From here I created two Data Factory Pipelines (clear values, and full). James figured out how to call and wait for the correct response from AAS.

If you client wishes to use the Power BI instead of AAS, Meagan Longoria wrote Refreshing a Power BI Dataset in Azure Data Factory (July 2020). In May of 2018 Power BI Premium allowed for incremental refresh, and in 2020 that option was available for Power BI Pro licenses too. The You Tube Channel “Guy in a Cube” has covered this topic.

My Production Synapse Orchestration Pipeline has the following sequential task:

- Stored procedure activity

- Calls the SSIS SP

- SQL pool stored procedure activity (dimension tables)

- Calls the destructive load of the dimension tables within Synapse

- SQL pool stored procedure activity (fact tables)

- Calls the destructive load of the fact tables within Synapse

- Execute Pipeline

- AAS

- clear values

- full

- PBI

- Web activity (Azure Logic App call)

Create Azure Logic Apps for messaging

Adam Marczak created a great 30 minute YouTube video labeled Azure Data Factory Custom Email Notifications Tutorial (June 2020). It provides a detailed step by step process to create an Azure Logic App to send a custom email. He suggests as a best practice to create a master pipeline that calls the pipeline doing all of the work to help with error handling.

Appendix A – PySpark (Python) Destructive Load

%%spark

spark.conf.set(“fs.azure.account.auth.type”, “OAuth”)

spark.conf.set(“fs.azure.account.oauth.provider.type”, “org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider”)

spark.conf.set(“fs.azure.account.oauth2.client.id”, “”) //Principal App (client) ID

spark.conf.set(“fs.azure.account.oauth2.client.secret”, “”) //Secret Key

spark.conf.set(“fs.azure.account.oauth2.client.endpoint”, “https://login.microsoftonline.com/ (directory/ tenant ID) /oauth2/token”) //Directory (tenant) ID

//YouTube: Azure Databricks – Accessing Data Lake – Using a Service Principal (April 2020) by Dinesh Priyankara

//https://www.youtube.com/watch?v=odlf3wXBSpY&list=PLEebLi3cHr6SsEJfsrxNze4-Ksju3cQyI&index=7&t=0s

|

# pyspark.sql.SparkSession Main entry point for DataFrame and SQL functionality.

from pyspark.sql import SparkSession

# Define build file Uniform Resource Identifier (URI) for source

def build_file_uri(accountName: str, blobName: str, dirName: str) -> str:

baseFilePath = “abfss://{}@{}.dfs.core.windows.net/Layer01/Layer02/{}/” #File Tree Path

filePath = baseFilePath.format(blobName, accountName, dirName)

filePath = filePath + “{*}”

return filePath

# Define build file Uniform Resource Identifier (URI) for destination

def build_file_uri_alt(accountName: str, blobName: str, dirName: str) -> str:

baseFilePath = “abfss://{}@{}.dfs.core.windows.net/Layer01/Layer02/{}/”

filePath = baseFilePath.format(blobName, accountName, dirName)

#filePath = filePath + “{*}”

return filePath

def build_file_uris(accountName: str, blobName: str, dirNames: [str]) -> [str]:

paths = []

for name in dirNames:

path = build_file_uri(accountName, blobName, name)

paths.append(path)

return paths

def build_file_uris_alt(accountName: str, blobName: str, dirNames: [str]) -> [str]:

paths = []

for name in dirNames:

path = build_file_uri_alt(accountName, blobName, name)

paths.append(path)

return paths

# Define copy type = overwrite

def copy(inputFilePath: str, outputFilePath: str, writeMode: str = “overwrite”, sparkSession: object = None) -> bool:

try:

if not sparkSession:

spark = SparkSession.builder.getOrCreate()

spark = SparkSession.builder.getOrCreate()

df = spark.read.parquet(inputFilePath)

#df = df.repartition(1) #provide a single consolidated file (not partitioned)

df.write.mode(writeMode).parquet(outputFilePath)

except Exception as e:

raise e

# Define copy plural

def copy_many(sourceAccount: str, destinationAccount: str, sourceBlob: str, destinationBlob: str, tableNames: [str]):

sourceUris = build_file_uris(sourceAccount, sourceBlob, tableNames)

destinationUris = build_file_uris_alt(destinationAccount, destinationBlob, tableNames)

spark = SparkSession.builder.getOrCreate()

for i in range(len(sourceUris)):

sourcePath = sourceUris[i]

destPath = destinationUris[i]

copy(sourcePath, destPath, sparkSession = spark)

print(“copied | {} | to | {} |”.format(sourcePath, destPath))

#if __name__ == “__main__”:

sourceAccount = “<Storage Account Name>” #Source Account Name

destinationAccount = “<Storage Account Name>” #Destination Account Name

sourceBlob = “<Blob Container Name>” #Source Blob Container Name

destinationBlob = “<Blob Container Name>” #Destination Blob Container Name

tables = [“DIM_Date”, “DIM_BusinessHierarchy”]

copy_many(sourceAccount, destinationAccount, sourceBlob, destinationBlob, tables)

|

Appendix B – Spark (Scala) Destructive Load

//Use HDInsight Spark cluster to read and write data to Azure SQL Database

//https://docs.microsoft.com/en-us/azure/hdinsight/spark/apache-spark-connect-to-sql-database

// Declare the values for your database

val jdbcUsername = “<UserName>”

val jdbcPassword = “<Password>”

val jdbcHostname = “<Name>.database.windows.net” //typically, this is in the form or servername.database.windows.net

val jdbcPort = 1433

val jdbcDatabase =”<DatabaseName>”

//Create Connection String

import java.util.Properties

val jdbc_url = s”jdbc:sqlserver://${jdbcHostname}:${jdbcPort};database=${jdbcDatabase};encrypt=true;trustServerCertificate=false;hostNameInCertificate=*.database.windows.net;loginTimeout=60;”

val connectionProperties = new Properties()

connectionProperties.put(“user”, s”${jdbcUsername}”)

connectionProperties.put(“password”, s”${jdbcPassword}”)

|

//SQL Databases using JDBC (https://docs.databricks.com/data/data-sources/sql-databases.html)

//Push down a query to the database engine (https://docs.databricks.com/data/data-sources/sql-databases.html#push-down-a-query-to-the-database-engine)

// Note: The parentheses are required.

val pushdown_query = “””(SELECT DISTINCT PFam.*, PO.*

FROM dbo.PriceListItems PLI

JOIN dbo.Products Prod ON PLI.ProductSKID = Prod.ProductSKID

JOIN dbo.ProductFamilies PFam ON Prod.ProductFamilySKID = Pfam.ProductFamilySKID

JOIN dbo.ProgramOfferings PO ON PLI.ProgramOfferingSKID = PO.ProgramOfferingSKID

WHERE PLI.ValidTo >= DATEADD(MONTH,-6, CONVERT(datetime, CONVERT(varchar, MONTH(SYSUTCDATETIME())) + ‘-01-‘ + CONVERT(varchar, YEAR(SYSUTCDATETIME()))))

AND PO.ProgramName LIKE ‘%Enterprise%’) EA_PriceList”””

val dfPriceListEA = spark.read.jdbc(url=jdbc_url, table=pushdown_query, properties=connectionProperties)

//display(df)

//df.printSchema

val filePath = “abfss://<BlobContainerName>@<StorageAccountName>.dfs.core.windows.net/<FilePath>”

dfPriceListEA.write.mode(“overwrite”).parquet(filePath)

|

Conclusion

Creating this fully automated data refresh solution allowed me to focus my efforts elsewhere, such as meaningful data visualization with Power BI. Hopefully this article will help you reap similar rewards.

For more information:

Azure Web site

Azure Synapse TechCenter

Recent Comments