by Contributed | Jan 19, 2021 | Technology

This article is contributed. See the original author and article here.

In my previous blog, I addressed the issue of managing credentials in code and presented two alternatives for securing them. In this post, I will focus on Azure subscription security health and its challenges, which I would summarize as follows:

- New resources are deployed to Azure subscriptions all the time, especially if the company has many developers and DevOps engineers working on the same subscription.

- Security health checks must be run frequently across many subscriptions to make sure all the resources within them follow security best practices.

- For a large subscription, a manual check can be challenging.

Of course, one option is to write the security checks as scripts and run them against the subscription. However, maintaining and updating such a tool would be a nightmare, especially as the company adopts more resource types in the subscription.

Wouldn’t it be nice if there were a tool I could run against the subscription that would come back with a list of security issues and even give me the option to fix them automatically? Fortunately, such a tool exists: the Secure DevOps Kit for Azure, or AzSK.

What is the Secure DevOps Kit (AzSK)?

- The Secure DevOps Kit started as an internal Microsoft tool, developed to help Microsoft teams move to Azure more quickly and easily.

- It is an open-source tool and not an official product.

- Microsoft released the tool to share Azure cloud security best practices with the community.

- Both the code and documentation can be found on GitHub.

- The scripts can be customized to match a company's needs.

AzSK focus areas:

- Secure the subscription: AzSK runs subscription-wide health checks and comes back with a list of improvements, which I can then apply manually or automatically.

- Integrate Security into CI/CD: These security tests can be integrated into the company’s continuous integration and continuous delivery pipelines.

- Enable Secure Development: A Visual Studio extension can be installed on developers’ machines. It adds security intelligence to the IDE, so developers are presented with security best practices at development time.

- Continuous Assurance: The security tests can be integrated with Azure Automation, so they run automatically as part of an Azure Automation process.

- Alerting & Monitoring: It provides alerting and monitoring data that can be used as part of Azure Monitor.

- Security Telemetry in App Insights: It can write security telemetry information to an instance of Application Insights.

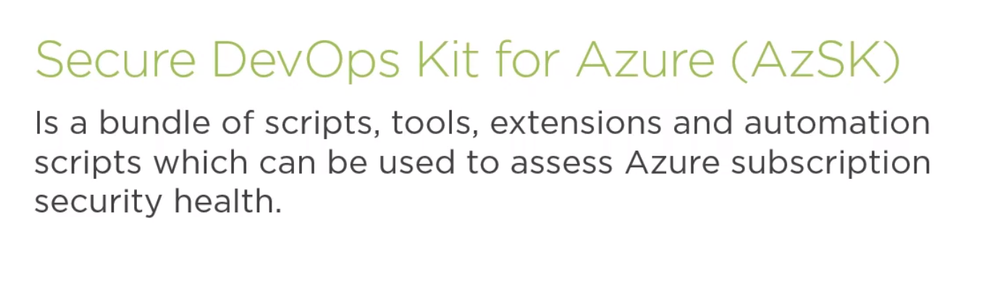
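As a minimal sketch of the CI/CD idea, a pipeline step could read the CSV report that AzSK produces and fail the build when any control has failed. This script is not part of AzSK; it assumes the report has a `Status` column whose failing value is `Failed`, so check the header row of your own report before relying on it.

```python
import csv
import io
import sys

# Assumption: the SecurityReport CSV has a "Status" column and failed
# controls are marked "Failed". Adjust these if your report differs.
STATUS_COLUMN = "Status"
FAILING_STATUSES = {"Failed"}


def count_failures(csv_text: str) -> int:
    """Return how many controls in the CSV report have a failing status."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return sum(1 for row in reader if row.get(STATUS_COLUMN) in FAILING_STATUSES)


def main(report_path: str) -> int:
    # utf-8-sig strips a byte-order mark if the file was written with one.
    with open(report_path, encoding="utf-8-sig") as f:
        failures = count_failures(f.read())
    print(f"{failures} failing control(s) in {report_path}")
    # A non-zero exit code makes most CI/CD systems fail the step.
    return 1 if failures else 0


if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(main(sys.argv[1]))
```

A pipeline would call it as `python gate.py SecurityReport.csv` after the scan step; the exit code, not the printed line, is what gates the build.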

How does it work?

A subscription can contain many different Azure services, such as Azure SQL Server, virtual machines, storage accounts, Azure Key Vault instances and API Management instances. There are a few ways to use the AzSK tool:

- Run security tests against the subscription.

- Run all the tests and come back with the results, which are written to a CSV file.

- Automatically fix the issues it finds.

NOTE: Not all issues can be fixed automatically; a DevOps admin will need to fix the rest manually.
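To see how the failed controls in a report split between automatic and manual fixes, the rows can be grouped by whether a fix is supported. This is a hypothetical helper, not an AzSK feature, and it assumes `ControlID`, `Status` and `SupportsAutoFix` columns, with `Yes` marking auto-fixable controls.

```python
import csv
import io


def split_by_fix_mode(csv_text: str) -> tuple[list[str], list[str]]:
    """Split failed controls into (auto_fixable, manual) lists of control IDs.

    Assumed columns: ControlID, Status, SupportsAutoFix ("Yes"/"No").
    """
    auto: list[str] = []
    manual: list[str] = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row.get("Status") != "Failed":
            continue  # only failed controls need fixing
        supports_fix = row.get("SupportsAutoFix", "").strip().lower() == "yes"
        (auto if supports_fix else manual).append(row["ControlID"])
    return auto, manual
```

The `manual` list is the work left for the DevOps admin after any generated fix script has run.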

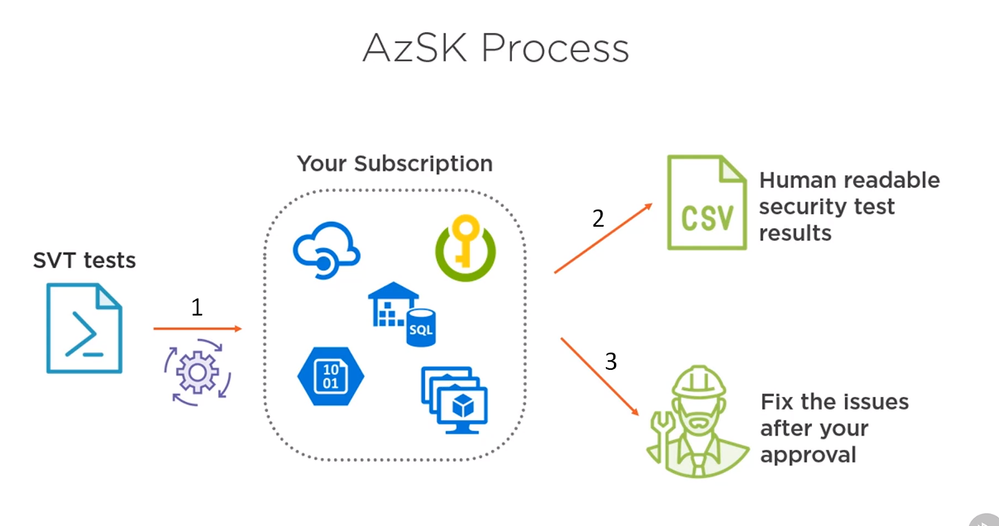

Setting up AzSK

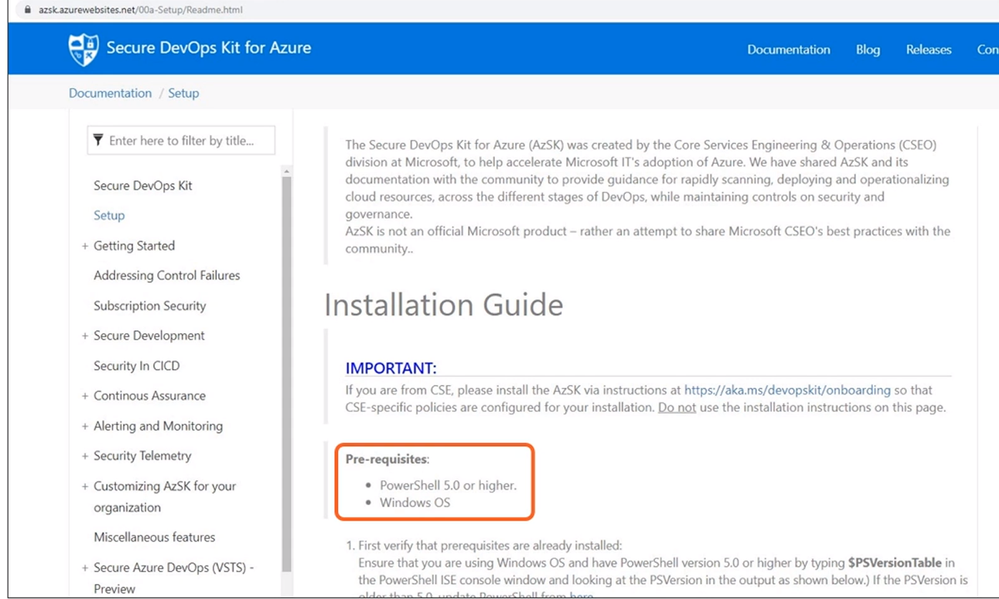

All the requirements and step-by-step instructions can be found at https://azsk.azurewebsites.net/

I will need the following prerequisites:

- PowerShell 5.0 or higher.

- Windows OS

To install the Secure DevOps Kit for Azure (AzSK) PowerShell module:

Install-Module AzSK -Scope CurrentUser

Demo: Running Security Validation Tests (SVT) with AzSK

I am going to use the tool to scan an Azure subscription for security health, and then to scan a resource group. The resource group contains the following resources:

- Storage Account

- Azure SQL Database

- Virtual Machine

- Azure Key Vault

- Azure Cosmos DB

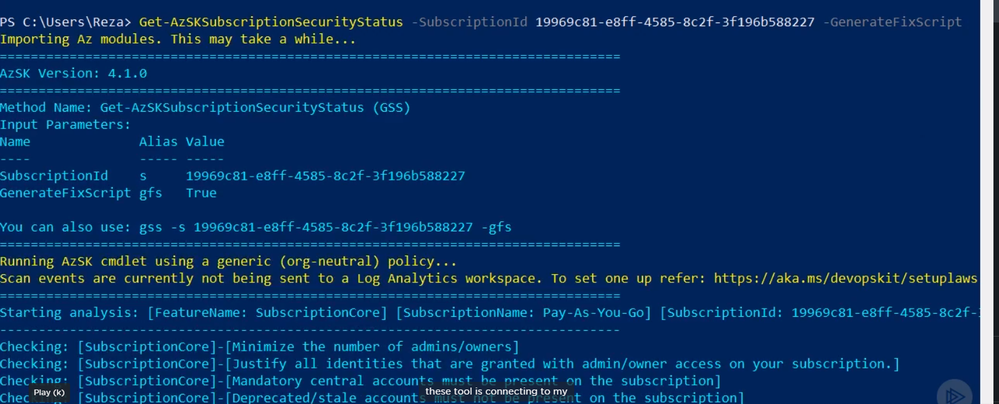

The following AzSK command runs security scans against the subscription:

Get-AzSKSubscriptionSecurityStatus -SubscriptionId '<subscriptionId>' -GenerateFixScript

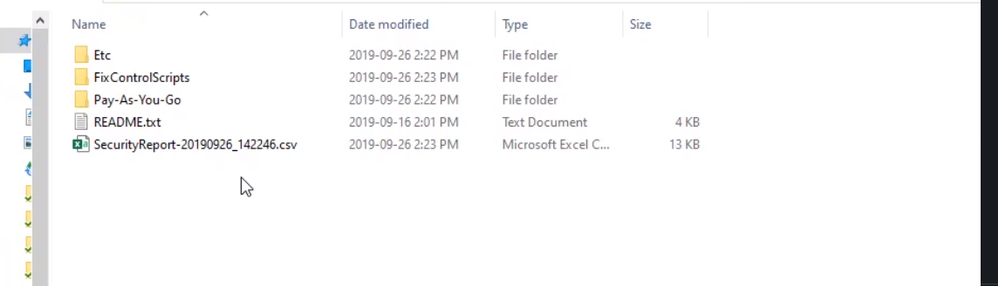

Once the command finishes, it opens an Explorer window showing the results.

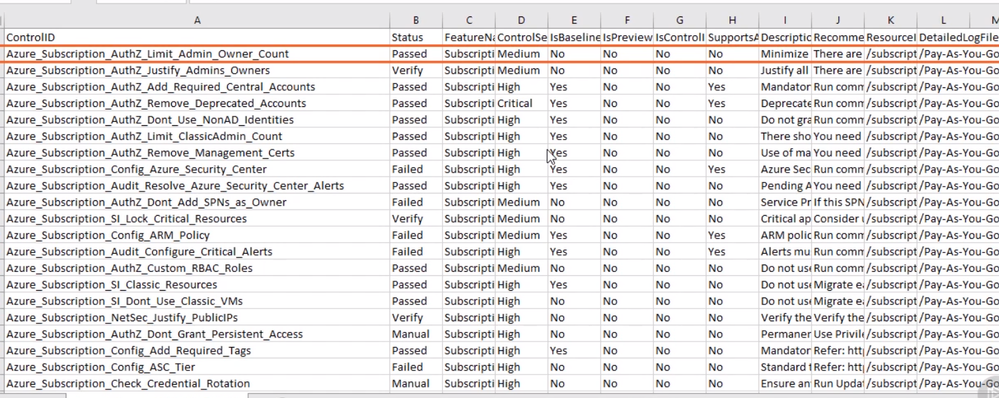

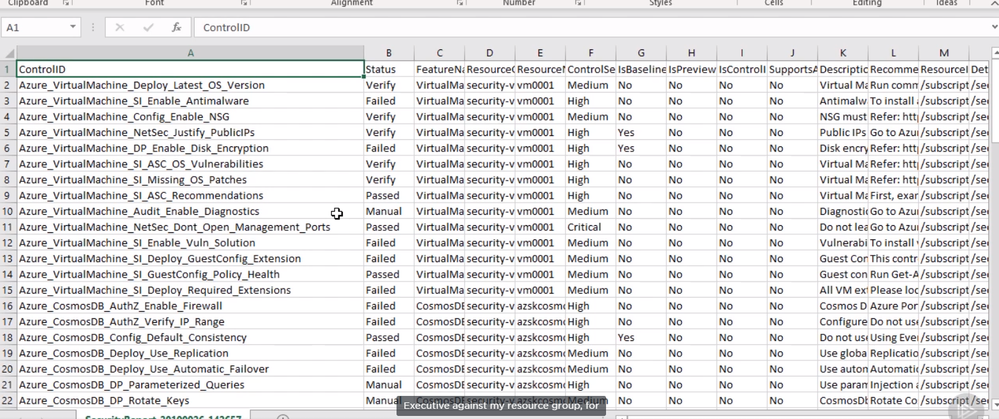

The SecurityReport file lists the subscription-level tests that were executed and their results. For example, there is a test that checks the subscription’s admins and owners.
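When the report is long, it can help to list the failed controls with the most severe first. The sketch below assumes `ControlID`, `Status` and `ControlSeverity` columns and the severity labels Critical, High, Medium and Low; treat all of these names as assumptions to verify against your own SecurityReport file.

```python
import csv
import io

# Assumed severity labels, most severe first; unknown labels sort last.
SEVERITY_ORDER = {"Critical": 0, "High": 1, "Medium": 2, "Low": 3}


def failed_controls_by_severity(csv_text: str) -> list[tuple[str, str]]:
    """Return (severity, control ID) pairs for failed controls, most severe first."""
    failed = [
        (row.get("ControlSeverity", ""), row["ControlID"])
        for row in csv.DictReader(io.StringIO(csv_text))
        if row.get("Status") == "Failed"
    ]
    # Sort by severity rank, then by control ID so the output is stable.
    return sorted(
        failed,
        key=lambda item: (SEVERITY_ORDER.get(item[0], len(SEVERITY_ORDER)), item[1]),
    )
```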

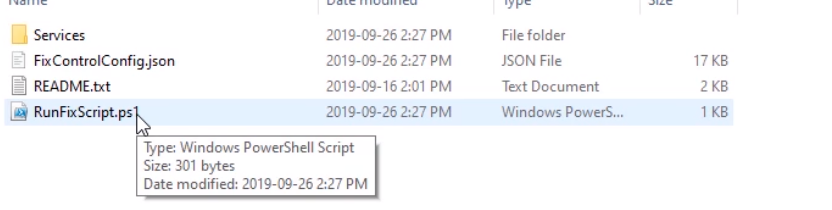

Also, since I used the “GenerateFixScript” flag, there is a folder called “FixControlScript”; opening it shows the PowerShell fix script.

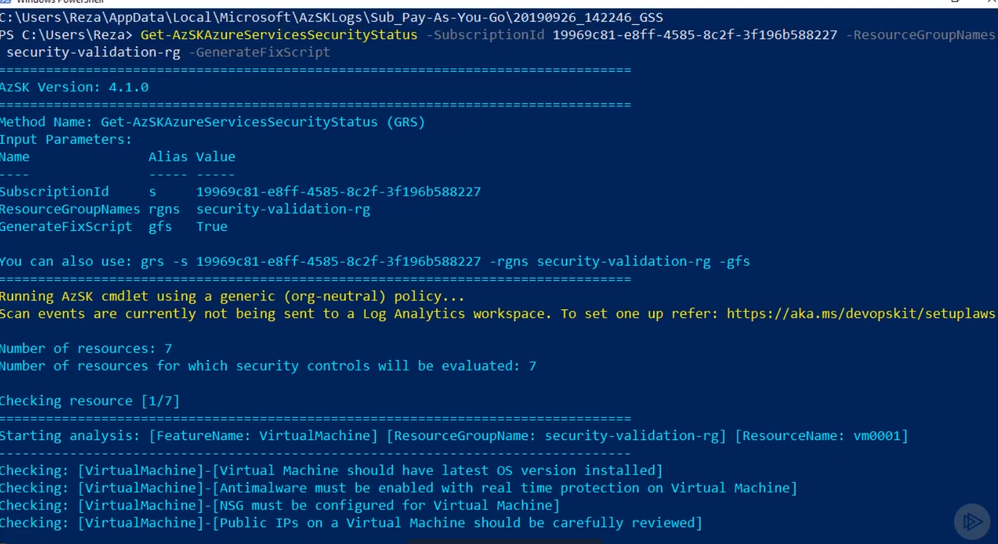

In the second test, I run the AzSK command against a resource group:

Get-AzSKAzureServicesSecurityStatus -SubscriptionId <SubscriptionId> -ResourceGroupNames <rgname> -GenerateFixScript

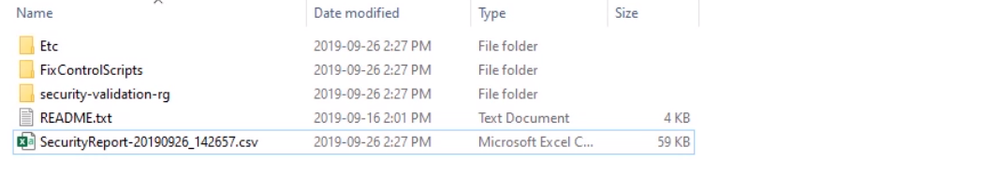

As in the first test, the AzSK command opens the result folder, where I can find the security scan report.

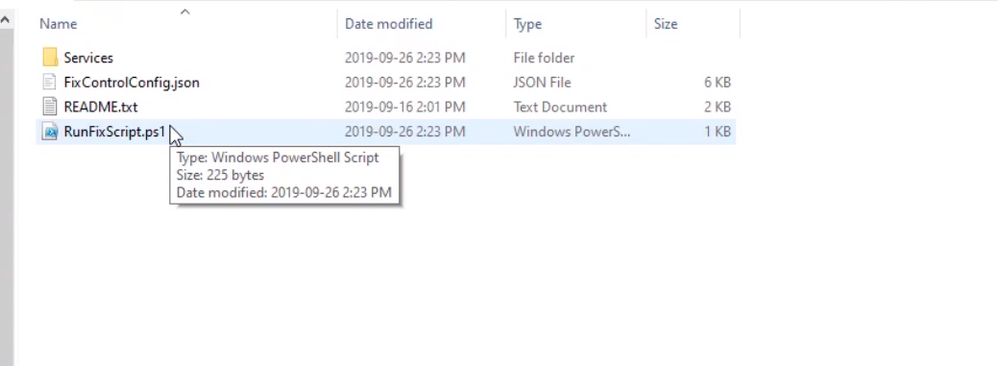

There is also a “FixControlScript” folder containing the “RunFixScript” file.

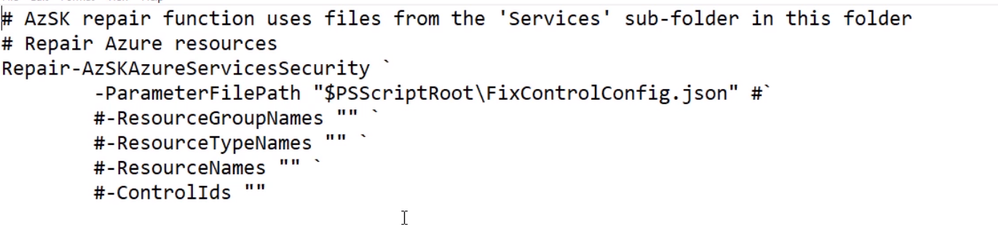

Opening the file in an editor shows the script and how it will attempt to fix the problems.

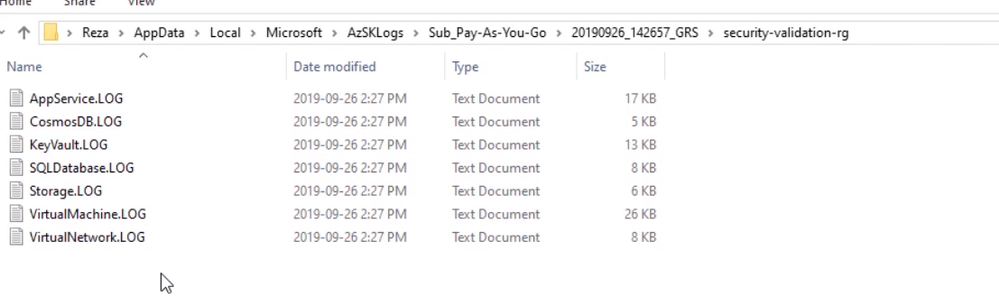

The result folder also contains a “security-validation-rg” folder with a log for each Azure service in the resource group. Each log contains information about the tests executed against that resource and their results.
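To get a quick inventory of those per-service logs, a short script can walk the result folder and group the log files by subfolder. This sketch assumes the logs use a `.log` extension (in any letter case); `list_logs_per_folder` and `group_logs` are illustrative names, not part of AzSK.

```python
from pathlib import Path, PurePosixPath


def group_logs(relative_paths: list[str]) -> dict[str, list[str]]:
    """Group relative, POSIX-style log paths by the folder that contains them."""
    groups: dict[str, list[str]] = {}
    for rel in sorted(relative_paths):
        p = PurePosixPath(rel)
        groups.setdefault(str(p.parent), []).append(p.name)
    return groups


def list_logs_per_folder(result_dir: str) -> dict[str, list[str]]:
    """Collect every *.log file (any case) under result_dir, grouped by folder."""
    root = Path(result_dir)
    rel_paths = [
        p.relative_to(root).as_posix()
        for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() == ".log"
    ]
    return group_logs(rel_paths)
```

Splitting the grouping from the directory walk keeps the grouping logic testable without touching the file system.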

Summary

AzSK enables us to run security health checks against our subscriptions or resource groups. The tool produces a report and also offers the option to automatically fix some of the issues it finds. In my next blog, I will discuss Azure Sentinel.

by Contributed | Jan 19, 2021 | Technology

The guides can be used independently, but we recommend using all the solutions together for your deployment needs. We are not recommending that one solution be implemented before another, but each guide includes information that ties the solutions together, along with features to consider during your implementation. The guides cover the features released as of today and will be updated as additional features progress from beta or private preview to general availability.

In summary, the deployment guides provide:

➢ One Compliance Story covering how the features of each solution complement one another.

➢ Best Practices based on the CxE team’s experience with customer roadblocks.

➢ Considerations to research before starting your deployment.

➢ Help Resources: links to additional reading and topics for a deeper understanding.

➢ Appendix with additional information on licensing.

We have included five Zip files: one with all four guides, and a separate Zip for each guide.

This documentation was written by the global CxE team; thank you all for your hard work producing these documents.

If you have questions or suggestions about the guides, please reach out to our Yammer group at aka.ms/askmipteam

by Contributed | Jan 19, 2021 | Technology

This is our first release of Azure RTOS in 2021. The 6.1.3 patch release incorporates many updates, including ThreadX ports to new microcontrollers and chips, the addition of LwM2M and PTP to NetX Duo, updates to GUIX, and more.

Azure RTOS is an embedded development suite including ThreadX, a small but powerful real-time operating system, as well as several libraries for connectivity, file system, user interfaces and all that’s needed to build optimized resource-constrained devices.

Azure RTOS is in continuous development; you can track the updates in the Azure RTOS repositories on GitHub.

New ThreadX ports

Our team delivers new features regularly in response to customers’ needs and requests. This release brings ThreadX to more microcontrollers and chips, with new ports for Renesas RXv2 using the IAR and GNU tools, and for Xtensa using the XCC tools.

New module ports are also now available for Cortex-A35 with AC6 and the GNU compiler, making ThreadX even more pervasive.

In addition to the new ports, we added new sample projects for Renesas RZ and RX development boards: https://github.com/azure-rtos/samples

Bringing new protocols support in NetX Duo

NetX Duo is Azure RTOS’ advanced, industrial-grade TCP-IP network stack that also provides a rich set of protocols including security and cloud protocols.

In this release, the team added support for Lightweight M2M (LwM2M). This protocol allows seamless integration of IoT devices powered by Azure RTOS into solutions that have invested in LwM2M to connect, service and manage their devices.
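LwM2M addresses device data with numeric paths of the form /Object/Instance/Resource; for example, /3/0/0 is the Manufacturer resource of instance 0 of the Device object (Object 3). The parser below only illustrates that addressing scheme. It is a hypothetical helper that does not reflect the NetX Duo LwM2M API, and its optional fourth segment for a resource instance is an assumption kept for completeness.

```python
from typing import NamedTuple, Optional


class LwM2MPath(NamedTuple):
    """A parsed LwM2M path: object, then optional instance, resource, resource instance."""
    object_id: int
    instance_id: Optional[int] = None
    resource_id: Optional[int] = None
    resource_instance_id: Optional[int] = None


def parse_lwm2m_path(path: str) -> LwM2MPath:
    """Parse '/Object[/Instance[/Resource[/ResourceInstance]]]' into integer IDs."""
    parts = path.strip("/").split("/")
    if not 1 <= len(parts) <= 4 or not all(p.isdigit() for p in parts):
        raise ValueError(f"not an LwM2M path: {path!r}")
    return LwM2MPath(*(int(p) for p in parts))
```

For example, `parse_lwm2m_path("/3/0/0")` yields object 3, instance 0, resource 0, while `parse_lwm2m_path("/3")` addresses the whole Device object.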

Precision Time Protocol (PTP) has also been added to NetX Duo, making it possible to synchronize IoT device clocks at the sub-microsecond level on a local area network.
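To make the synchronization concrete, PTP (IEEE 1588) derives the clock offset and path delay from four timestamps exchanged between a master and a slave clock. The sketch shows the standard delay request-response calculation, assuming the network delay is the same in both directions; it is not the NetX Duo implementation or API.

```python
def ptp_offset_and_delay(t1: int, t2: int, t3: int, t4: int) -> tuple[float, float]:
    """Return (offset, mean_path_delay) in the same unit as the timestamps.

    t1: master sends Sync (master clock)
    t2: slave receives Sync (slave clock)
    t3: slave sends Delay_Req (slave clock)
    t4: master receives Delay_Req (master clock)
    Assumes a symmetric path: the delay is equal in both directions.
    """
    forward = t2 - t1   # path delay + offset
    backward = t4 - t3  # path delay - offset
    offset = (forward - backward) / 2
    delay = (forward + backward) / 2
    return offset, delay
```

For example, with timestamps in nanoseconds, `ptp_offset_and_delay(0, 2500, 10000, 11500)` returns `(500.0, 2000.0)`: the slave clock is 500 ns ahead of the master and the one-way delay is 2 µs, so the slave would correct its clock by subtracting the offset.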

GUIX enhanced support for screen rotation

As mobile IoT devices become more sophisticated, they increasingly have a graphical interface on a screen that is used in changing orientations.

GUIX, the embedded graphical user interface library of Azure RTOS, now supports screen rotation for 16bpp color and provides APIs for bidirectional text reordering.

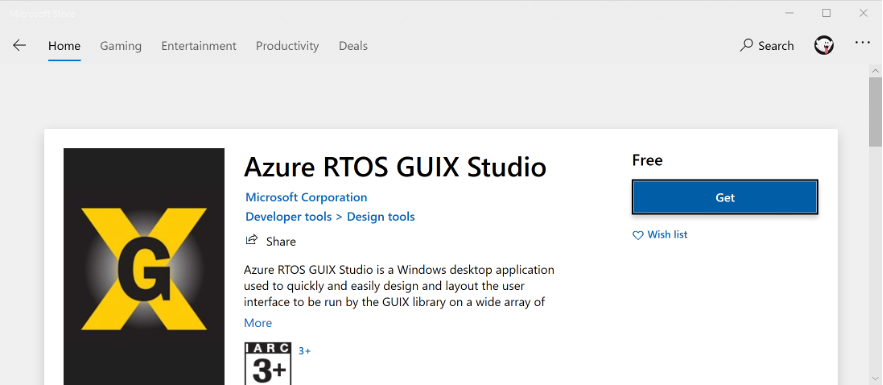
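For a sense of what screen rotation involves at 16bpp, each pixel is a 16-bit value (commonly RGB565), and rotating the display by 90 degrees means remapping every pixel into a framebuffer with swapped width and height. The sketch below illustrates that remapping in general terms; it is not how GUIX implements rotation, and the RGB565 layout is an assumption about the 16bpp format.

```python
def rgb565(r: int, g: int, b: int) -> int:
    """Pack 8-bit R, G, B into one 16-bit RGB565 pixel (5 bits R, 6 bits G, 5 bits B)."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def rotate_cw(pixels: list[int], width: int, height: int) -> list[int]:
    """Rotate a row-major width x height framebuffer 90 degrees clockwise.

    The result is row-major with width `height` and height `width`.
    """
    if len(pixels) != width * height:
        raise ValueError("pixel count does not match width * height")
    out = [0] * (width * height)
    for y in range(height):
        for x in range(width):
            # Source pixel (x, y) lands at column (height - 1 - y), row x.
            out[x * height + (height - 1 - y)] = pixels[y * width + x]
    return out
```

For a 3x2 buffer `[1, 2, 3, 4, 5, 6]`, `rotate_cw([1, 2, 3, 4, 5, 6], 3, 2)` returns `[4, 1, 5, 2, 6, 3]`, a 2-wide, 3-tall image.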

GUIX Studio and TraceX now in the Windows Store

Finding, installing and keeping developer tools up to date is not always trivial. We added the GUIX Studio and TraceX tools to the Windows Store to make your life a little bit simpler.

You can now grab and install them from: https://aka.ms/azrtos-guix-installer and https://aka.ms/azrtos-tracex-installer.

For more details about improvements and bug fixes in this release, check the change log in the repository of each Azure RTOS component: https://github.com/azure-rtos.

If you encounter bugs or have suggestions for new features, you can create issues on GitHub or post a question to Stack Overflow using the azure-rtos tag and a component tag such as threadx.

by Contributed | Jan 19, 2021 | Technology

The January 2021 Cumulative Update Preview release for Windows includes the following historical Daylight Saving Time (DST) corrections for the Palestinian Authority:

- The 2015 DST end date was October 23, 2015 instead of October 22, 2015.

- The 2019 DST end date was October 23, 2019 at 00:00 instead of 01:00.

- The 2020 DST start date was March 28, 2020 instead of March 27, 2020.

- The 2020 DST end date was October 24, 2020 instead of October 31, 2020.

This change is for devices running the following Windows 10 versions:

- Windows 10, version 1809

- Windows 10, version 1903

- Windows 10, version 1909

- Windows 10, version 2004

Customers running devices with other supported versions of Windows will see the relevant changes in the February 2021 monthly quality update.

For Microsoft’s official policy on DST and time zone changes, please see Daylight saving time help and support. For information on how to update Windows to use the latest global time zone rules, see How to configure daylight saving time for Microsoft Windows operating systems.

by Contributed | Jan 19, 2021 | Technology

Final Update: Tuesday, 19 January 2021 18:57 UTC

We’ve confirmed that all systems are back to normal with no customer impact as of 1/19, 17:53 UTC. Our logs show that the incident started on 1/19, 17:26 UTC, and that during the 27 minutes it took to resolve the issue, impacted customers experienced ingested data latency and possible alert misfires due to latent data.

- Root Cause: The failure was due to a large unexpected ingress of data to a scale unit.

- Incident Timeline: 27 minutes – 1/19, 17:26 UTC through 1/19, 17:53 UTC

We understand that customers rely on Application Insights as a critical service and apologize for any impact this incident caused.

-Jeff

Initial Update: Tuesday, 19 January 2021 18:32 UTC

We are aware of issues within Application Insights and are actively investigating. Some customers in the East US region may experience delayed or missed Log Search Alerts and data latency.

- Next Update: Before 01/19 20:00 UTC

We are working hard to resolve this issue and apologize for any inconvenience.

-Jeff
