by Contributed | Jan 19, 2021 | Technology

This article is contributed. See the original author and article here.

We are excited to announce the public preview of Azure Data Explorer Insights!

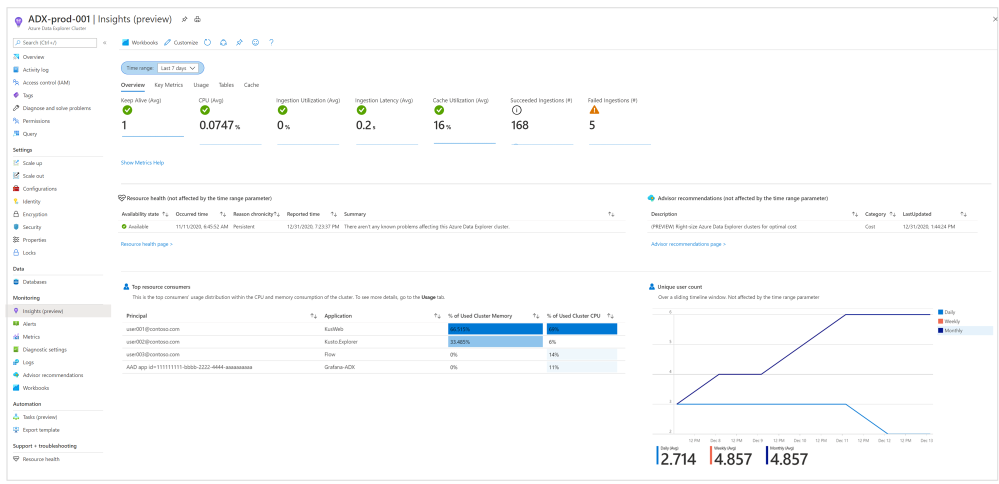

ADX Insights (Azure Monitor for Azure Data Explorer) provides comprehensive monitoring of your clusters by delivering a unified view of your cluster performance, operations, and usage.

Built on the Azure Monitor Workbooks platform, ADX Insights offers:

- At-scale perspective: a snapshot view of the clusters’ primary metrics that makes it easy to track performance, ingestion, and export operations. Unsustainable values are highlighted in orange. You can drill down to the “at-resource” view by clicking on the cluster name.

- At-resource perspective: drill-down analysis of a particular Azure Data Explorer cluster based on metrics and platform usage logs.

With this view, you can:

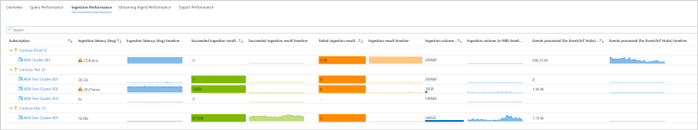

- Identify query lookback patterns per table and compare them to the table’s cache policy.

The Cache tab


- Identify tables that are used by the most queries.

- Identify unused tables or redundant cache policy periods.

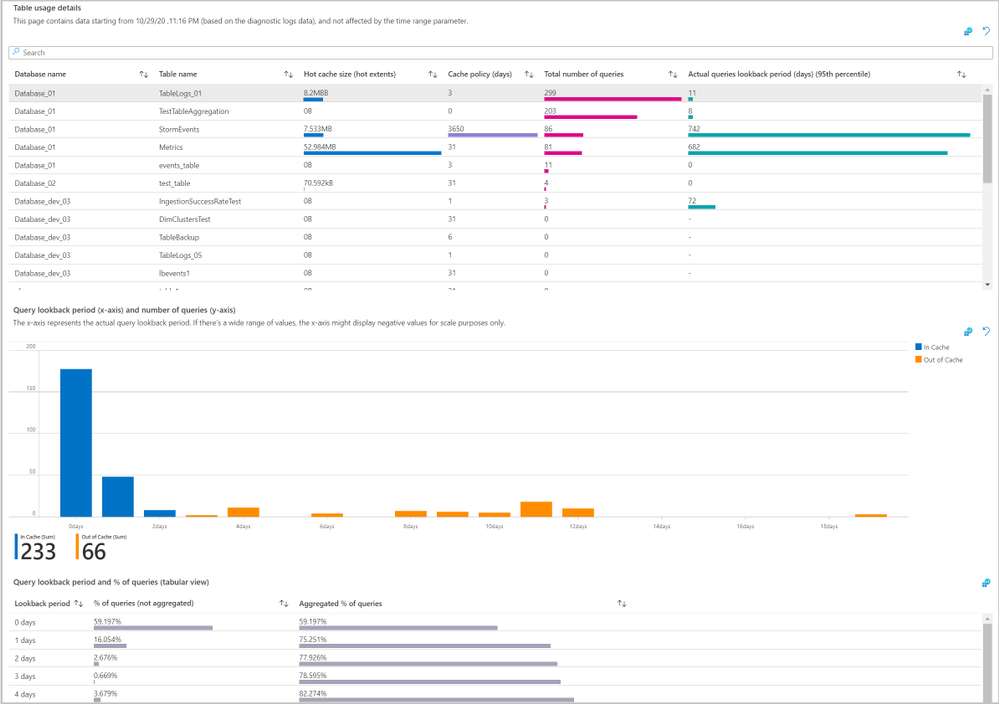

- Find which tables are consuming the most space in the cluster.

- Track data growth history by table size, hot data, and the number of rows over time.

The Tables tab


- Identify which users and applications are sending the most queries or consuming the most CPU and memory.

- Find changes in the number of queries by user and track the number of unique users over time.

- See top users by command and query count and identify top users by the number of failed queries.

- See the query count, CPU, and memory consumption over time.

The Usage tab


- Get a summary of active Advisor recommendations and resource health status.

The Overview tab


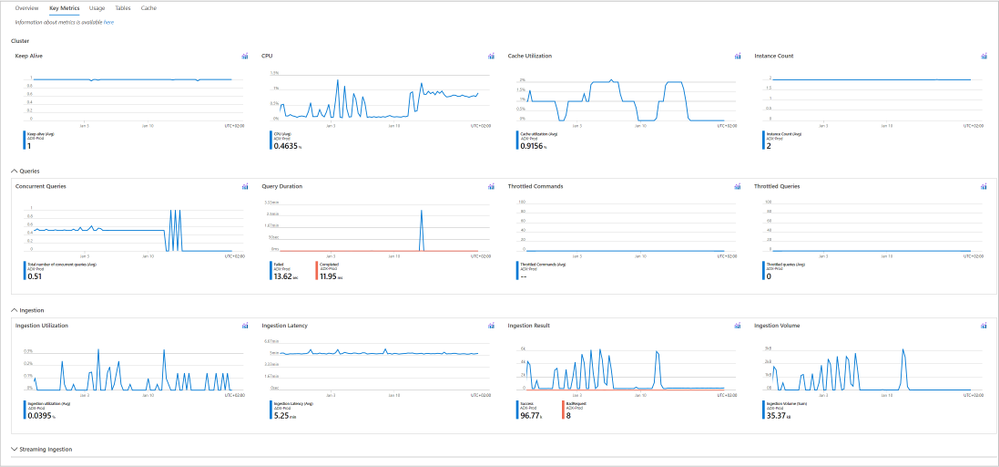

- Explore multiple key metrics on one page and discover correlations between them.

The Key Metrics tab


Azure Monitor for Azure Data Explorer is now available in the Azure Monitor and Azure Data Explorer blades in the Azure portal. We look forward to hearing your feedback on the new experience.

To learn more, see the ADX Insights documentation.

by Contributed | Jan 18, 2021 | Technology

This article is contributed. See the original author and article here.

There are lots of different ways you can deploy and configure your Azure resources. When customers are first starting their cloud journey, it’s common to provision resources manually, deploying and configuring them in the Azure portal. However, this quickly becomes difficult to manage and scale. Infrastructure as Code (IaC) techniques and tools are designed to help you apply your coding skills and DevOps practices to your cloud infrastructure. However, I frequently work with customers who aren’t completely convinced that IaC is going to help them, or that it will be worth the investment. It can be helpful to have some insight into the benefits that many other customers have seen when using IaC approaches. This post outlines the main reasons I think IaC is an essential part of a modern solution for Azure. We won’t get much into the technical details of how you use IaC – this is just a high-level overview of why you should use it.

What is Infrastructure as Code?

The Azure Well-Architected Framework has a great definition of IaC:

Infrastructure as code (IaC) is the management of infrastructure – such as networks, virtual machines, load balancers, and connection topology – in a descriptive model, using a versioning system that is similar to what is used for source code. When you are creating an application, the same source code will generate the same binary every time it is compiled. In a similar manner, an IaC model generates the same environment every time it is applied. IaC is a key DevOps practice, and it is often used in conjunction with continuous delivery.

Ultimately, IaC allows you and your team to develop and release changes faster, but with much higher confidence in your deployments.

Gain higher confidence

One of the biggest benefits of IaC is the level of confidence you can have in your deployments, and in your understanding of the infrastructure and its configuration.

Integrate with your process. If you have a process by which code changes get peer reviewed, you can use the exact same process for your infrastructure. This can be very helpful when a team member proposes a change to a resource but doesn’t realise that the change might not work, could cause issues elsewhere in the solution, or may not meet the requirements. If these changes are made directly in the portal, your team may not have the opportunity to review them before they are made.

Consistency. Following an IaC process ensures that the whole team is following a standard, well-established process – regardless of who on the team has initiated it. I often work with customers who have a single designated person who is permitted to deploy to production; if this person is unavailable then deployments can be very difficult to complete, since all the knowledge lives in that person’s head. By following a fully automated process you can move the knowledge of the deployment process into the automation tooling. You can then broaden the number of people on your team who can initiate deployments while still maintaining the same quality level – and without giving broad administrative access to your environment. Not only does this help with your operational efficiency, it has security benefits too.

Automated scanning. Many types of IaC assets can be scanned by automated tooling. One such type of tooling is linting, to check for errors in the code. Another type will scan the proposed changes to your Azure infrastructure to make sure they follow security and performance best practices – for example, ensuring that storage accounts are configured to block unsecured connections. This can be an important part of a Continuous Security approach.
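To make the storage-account example concrete, here is a minimal sketch of one such scanning rule in Python. It assumes an ARM template already parsed into a dictionary with the usual top-level `resources` array, and it checks the storage account property `supportsHttpsTrafficOnly`. The function name `find_insecure_storage_accounts` is hypothetical. Real scanning tools cover many more rules and also evaluate template expressions, which this sketch does not.

```python
from __future__ import annotations

import json
from typing import Any


def find_insecure_storage_accounts(template: dict[str, Any]) -> list[str]:
    """Return the names of storage accounts that explicitly allow HTTP traffic.

    Only Microsoft.Storage/storageAccounts resources are inspected, and only an
    explicit supportsHttpsTrafficOnly: false is flagged (in recent API versions
    the property defaults to true when it is omitted).
    """
    findings = []
    for resource in template.get("resources", []):
        if resource.get("type", "").lower() != "microsoft.storage/storageaccounts":
            continue  # this rule only applies to storage accounts
        properties = resource.get("properties", {})
        if properties.get("supportsHttpsTrafficOnly") is False:
            findings.append(resource.get("name", "<unnamed>"))
    return findings


# A hypothetical template with one compliant and one non-compliant account.
template_text = """
{
  "resources": [
    {"type": "Microsoft.Storage/storageAccounts", "name": "securestore",
     "properties": {"supportsHttpsTrafficOnly": true}},
    {"type": "Microsoft.Storage/storageAccounts", "name": "legacystore",
     "properties": {"supportsHttpsTrafficOnly": false}}
  ]
}
"""

print(find_insecure_storage_accounts(json.loads(template_text)))  # ['legacystore']
```

In a pipeline, a non-empty result would typically fail the build before the template ever reaches Azure.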

Secret management. Every solution requires some secrets to be maintained and managed. These include connection strings, API keys, client secrets, and certificates. Following an IaC approach means that you need to also adopt some best-practice approaches to managing these secrets. For example, Azure has the Key Vault service to maintain these types of data. Key Vault can be easily integrated with many IaC tools and assets to ensure that the person conducting the deployment doesn’t need access to your production secrets, which means you’re adhering to the security principle of least privilege.

Access control. A fully automated IaC deployment pipeline means that all changes to your Azure resources should be done by an automated procedure. By doing this, you can be confident that all changes that are deployed to your Azure environment have followed the correct procedure, and it’s much harder for bad configuration to make its way through to production accidentally. Ideally, you would remove the ability for humans to modify your resources at all – although you may allow for this to be overridden in an emergency, by using a ‘break glass’ account or Privileged Identity Management.

Avoid configuration drift. When I work with customers to adopt IaC approaches, I recommend redeploying all of the assets on every release. IaC tooling is generally built to be idempotent (i.e. to be able to be run over and over again without any bad effects). Usually, the first deployment of an asset will actually deploy the asset, while subsequent redeployments will essentially act as ‘no-ops’ and have no effect. This practice helps in a few ways:

- It ensures that your IaC assets are regularly exercised. If they are only deployed occasionally, it’s much more likely they will become stale and you won’t notice until it’s too late. This is particularly important if you need to rely on your IaC assets as part of a disaster recovery plan.

- It ensures that your application code and infrastructure won’t get out of sync. For example, if you have an application update that needs an IaC asset to be deployed first (such as to deploy a new database), you want to make sure you won’t accidentally forget to do this in the right order. Deploying the two together in one pipeline means you are less likely to encounter these kinds of ‘race conditions’.

- It helps to avoid configuration drift. If someone does accidentally make a change to a resource without following your IaC pipeline, then you want to correct this as quickly as possible and get the resource back to the correct state. By following an IaC approach, the source of truth for your environment’s configuration is in code.

Manage multiple environments

IaC can help with managing your environments. Pretty much every customer has to maintain some non-production environments as well as production. Some customers also maintain multiple production environments, such as for multitenanted solutions or for geographically distributed applications.

Manage non-production environments. A common pain point for customers is when their non-production environments are not the same as their production environment. This slows down testing of changes and reduces the team’s confidence that they fully understand what’s happening in production. This configuration drift often happens when the environments are all created manually and someone forgets to apply a change somewhere. If you follow an IaC approach then this problem goes away, because the same IaC definition is used to create and deploy all of your environments – both non-production and production. You can specify different configuration for each environment, of course, but the core definition will be the same.
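In ARM templates and Bicep, “same core definition, different configuration” is normally handled with parameters and a parameter file per environment. The Python sketch below models only the idea. A shared base definition is merged with small per-environment overrides. Every name, SKU and value here is a hypothetical placeholder.

```python
from __future__ import annotations

from copy import deepcopy
from typing import Any

# Hypothetical core definition shared by every environment.
BASE_DEFINITION: dict[str, Any] = {
    "appServicePlan": {"sku": "S1", "instanceCount": 1},
    "storage": {"sku": "Standard_LRS", "httpsOnly": True},
}

# Hypothetical per-environment overrides: only the values that differ.
ENVIRONMENT_OVERRIDES: dict[str, dict[str, Any]] = {
    "dev": {},
    "test": {"appServicePlan": {"instanceCount": 2}},
    "prod": {
        "appServicePlan": {"sku": "P1v3", "instanceCount": 3},
        "storage": {"sku": "Standard_GRS"},
    },
}


def merge(base: dict[str, Any], overrides: dict[str, Any]) -> dict[str, Any]:
    """Recursively apply overrides on top of a copy of base (base is not modified)."""
    result = deepcopy(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge(result[key], value)
        else:
            result[key] = deepcopy(value)
    return result


def definition_for(environment: str) -> dict[str, Any]:
    """Build the full definition for one environment from the shared base."""
    return merge(BASE_DEFINITION, ENVIRONMENT_OVERRIDES[environment])


for env in ENVIRONMENT_OVERRIDES:
    print(env, definition_for(env))
```

Because every environment starts from the same base, a setting such as `httpsOnly` cannot silently differ between test and production unless someone adds an explicit override.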

Dynamically provision environments. Once you have your IaC assets, you can then use them to provision new environments very easily. This can be enormously helpful when you’re testing your solution. For example, you could quickly provision a duplicate of your production environment that can then be used for security penetration tests, for load testing, or to help a developer track down a bug.

Scale production environments. Some customers have the requirement to provision multiple production environments. For example, you might be following the deployment stamps pattern, or you might need to create a new instance of your solution in another geographical region. IaC assets can be used to quickly provision all of the components of your solution again, and keep them consistent with the existing environment.

Disaster recovery. In some situations, IaC assets can be used as part of a disaster recovery plan. If you don’t need to keep copies of all of your infrastructure ready for a potential disaster, and can cope with a bit of downtime while you wait for your IaC assets to provision resources in another Azure region, then this can be worth considering. You’ll need to be careful to plan out how you handle disaster recovery for your databases, storage accounts, and other resources that store state, though. You also need to make sure that you fully test your disaster recovery plans and that they meet your requirements for how much downtime you can experience in a disaster scenario (which is often called your Recovery Time Objective).

Better understand your cloud resources

IaC can also help you better understand the state of your resources.

Audit changes. Changes to your IaC assets will be version-controlled. This means you can review each change that has happened, as well as who made it, and when. This can be very helpful if you’re trying to understand why a resource is configured a specific way.

Metadata. Many types of IaC assets let you add metadata, like code comments, to help explain why something is done a particular way. If your organisation has a culture of documenting your code, you can apply the same principles to your infrastructure.

Keep everything together. It’s pretty common for a developer to work on a feature that will require both code changes and infrastructure changes. By keeping your infrastructure defined as code, you’ll be able to group these together and see the relationship. For example, if you see a change to an IaC asset on a feature branch or in a pull request, you’ll have a clearer understanding of what that change relates to.

Better understand Azure itself. The Azure portal is a great way to easily provision and configure resources, but it often simplifies the underlying resource model used. Using IaC will mean that you gain a much deeper understanding of what is happening in Azure and how to troubleshoot it if something isn’t working correctly. For example, if you provision a set of virtual machines manually in the Azure portal, you may not realise that there are actually lots of separate Azure resources provisioned – and that some of these can potentially be shared, which can help to simplify your ongoing operations. Another example is that when you provision a Key Vault instance through the portal, the person creating it will be given access to the vault automatically – which may not necessarily be what you want. Using IaC means you have explicit control.

Declarative and imperative IaC

Broadly speaking, there are two different models for IaC. Imperative IaC involves writing scripts, in a language like Bash, PowerShell, C# script files, or Python. These programmatically execute a series of steps to create or modify your resources. Declarative IaC instead involves writing a definition of how you want your environment to look; the tooling then figures out how to make this happen by inspecting your current state, comparing it to the target state you’ve requested, and applying the differences. There’s a good discussion of imperative and declarative IaC here.
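The declarative loop described above (inspect the current state, compare it with the target state, apply the differences) can be sketched in a few lines of Python. This is a toy model with hypothetical resource names, not how ARM or Terraform are implemented. It does show why declarative tooling is naturally idempotent: a second run finds nothing to change, and a manual change is detected and reverted. An imperative script that always issues “create” calls may fail or create duplicates on a rerun unless it adds its own existence checks.

```python
from __future__ import annotations

from typing import Any


def plan(current: dict[str, dict[str, Any]],
         desired: dict[str, dict[str, Any]]) -> list[tuple[str, str]]:
    """Compare current and desired state and list the actions needed.

    Resources that exist but are absent from the desired state are left alone.
    """
    actions: list[tuple[str, str]] = []
    for name, properties in desired.items():
        if name not in current:
            actions.append(("create", name))
        elif current[name] != properties:
            actions.append(("update", name))
    return actions


def reconcile(current: dict[str, dict[str, Any]],
              desired: dict[str, dict[str, Any]]) -> list[tuple[str, str]]:
    """Apply the planned actions to `current` in place and return what was done."""
    actions = plan(current, desired)
    for _action, name in actions:
        # Both create and update converge on a copy of the desired properties.
        current[name] = dict(desired[name])
    return actions


desired = {"vnet": {"addressSpace": "10.0.0.0/16"}, "vault": {"sku": "standard"}}
current: dict[str, dict[str, Any]] = {}

print(reconcile(current, desired))  # [('create', 'vnet'), ('create', 'vault')]
print(reconcile(current, desired))  # [] : a rerun is a no-op
current["vault"]["sku"] = "premium"  # simulate a manual change made in the portal
print(reconcile(current, desired))  # [('update', 'vault')] : drift is corrected
```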

There are great Azure tooling options for both models. For imperative IaC you can use the Azure CLI or PowerShell cmdlets. For declarative IaC you can use Azure Resource Manager (ARM) templates – or, in the near future, the new ARM template language called Bicep, which is my personal favourite option. Another popular choice is Terraform – there’s a good comparison of ARM templates and Terraform in the Azure Well-Architected Framework. There are plenty of other community and commercial tools around too, including Pulumi.

I prefer using declarative IaC approaches – it can sometimes be a little tricky to write imperative scripts that will work consistently and will do exactly what you expect every time. I also feel like you get the most benefit out of IaC when you use declarative tooling. My personal choice is to use ARM templates (or soon, Bicep) for my IaC. However, the choice is yours.

How to get started with IaC

Hopefully these reasons are enough to convince you that IaC is worth the initial upfront investment in time – you’ll need to be prepared to create your IaC assets, and to create a good process for your release and the necessary pipelines to support it.

First, explore and try out a few tools to see which will work best for your team. If you don’t have any experience with IaC already then I recommend you start using Bicep – there’s a great tutorial on how to get started. Try creating a simple dummy solution to see how the end-to-end process works.

Next, try creating some IaC assets for your actual solution. How you do this will depend a little on your situation:

- If you’re working on a brand new solution then try to adopt the discipline of only deploying through IaC assets, and consider using the ARM template ‘complete’ deployment mode to help to maintain this discipline.

- If you’ve got existing Azure resources then I recommend following a hybrid approach and slowly migrating to IaC assets. Start by creating IaC assets for a few small pieces of your solution, get those working, and then add more and more until you are deploying everything in code. At first, make sure you are doing incremental deployments so that you don’t accidentally destroy any existing production resources that you haven’t yet added to your IaC assets.
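The difference between the two ARM deployment modes is what makes the advice above matter. In incremental mode, resources in the resource group that are not in the template are left unchanged. In complete mode, they are deleted. The Python sketch below is a simplified model of that rule only; it ignores how ARM actually applies property changes. The resource names are hypothetical, with `sql` standing in for a production resource not yet added to the IaC assets.

```python
from __future__ import annotations

from typing import Any


def deployment_actions(current: dict[str, dict[str, Any]],
                       template: dict[str, dict[str, Any]],
                       mode: str) -> dict[str, list[str]]:
    """Simplified model of what an ARM deployment would do in each mode."""
    if mode not in ("incremental", "complete"):
        raise ValueError(f"unknown deployment mode: {mode}")
    actions: dict[str, list[str]] = {"create": [], "update": [], "delete": []}
    for name, properties in template.items():
        if name not in current:
            actions["create"].append(name)
        elif current[name] != properties:
            actions["update"].append(name)
    if mode == "complete":
        # Complete mode removes anything in the resource group the template doesn't mention.
        actions["delete"] = [name for name in current if name not in template]
    return actions


existing = {"web": {"sku": "S1"}, "sql": {"sku": "S0"}}  # sql is not in IaC yet
template = {"web": {"sku": "S2"}}

print(deployment_actions(existing, template, "incremental"))
# {'create': [], 'update': ['web'], 'delete': []}
print(deployment_actions(existing, template, "complete"))
# {'create': [], 'update': ['web'], 'delete': ['sql']}
```

For a brand new solution, that deletion behaviour is exactly what keeps complete mode honest: anything not declared in code does not survive the next deployment.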

You’ll also need to build a pipeline to deploy the IaC assets. If you use ARM templates or Bicep, you can integrate these with Azure Pipelines. Make sure that any new resources are created and managed completely within your IaC assets and deployed using your pipelines.

You should also remember to use good coding practices in your IaC assets. Use elements like Bicep modules to help you organise your assets into separate, composable files. Make sure you follow the best practices for ARM templates.

Keep iterating and improving your IaC workflow, adding more and more of your solution to your IaC assets and deployment pipeline. Pretty soon you will start to see all of the benefits of IaC in your own team.

by Contributed | Jan 18, 2021 | Technology

This article is contributed. See the original author and article here.

As we know, there are two kinds of operations for program threads: asynchronous and synchronous.

These are the definitions commonly found online:

Asynchronous operation means the process operates independently of other processes.

Synchronous operation means the process runs only after a resource or another process has completed or been handed off.

So, is it better to receive messages asynchronously from Azure Service Bus?

Prerequisites

Before we start, please read these documents: Service Bus asynchronous messaging and Azure Service Bus messaging receive modes.

From the above prerequisites, we learn the following:

Azure Service Bus supports both asynchronous and synchronous messaging patterns. Applications typically use asynchronous messaging patterns to enable several communication scenarios.

This test is based on the Service Bus PeekLock receive mode. Here is some background on how PeekLock receive mode works.

In PeekLock receive mode, Service Bus:

- Finds the next message to be consumed.

- Locks it to prevent other consumers from receiving it.

- Returns the message to the application.

A common exception in Service Bus is lock expiration. It occurs when processing a message takes longer than the lock duration, which can happen for many reasons, such as high latency in the receiving application. This post reproduces the lock-expired exception while receiving messages both asynchronously and synchronously. Let’s run a test!

Test Entities:

I use the same queue for both tests. Its Max delivery count is 1. If you are interested in how “Max delivery count” is used, see Service Bus exceeding MaxDeliveryCount.

The message lock duration is 30 s.
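To make the PeekLock steps and the test settings concrete, here is a simplified in-memory model in Python. It has a 30 s lock duration and a Max delivery count of 1. This is not the Service Bus SDK. The class and method names are hypothetical, the clock is passed in explicitly so the behaviour is easy to follow, and features such as lock renewal are ignored. It shows the three steps (find, lock, return), a completion failing once the lock has expired, and the message then moving to the dead-letter queue because its delivery count has reached the maximum.

```python
from __future__ import annotations

from dataclasses import dataclass, field


class LockLostError(Exception):
    """Raised when settling a message whose lock has already expired."""


@dataclass(eq=False)  # compare messages by identity, not by body
class Message:
    body: str
    delivery_count: int = 0
    locked_until: float | None = None


@dataclass
class PeekLockQueue:
    lock_duration: float = 30.0
    max_delivery_count: int = 1
    active: list[Message] = field(default_factory=list)
    dead_letter: list[Message] = field(default_factory=list)

    def _release_expired(self, now: float) -> None:
        for msg in list(self.active):
            if msg.locked_until is not None and now >= msg.locked_until:
                msg.locked_until = None  # lock expired: message is visible again
                if msg.delivery_count >= self.max_delivery_count:
                    self.active.remove(msg)  # no deliveries left: dead-letter it
                    self.dead_letter.append(msg)

    def receive(self, now: float) -> Message | None:
        self._release_expired(now)
        for msg in self.active:  # 1) find the next unlocked message
            if msg.locked_until is None:
                msg.locked_until = now + self.lock_duration  # 2) lock it
                msg.delivery_count += 1
                return msg  # 3) return it to the application
        return None

    def complete(self, msg: Message, now: float) -> None:
        self._release_expired(now)
        if msg not in self.active or msg.locked_until is None:
            raise LockLostError("lock expired")
        self.active.remove(msg)


queue = PeekLockQueue(active=[Message("m1"), Message("m2")])
first = queue.receive(now=0.0)
queue.complete(first, now=5.0)        # settled within the 30 s lock: succeeds
second = queue.receive(now=5.0)
try:
    queue.complete(second, now=40.0)  # 35 s after the lock was taken
except LockLostError as err:
    print(err)                        # lock expired
print([m.body for m in queue.dead_letter])  # ['m2']
```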

My Program:

I use different .NET functions to receive messages in each program. Functions whose names end in “Async”, such as ReceiveBatchAsync, work asynchronously.

To simulate a large volume of messages, I use the batch function to receive 1000 messages at once.

- Here is the program that receives messages in Asynchronous patterns.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using Microsoft.ServiceBus.Messaging;

namespace SendReceiveQueue
{
    class Program
    {
        static string connectionString = "<your connection string>";
        static string queueName = "<queue name>";

        static void Main(string[] args)
        {
            MainAsync().GetAwaiter().GetResult();
        }

        public static async Task MainAsync()
        {
            // Create a receiver on the queue (PeekLock is the default receive mode)
            QueueClient receiveClient = QueueClient.CreateFromConnectionString(connectionString, queueName);

            var receiveTimestamp = DateTimeOffset.UtcNow.ToUnixTimeMilliseconds();
            Console.WriteLine("Receiving messages, timestamp: {0}", receiveTimestamp);

            // Receive up to 1000 messages in one call; all of them are locked at the same time
            IEnumerable<BrokeredMessage> messageList = await receiveClient.ReceiveBatchAsync(1000);

            foreach (BrokeredMessage message in messageList)
            {
                try
                {
                    var messageTimestamp = DateTimeOffset.UtcNow.ToUnixTimeMilliseconds();
                    Console.WriteLine("Message {0}, time {1}", message.GetBody<string>(), messageTimestamp);

                    // Settle the message; this throws if its lock has already expired
                    await message.CompleteAsync();
                }
                catch (Exception ex)
                {
                    var errorTimestamp = DateTimeOffset.UtcNow.ToUnixTimeMilliseconds();
                    Console.WriteLine("Abandon message, timestamp: {0}, error message: {1}", errorTimestamp, ex.Message);
                    try
                    {
                        await message.AbandonAsync();
                    }
                    catch (MessageLockLostException)
                    {
                        // The lock is already gone, so there is nothing left to abandon
                    }
                }
            }

            await receiveClient.CloseAsync();
        }
    }
}
```

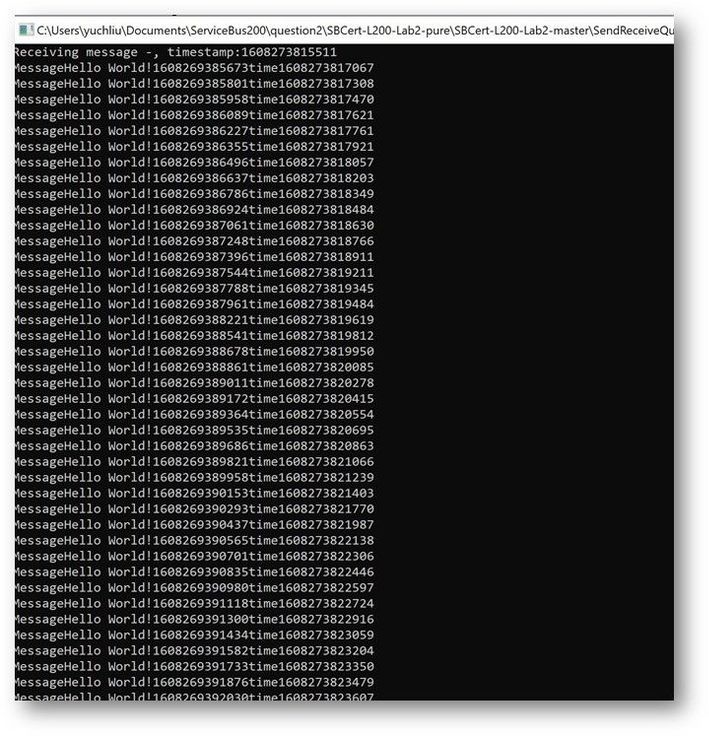

This is the result. The average time to receive a message is 200 ms to 300 ms.

- And this is the code for receiving messages with the synchronous messaging pattern.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.ServiceBus.Messaging;

namespace SendReceiveQueue
{
    class Program
    {
        static string connectionString = "<your connection string>";
        static string queueName = "<queue name>";

        static void Main(string[] args)
        {
            MainTest();
        }

        static void MainTest()
        {
            // Create a receiver on the queue (PeekLock is the default receive mode)
            QueueClient receiveClient = QueueClient.CreateFromConnectionString(connectionString, queueName);

            var receiveTimestamp = DateTimeOffset.UtcNow.ToUnixTimeMilliseconds();
            Console.WriteLine("Receiving messages, timestamp: {0}", receiveTimestamp);

            // Receive up to 1000 messages in one call; all of them are locked at the same time
            IEnumerable<BrokeredMessage> messageList = receiveClient.ReceiveBatch(1000);

            foreach (BrokeredMessage message in messageList)
            {
                try
                {
                    var messageTimestamp = DateTimeOffset.UtcNow.ToUnixTimeMilliseconds();
                    Console.WriteLine("Message {0}, time {1}", message.GetBody<string>(), messageTimestamp);

                    // Blocks the thread until the message is settled; throws if its lock has expired
                    message.Complete();
                }
                catch (Exception ex)
                {
                    var errorTimestamp = DateTimeOffset.UtcNow.ToUnixTimeMilliseconds();
                    Console.WriteLine("Abandon message, timestamp: {0}, error message: {1}", errorTimestamp, ex.Message);
                    try
                    {
                        message.Abandon();
                    }
                    catch (MessageLockLostException)
                    {
                        // The lock is already gone, so there is nothing left to abandon
                    }
                }
            }

            receiveClient.Close();
            Console.Read();
        }
    }
}
```

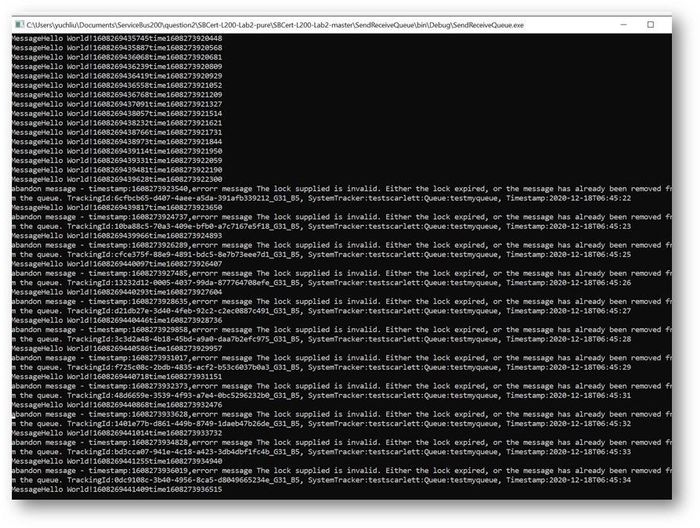

This is the result. At first, messages also complete within 200 ms to 300 ms, but after a while the program reports “lock expired” errors.

Why didn’t we get any errors when using the async pattern in this program? Why did we get the “lock expired” exception when using the sync pattern?

This exception is most likely when receiving messages with the batch function, because all 1000 messages are received in one operation. In PeekLock receive mode, Service Bus locks all 1000 messages at the same time, so every lock starts counting down at once. In the asynchronous pattern, messages are completed without blocking: the await keyword provides a non-blocking way to start a task and then continue execution when that task has completed. In this test, that saved message completion time.

With the synchronous pattern, the messages are completed one by one and each call blocks until it returns. Once the total waiting time exceeds the 30 s lock duration, the remaining messages fail with a “lock expired” error.

For details on how asynchronous programming works in C#, see Asynchronous programming in C# | Microsoft Docs.
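The lock arithmetic behind these results can be checked with a back-of-the-envelope model in Python. It assumes that all messages in the batch are locked at the same instant (one batch receive call), and that each settle call takes a fixed, hypothetical latency in milliseconds. Settling happens in waves of `concurrency` calls. In both programs above, the next `Complete`/`CompleteAsync` call only starts after the previous one has returned, because `await` inside the `foreach` still waits for each task. A `concurrency` above 1 therefore models a hypothetical change, such as starting several completions and awaiting them together with `Task.WhenAll`.

```python
def expired_count(batch_size: int, settle_latency_ms: int,
                  lock_duration_ms: int, concurrency: int = 1) -> int:
    """Count messages whose settle call would finish after their lock expires.

    All locks start at t = 0 (one batch receive); messages are settled in waves
    of `concurrency` calls, and each wave takes `settle_latency_ms`.
    """
    if concurrency < 1:
        raise ValueError("concurrency must be at least 1")
    expired = 0
    for index in range(batch_size):
        finish_ms = (index // concurrency + 1) * settle_latency_ms
        if finish_ms > lock_duration_ms:
            expired += 1
    return expired


# Hypothetical 50 ms per settle call and the 30 s lock used in this test.
print(expired_count(1000, 50, 30_000))                   # 400 (one at a time)
print(expired_count(1000, 50, 30_000, concurrency=4))    # 0
print(expired_count(10_000, 50, 30_000, concurrency=4))  # 7600 (a larger batch still expires)
```

The last line matches the observation below: even when settling is faster, a large enough batch eventually outlives the lock.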

Test Result Summary

- The test results indicate that receiving messages with the asynchronous messaging pattern performs better than with the synchronous messaging pattern. We recommend the asynchronous pattern over the synchronous one.

- However, if we receive a larger number of messages in one batch operation, such as 10,000 messages, the program with the asynchronous messaging pattern also gets “lock expired” errors. As mentioned before, this exception can have many causes. That is also why Service Bus has a dead-letter queue: to prevent messages from being lost. If you are interested in this topic, you are welcome to leave a comment.

by Contributed | Jan 18, 2021 | Technology

This article is contributed. See the original author and article here.

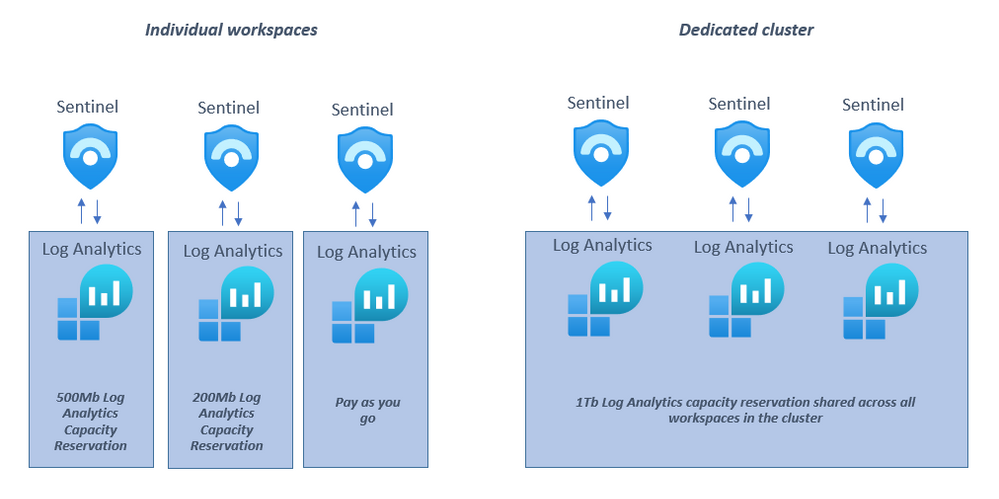

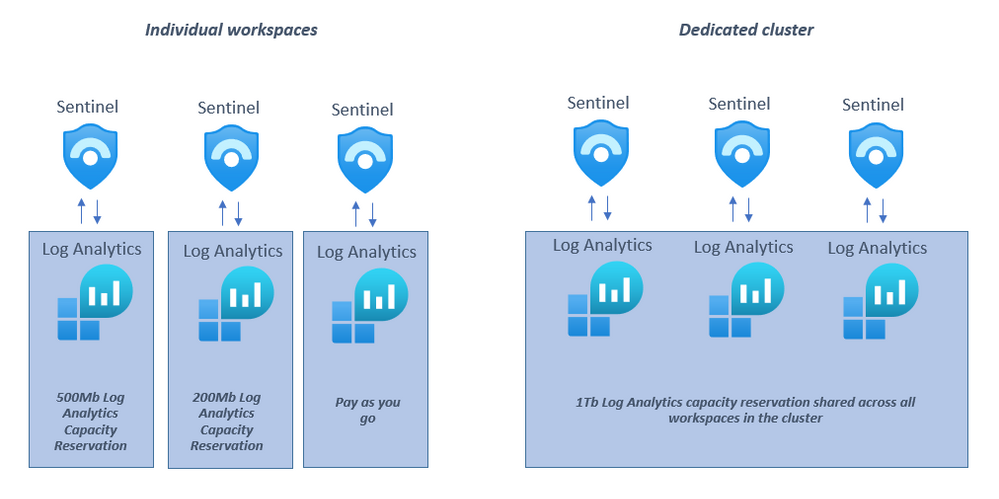

If you ingest over 1 TB a day into your Azure Sentinel workspace and/or have multiple Azure Sentinel workspaces in your Azure enrolment, you may want to consider migrating to a dedicated cluster, a recent addition to the deployment options for Azure Sentinel.

NOTE: Although this blog refers to a “dedicated cluster for Azure Sentinel”, the dedicated cluster being referred to is for Log Analytics, the underlying data store for Azure Sentinel. You may find official documents refer to Azure Monitor; Log Analytics is part of the wider Azure Monitor platform.

Overview

A dedicated cluster in Azure Sentinel does exactly what it says: you are given dedicated hardware in an Azure data center to run your Azure Sentinel instance. This enables several scenarios:

- Customer-managed Keys – Encrypt the cluster data using keys that are provided and controlled by the customer.

- Lockbox – Customers can control Microsoft support engineers’ requests to access their data.

- Double encryption – Protects against a scenario where one of the encryption algorithms or keys may be compromised. In this case, the additional layer of encryption continues to protect your data.

Additionally, multiple Azure Sentinel workspaces can be added to a dedicated cluster. There are several advantages to using a dedicated cluster from a Sentinel perspective:

- Cross-workspace queries will run faster if all the workspaces involved in the query are added to the dedicated cluster. NB: It is still recommended to have as few workspaces as possible in your environment. A dedicated cluster still retains the limit of 100 workspaces that can be included in a single cross-workspace query.

- All workspaces on the dedicated cluster share the Log Analytics capacity reservation set on the cluster (not the Sentinel capacity reservation), rather than each workspace needing its own Log Analytics capacity reservation. This can allow for cost savings and efficiencies. NB: By enabling a dedicated cluster you commit to a Log Analytics capacity reservation of 1 TB a day of ingestion.

Considering migrating to a dedicated cluster?

There are some considerations and limitations for using dedicated clusters:

- The maximum number of clusters per region and subscription is 2.

- All workspaces linked to a cluster must be in the same region as the cluster.

- The maximum number of workspaces linked to a cluster is 1000.

- You can link a workspace to your cluster and then unlink it. The number of workspace link operations on a particular workspace is limited to 2 in a period of 30 days.

- Link a workspace to a cluster ONLY after you have verified that the Log Analytics cluster provisioning has completed. Data sent to your workspace before provisioning completes will be dropped and won’t be recoverable.

- Moving a cluster to another resource group or subscription isn’t supported at the time of writing.

- Linking a workspace to a cluster will fail if the workspace is already linked to another cluster.
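If you plan to link several workspaces, it can help to turn the linking rules above into a pre-flight check that runs before any link request is sent. The Python sketch below encodes only the limits listed here. The classes, field names, workspace names and region are hypothetical; they are not an Azure SDK or Azure Monitor API. The current limits should always be confirmed in the official documentation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta

MAX_WORKSPACES_PER_CLUSTER = 1000
MAX_LINK_OPS_PER_30_DAYS = 2


@dataclass
class Cluster:
    name: str
    region: str
    provisioning_completed: bool
    linked_workspaces: set[str] = field(default_factory=set)


@dataclass
class Workspace:
    name: str
    region: str
    linked_cluster: str | None = None
    link_operations: list[datetime] = field(default_factory=list)  # past link times


def link_blockers(workspace: Workspace, cluster: Cluster, now: datetime) -> list[str]:
    """Return the reasons this link would break a documented limit (empty if none)."""
    problems = []
    if not cluster.provisioning_completed:
        problems.append("cluster provisioning has not completed")
    if workspace.region != cluster.region:
        problems.append("workspace and cluster are in different regions")
    if workspace.linked_cluster not in (None, cluster.name):
        problems.append("workspace is already linked to another cluster")
    if len(cluster.linked_workspaces) >= MAX_WORKSPACES_PER_CLUSTER:
        problems.append("cluster already has the maximum number of linked workspaces")
    window_start = now - timedelta(days=30)
    recent = [t for t in workspace.link_operations if t > window_start]
    if len(recent) >= MAX_LINK_OPS_PER_30_DAYS:
        problems.append("workspace has used its link operations for the last 30 days")
    return problems


now = datetime(2021, 1, 18)
cluster = Cluster("sentinel-cluster", "westeurope", provisioning_completed=True)
ws = Workspace("soc-ws", "westeurope",
               link_operations=[now - timedelta(days=3), now - timedelta(days=10)])
print(link_blockers(ws, cluster, now))
# ['workspace has used its link operations for the last 30 days']
```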

The great news is that you can retrospectively migrate to a dedicated cluster, so if this feature looks like it would be useful to your organization, you can find more information and migration steps here.

With thanks to @Ofer_Shezaf, @Javier Soriano and @Meir Mendelovich for their input into this blog post.

by Contributed | Jan 18, 2021 | Technology

This article is contributed. See the original author and article here.

React is one of the most widely used SPA (single-page application) frameworks right now, if not the biggest one, with 162k stars on GitHub: https://github.com/facebook/react

Knowing React is clearly an advantage when looking for your first job.

I’ve made my React book available as a GitHub repo so teachers and students can easily download it as a zip, clone it, or fork it if they’d like to help improve it.

Content

The book covers various aspects of React, such as:

– Fundamentals: how to create components, work with data and functions, and render data

– Redux: the pattern itself and all the things that go with it, like the store, actions, reducers, and sagas

– Advanced APIs and patterns like Context API, Render props, Hooks and more

– Testing, using Jest, Nock and React Testing library

Here’s the link to the GitHub repo: https://github.com/softchris/react-book
