by Contributed | Jan 15, 2021 | Technology

This article is contributed. See the original author and article here.

I am super thrilled to roll out a new (and detailed) migration guide for those of you who are migrating your Oracle workloads to our Azure Database for PostgreSQL managed database service.

Heterogeneous database migrations such as moving from Oracle to PostgreSQL are hard, multi-step projects, especially for complex applications. So why are so many teams migrating their Oracle workloads to Postgres these days? This article from Stratoscale, one of my favorite posts on the topic, explains why demand for these migrations is increasing. I highly recommend a thorough read to get a feel for where our industry currently stands on this topic. To summarize, some of the top reasons teams migrate from Oracle to Postgres include cost savings, a decision to transition to an open source culture, and a desire to improve efficiency by modernizing workloads and moving to the public cloud.

If you’re modernizing your entire workload (as opposed to just your database tier), then you’re probably looking to migrate all your dependencies (front-end apps, middleware components, etc.) so you can gain the advantages of moving to the cloud. In our experience, these tough migration challenges can only be solved by a combination of people (specifically, in-house migration experts), processes (such as pre-migration, migration, and post-migration to-dos and checklists), and technology (including tools to automate the migration).

With the new Oracle to Azure Database for PostgreSQL migration guide, we dive deep into this problem space to give you the best practices you will need to manage your database migration. You will also get a 360° view, across the people, process, and tools aspects, of what you can expect from an Oracle to Postgres migration journey.

In this four-part blog series, we’ll dive into the following aspects of Oracle to PostgreSQL migrations:

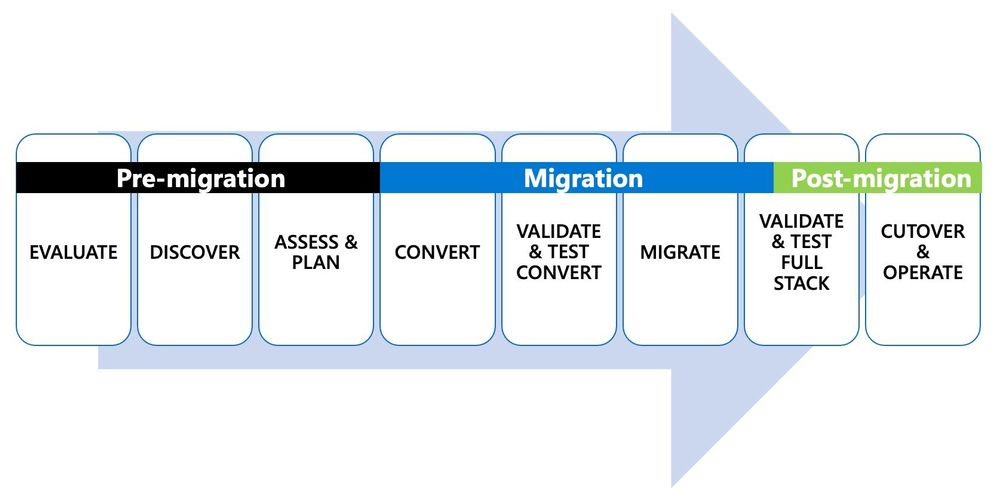

- Process – The journey map of an Oracle to PostgreSQL migration, and how it ties into this new migration guide

- Storage objects – Migrating database objects such as tables, constraints, indexes, etc.

- Code objects – Migrating database code objects (stored procedures, functions, triggers, etc.)

- Feature Emulations – Emulating various Oracle features in PostgreSQL

Before we dive into the contents of the migration guide and see how it can help you, let’s take a look at what happens in a typical Oracle to PostgreSQL migration. Note that the journey map itself applies to migrations to all deployment options offered by Azure Database for PostgreSQL: Single Server, Flexible Server, and Hyperscale (Citus). And while this migration guide is focused on moving from Oracle to our managed Postgres service on Azure (aka Azure Database for PostgreSQL), you will probably find it useful even if you are migrating from Oracle to self-managed Postgres deployments. (We want to live and breathe open source.)

Figure 1: Depiction of the 8 steps in the end-to-end journey of an Oracle to PostgreSQL migration.

The first 2 steps in the Oracle to Postgres migration journey: Evaluate & Discover

An important first step in approaching these migrations is to know what existing workloads you have and evaluate whether you need to do the migration at all. From our experience, those of you who choose to migrate from Oracle to Postgres do so for a bunch of different reasons as mentioned above.

Once you have evaluated why you want to migrate, the next step is discovering which workloads to migrate. You can discover workloads with a combination of tools and people who understand the full stack: both your application and your database. Given that migrations are done for the full stack, it is also important to understand the dependencies between the applications and databases in your portfolio. Discovering all possible workloads will help you:

- Understand the scope of the migration

- Evaluate migration strategies

- Assess engagement strategies

- Recognize opportunities to improve

This exercise will also help you identify workloads that need to remain on Oracle for various reasons. If that is the case, the good news is that you can still move those Oracle workloads to Azure and run your Oracle applications on Azure.
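Because an application usually has to move together with every database it touches, it can help to turn the discovered dependencies into candidate migration groups. The sketch below is a minimal illustration, assuming you have already inventoried which applications connect to which databases; the application and database names are hypothetical. It treats the inventory as an undirected graph and returns its connected components:

```python
from collections import defaultdict


def migration_groups(edges: list[tuple[str, str]]) -> list[set[str]]:
    """Group apps and databases that are linked, directly or transitively.

    `edges` holds hypothetical (application, database) pairs from discovery.
    Each returned set is a connected component: moving one member without
    the others would leave a cross-platform dependency behind.
    """
    graph: dict[str, set[str]] = defaultdict(set)
    for app, db in edges:
        graph[app].add(db)
        graph[db].add(app)

    seen: set[str] = set()
    groups: list[set[str]] = []
    for node in graph:
        if node in seen:
            continue
        # Iterative depth-first search collects one component.
        stack, component = [node], set()
        while stack:
            current = stack.pop()
            if current in component:
                continue
            component.add(current)
            stack.extend(graph[current] - component)
        seen |= component
        groups.append(component)
    return groups


inventory = [
    ("billing-app", "ORA_BILLING"),
    ("reporting-app", "ORA_BILLING"),
    ("reporting-app", "ORA_DW"),
    ("hr-portal", "ORA_HR"),
]
for group in migration_groups(inventory):
    print(sorted(group))
```

Each printed group is a candidate unit of migration; the grouping says nothing about effort, which is what the assessment step estimates.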

Step 3 is all about assessing the workload & planning your migration

Once you have pinpointed which workloads are candidates for migration to PostgreSQL and have a good idea of their dependencies, the next logical step is to assess the overall cost and effort of your Oracle to Postgres migration.

A fleet-wide analysis of your workloads, combined with a cost model for the database migration, will show how easy or difficult each migration will be. You might be wondering where this cost model is going to come from. I admit it’s not easy to build in some instances, but the good news is that the guide has a dedicated chapter on “Migration Effort Estimation”.
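To make the idea concrete, here is a minimal sketch of a fleet-wide ranking, assuming each workload already has an estimated number of cost units (for example, from an Ora2Pg assessment report). The workload names and unit counts are made up. The 5-minutes-per-unit default is borrowed from Ora2Pg’s `COST_UNIT_VALUE` setting, but treat it as an assumption to calibrate against your own past migrations, and note that the linear conversion is itself a simplification:

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    cost_units: float  # assumed input, e.g. taken from an assessment report


def estimate_hours(workload: Workload, minutes_per_unit: float = 5.0) -> float:
    """Convert abstract cost units into person-hours (assumed linear model)."""
    return workload.cost_units * minutes_per_unit / 60.0


def rank_by_effort(workloads: list[Workload], minutes_per_unit: float = 5.0):
    """Return (name, hours) pairs, cheapest first, as candidates to migrate first."""
    ranked = sorted(workloads, key=lambda w: w.cost_units)
    return [(w.name, round(estimate_hours(w, minutes_per_unit), 1)) for w in ranked]


fleet = [
    Workload("ORA_BILLING", 1440),
    Workload("ORA_HR", 96),
    Workload("ORA_DW", 6000),
]
print(rank_by_effort(fleet))
# [('ORA_HR', 8.0), ('ORA_BILLING', 120.0), ('ORA_DW', 500.0)]
```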

The cost model you create will help you decide which workloads to migrate, or at least which workloads to migrate first. At that point, all the details you collected in the earlier Evaluation step will help you assess:

- Fit – Whether your workload can really run on Postgres

- Automation – Which tools to use for the end-to-end database migration

- Execution – Whether you want to do the migration yourself or engage one of our awesome partners who do these Oracle to Postgres migrations day in and day out

Overall, this planning process puts you on the path to move successfully to PostgreSQL in the cloud. It enables your team to be productive throughout the migration and operate with ease once complete.

Step 4 starts the conversion process & creates a target environment in Postgres

While your developers, database administrators, and PMs may be involved in the initial assessment and planning stages, the conversion process is where technical teamwork is in full swing. In the case of heterogeneous database migrations from Oracle to Azure Database for PostgreSQL, you will need to:

- Convert schema & objects: Convert your source database schema and database code objects such as views, stored procedures, functions, indexes, etc. to a format compatible with Postgres.

- Convert SQL: If an ORM library (such as Hibernate, Doctrine, etc.) is not being used, you will need to scan application source code for embedded SQL statements and convert them to a format compatible with Postgres.

- Understand exceptions: Understand which objects from the database source and application code could not be parsed and converted.

- Track all the changes: Centrally track all conversions, emulations, or substitutions so they can be reused across all the workloads you migrate from Oracle to PostgreSQL, both currently and in the future.
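The “Convert SQL” and “Track all the changes” items can start small. The sketch below scans source text for a handful of well-known Oracle-only constructs and records each hit, with a suggested Postgres approach, in one shared registry. The pattern list is illustrative and far from complete, the suggestions still need human review, and a regex scan is no substitute for a real parser or a tool like Ora2Pg. The file name in the example is hypothetical:

```python
import re
from dataclasses import dataclass

# Illustrative subset only: construct name -> (regex, suggested PostgreSQL approach).
ORACLE_CONSTRUCTS = {
    "NVL": (r"\bNVL\s*\(", "COALESCE(...)"),
    "SYSDATE": (r"\bSYSDATE\b", "LOCALTIMESTAMP or clock_timestamp(); check semantics"),
    "DUAL": (r"\bFROM\s+DUAL\b", "drop FROM DUAL; SELECT without FROM is valid"),
    "ROWNUM": (r"\bROWNUM\b", "LIMIT or row_number() OVER (...)"),
    "CONNECT BY": (r"\bCONNECT\s+BY\b", "WITH RECURSIVE query"),
    "(+) join": (r"\(\+\)", "ANSI LEFT/RIGHT OUTER JOIN"),
}


@dataclass(frozen=True)
class Finding:
    source: str       # file or module the SQL was found in
    line_no: int      # 1-based line number within that source
    construct: str    # key from ORACLE_CONSTRUCTS
    suggestion: str   # proposed PostgreSQL replacement, to be reviewed by a person


def scan(source_name: str, text: str) -> list[Finding]:
    """Flag lines containing known Oracle-specific constructs (case-insensitive)."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for construct, (pattern, suggestion) in ORACLE_CONSTRUCTS.items():
            if re.search(pattern, line, flags=re.IGNORECASE):
                findings.append(Finding(source_name, line_no, construct, suggestion))
    return findings


# Central registry shared across workloads: construct -> every place it was seen.
registry: dict[str, list[Finding]] = {}


def record(findings: list[Finding]) -> None:
    for finding in findings:
        registry.setdefault(finding.construct, []).append(finding)


app_sql = """SELECT NVL(bonus, 0) FROM emp WHERE ROWNUM <= 10
SELECT SYSDATE FROM DUAL"""
record(scan("PayrollDao.java", app_sql))
for construct, hits in registry.items():
    print(construct, [(hit.source, hit.line_no) for hit in hits])
```

Keying the registry by construct rather than by workload is the design choice that makes a conversion decided once reusable for the next workload.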

Once the conversion process is complete, step 5 kicks in: validate and test. The converted objects in your app and your database need to undergo thorough testing to confirm functional correctness in the new Postgres environment. You also need to make sure the new Postgres environment meets the end-to-end performance and SLA requirements of your workload.

Step 6 is when you migrate data, and in step 7 you validate the full workload stack

When you reach this stage in the Oracle to Postgres migration, you will have a converted and tested Postgres environment you can migrate into. Your technical teams, who understand different aspects of the databases currently being used, prepare for data migration. Your teams will work to understand other requirements such as allowable downtime, plan for special considerations such as the need for bi-directional replication, and identify the tools and services to migrate the data.

Typically, to migrate data, you will use logical replication from Oracle to move the initial data and ongoing changes to Postgres; for heterogeneous migrations, logical replication is one of the few practical options. You can also validate data and test the full stack by restoring an instance of your new Postgres database to a given point in time using Azure Database for PostgreSQL point-in-time restore. Once you have this point-in-time copy of your Postgres target database, you can run an instance of the application against it to check for performance issues and data validity. This testing process confirms that everything, from functional correctness to performance baselines, is ready, so a cutover can be scheduled.
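For the data-validity check, one common approach (not one prescribed by the guide) is to compare row counts plus an order-independent fingerprint per table. This sketch assumes you have already fetched the rows from both sides with your own database drivers and normalized the values (dates, `NUMBER` precision, and Oracle’s treatment of empty strings as `NULL`) into comparable Python values; that normalization is usually the hard part:

```python
import hashlib


def table_fingerprint(rows) -> tuple[int, str]:
    """Return (row_count, digest) that does not depend on row order.

    Each row is hashed separately; the per-row digests are sorted and hashed
    again, so the result is stable regardless of the order each side returns.
    """
    row_digests = sorted(
        hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest() for row in rows
    )
    combined = hashlib.sha256("".join(row_digests).encode("ascii")).hexdigest()
    return len(row_digests), combined


def compare_tables(source_rows, target_rows) -> bool:
    """True when both sides hold the same multiset of (normalized) rows."""
    src_count, src_digest = table_fingerprint(source_rows)
    tgt_count, tgt_digest = table_fingerprint(target_rows)
    if src_count != tgt_count:
        print(f"row count mismatch: source={src_count} target={tgt_count}")
        return False
    if src_digest != tgt_digest:
        print("row contents differ")
        return False
    return True


# Hypothetical, already-normalized rows from Oracle and from the restored Postgres copy.
oracle_rows = [(1, "alice"), (2, "bob")]
postgres_rows = [(2, "bob"), (1, "alice")]
print(compare_tables(oracle_rows, postgres_rows))
```

For large tables you would typically push the hashing into SQL on each side rather than pulling every row into Python; the comparison logic stays the same.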

Step 8 is when you cut over your workload to Postgres

On cutover day, in unidirectional replication scenarios, application writes are stopped so that the Postgres database can fully catch up, that is, until the overall logical replication latency from Oracle to Postgres is zero. Once the target has fully caught up and been validated, all your migration jobs are stopped.
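The “wait until latency is zero” step is easy to script as a guard that runs after application writes are stopped and before anyone switches connection strings. In this sketch, `get_lag_seconds` is a hypothetical callable you would back with whatever lag metric your replication tool exposes; it is not a real Azure or Oracle API. The timeout, poll interval, and number of consecutive zero readings are illustrative values:

```python
import time
from typing import Callable


def wait_for_catch_up(
    get_lag_seconds: Callable[[], float],
    timeout_s: float = 900.0,
    poll_interval_s: float = 5.0,
    consecutive_zero_reads: int = 3,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll replication lag until it stays at zero, or give up at the timeout.

    Requiring several consecutive zero readings guards against a momentary
    zero between bursts of in-flight changes. Returns True when it is safe to
    proceed to cutover validation, False if the timeout was reached first.
    """
    deadline = time.monotonic() + timeout_s
    zero_streak = 0
    while time.monotonic() < deadline:
        lag = get_lag_seconds()
        zero_streak = zero_streak + 1 if lag <= 0 else 0
        if zero_streak >= consecutive_zero_reads:
            return True
        sleep(poll_interval_s)
    return False
```

The `sleep` parameter is there so the guard can be exercised without real waiting; in a runbook you would leave it at its default. A `False` result is a natural input to the rollback criteria described below.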

For applications interfacing with an ORM layer, the Oracle database connection string is changed to point to the new Postgres database, and writes are resumed to complete the cutover. For applications that were rearchitected, the new instance of the application now takes production traffic, pointing to the new Postgres database. All cloud code deployment best practices need to be followed in this step. Rollback infrastructure should also be in place, along with clear rollback steps and criteria for when to roll back. Your team should be well trained and ready to operate the new infrastructure before the cutover is complete.

As you can see, the process is pretty involved and requires proper planning to utilize a combination of people + process + tools to be successful.

Despite the effort taken to do these tough migrations, I want to re-emphasize that there are so very many benefits to moving your Oracle workloads to Postgres in the cloud. Given the importance of these database migrations and our experience helping many of you to navigate this landscape, we wanted to give back to the community and thus the idea behind the Oracle to Azure Database for PostgreSQL migration guide was born.

Welcome to the new Oracle to Azure Database for PostgreSQL Migration Guide

From our experience working with those of you who have already done an Oracle to Postgres migration—specifically migrations to our Azure Database for PostgreSQL managed service—people spend more than 60% of the entire migration project in the conversion (step 4) and migration (step 6) phases described in the journey map above.

And as you probably know, there are already some outstanding open source tools, such as Ora2Pg, available in the Postgres community. These tools help plan, convert, and perform these migrations at scale. However, some Oracle features will still need manual intervention to ensure they were converted as expected. To help you with this challenge, we created this Oracle to Postgres guide, based on our Azure team’s experience helping teams migrate their Oracle workloads to Azure Database for PostgreSQL.

The details given in our new migration guide apply fully for migrations to self-managed Postgres as well as our Single Server/Flexible Server deployment options for Azure Database for PostgreSQL. A subset of the best practices discussed in this guide apply to Oracle migrations into our Hyperscale (Citus) deployment option as well. If you’d like to dive deeper into the specifics of Hyperscale (Citus) as a potential target platform for your Oracle workloads, feel free to reach out to us to discuss specifics.

Our goals in creating this Oracle to Postgres migration guide were to give you:

- Go-to, definitive resource: Record all ideas, gotchas, and best practices in one place.

- Best practices: Walk through the best practices of setting up the infrastructure to pull off migrations at scale.

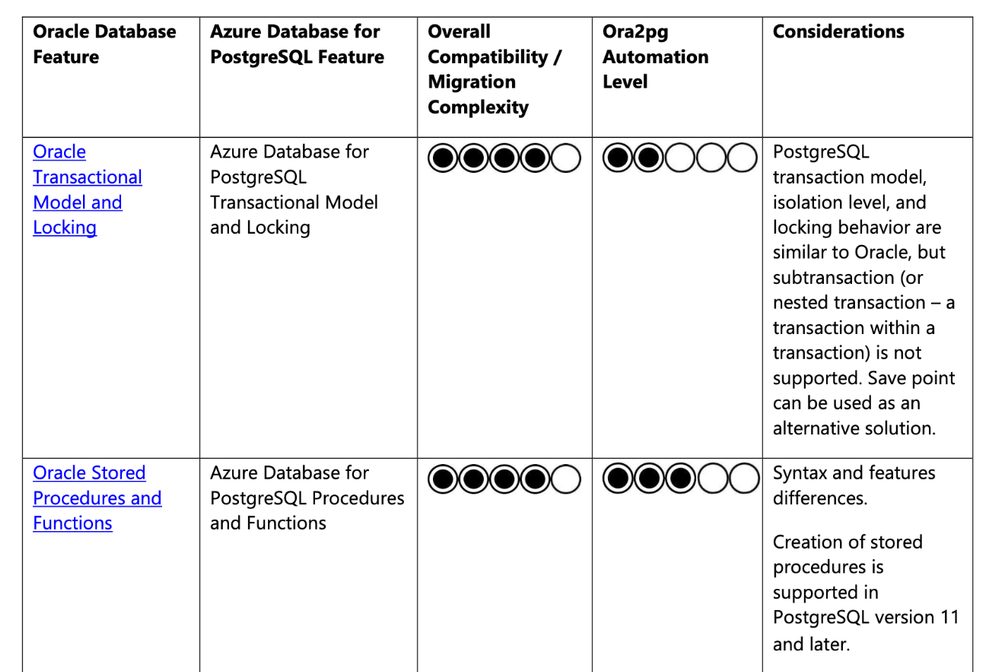

- Feature-by-feature guidance: For each feature in Oracle, provide best practice examples (with code snippets) for their use in Oracle and guidance on how that feature will work in PostgreSQL.

- Postgres version comparisons: Show how PostgreSQL is evolving and compare PostgreSQL 11, 12, and 13 to help you make good decisions from a migration standpoint.

- What to expect from Ora2Pg: Dive deep into how complex each Oracle feature is to migrate with Ora2Pg’s automation capabilities, so you know what to expect when you use Ora2Pg to plan your migration.

Fair warning, folks: all this work resulted in a 300+ page guide that walks you through multiple facets of an Oracle to PostgreSQL conversion and migration.

I know, that sounds looooong. However, the good news is that we put a lot of thought into the structure of our Oracle to Postgres migration guide to make it easy to look things up and easy to navigate. You don’t need to read through all pages to get value out of the guide. Based on where you are in your migration journey, you can easily navigate to specific chapters within the guide, get the info you need, and move on to next steps.

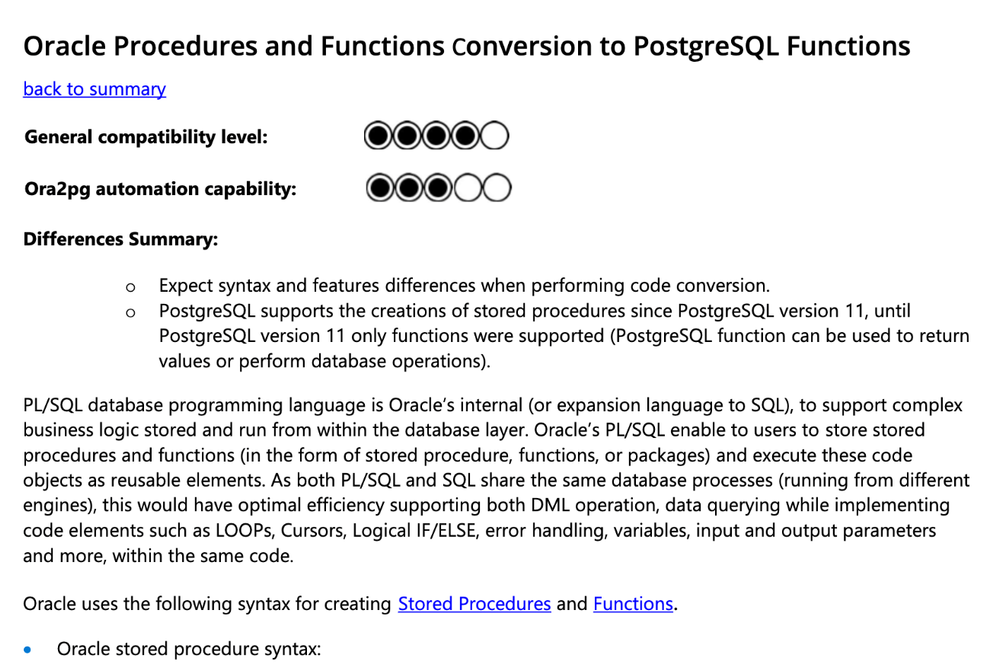

Here are a few screenshots of different parts of the Oracle to Postgres migration guide to give you a visual sense of the content:

Figure 2: Migration Guide Summary Table

Figure 3: Consume content and go back to summary table for the next lookup.

Are you ready to migrate from Oracle to Postgres?

Now that you have a good overview of the journey, go ahead and check out our Oracle to Postgres migration guide. If you would like to chat with us about migrations to PostgreSQL in general, or have questions about our PostgreSQL managed service, you can always reach our product team via email at Ask AzureDB for PostgreSQL. I also love all the updates on our Twitter handle (@AzureDBPostgres) and would recommend following us for more Postgres news and updates. We would love to hear from you. Happy learning.

By now, you are hopefully aware of the TLS 1.0/1.1 deprecation efforts underway across the industry and in Microsoft 365 in particular. Head over to our documentation for more details and references if you need a refresher! Also check out this blog entry to see how you can use reporting in Exchange Online to get an overview of the TLS versions used by mail submitted to your tenant. This topic may be super relevant to you because, as confirmed by message center post MC229914, enforcement of the TLS 1.0 and TLS 1.1 deprecation began for Exchange Online mail flow endpoints on January 11th, 2021, and the rollout will continue over the following weeks and months. In short, once the rollout is complete, we will no longer accept TLS 1.0 or TLS 1.1 email connections from external sources. Also note that Exchange Online never uses TLS 1.0 or 1.1 to send outbound email.

We wanted to talk about what this means for SMTP traffic destined for Exchange Online in particular. What happens if a server on your side can only use TLS 1.0 with SMTP? Will sending fail, and if so, how do you notice that TLS 1.0 or TLS 1.1 is the root cause of your email problems? Several variables affect the answer, and we will try to cover the most frequent scenarios.

Before diving into further details, keep in mind that generally speaking, the TLS implementation in Exchange on-premises or Exchange Online is done opportunistically. This means:

- For receiving mail into Exchange: If the sending server does not support TLS, or if the TLS negotiation fails, Exchange Online will still accept messages unencrypted and without TLS (provided the sending server’s configuration allows that).

- For sending mail from Exchange: For outbound email, if the receiving server does not support TLS (does not advertise the STARTTLS verb), Exchange on-premises and Exchange Online will send email without TLS (provided TLS is not forced on the send connector or outbound connector).

Another point to keep in mind is that Exchange always attempts to negotiate the highest TLS version that both servers have enabled. Once this version is selected during the TLS handshake, Exchange does not attempt a lower version of TLS/SSL that might also be enabled on the other server. If there is a failure during communication, Exchange instead re-attempts the delivery without TLS. Our previously published three-part blog series (Exchange Server TLS guidance Part 1, Part 2, and Part 3) extensively covered how components like Schannel, WinHTTP, .NET, etc. work together to decide which TLS version Exchange Server should use during TLS handshakes.
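The opportunistic rules above, combined with the connector’s RequireTLS setting that comes up in the scenarios below, can be summarized as a small decision function. This is a simplified model written for illustration, not Exchange’s actual code: it ignores retry schedules, certificate validation, and cipher selection:

```python
from enum import Enum


class Outcome(Enum):
    SEND_WITH_TLS = "send the message over TLS"
    SEND_WITHOUT_TLS = "send the message without TLS (new session if a handshake failed)"
    QUEUE_AND_RETRY = "keep the message queued and retry with TLS later"


def next_step(advertises_starttls: bool, handshake_ok: bool, require_tls: bool) -> Outcome:
    """Simplified model of opportunistic TLS on one SMTP hop.

    `handshake_ok` only matters when the receiver advertises STARTTLS.
    A failed handshake never leads to a retry at a lower TLS version:
    the only fallback is no TLS at all, and only when TLS is optional.
    """
    if advertises_starttls and handshake_ok:
        return Outcome.SEND_WITH_TLS
    if require_tls:
        return Outcome.QUEUE_AND_RETRY
    return Outcome.SEND_WITHOUT_TLS
```

For example, a default send connector (“RequireTLS: false”) on a server limited to TLS 1.1 maps to `next_step(True, False, False)`, which is `SEND_WITHOUT_TLS`, while a connector with “RequireTLS: True” maps to `next_step(True, False, True)`, which is `QUEUE_AND_RETRY`.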

Besides TLS versions, another factor that we tend to overlook is the set of cipher suites supported by Office 365. Even if a server or device uses TLS 1.2, it can still run into mail flow issues if it does not support any of the cipher suites on the list published for Office 365.

Let us look at the details of each scenario!

3rd party SMTP server sending to Exchange Online

The experience here will mostly depend on the sending server’s implementation. In most cases, there should be no impact: once the TLS 1.0 attempt fails, the sender should fall back to not using TLS at all and send the message unencrypted. If the sender relies solely on TLS 1.0 or TLS 1.1 and cannot send unencrypted, what happens again depends on the sending server’s implementation. The mail might remain queued while the sender keeps retrying, and ultimately the sending server should generate an error or an NDR after the message expiration timeout.

Exchange server (external to the organization) sending to Exchange Online

This applies to the case where your Exchange servers in contoso.com are sending to a different organization, let’s say fabrikam.com, which is hosted in Exchange Online. For most organizations, mail flow will not break. This is because send connectors in Exchange are created by default with the setting “RequireTLS: false”, meaning they will attempt a TLS connection if the remote party supports it, but if TLS negotiation fails, they will simply fall back to not using TLS and send anyway. The SMTP Send protocol logs will contain entries that resemble the following:

You will see that the first attempt to send the message to Office 365 fails with the error “TLS negotiation failed with error SocketError”:

#Fields: date-time,connector-id,session-id,sequence-number,local-endpoint,remote-endpoint,event,data,context

2021-01-11T16:43:14.811Z,Connector2Fabrikam,08D8B64FC6449F2A,0,,10.1.0.16:25,*,SendRoutingHeaders,Set Session Permissions

2021-01-11T16:43:14.811Z,Connector2Fabrikam,08D8B64FC6449F2A,1,,10.1.0.16:25,*,,attempting to connect

2021-01-11T16:43:14.817Z,Connector2Fabrikam,08D8B64FC6449F2A,2,10.0.0.16:6933,10.1.0.16:25,+,,

2021-01-11T16:43:14.969Z,Connector2Fabrikam,08D8B64FC6449F2A,3,10.0.0.16:6933,10.1.0.16:25,<,"220 BN3USG02FT012.mail.protection.office365.us Microsoft ESMTP MAIL Service ready at Mon, 11 Jan 2021 17:43:14 +0100",

2021-01-11T16:43:14.969Z,Connector2Fabrikam,08D8B64FC6449F2A,4,10.0.0.16:6933,10.1.0.16:25,>,EHLO exc16.contoso.com,

2021-01-11T16:43:15.012Z,Connector2Fabrikam,08D8B64FC6449F2A,5,10.0.0.16:6933,10.1.0.16:25,<,250 BN3USG02FT012.mail.protection.office365.us Hello [10.0.0.16] SIZE 37748736 PIPELINING DSN ENHANCEDSTATUSCODES STARTTLS X-ANONYMOUSTLS AUTH NTLM X-EXPS GSSAPI NTLM 8BITMIME BINARYMIME CHUNKING XRDST,

2021-01-11T16:43:15.013Z,Connector2Fabrikam,08D8B64FC6449F2A,6,10.0.0.16:6933,10.1.0.16:25,>,STARTTLS,

2021-01-11T16:43:15.016Z,Connector2Fabrikam,08D8B64FC6449F2A,7,10.0.0.16:6933,10.1.0.16:25,<,220 2.0.0 SMTP server ready,

2021-01-11T16:43:15.016Z,Connector2Fabrikam,08D8B64FC6449F2A,8,10.0.0.16:6933,10.1.0.16:25,*," CN=mail.contoso.com CN=R3, O=Let's Encrypt, C=US 03C6CCE6D57C1D2DA908BF69EBD10963AE74 AF15A9798388DD9C0C03FEBC897025CD76963178 2020-12-05T09:46:36.000Z 2021-03-05T09:46:36.000Z mail.contoso.com;autodiscover.contoso.com;",Sending certificate Subject Issuer name Serial number Thumbprint Not before Not after Subject alternate names

2021-01-11T16:43:15.043Z,Connector2Fabrikam,08D8B64FC6449F2A,9,10.0.0.16:6933,10.1.0.16:25,*,,TLS negotiation failed with error SocketError

2021-01-11T16:43:15.043Z,Connector2Fabrikam,08D8B64FC6449F2A,10,10.0.0.16:6933,10.1.0.16:25,-,,Remote

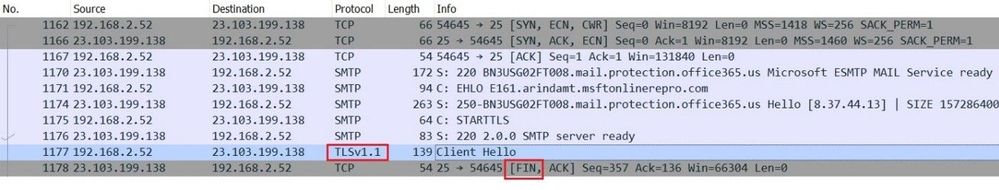

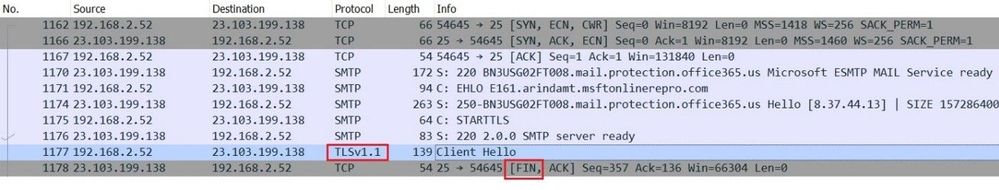

A network capture clearly shows the reason behind the failure. As you can see in the screenshot, after the STARTTLS verb is exchanged, the sending server tries to negotiate transport layer security using TLS 1.1, and the Exchange Online server immediately disconnects the session with a FIN (finish) flag:

However, immediately afterward, the sending server should fall back to not using TLS and send the email anyway, and Exchange Online will accept it:

2021-01-11T16:43:15.047Z,Connector2Fabrikam,08D8B64FC6449F2B,0,,10.1.0.16:25,*,SendRoutingHeaders,Set Session Permissions

2021-01-11T16:43:15.047Z,Connector2Fabrikam,08D8B64FC6449F2B,1,,10.1.0.16:25,*,,attempting to connect

2021-01-11T16:43:15.050Z,Connector2Fabrikam,08D8B64FC6449F2B,2,10.0.0.16:6934,10.1.0.16:25,+,,

2021-01-11T16:43:15.053Z,Connector2Fabrikam,08D8B64FC6449F2B,3,10.0.0.16:6934,10.1.0.16:25,<,"220 BN3USG02FT012.mail.protection.office365.us Microsoft ESMTP MAIL Service ready at Mon, 11 Jan 2021 17:43:14 +0100",

2021-01-11T16:43:15.053Z,Connector2Fabrikam,08D8B64FC6449F2B,4,10.0.0.16:6934,10.1.0.16:25,>,EHLO exc16.contoso.com,

2021-01-11T16:43:15.055Z,Connector2Fabrikam,08D8B64FC6449F2B,5,10.0.0.16:6934,10.1.0.16:25,<,250 BN3USG02FT012.mail.protection.office365.us Hello [10.0.0.16] SIZE 37748736 PIPELINING DSN ENHANCEDSTATUSCODES STARTTLS X-ANONYMOUSTLS AUTH NTLM X-EXPS GSSAPI NTLM 8BITMIME BINARYMIME CHUNKING XRDST,

2021-01-11T16:43:15.058Z,Connector2Fabrikam,08D8B64FC6449F2B,6,10.0.0.16:6934,10.1.0.16:25,*,,sending message with RecordId 40900973559810 and InternetMessageId <5149fa60b89741cfaf6e05d5767776a9@contoso.com>

2021-01-11T16:43:15.059Z,Connector2Fabrikam,08D8B64FC6449F2B,7,10.0.0.16:6934,10.1.0.16:25,>,MAIL FROM:<user@contoso.com> SIZE=9031,

2021-01-11T16:43:15.059Z,Connector2Fabrikam,08D8B64FC6449F2B,8,10.0.0.16:6934,10.1.0.16:25,>,RCPT TO:<user@fabrikam.com>,

2021-01-11T16:43:15.118Z,Connector2Fabrikam,08D8B64FC6449F2B,9,10.0.0.16:6934,10.1.0.16:25,<,250 2.1.0 Sender OK,

2021-01-11T16:43:15.120Z,Connector2Fabrikam,08D8B64FC6449F2B,10,10.0.0.16:6934,10.1.0.16:25,<,250 2.1.5 Recipient OK,

2021-01-11T16:43:15.121Z,Connector2Fabrikam,08D8B64FC6449F2B,11,10.0.0.16:6934,10.1.0.16:25,>,BDAT 2932 LAST,

2021-01-11T16:43:18.300Z,Connector2Fabrikam,08D8B64FC6449F2B,12,10.0.0.16:6934,10.1.0.16:25,<,"250 2.6.0 <5149fa60b89741cfaf6e05d5767776a9@contoso.com> [InternalId=171798691842, Hostname=BN3USG02FT012.mail.protection.office365.us] 4228 bytes in 2.816, 1.466 KB/sec Queued mail for delivery",

2021-01-11T16:43:18.314Z,Connector2Fabrikam,08D8B64FC6449F2B,13,10.0.0.16:6934,10.1.0.16:25,>,QUIT,

2021-01-11T16:43:18.316Z,Connector2Fabrikam,08D8B64FC6449F2B,14,10.0.0.16:6934,10.1.0.16:25,<,221 2.0.0 Service closing transmission channel,

2021-01-11T16:43:18.316Z,Connector2Fabrikam,08D8B64FC6449F2B,15,10.0.0.16:6934,10.1.0.16:25,-,,Local

Note: to see where the SMTP Send protocol logs are stored on your on-premises server, run “Get-TransportServer <servername> | fl SendProtocolLog*”. Logs will be generated once you enable logging with a cmdlet like “Set-SendConnector <connectorname> -ProtocolLoggingLevel Verbose”.
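If you have many send protocol logs to go through, a short script can surface the affected sessions. This sketch assumes the comma-separated column layout shown in the `#Fields:` header above and flags sessions whose context column contains the “TLS negotiation failed” text seen in the sample; it is not an official parser, and the sample lines are copied from the log excerpt above:

```python
import csv
from collections import defaultdict
from typing import Iterable

# Column order taken from the "#Fields:" header of the send protocol log.
FIELDS = [
    "date-time", "connector-id", "session-id", "sequence-number",
    "local-endpoint", "remote-endpoint", "event", "data", "context",
]


def failed_tls_sessions(lines: Iterable[str]) -> dict[str, list[str]]:
    """Map session-id -> remote endpoint(s) for sessions whose TLS setup failed."""
    failures: dict[str, list[str]] = defaultdict(list)
    # Skip blank lines and the "#..." header lines at the top of each log file.
    data_lines = (line for line in lines if line.strip() and not line.startswith("#"))
    # csv handles quoted fields such as the SMTP banner, which contains commas.
    for row in csv.reader(data_lines):
        record = dict(zip(FIELDS, row))
        if "TLS negotiation failed" in record.get("context", ""):
            failures[record["session-id"]].append(record["remote-endpoint"])
    return dict(failures)


sample = [
    "#Fields: date-time,connector-id,session-id,sequence-number,local-endpoint,remote-endpoint,event,data,context",
    '2021-01-11T16:43:14.969Z,Connector2Fabrikam,08D8B64FC6449F2A,3,10.0.0.16:6933,10.1.0.16:25,<,"220 BN3USG02FT012.mail.protection.office365.us Microsoft ESMTP MAIL Service ready at Mon, 11 Jan 2021 17:43:14 +0100",',
    "2021-01-11T16:43:15.043Z,Connector2Fabrikam,08D8B64FC6449F2A,9,10.0.0.16:6933,10.1.0.16:25,*,,TLS negotiation failed with error SocketError",
    "2021-01-11T16:43:15.059Z,Connector2Fabrikam,08D8B64FC6449F2B,7,10.0.0.16:6934,10.1.0.16:25,>,MAIL FROM:<user@contoso.com> SIZE=9031,",
]
print(failed_tls_sessions(sample))  # {'08D8B64FC6449F2A': ['10.1.0.16:25']}
```

To scan a real log, pass the lines of a file from the SendProtocolLog directory (an open file object works). A session that fails here and is followed by a successful session to the same endpoint is the fallback pattern shown above; repeated failures with no success suggest TLS is required on the connector.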

If you explicitly configured your send connector with the setting “RequireTLS: True”, the fallback to non-TLS will not happen. In this case, the behavior will be similar to what is described in the next section.

On-premises Exchange server in a hybrid configuration sending to Exchange Online (internal to the organization)

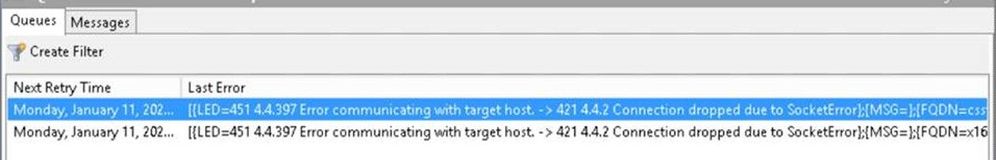

In this scenario, mail is sent from your on-premises mailboxes to your Exchange Online mailboxes. When your Exchange servers are configured for hybrid, the “Outbound to Office 365…” connector has “RequireTLS: True” by default. This means that on-premises servers won’t fall back to sending unencrypted. If the TLS 1.0/1.1 attempt fails, Exchange will keep retrying the connection using TLS several times at various intervals (the exact retry intervals and counts are described here). The send protocol log entries will be similar to those shown above, except that the “TLS negotiation failed with error SocketError” entries will keep repeating, since there is no fallback. Unless you modified the default retry configuration, the on-premises Exchange server will keep retrying for 2 days. Throughout this time, the affected messages will stay in the queue. The queue details will look similar to this:

[PS] C:\>Get-Queue <queue ID> | fl

(…)

Status : Retry

LastError : [{LED=451 4.4.397 Error communicating with target host. -> 421 4.4.2 Connection dropped due to SocketError};{MSG=};{FQDN=<servername>};{IP=<serverIP>};{LRT=1/11/2021 6:02:39 PM}]

(…)

By default, the sender will receive a delay DSN (its subject starts with “Delivery delayed”, localized) after 4 hours. Unless you intervene manually sooner, the sending Exchange server will normally give up after 2 days and generate an NDR. The NDR message would look like this:

Delivery has failed to these recipients or groups:

user@contoso.com

Several attempts to deliver your message were unsuccessful and we stopped trying. It could be a temporary situation. Try to send your message again later.

Diagnostic information for administrators:

Generating server: <servername>

Receiving server: <servername>

user@contoso.com

1/7/2021 7:24:14 PM – Server at <servername> returned ‘550 5.4.300 Message expired -> 451 4.4.397 Error communicating with target host. -> 421 4.4.2 Connection dropped due to SocketError’

1/7/2021 7:23:14 PM – Server at mail.contoso.com (10.0.0.16) returned ‘451 4.4.397 Error communicating with target host. -> 421 4.4.2 Connection dropped due to SocketError’
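The diagnostic text in both the queue’s LastError and the NDR is a chain of SMTP replies joined by “->”. A small helper can split that chain and pull out the basic and enhanced status codes, which makes it easy to spot the innermost “421 4.4.2 Connection dropped due to SocketError” reply that, in this scenario, points at the failed TLS handshake. This is a sketch that assumes the chain format shown above; the helper names are hypothetical:

```python
import re

# Basic reply code followed by an enhanced status code, e.g. "451 4.4.397".
REPLY = re.compile(r"\b([245]\d\d) ([245]\.\d{1,3}\.\d{1,3})\b")


def reply_chain(diagnostic: str) -> list[tuple[str, str]]:
    """Return (basic_code, enhanced_code) pairs in the order they appear."""
    return REPLY.findall(diagnostic)


def looks_like_socket_drop(diagnostic: str) -> bool:
    """True when the innermost reply is the connection-dropped SocketError."""
    chain = reply_chain(diagnostic)
    return bool(chain) and chain[-1] == ("421", "4.4.2") and "SocketError" in diagnostic


ndr_text = (
    "550 5.4.300 Message expired -> 451 4.4.397 Error communicating with target host. "
    "-> 421 4.4.2 Connection dropped due to SocketError"
)
print(reply_chain(ndr_text))
print(looks_like_socket_drop(ndr_text))
```

A SocketError can have other causes (firewalls, network devices), so treat a match as a prompt to check the send protocol log for “TLS negotiation failed” rather than as a diagnosis on its own.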

To avoid such problems, be sure to configure your on-premises Exchange servers to support TLS 1.2, as described in our three-part blog series starting here.

Exchange Online sending to Exchange server (external to the organization)

This experience will depend on how the receiving server has implemented inbound mail flow. Assuming the receiving server supports TLS (advertises the STARTTLS verb), Exchange Online will only use TLS 1.2 to send outbound email. If the receiving server does not support TLS 1.2, Exchange Online, being opportunistic, will try to send the email without TLS. If the receiving mail server does not enforce TLS for inbound mail flow, the email will be delivered without TLS. You can tell whether your server is enforcing TLS by querying the RequireTLS property of the Receive Connector, e.g. ‘Get-ReceiveConnector “Default Frontend <ServerName>” | fl RequireTLS’. If TLS is enforced at the receiving end, Exchange Online will keep retrying, the email will remain queued, and eventually we will generate an NDR after 24 hours (the default message expiration timeout for Exchange Online).
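To check whether one of your own receiving servers can negotiate TLS 1.2 at all, you can run a quick STARTTLS probe against it. This is a minimal sketch using Python’s standard `smtplib` and `ssl` modules; it refuses anything below TLS 1.2 and reports the negotiated version and cipher. Keep in mind that Python uses OpenSSL rather than Windows Schannel and its default cipher list is not the Office 365 list, so a successful probe is a useful signal, not proof. Outbound port 25 is also often blocked on client networks:

```python
import smtplib
import ssl


def tls12_client_context() -> ssl.SSLContext:
    """Client context that verifies certificates and refuses TLS 1.0/1.1."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def probe_starttls(host: str, port: int = 25, timeout: float = 30.0):
    """Return (tls_version, cipher_name), or None if STARTTLS is not offered.

    Raises ssl.SSLError if no common TLS 1.2+ version or cipher suite exists,
    or if the server certificate does not validate for `host`.
    """
    with smtplib.SMTP(host, port, timeout=timeout) as smtp:
        smtp.ehlo()
        if not smtp.has_extn("starttls"):
            return None
        smtp.starttls(context=tls12_client_context())
        # After STARTTLS, smtp.sock is an ssl.SSLSocket.
        return smtp.sock.version(), smtp.sock.cipher()[0]
```

For example, `probe_starttls("mail.contoso.com")` returns a tuple such as the negotiated protocol name and cipher suite name; an `ssl.SSLError` during the handshake suggests the same TLS 1.2 problem Exchange Online would run into.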

On-premises non-Exchange server, application or device relaying external emails through your Exchange Online tenant following this article

If you have an on-premises non-Exchange server, application, or device that relays email through your Office 365 tenant, either by SMTP AUTH client submission or by using a certificate-based inbound connector, make sure it supports TLS 1.2. If it does not, the TLS negotiation will fail, and a subsequent non-TLS retry might be attempted. However, SMTP AUTH client submission does not work without TLS, and for relay through a certificate-based inbound connector, the common name (CN) or subject alternative name (SAN) verification will fail during non-TLS communication. In both scenarios, this causes a “550 5.7.0. Relay Access Denied” error. Email delivery to mailboxes hosted in your Office 365 tenant will continue to work, although it will be treated as an “anonymous” submission.
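For an application you control, a safe pattern is to require TLS 1.2 before authenticating, so there is never a silent plaintext retry. Below is a minimal sketch with Python’s standard library. `smtp.office365.com` on port 587 is the commonly documented SMTP AUTH client submission endpoint, but confirm it (and that SMTP AUTH is enabled for the sending mailbox) for your tenant; the addresses and credentials are placeholders:

```python
import smtplib
import ssl
from email.message import EmailMessage


def build_message(sender: str, recipient: str, subject: str, body: str) -> EmailMessage:
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def submit(msg: EmailMessage, username: str, password: str,
           host: str = "smtp.office365.com", port: int = 587) -> None:
    """Submit over SMTP AUTH, refusing to continue without TLS 1.2 or later."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    with smtplib.SMTP(host, port, timeout=30) as smtp:
        smtp.ehlo()
        # Raises SMTPNotSupportedError if STARTTLS is not offered, or
        # ssl.SSLError if TLS 1.2+ cannot be negotiated: no plaintext fallback.
        smtp.starttls(context=context)
        smtp.ehlo()
        smtp.login(username, password)
        smtp.send_message(msg)
```

Failing loudly here is deliberate: the error surfaces in your application’s logs instead of turning into a “Relay Access Denied” reply on a plaintext retry.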

Hopefully, this clarifies what you need to look for in case mail flow starts to break with the disablement of TLS 1.0/1.1! We also want to take a moment to thank Mike Brown, Nino Bilic and Sean Stevenson for their contributions and review.

Szabolcs Vajda and Arindam Thokder
