by Contributed | Jan 14, 2021 | Technology

This article is contributed. See the original author and article here.

We’re pleased to announce that the Microsoft Information Protection SDK version 1.8 is now generally available via NuGet and Download Center.

Highlights

This release of the Microsoft Information Protection SDK focuses on better support for macOS, as well as quality and performance fixes.

Summary:

- Added support for Mac on ARM.

- Signed all dylib files for Mac.

- All clouds are fully supported across all three SDKs.

- Renamed TelemetryConfiguration to DiagnosticConfiguration.

- Updated MipContext to accept DiagnosticConfiguration instead of TelemetryConfiguration.

- Exposed new TelemetryDelegate and AuditDelegate.

- Added support for user-defined labels with double key encryption.

- Added an API, MsgInspector.BodyType, to expose the body encoding type for MSG files.

- Added APIs to support Double Key Encryption with User-Defined Permissions.

For a full list of changes to the SDK, please review our change log.

Metadata Updates

In November, we announced that the metadata location for Office files would be moving. While the legacy metadata storage in custom.xml is still in use, Office features that require the new storage location will be made available in 2021. For more details on this update, please review https://aka.ms/mipsdkmetadata. We encourage you to begin updating, testing, and deploying MIP SDK 1.8 so that your customers, applications, and services can take advantage of these new features as they become available. Also be sure to check out the MIP SDK FAQ for a section on these changes.

Java Support

In MIP SDK 1.7, we announced the public preview of our Java wrapper. We aren’t quite ready to announce that it’s generally available yet, and we will continue to look for feedback as we start work on MIP SDK 1.9.

The Java Wrapper for MIP SDK 1.8 now supports streams. You’ll no longer be required to pass in files directly.

Wrap Up

As always, we appreciate your support, feedback, and efforts to integrate the MIP SDK into your applications and services. Here’s a quick recap of some helpful MIP SDK resources.

-Tom Moser

by Contributed | Jan 14, 2021 | Technology

This document provides an overview of how enterprise customers can deploy Microsoft Teams-DLP to protect sensitive information that travels within or outside of the organization. Unified DLP integrates with multiple workloads to help protect customer data with a single policy, and Teams-DLP is one of the workloads within the Unified DLP console. This guide walks through the different aspects of deploying use cases across content and containers, and shows the effectiveness of the Unified DLP portal as a single place to define all aspects of your DLP strategy.

In summary, this playbook will help you:

- Understand the unified console and interface.

- Develop a strategy for deploying Teams-DLP across the organization.

- Provide near-real-time alerts with notifications.

- Review various scenarios to test Teams-DLP over chat and channel communication.

This document helps readers plan for and protect against the sensitive-information scenarios that exist in nearly every organization. The playbook serves as a user guide for mitigating the risk of exposing crucial data while communicating over chat or giving guest users access to sites.

If you have any questions or suggestions about this playbook, please reach out to our Yammer group at aka.ms/askmipteam

Thank you Pavan and team for writing this!!! :)

by Contributed | Jan 14, 2021 | Technology

Happy New Year! We are excited to announce that the January 2021 release (1.2020.1219.0) of the MSIX Packaging Tool is now available!

We have released the MSIX Packaging Tool through the Microsoft Store, and are offering the offline download of the tool and license here as well. You can also check out the Quick Create VM to easily get the latest release in a clean environment.

Our January 2021 release features UX improvements to places like Package files and the Select installer page, as well as a full transition to supporting Device Guard Signing version 2. To learn more about the features and fixes we’ve made, you can check out our release notes. If you have any questions, feature ideas, or just want to connect with the Product Team, join our Tech Community. We love connecting and hearing from you, so don’t hesitate to file any feedback with us via the Feedback Hub as well!

Don’t forget about our Insider Program, which gets you early access to releases as they are in development!

Sharla Akers (@shakers_msft)

Program Manager, MSIX

by Contributed | Jan 14, 2021 | Technology

Authors: Lei Sun, Neta Haiby, Cha Zhang, Sanjeev Jagtap

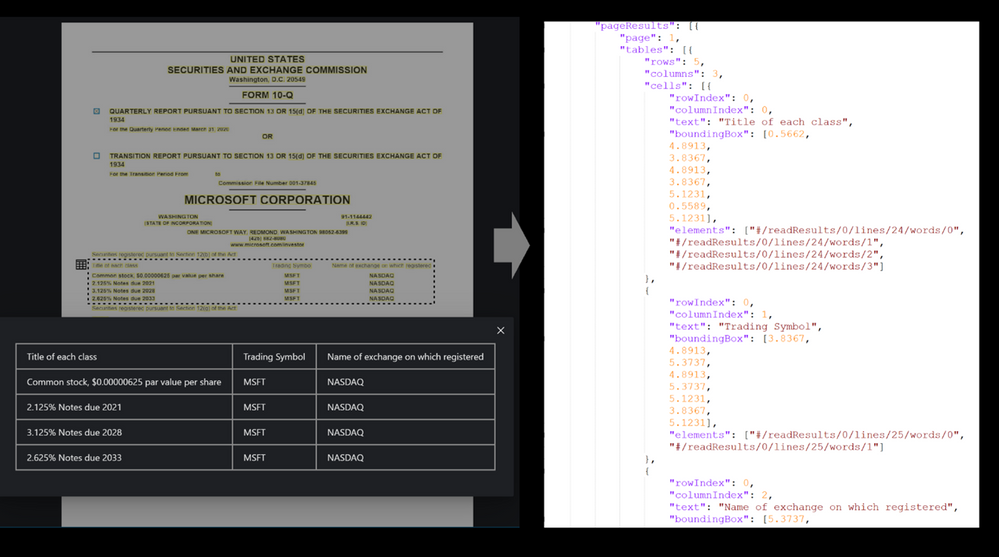

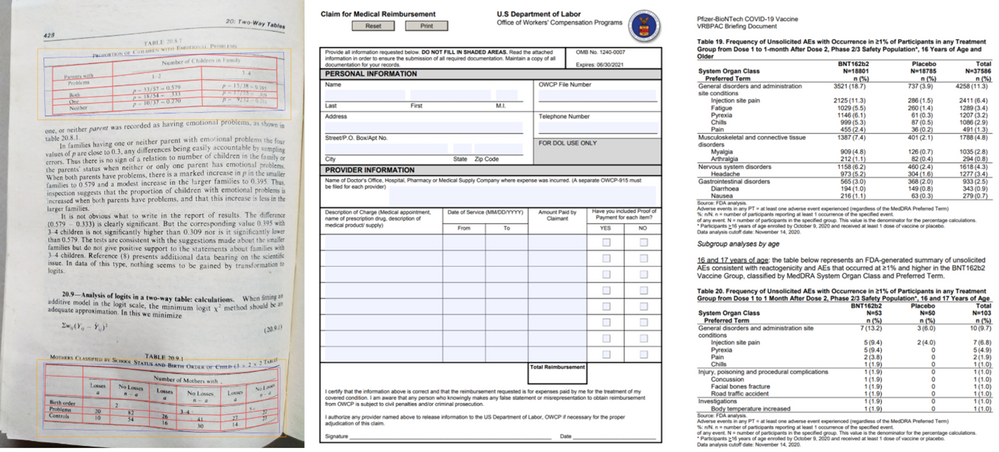

Documents containing tables pose a major hurdle for information extraction. Tables are common in financial, legal, insurance, and oil and gas documents, among others. They are often the most important part of a document, but extracting data from them presents a unique set of challenges: accurately detecting the tabular region within an image, then detecting and extracting information from the rows and columns of the detected table, and handling merged cells, complex tables, nested tables, and more. Table extraction is the task of detecting the tables within a document and extracting them into a structured output that can be consumed by workflow applications such as robotic process automation (RPA) services, data analysis tools such as Excel, databases, and search services.

Customers often use manual processes for data extraction and digitization. With the new enhanced table extraction feature, you can instead send a document (PDF or images) to Form Recognizer and have all the information extracted into structured, usable data at a fraction of the time and cost, so you can spend more time acting on the information rather than compiling it.

Table extraction challenges

Table extraction from a wide variety of document images is a challenging problem due to heterogeneous table structures, diverse table contents, and erratic use of ruling lines. To name a few concrete examples: in financial reports and technical publications, some borderless tables have complex hierarchical header structures, contain many multi-line, empty, or spanned cells, or have large blank spaces between neighboring columns. In forms, some tables are embedded in other, more complex tabular objects (e.g., nested tables), and some neighboring tables are so close to each other that it is hard to determine whether they should be merged. In invoices, tables come in different sizes, e.g., tables composed of key-value pairs may contain only two rows or columns, while line-item tables may span multiple pages. Objects in document images such as figures, graphics, code listings, structurally laid out text, or flow charts may have textures similar to tables, which poses another significant challenge for detecting tables successfully and reducing false alarms. To make matters worse, many scanned or camera-captured document images are of poor quality, and the tables in them may be distorted (even curved) or contain artifacts or noise. Existing table extraction solutions fall short of extracting tables from such document images with high accuracy, which has prevented workflow applications from effectively leveraging this technology.

Form Recognizer Table extraction

In recent years, the success of deep learning in various computer vision applications has motivated researchers to explore deep neural networks like convolutional neural networks (CNN) or graph neural networks (GNN) for detecting tables and recognizing table structures from document images. With these new technologies, the capability and performance of modern table extraction solutions have been improved significantly.

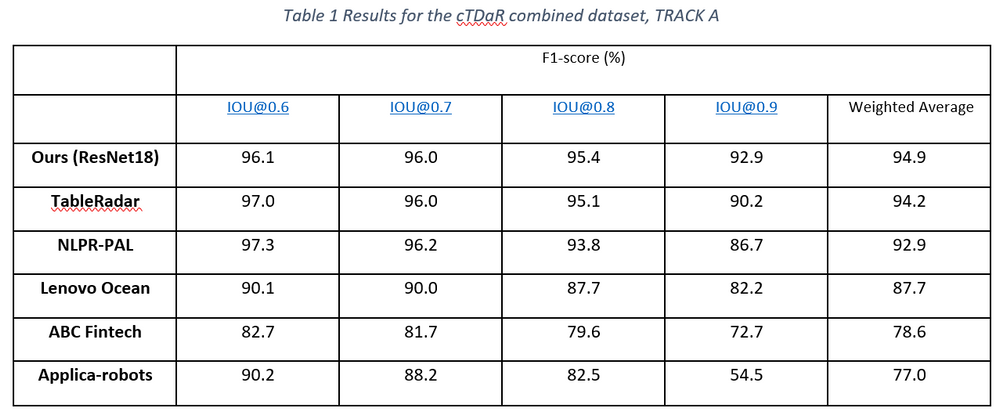

In the latest release of Form Recognizer, we created a state-of-the-art table extraction solution with cutting-edge deep learning technology. After validating that Faster/Mask R-CNN based table detectors are effective in detecting a variety of tables (e.g., bordered or borderless tables, tables embedded in other more complex tabular objects, and distorted tables) in document images robustly, we further proposed a new method to improve the localization accuracy of such detectors, and achieved state-of-the-art results on the ICDAR-2019 cTDaR table detection benchmark dataset by only using a lightweight ResNet18 backbone network (Table 1).

For the challenge of table recognition or table cell extraction, we leveraged existing CNN/GNN based approaches, which have proven to be robust to complex tables like borderless tables with complex hierarchical header structures and multi-line/empty/spanned cells. We further enhanced them to deal with distorted or even slightly curved tables in camera-captured document images, making the algorithm more widely applicable to different real-world scenarios. Figure 1 below shows a few examples to demonstrate such capabilities.

Easy and Simple to use

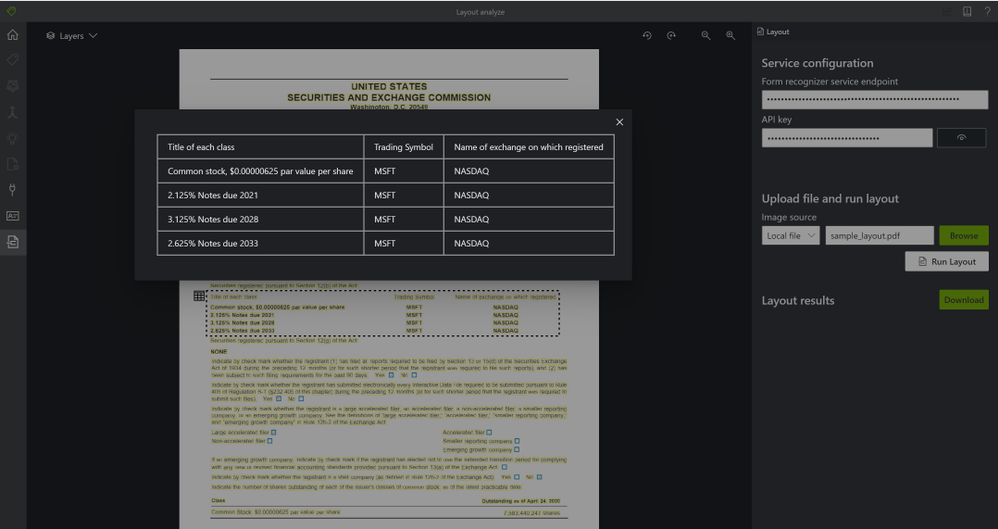

Try it out with the Form Recognizer Sample Tool.

Extracting tables from documents is as simple as two API calls, with no training, preprocessing, or anything else needed. Just call the Analyze Layout operation with your document (image, TIFF, or PDF file) as the input, and it extracts the text, tables, selection marks, and structure of the document.

Step 1: The Analyze Layout Operation –

https://{endpoint}/formrecognizer/v2.1-preview.2/layout/analyze

The Analyze Layout call returns a response header field called Operation-Location. The Operation-Location value is a URL that contains the Result ID to be used in the next step.

Operation location –

https://cognitiveservice/formrecognizer/v2.1-preview.2/prebuilt/layout/analyzeResults/44a436324-fc4b-4387-aa06-090cfbf0064f

Step 2: The Get Analyze Layout Result Operation –

Once you have the operation location, call the Get Analyze Layout Result operation. This operation takes as input the Result ID that was created by the Analyze Layout operation.

https://{endpoint}/formrecognizer/v2.1-preview.2/layout/analyzeResults/{resultId}
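The two steps above can be sketched in Python using only the standard library. This is a minimal sketch, not an official client: the function names, the `Ocp-Apim-Subscription-Key` authentication header, the polling interval, and the `succeeded`/`failed` status values are assumptions that should be checked against the Form Recognizer REST reference for your API version.

```python
import json
import time
import urllib.request

# Path taken from the Analyze Layout URL shown in Step 1.
API_PATH = "/formrecognizer/v2.1-preview.2/layout/analyze"


def analyze_url(endpoint: str) -> str:
    """Build the Analyze Layout URL from a resource endpoint."""
    return endpoint.rstrip("/") + API_PATH


def result_id(operation_location: str) -> str:
    """Return the Result ID: the last path segment of Operation-Location."""
    return operation_location.rstrip("/").rsplit("/", 1)[-1]


def analyze_layout(endpoint: str, key: str, document: bytes,
                   content_type: str = "application/pdf",
                   poll_seconds: float = 2.0) -> dict:
    """Submit a document (Step 1), then poll for the result (Step 2)."""
    headers = {"Ocp-Apim-Subscription-Key": key}  # assumed auth header

    # Step 1: POST the document; the response carries Operation-Location.
    submit = urllib.request.Request(
        analyze_url(endpoint), data=document, method="POST",
        headers={**headers, "Content-Type": content_type})
    with urllib.request.urlopen(submit) as response:
        operation_location = response.headers["Operation-Location"]

    # Step 2: GET the result URL until the status is terminal (assumed values).
    while True:
        poll = urllib.request.Request(operation_location, headers=headers)
        with urllib.request.urlopen(poll) as response:
            body = json.load(response)
        if body.get("status") in ("succeeded", "failed"):
            return body
        time.sleep(poll_seconds)
```

Polling the full Operation-Location URL directly avoids rebuilding the result URL by hand; `result_id` is only needed if you want to store or log the ID separately.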

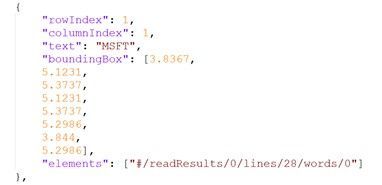

The Get Analyze Layout Result operation returns JSON output with the extracted table: rows, columns, row span, column span, bounding box, and more.

For example:
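As a rough illustration of consuming such output, the sketch below turns each extracted table into a plain grid of strings. The sample dictionary is hand-written for this sketch and is not real service output; the field names (`analyzeResult`, `pageResults`, `tables`, `cells`, `rowIndex`, `columnIndex`, `rowSpan`, `columnSpan`, `text`) are assumptions about the response shape and should be verified against an actual response.

```python
from typing import List

# Hand-written sample, NOT real service output. Field names are assumed.
SAMPLE = {
    "status": "succeeded",
    "analyzeResult": {
        "pageResults": [{
            "page": 1,
            "tables": [{
                "rows": 2,
                "columns": 2,
                "cells": [
                    {"rowIndex": 0, "columnIndex": 0, "columnSpan": 2,
                     "text": "Invoice"},
                    {"rowIndex": 1, "columnIndex": 0, "text": "Item"},
                    {"rowIndex": 1, "columnIndex": 1, "text": "Price"},
                ],
            }],
        }],
    },
}


def table_to_grid(table: dict) -> List[List[str]]:
    """Expand a table's cell list into a rows x columns grid of text."""
    grid = [["" for _ in range(table["columns"])]
            for _ in range(table["rows"])]
    for cell in table["cells"]:
        # A missing span is treated as 1 (an assumption of this sketch).
        row_span = cell.get("rowSpan", 1)
        col_span = cell.get("columnSpan", 1)
        # Copy the text into every grid position the cell covers.
        for r in range(cell["rowIndex"], cell["rowIndex"] + row_span):
            for c in range(cell["columnIndex"], cell["columnIndex"] + col_span):
                grid[r][c] = cell["text"]
    return grid


def extract_tables(result: dict) -> List[List[List[str]]]:
    """Return one grid per table, across all pages of the result."""
    pages = result.get("analyzeResult", {}).get("pageResults", [])
    return [table_to_grid(t) for page in pages for t in page.get("tables", [])]
```

Repeating the text of a spanned cell in every position it covers keeps the grid rectangular, which is convenient when writing the table to CSV or a spreadsheet.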

Get started

by Contributed | Jan 14, 2021 | Technology

The Set Up

While you have many choices for how to bring your service desk into Teams, AtBot provides the most complete solution to facilitate this integration. A bot in Teams offers users a friendly and intuitive way to ask for help. It can answer simple questions by drawing on your existing knowledge base, provide step-by-step resolution instructions, and ultimately create and assign new service tickets for issues that it cannot resolve.

In this article, I will be expanding on the technical integrations that can empower your bot in Teams: ServiceNow, Microsoft Cognitive Services, and the Power Platform, all brought together into a cohesive solution through AtBot. You can employ this strategy for any bot in Teams, not just your service desk, but for the sake of this article, we will be focusing on IT support.

What Makes a Good Bot

The main thing that makes a bot “good” is the fact that your users continue to use it. If the experience is poor, or users do not get value out of their interactions with the bot, then it will go unused. We believe that there are three pillars that breathe life into your bot.

Language Understanding

The first pillar is the ability to understand natural language. The bot should not only understand what you are asking or trying to accomplish, but also any key components of your ask that are relevant. For example, if a user states, “I need to have Visio installed on my laptop”, the bot should understand that they need a software installation and that Visio is the software they are in need of.

This capability is accomplished through AtBot’s integration with Microsoft LUIS (Language Understanding Intelligent Service). LUIS is an easy-to-use language modelling service that can give your bots a deep understanding of the natural language used within your organization to ask for services (like software installation).
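To make the idea of an intent plus its key components concrete, here is a small sketch of how a language-understanding prediction for the Visio request might be interpreted. The sample response is hand-written for illustration; the intent name `InstallSoftware`, the entity name `Software`, the scores, and the response shape are all assumptions, not output from a real LUIS app or from AtBot.

```python
# Hand-written sample prediction, NOT real LUIS output. Names are hypothetical.
SAMPLE_PREDICTION = {
    "query": "I need to have Visio installed on my laptop",
    "prediction": {
        "topIntent": "InstallSoftware",
        "intents": {
            "InstallSoftware": {"score": 0.97},
            "None": {"score": 0.02},
        },
        "entities": {"Software": ["Visio"]},
    },
}


def interpret(response: dict, min_score: float = 0.5):
    """Return (intent, entities), falling back to "None" on low confidence."""
    prediction = response["prediction"]
    intent = prediction["topIntent"]
    score = prediction["intents"][intent]["score"]
    if score < min_score:
        intent = "None"  # too uncertain to act on
    # Skip metadata keys (assumed to start with "$") and keep entity values.
    entities = {name: values
                for name, values in prediction["entities"].items()
                if not name.startswith("$")}
    return intent, entities


intent, entities = interpret(SAMPLE_PREDICTION)
print(intent, entities)
```

In this sketch the intent tells the bot what the user wants (a software installation), and the entity tells it which software to install.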

Knowledge

The second pillar is the bot’s ability to respond to questions with knowledgeable answers. AtBot employs a direct connection to Microsoft QnA Maker to give your bots a knowledge base that can be derived from existing content or managed directly in the QnA Maker portal.

This knowledge base empowers your bot to answer questions like “What is the guest Wi-Fi password?” or “What are the service desk hours?” with static content.

Action

The final pillar of any good bot is its ability to take action on the users’ behalf. Whether it is to create a new incident for the user in ServiceNow or to look up the status of a ticket, the bot should be fully capable of doing these things for the user.

This capability is provided through AtBot’s integration with Power Automate and Azure Logic Apps. AtBot has a standard connector in each of these platforms, which allows you to create skills for the bot without having to write any code. This is where our integration with ServiceNow comes in, as ServiceNow is an out-of-the-box connector as well. If you can create a flow, you can program your bot using AtBot!

Your 24/7 Tier 1 Support Agent

When you put all this together, you can quickly configure, build, and deploy a bot to Teams which can provide support to your users throughout all hours of the day. The built-in integration with ServiceNow means that your existing flow for incidents can be leveraged and the bot can create and route new tickets accordingly.

The video below is an example of an IT support bot that was built using AtBot to combine the powers of Microsoft Cognitive Services, Power Automate, Teams and ServiceNow. This bot took about 6 hours to build from start to finish.

https://youtube.com/watch?v=-RfpRVsm030

Conclusion

If your organization is using Teams for communication and collaboration, now is the time to start looking at integrating other services through bots and application integrations. ServiceNow is one great example, but through Power Automate you can integrate with other systems as well. From Dynamics to SharePoint, FreshDesk to Azure SQL, integrating with your data is as simple as placing a block inside the flow and making the connection. This is all made possible by the AtBot platform, the no-code solution for building bots for Teams.
