by Contributed | Feb 23, 2021 | Technology

This article is contributed. See the original author and article here.

Host: Bhavanesh Rengarajan – Principal Program Manager, Microsoft

Guest: Rudra Mitra – VP of Compliance Solutions, Microsoft

The following conversation is adapted from transcripts of Episode 1 of the Voices of Data Protection podcast. There may be slight edits in order to make this conversation easier for readers to follow along.

This podcast features the leaders, program managers from Microsoft and experts from the industry to share details about the latest solutions and processes to help you manage your data, keep it safe and stay compliant. If you prefer to listen to the audio of this podcast instead, please visit: aka.ms/voicesofdataprotection

BHAVANESH: Welcome to Voices of Data Protection! I’m your host Bhavanesh Rengarajan, and I’m a Principal Program Manager at Microsoft. In this first episode, I talk with Rudy (Rudra) Mitra, Vice President of Compliance Solutions at Microsoft 365. Rudy has been with Microsoft for more than 20 years, helping organizations keep data safe and minimize risks.

Rudy and I will discuss how the pandemic and remote work have accelerated the need for compliance, how organizations are navigating this new landscape, and how Microsoft is developing a strong solutions roadmap to help organizations and people succeed in these unprecedented times!

Thank you, Rudy, for taking time to speak with us today, please give us a quick introduction of your role at M365 Compliance and your charter.

RUDRA: Hey Bhavy, how’s it going? I’m Rudy Mitra, the Vice President of Compliance Solutions at Microsoft 365. And looking forward to having this chat.

BHAVANESH: Would you quickly cover your areas of operation and talk about your team as well?

RUDRA: At Microsoft 365 with our compliance solutions, we think about areas such as data governance, data protection, insider risk management, which we can probably go into a little bit more, discovering content and auditing the access to content. And then of course, compliance management to round it out, all geared towards keeping enterprise data safe, secure, and helping organizations work on risk, reducing risks.

BHAVANESH: So, let me ask you this question, how has compliance as a scene evolved since the pandemic and switching to working from home. And how are you thinking or reassessing the roadmap in these unprecedented tough times?

RUDRA: Of course, as you know, we all find ourselves in these unprecedented times. And organizations are looking to react as the workforce goes remote. And so, you know, a couple of things are coming forward as we sort of listen to customers, as we talked to them with remote work, it’s all about sort of where the organization’s data is now located, where it’s flowing as we work from home. There’s lots of questions in organization’s mind about how to keep their data secure, but also ensure employees can stay as productive as possible, not put-up walls to their productivity. So that’s sort of one key theme that we continue to hear and react to. Also, things like making sure the risk from communications, which are now happening more in the digital medium, you know, like us talking to each other versus the cooler talk or in other places, there’s a lot more of that going on, meaning that there could potentially be risks with that data, what’s talked about, what’s in email, what’s in chats. So covering those bases for compliance, making sure, you know, if there’s anything that needs to be discovered or flagged or protected, that’s another key theme through all of this. So, remote work, we’ve all been transitioning to it, but at the same time, maintaining that sense of compliance, meaning maintaining that sense of security of data protection of that data, key themes for organizations that are pretty much globally is what we hear.

BHAVANESH: Would it be fair to say that the need for compliance has grown over the last few months compared to where we were about a year ago?

RUDRA: Oh, for sure. This whole situation has accelerated the move to some digital medium. I think Satya says this really well from Microsoft, which is, he’s sort of puts it as the transition to digital and acceleration to the cloud move for organizations has really accelerated because that is the way to stay productive. That is the way to operate in this, in this situation. And with that, the need for compliance, as you just pointed out, has tremendously accelerated. And we see this not only in the customer conversations, but we also see this in the adoption and usage of our solutions.

BHAVANESH: Since I heard you say that you’re looking into information protection, governance, and insider risk management as some of the key pillars, what are some of the core concerns or struggles that you hear from our customers and what they feel they do not have a solution around today?

RUDRA: The intellect property of an organization, intuitively, is the thing they are trying to protect most. That’s the place where compliance really is important to make sure that their intellectual property is safe. They know where it is as workers for the enterprise access and data from home, what devices it on. It could be a managed, it could be unmanaged. So, the information protection need for these core assets is the key requirement we hear. When you think about areas like data loss prevention, very geared towards making sure that what is important for the company, stays within the enterprise is control, they know where it’s going, particularly in this distributed environment. And then of course, you know, where, where company secrets are involved, where company intellectual property is involved, being able to classify that content, being able to say what’s the important information from what the non-important information is so important now, to be able to make sure you can protect that 5% of data, 2% of data, 10% of data from everything else. That’s more data in the organization. And just to put this in perspective, by some, some measures it’s estimated that we are now doubling the digital data for an organization large or small every couple of years with continued acceleration on it, which means the volume of data that you’re trying to figure out your intellectual property out of protected, whether that’s patient records, whether that’s taskforce forms, whether that’s blueprints, for manufacturing, financial records, very important things to talk about the scale aspects of it and being able to protect what’s important from maybe all the other digital data that’s floating around.

BHAVANESH: That’s really exciting, Rudy. So how does your roadmap address all these concerns for your customers?

RUDRA: A great question. What we feel at Microsoft is that this explosion of data combined with the trend of remote work just brings to forefront the need for automated solutions, solutions, leverage the best of machine learning. And yeah, yeah, I do assist with the production of data would be identification when there are insider risks. And what we really leaned in on is solutions that are ML and AI powered so that these can scale. It’s very different when you’re trying to do this at small scale versus really the scale at which businesses operate today, large, or small. We talked a little bit about the, the volume of data and in that context, automation is super important, so, that’s sort of number one. Number two for our solutions is being part of the productivity experience or users and users. You know, it’s very easy to sort of say, I’m going to protect data that no one ever accesses, right. I can lock it up in a vault and protect it, but what’s the fun in that then there’s no productivity, but it’s 100% secure. And so, this balance of experiences that are geared towards productivity and security and protection and compliance is important. And so when you think about the work that we’ve done in the Office applications, in SharePoint, in Teams where the person working on it is in the flow of their work, they never leave their flow, but their knowledge, if they’re dealing with sensitive information, they know that they have to deal with it carefully combined with things like IT manageability, where they don’t have to deploy additional add ins it’s part of the product experiences and apps.

This is sort of number two on our sort of areas of focus, which is balancing productivity and protection. And then number three is sort of our partners and the work that we do with our ecosystem to make sure that this is not just production for Microsoft data, but for all data. Because an enterprise is messy, it has data in different places. And so really our production solutions are important as well.

BHAVANESH: And Rudy, I think I’m going to throw you a curveball. It will be good to get your perspective as well as a customer. Let’s say that if they are using a very manually operated system today, like you have manual labeling on sensitivity and retention, what kind of sedation would you give them so that they can basically increase their productivity by moving towards your automated solution, like auto labeling, using sensitive information, machine learning, AI? What would be your top three steps that you would suggest to them to move from here to there?

RUDRA: The idea of starting small with data loss prevention, you know, maybe starting with your data classification in, in more where they can see where the sensitive data is located. That’s sort of how we are approach this question with customers united very daunting, maybe to think about going from having no information production solution to a fully deployed solution overnight. And as you correctly pointed out in your question, yeah. Now, how do you, how do you take this a step at a time with Microsoft 365? The fact that this is data loss prevention built into teams this is built into Word, Excel, PowerPoint, Outlook SharePoint gives you as an organization, the control to start small, you know, see how you can roll out data loss prevention first, or see your insights on sensitive data first in these different repositories where data may be located and from there, and build on different policies.

And with manual data, labeling classification back to your question, you’re sort of still seeing an incomplete picture, but what you can do is automate that with the automation behind the scenes, run that in sort of test mode, run that in simulation mode, see what shows up and then go from there. So that’s, that’s sort of one part of it. If I may, the delivery, I’d kind of extend the question a little bit to also say that, you know, the work that we’ve been doing, an insider risk management, where, you know, we try to think about risks from people within the, within the organization. And just to put that in context, over 90% of organizations we survey and talk to say that they are worried about insider risks, and more than 50% of those risks are inadvertent, meaning that this isn’t a malicious scenario, it’s just an accidental leakage of data. It’s an accident, explore exposure of data.

So when you use the automation, when you use the controls that we build into Microsoft 365, and then extend across your entire digital estate, you sort of see more of the picture light up, whether that’s where the data is located with our know your data products or where sensitive data is that you need to protect or data loss prevention solutions you need to put on the end point or that, or the app. So, the automation can really be a staged rollout that augments what you may be doing already with manual controls.

BHAVANESH: Rudy, I think your last few statements really hits it out of the park. The vein, which I would like to summarize this as all of your companies, initiatives are totally focused towards trying to stop the accident, oversharing and breach of data. That’s how it kind of sums up in my head.

RUDRA: Yeah, that’s right, Bhavy. When we’re all remote, you know, it’s a, it’s a very interesting time. We’re all working in and in these unprecedented times, not to overuse that, but it really is a scenario. We’re all navigating together, but we’ve never seen. It’s work under duress. It’s working in this remote environment and we’ve got so many things going on, you know, just to kind of bringing at home, for me, you know, I’m, I’m juggling the kids at home you know, and trying to be productive in the work I’m doing. I’m multitasking all the time. And making a mistake with the handling of the company’s data, the enterprise data, you know, it’s not going to be something for me, that’s potentially malicious. It’s going to be an accident. And so, yes, being automated to sort of be there to help someone and catch those kinds of scenarios and protect those scenarios, that’s the very likely set of scenarios where we hear the customer need and want to be there to help them with it.

BHAVANESH: What have been some of your biggest learnings throughout your career working in this particular space?

RUDRA: Oh, wow. That is a little bit of a curveball. I would say as an engineer and as a product person, if I had to pick one out, I would say that being customer driven as we’ve worked on these areas in sort of our solution space of compliance, or sort of broadly, as we thought about how Microsoft delivers on security and compliance and identity solutions, it’s really listening to customers. It’s being very customer centric in terms of what we try to achieve for them. Making sure her activity for customers is as important as a compliance security is sort of our guiding light has been probably the biggest takeaway for us.

And, it’s frankly been at the center of every solution that we’re trying to build, because, you know, we could try to do these things in isolation and whatnot, but, you know, just as you talked a couple of times about the situation we find ourselves in right now, listening to the customer, going back to them, asking them how they’re trying to navigate this and then adapting our solutions to it, probably has been very core to what we do. And frankly, very rewarding and trying to help customers.

To learn more about this episode of the Voices of Data Protection podcast, visit: https://aka.ms/voicesofdataprotection.

For more on Microsoft Information Protection & Governance, click here.

To subscribe to the Microsoft Security YouTube channel, click here.

Follow Microsoft Security on Twitter and LinkedIn.

Keep in touch with Bhavanesh: LinkedIn

Keep in touch with Rudra: LinkedIn | Twitter

by Contributed | Feb 23, 2021 | Technology

This article is contributed. See the original author and article here.

We recently released a new version of MSTICPy with a feature called Pivot functions.

This feature has three main goals:

- Making it easy to discover and invoke MSTICPy functionality.

- Creating a standardized way to call pivotable functions.

- Letting you assemble multiple functions into re-usable pipelines.

The pivot functionality exposes operations relevant to a particular entity as methods (or functions) of that entity. These operations include:

- Data queries

- Threat intelligence lookups

- Other data lookups such as geo-location or domain resolution

- and other local functionality

Here are a couple of examples showing calling different kinds of enrichment functions from the IpAddress entity:

>>> from msticpy.datamodel.entities import IpAddress, Host

>>> IpAddress.util.ip_type(ip_str=“157.53.1.1”))

ip result 157.53.1.1 Public

>>> IpAddress.util.whois(“157.53.1.1”))

asn asn_cidr asn_country_code asn_date asn_description asn_registry nets …..

NA NA US 2015–04–01 NA arin [{‘cidr’: ‘157.53.0.0/16’… >>> IpAddress.util.geoloc_mm(value=“157.53.1.1”)) CountryCode CountryName State City Longitude Latitude Asn… US United States None None –97.822 37.751 None…

This second example shows a pivot function that does a data query for host logon events from a Host entity.

>>> Host.AzureSentinel.list_host_logons(host_name=“VictimPc”)

Account EventID TimeGenerated Computer SubjectUserName SubjectDomainName

NT AUTHORITYSYSTEM 4624 2020–10–01 22:39:36.987000+00:00 VictimPc.Contoso.Azure VictimPc$ CONTOSO

You can also add other functions from 3rd party Python packages or ones you write yourself as pivot functions.

Terminology

Before we get into things let’s clear up a few terms.

Entities – These are Python classes that represent real-world objects commonly encountered in CyberSec investigations and hunting. E.g., Host, URL, IP Address, Account, etc.

Pivoting – This comes from the common practice in CyberSec investigations of navigating from one suspect entity to another. E.g., you might start with an alert identifying a potentially malicious IP Address, from there you ‘pivot’ to see which hosts or accounts were communicating with that address. From there you might pivot again to look at processes running on the host or Office activity for the account.

Background reading

This article is available in Notebook form so that you can try out the examples. [TODO]

There is also full documentation of the Pivot functionality on our ReadtheDocs page.

Life before pivot functions

Before Pivot functions your ability to use the various bits of functionality in MSTICPy was always bounded by your knowledge of where a certain function was (or your enthusiasm for reading the docs).

For example, suppose you had an IP address that you wanted to do some simple enrichment on.

ip_addr = “20.72.193.242”

First, you’d need to locate and import the functions. There might also be (as in the GeoIPLiteLookup class) some initialization step you’d need to do before using the functionality.

from msticpy.sectools.ip_utils import get_ip_type

from msticpy.sectools.ip_utils import get_whois_info

from msticpy.sectools.geoip import GeoLiteLookup

geoip = GeoLiteLookup()

Next you might have to check the help for each function to work it parameters.

>>> help(get_ip_type)

Help on function get_ip_type in module msticpy.sectools.ip_utils:

get_ip_type(ip: str = None, ip_str: str = None) -> str

Validate value is an IP address and deteremine IPType category. …

Then finally run the functions.

>>> get_ip_type(ip_addr)

‘Public’

>>> get_whois_info(ip_addr)

(‘MICROSOFT-CORP-MSN-AS-BLOCK, US’,

{‘nir’: None, ‘asn_registry’: ‘arin’, ‘asn’: ‘8075’, ‘asn_cidr’: ‘20.64.0.0/10’, ‘asn_country_code’: ‘US’, ‘asn_date’: ‘2017-10-18’, ‘asn_description’: ‘MICROSOFT-CORP-MSN-AS-BLOCK, US’, ‘query’: ‘20.72.193.242’, ‘nets’: …

>>> geoip.lookup_ip(ip_addr)

([{‘continent’:

{‘code’: ‘NA’, ‘geoname_id’: 6255149, ‘names’:

{‘de’: ‘Nordamerika’, ‘en’: ‘North America’, ‘es’: ‘Norteamérica’, ‘fr’: ‘Amérique du Nord’, ‘ja’: ‘北アメリカ’, ‘pt-BR’: ‘América do Norte’, ‘ru’: ‘Северная Америка’, ‘zh-CN’: ‘北美洲’}}, ‘country’: {‘geoname_id’: 6252001, ‘iso_code’: ‘US’, ‘names’: {‘de’: ‘USA’, ‘en’: ‘United States’, ‘es’: ‘Estados Unidos’, …

At which point you’d discover that the output from each function was somewhat raw and it would take a bit more work if you wanted to combine it in any way (say in a single table).

In the rest of the article we’ll show you how Pivot functions make it easier to discover data and enrichment functions. We’ll also show how pivot functions bring standardization and handle different types of input (including lists and DataFrames) and finally, how the standardized output lets you chain multiple pivot functions together into re-usable pipelines of functionality.

Getting started with pivot functions

Let’s get started with how to use Pivot functions.

Typically, we use MSTICPy‘s init_notebook function at the start of any notebook. This handles checking versions and importing some commonly-used packages and modules (both MSTICPy and 3rd party packages like pandas).

>>> from msticpy.nbtools.nbinit import init_notebook

>>> init_notebook(namespace=globals());

Processing imports……..

Then there are a couple of preliminary steps needed before you can use pivot functions. The main one is loading the Pivot class.

Pivot functions are added to the entities dynamically by the Pivot class. The Pivot class will try to discover relevant functions from queries, Threat Intel providers and various utility functions.

In some cases, notably data queries, the data query functions are themselves created dynamically, so these need to be loaded before you create the Pivot class. (You can always create a new instance of this class, which forces re-discovery, so don’t worry if mess up the order of things).

Note in most cases we don’t need to connect/authenticate to a data provider prior to loading Pivot.

Let’s load our data query provider for AzureSentinel.

>>> az_provider = QueryProvider(“AzureSentinel”)

Please wait. Loading Kqlmagic extension…

Now we can load and instantiate the Pivot class.

>>> from msticpy.datamodel.pivot import Pivot

>>> pivot = Pivot(namespace=globals())

Why do we need to pass “namespace=globals()”?

Pivot searches through the current objects defined in the Python/notebook namespace to find provider objects that it will use to create the pivot functions. This is most relevant for QueryProviders – when you create a Pivot class instance it will find and use the relevant queries from the az_provider object that we created in the previous step. In most other cases (like GeoIP and ThreatIntel providers, it will create new ones if it can’t find existing ones).

Easy discovery of functionality

Find the entity name you need

The simplest way to do this is simply enumerate (using Python dir() function) the contents of the MSTICPy entities sub-package. This should have already been imported by the init_notebook function that we ran earlier.

The items at the beginning of the list with proper capitalization are the entities.

>>> dir(entities)

[‘Account’, ‘Alert’, ‘Algorithm’, ‘AzureResource’, ‘CloudApplication’, ‘Dns’, ‘ElevationToken’, ‘Entity’, ‘File’, ‘FileHash’, ‘GeoLocation’, ‘Host’, ‘HostLogonSession’, ‘IpAddress’, ‘Malware’, …

We’re going to make this a little more elegant in a forthcoming update with this helper function.

>>> entities.find_entity(“ip”)

Match found ‘IpAddress’msticpy.datamodel.entities.ip_address.IpAddress

Listing pivot functions available for an entity

Note you can always address an entity using its qualified path, e.g. “entities.IpAddress” but if you are going to use one or two entities a lot, it will save a bit of typing if you import them explicitly.

>>> from msticpy.datamodel.entities import IpAddress, Host

Once you have the entity loaded, you can use the get_pivot_list() function to see which pivot functions are available for it. The example below has been abbreviated for space reasons.

>>> IpAddress.get_pivot_list()

[‘AzureSentinel.SecurityAlert_list_alerts_for_ip’,

‘AzureSentinel.SigninLogs_list_aad_signins_for_ip’,

‘AzureSentinel.AzureActivity_list_azure_activity_for_ip’,

‘AzureSentinel.AzureNetworkAnalytics_CL_list_azure_network_flows_by_ip’,

…

‘ti.lookup_ip’,

‘ti.lookup_ipv4’,

‘ti.lookup_ipv4_OTX’,

…

‘ti.lookup_ipv6_OTX’,

‘util.whois’,

‘util.ip_type’,

‘util.ip_rev_resolve’,

‘util.geoloc_mm’,

‘util.geoloc_ips’]

Some of the function names are a little unwieldy but, in many cases, this is necessary to avoid name collisions. You will notice from the list that the functions are grouped into containers: “AzureSentinel”, “ti” and “util” in the above example.

Although this makes the function name even longer, we thought that this helped to keep related functionality together – so you don’t get a TI lookup function, when you thought you were running a query.

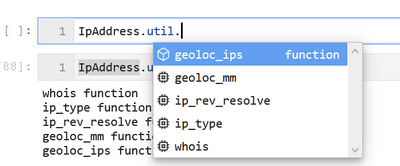

Fortunately, Jupyter notebooks/IPython support tab completion so you should not normally have to remember these names.

The containers (“AzureSentinel”, “util”, etc.) are also callable functions – they just return the list of functions they contain.

>>> IpAddress.util()

whois functionip_type functionip_rev_resolve functiongeoloc_mm functiongeoloc_ips function

Now we’re ready to run any of the functions for this entity (we take the same initial examples from the “Life before pivot functions” plus a few more).

>>> IpAddress.util.ip_type(ip_addr)

|

ip

|

result

|

0

|

20.72.193.242

|

Public

|

>>> IpAddress.util.whois(ip_addr)

|

asn

|

asn_cidr

|

asn_country_code

|

asn_date

|

asn_description

|

asn_registry

|

nets

|

0

|

8075

|

20.64.0.0/10

|

US

|

2017-10-18

|

MICROSOFT-CORP-MSN-AS-BLOCK, US

|

arin

|

[{‘cidr’: ‘20.128.0.0/16, 20.48, …

|

>>> IpAddress.util.ip_rev_resolve(ip_addr)

|

qname

|

rdtype

|

response

|

ip_address

|

0

|

20.72.193.242

|

PTR

|

The DNS query name does not exist: 20.72.193.242.

|

20.72.193.242

|

>>> IpAddress.util.geoloc_mm(ip_addr)

|

CountryCode

|

CountryName

|

State

|

City

|

Longitude

|

Latitude

|

Asn

|

edges

|

Type

|

AdditionalData

|

IpAddress

|

0

|

US

|

United States

|

Washington

|

None

|

-122.3412

|

47.6032

|

None

|

{}

|

geolocation

|

{}

|

20.72.193.242

|

>>> IpAddress.ti.lookup_ip(ip_addr)

|

Ioc

|

IocType

|

SafeIoc

|

QuerySubtype

|

Provider

|

Result

|

Severity

|

Details

|

0

|

20.72.193.242

|

ipv4

|

20.72.193.242

|

None

|

Tor

|

True

|

information

|

Not found.

|

0

|

20.72.193.242

|

ipv4

|

20.72.193.242

|

None

|

VirusTotal

|

True

|

unknown

|

{‘verbose_msg’: ‘Missing IP address’, ‘response_code’: 0}

|

0

|

20.72.193.242

|

ipv4

|

20.72.193.242

|

None

|

XForce

|

True

|

warning

|

{‘score’: 1, ‘cats’: {}, ‘categoryDescriptions’: {}, ‘reason’: ‘Regional Internet Registry’, ‘re…

|

Notice that we didn’t need to worry about either the parameter name or format (more on this in the next section). Also, whatever the function, the output is always returned as a pandas DataFrame.

For Data query functions you do need to worry about the parameter name

Data query functions are slightly more complex than most other functions and specifically often support many parameters. Rather than try to guess which parameter you meant, we require you to be explicit about it.

Before we can use a data query, we need to authenticate to the provider.

>>> az_provider.connect(WorkspaceConfig(workspace=“CyberSecuritySoc”).code_connect_str)

If you are not sure of the parameters required by the query you can use the built-in help

>>> Host.AzureSentinel.SecurityAlert_list_related_alerts?

Signature: Host.AzureSentinel.SecurityAlert_list_related_alerts(*args, **kwargs) -> Union[pandas.core.frame.DataFrame, Any]

Docstring:

Retrieves list of alerts with a common host, account or process

Parameters

———-

account_name: str (optional)

The account name to find

add_query_items: str (optional)

Additional query clauses

end: datetime (optional)

Query end time

host_name: str (optional)

The hostname to find

path_separator: str (optional)

Path separator (default value is: )

process_name: str (optional) …

In this case we want the “host_name” parameter.

>>> Host.AzureSentinel.SecurityAlert_list_related_alerts(host_name=“victim00”).head(5)

|

TenantId

|

TimeGenerated

|

AlertDisplayName

|

AlertName

|

Severity

|

Description

|

ProviderName

|

VendorName

|

VendorOriginalId

|

SystemAlertId

|

ResourceId

|

SourceComputerId

|

AlertType

|

ConfidenceLevel

|

ConfidenceScore

|

IsIncident

|

StartTimeUtc

|

EndTimeUtc

|

ProcessingEndTime

|

RemediationSteps

|

ExtendedProperties

|

Entities

|

SourceSystem

|

WorkspaceSubscriptionId

|

WorkspaceResourceGroup

|

ExtendedLinks

|

ProductName

|

ProductComponentName

|

AlertLink

|

Status

|

CompromisedEntity

|

Tactics

|

Type

|

Computer

|

src_hostname

|

src_accountname

|

src_procname

|

host_match

|

acct_match

|

proc_match

|

0

|

8ecf8077-cf51-4820-aadd-14040956f35d

|

2020-12-10 09:10:08+00:00

|

Suspected credential theft activity

|

Suspected credential theft activity

|

Medium

|

This program exhibits suspect characteristics potentially associated with credential theft. Onc…

|

MDATP

|

Microsoft

|

da637426874826633442_-1480645585

|

a429998b-8a1f-a69c-f2b8-24dedde31c2d

|

|

|

WindowsDefenderAtp

|

|

NaN

|

False

|

2020-12-04 14:00:00+00:00

|

2020-12-04 14:00:00+00:00

|

2020-12-10 09:10:08+00:00

|

[rn “1. Make sure the machine is completely updated and all your software has the latest patc…

|

{rn “MicrosoftDefenderAtp.Category”: “CredentialAccess”,rn “MicrosoftDefenderAtp.Investiga…

|

[rn {rn “$id”: “4”,rn “DnsDomain”: “na.contosohotels.com”,rn “HostName”: “vict…

|

Detection

|

|

|

|

Microsoft Defender Advanced Threat Protection

|

|

https://securitycenter.microsoft.com/alert/da637426874826633442_-1480645585

|

New

|

victim00.na.contosohotels.com

|

CredentialAccess

|

SecurityAlert

|

victim00

|

victim00

|

|

|

True

|

False

|

False

|

1

|

8ecf8077-cf51-4820-aadd-14040956f35d

|

2020-12-10 09:10:08+00:00

|

‘Mimikatz’ hacktool was detected

|

‘Mimikatz’ hacktool was detected

|

Low

|

Readily available tools, such as hacking programs, can be used by unauthorized individuals to sp…

|

MDATP

|

Microsoft

|

da637426874826014018_-1390662053

|

edb68e6d-012d-4c6b-7408-20e679fb41c8

|

|

|

WindowsDefenderAv

|

|

NaN

|

False

|

2020-12-04 14:00:01+00:00

|

2020-12-04 14:00:01+00:00

|

2020-12-10 09:10:08+00:00

|

[rn “1. Make sure the machine is completely updated and all your software has the latest patc…

|

{rn “MicrosoftDefenderAtp.Category”: “Malware”,rn “MicrosoftDefenderAtp.InvestigationId”: …

|

[rn {rn “$id”: “4”,rn “DnsDomain”: “na.contosohotels.com”,rn “HostName”: “vict…

|

Detection

|

|

|

|

Microsoft Defender Advanced Threat Protection

|

|

https://securitycenter.microsoft.com/alert/da637426874826014018_-1390662053

|

New

|

victim00.na.contosohotels.com

|

Unknown

|

SecurityAlert

|

victim00

|

victim00

|

|

|

True

|

False

|

False

|

2

|

8ecf8077-cf51-4820-aadd-14040956f35d

|

2020-12-10 09:10:08+00:00

|

Malicious credential theft tool execution detected

|

Malicious credential theft tool execution detected

|

High

|

A known credential theft tool execution command line was detected.nEither the process itself or…

|

MDATP

|

Microsoft

|

da637426874824572229_-192666782

|

39912e77-045b-a082-a91e-8a18958d1b1c

|

|

|

WindowsDefenderAtp

|

|

NaN

|

False

|

2020-12-04 14:00:00+00:00

|

2020-12-04 14:00:00+00:00

|

2020-12-10 09:10:08+00:00

|

[rn “1. Make sure the machine is completely updated and all your software has the latest patc…

|

{rn “MicrosoftDefenderAtp.Category”: “CredentialAccess”,rn “MicrosoftDefenderAtp.Investiga…

|

[rn {rn “$id”: “4”,rn “DnsDomain”: “na.contosohotels.com”,rn “HostName”: “vict…

|

Detection

|

|

|

|

Microsoft Defender Advanced Threat Protection

|

|

https://securitycenter.microsoft.com/alert/da637426874824572229_-192666782

|

New

|

victim00.na.contosohotels.com

|

CredentialAccess

|

SecurityAlert

|

victim00

|

victim00

|

|

|

True

|

False

|

False

|

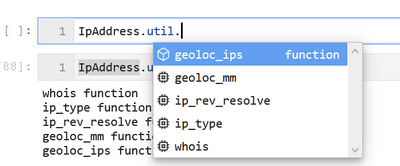

Shown below is a preview of a notebook tool that lets you browser around entities and their pivot functions, search for a function by keyword and view the help for that function. This is going to be released shortly.

>>> Pivot.browse()

Standardized way of calling Pivot functions

Due to various factors (historical, underlying data, developer laziness and forgetfulness, etc.) the functionality in MSTICPy can be inconsistent in the way it uses input parameters.

Also, many functions will only accept inputs as a single value, or a list or a DataFrame or some unpredictable combination of these.

Pivot functions allow you to largely forget about this – you can use the same function whether you have:

- a single value

- a list of values (or any Python iterable, such as a tuple or even a generator function)

- a DataFrame with the input value in one of the columns.

Let’s take an example.

Suppose we have a set of IP addresses pasted from somewhere that we want to use as input.

We need to convert this into a Python data object of some sort.

To do this we can use another Pivot utility %%txt2df. This is a Jupyter/IPython magic function – to use it, just paste you data in a cell that you want to import into an empty. Use

%%txt2df –help

in an empty cell to see the full syntax.

In the example below, we specify a comma separator, that the data has a headers row and to save the converted data as a DataFrame named “ip_df”.

Warning if you specify the “–name” parameter, this will overwrite any existing variable of this name.

%%txt2df –sep , –headers –name ip_df

idx, ip, type

0, 172.217.15.99, Public

1, 40.85.232.64, Public

2, 20.38.98.100, Public

3, 23.96.64.84, Public

4, 65.55.44.108, Public

5, 131.107.147.209, Public

6, 10.0.3.4, Private

7, 10.0.3.5, Private

8, 13.82.152.48, Public

|

idx

|

ip

|

type

|

0

|

0

|

172.217.15.99

|

Public

|

1

|

1

|

40.85.232.64

|

Public

|

2

|

2

|

20.38.98.100

|

Public

|

3

|

3

|

23.96.64.84

|

Public

|

4

|

4

|

65.55.44.108

|

Public

|

5

|

5

|

131.107.147.209

|

Public

|

6

|

6

|

10.0.3.4

|

Private

|

7

|

7

|

10.0.3.5

|

Private

|

8

|

8

|

13.82.152.48

|

Public

|

For demonstration purposes, we’ll also create a standard Python list from the “ip” column of the DataFrame.

>>> ip_list = list(ip_df.ip)

>>> print(ip_list)

[‘172.217.15.99’, ‘40.85.232.64’, ‘20.38.98.100’, ‘23.96.64.84’, ‘65.55.44.108’, ‘131.107.147.209’, ‘10.0.3.4’, ‘10.0.3.5’, ‘13.82.152.48’]

How did this work before?

If you recall the earlier example of get_ip_type, passing it a list or DataFrame doesn’t result in anything useful.

>>> get_ip_type(ip_list)

[‘172.217.15.99’, ‘40.85.232.64’, ‘20.38.98.100’, ‘23.96.64.84’, ‘65.55.44.108’, ‘131.107.147.209’, ‘10.0.3.4’, ‘10.0.3.5’, ‘13.82.152.48’]

does not appear to be an IPv4 or IPv6 address

‘Unspecified’

Pivot versions are (somewhat) agnostic to input data format

However, the “pivotized” version can accept and correctly process a list.

>>> IpAddress.util.ip_type(ip_list)

|

ip

|

result

|

0

|

172.217.15.99

|

Public

|

1

|

40.85.232.64

|

Public

|

2

|

20.38.98.100

|

Public

|

3

|

23.96.64.84

|

Public

|

4

|

65.55.44.108

|

Public

|

5

|

131.107.147.209

|

Public

|

6

|

10.0.3.4

|

Private

|

7

|

10.0.3.5

|

Private

|

8

|

13.82.152.48

|

Public

|

In the case of a DataFrame, we have to tell the function the name of the column that contains the input data.

>>> IpAddress.util.whois(ip_df) # won’t work!

—————————————————————————

KeyError Traceback (most recent call last)

<ipython-input-32-debf57d805c7> in <module>

…

173 input_df, input_column, param_dict = _create_input_df(

–> 174 input_value, pivot_reg, parent_kwargs=kwargs

175 )

…

KeyError: (“‘ip_column’ is not in the input dataframe”,

‘Please specify the column when calling the function.

You can use one of the parameter names for this:’, [‘column’, ‘input_column’, ‘input_col’, ‘src_column’, ‘src_col’])

>>> entities.IpAddress.util.whois(ip_df, column=”ip”) # correct

nir

|

asn_registry

|

asn

|

asn_cidr

|

asn_country_code

|

asn_date

|

asn_description

|

query

|

nets

|

NaN

|

arin

|

15169

|

172.217.15.0/24

|

US

|

2012-04-16

|

GOOGLE, US

|

172.217.15.99

|

[{‘cidr’: ‘172.217.0.0/16’, ‘name’: ‘GOOGLE’, ‘handle’: ‘NET-172-217-0-0-1’, ‘range’: ‘172.217.0…

|

NaN

|

arin

|

8075

|

40.80.0.0/12

|

US

|

2015-02-23

|

MICROSOFT-CORP-MSN-AS-BLOCK, US

|

40.85.232.64

|

[{‘cidr’: ‘40.80.0.0/12, 40.124.0.0/16, 40.125.0.0/17, 40.74.0.0/15, 40.120.0.0/14, 40.76.0.0/14…

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

NaN

|

Note: for most functions you can ignore the parameter name and just specify it as a positional parameter. You can also use the original parameter name of the underlying function or the placeholder name “value”.

The following are all equivalent:

>>> IpAddress.util.ip_type(ip_list)

>>> IpAddress.util.ip_type(ip_str=ip_list)

>>> IpAddress.util.ip_type(value=ip_list)

>>> IpAddress.util.ip_type(data=ip_list)

When passing both a DataFrame and column name use:

>>> IpAddress.util.ip_type(data=ip_df, column=“col_name”)

You can also pass an entity instance of an entity as a input parameter. The pivot code knows which attribute or attributes of an entity will provider the input value.

>>> ip_entity = IpAddress(Address=”40.85.232.64″)

>>> IpAddress.util.ip_type(ip_entity)

|

ip

|

result

|

0

|

40.85.232.64

|

Public

|

Iterable/DataFrame inputs and single-value functions

Many of the underlying functions only accept single values as inputs. Examples of these are the data query functions – typically they expect a single host name, IP address, etc.

Pivot knows about the type of parameters that the function accepts. It will adjust the input to match the expectations of the underlying function. If a list or DataFrame is passed as input to a single-value function Pivot will split the input and call the function once for each value. It then combines the output into a single DataFrame before returning the results.

You can read a bit more about how this is done in the Appendix – “how do pivot wrappers work?”

Data queries – where does the time range come from?

The Pivot class has a built-in time range, which is used by default for all queries. Don’t worry – you can change it easily.

To see the current time setting:

>>> Pivot.current.timespan

TimeStamp(start=2021-02-15 21:01:40.381864, end=2021-02-16 21:01:40.381864, period=-1 day, 0:00:00)

Note: “Pivot.current” gives you access to the last created instance of the Pivot class – if you’ve created multiple instances of Pivot (which you rarely need to do), you can always get to the last one you created using this class attribute.

You can edit the time range interactively

Pivot.current.edit_query_time()

Or by setting the timespan property directly.

>>> from msticpy.common.timespan import TimeSpan

>>> # TimeSpan accepts datetimes or datestrings

>>> timespan = TimeSpan(start=”02/01/2021″, end=”02/15/2021″)

>>> Pivot.current.timespan = timespan

TimeStamp(start=2021-02-01 00:00:00, end=2021-02-15 00:00:00, period=-14 days +00:00:00)

In an upcoming release there is also a convenience function for setting the time directly with Python datetimes or date strings.

Pivot.current.set_timespan(start=”2020-02-06 03:00:00″, end=”2021-02-15 01:42:42″)

You can also override the built-in time settings by specifying start and end as parameters to the query function.

Host.AzureSentinel.SecurityAlert_list_related_alerts(host_name=”victim00″, start=dt1, end=dt2)

Supplying extra parameters

The Pivot layer will pass any unused keyword parameters to the underlying function. This does not usually apply to positional parameters – if you want parameters to get to the function, you have to name them explicitly. In this example the add_query_items parameter is passed to the underlying query function

>>> entities.Host.AzureSentinel.SecurityEvent_list_host_logons(

host_name=”victimPc”,

add_query_items=”| summarize count() by LogonType”

)

|

LogonType

|

count_

|

0

|

5

|

27492

|

1

|

4

|

12597

|

2

|

3

|

6936

|

3

|

2

|

173

|

4

|

10

|

58

|

5

|

9

|

8

|

6

|

0

|

19

|

7

|

11

|

1

|

Pivot Pipelines

Because all pivot functions accept DataFrames as input and produce DataFrames as output, it means that it is possible to chain pivot functions into a pipeline.

Joining input to output

You can join the input to the output. This usually only makes sense when the input is a DataFrame. It lets you keep the previously accumulated results and tag on the additional columns produced by the pivot function you are calling.

The join parameter supports “inner”, “left”, “right” and “outer” joins (be careful with the latter though!) See pivot joins documentation for more details.

Although joining is useful in pipelines you can use it on any function whether in a pipeline or not. In this example you can see that the idx, ip and type columns have been carried over from the source DataFrame and joined with the output.

>>> entities.IpAddress.util.whois(ip_df, column=”ip”, join=”inner”)

idx

|

ip

|

type

|

nir

|

asn_registry

|

asn

|

asn_cidr

|

asn_country_code

|

asn_date

|

asn_description

|

query

|

nets

|

0

|

172.217.15.99

|

Public

|

NaN

|

arin

|

15169

|

172.217.15.0/24

|

US

|

2012-04-16

|

GOOGLE, US

|

172.217.15.99

|

[{‘cidr’: ‘172.217.0.0/16’, ‘name’: ‘GOOGLE’, ‘handle’: ‘NET-172-217-0-0-1’, ‘range’: ‘172.217.0…

|

1

|

40.85.232.64

|

Public

|

NaN

|

arin

|

8075

|

40.80.0.0/12

|

US

|

2015-02-23

|

MICROSOFT-CORP-MSN-AS-BLOCK, US

|

40.85.232.64

|

[{‘cidr’: ‘40.80.0.0/12, 40.124.0.0/16, 40.125.0.0/17, 40.74.0.0/15, 40.120.0.0/14, 40.76.0.0/14…

|

2

|

20.38.98.100

|

Public

|

NaN

|

arin

|

8075

|

20.36.0.0/14

|

US

|

2017-10-18

|

MICROSOFT-CORP-MSN-AS-BLOCK, US

|

20.38.98.100

|

[{‘cidr’: ‘20.64.0.0/10, 20.40.0.0/13, 20.34.0.0/15, 20.128.0.0/16, 20.36.0.0/14, 20.48.0.0/12, …

|

Pipelines

Pivot pipelines are implemented pandas customr accessors. Read more about Extending pandas here.

When you load Pivot it adds the mp_pivot pandas DataFrame accessor. This appears as an attribute to DataFrames.

>>> ips_df.mp_pivot

<msticpy.datamodel.pivot_pd_accessor.PivotAccessor at 0x275754e2208>

The main pipelining function run is a method of mp_pivot. run requires two parameters

- the pivot function to run and

- the column to use as input.

See mp_pivot.run documentation for more details.

Here is an example of using it to call four pivot functions, each using the output of the previous function as input and using the join parameter to accumulate the results from each stage.

1. (

2. ips_df

3. .mp_pivot.run(IpAddress.util.ip_type, column=”IP”, join=”inner”)

4. .query(“result == ‘Public'”).head(10)

5. .mp_pivot.run(IpAddress.util.whois, column=”ip”, join=”left”)

6. .mp_pivot.run(IpAddress.util.geoloc_mm, column=”ip”, join=”left”)

7. .mp_pivot.run(IpAddress.AzureSentinel.SecurityAlert_list_alerts_for_ip, source_ip_list=”ip”, join=”left”)

8. ).head(5)

Let’s step through it line by line.

- The whole thing is surrounded by a pair of parentheses – this is just to let us split the whole expression over multiple lines without Python complaining.

- Next we have ips_df – this is just the starting DataFrame, our input data.

- Next we call the mp_pivot.run() accessor method on this dataframe. We pass it the pivot function that we want to run (IpAddress.util.ip_type) and the input column name (IP). This column name is the column in ips_df where our input IP addresses are. We’ve also specified an join type of “inner”. In this case the join type doesn’t really matter since we know we get exactly one output row for every input row.

- We’re using the pandas query function to filter out unwanted entries from the previous stage. In this case we only want “Public” IP addresses. This illustrates that you can intersperse standard pandas functions in the same pipeline. We could have also added a column selector expression ([[“col1”, “col2″…]]), for example, if we wanted to filter the columns passed to the next stage

- We are calling a further pivot function – whois. Remember the “column” parameter always refers to the input column, i.e. the column from previous stage that we want to use in this stage.

- We are calling geoloc_mm to get geo location details joining with a “left” join – this preserves the input data rows and adds null columns in any cases where the pivot function returned no result.

- Is the same as 6 except the called function is a data query to see if we have any alerts that contain these IP addresses. Remember, in the case of data queries we have to name the specific query parameter that we want the input to go to. In this case, each row value in the ip column from the previous stage will be sent to the query.

- Finally we close the parentheses to form a valid Python expression. The whole expression returns a DataFrame so we can add further pandas operations here (like .head(5) shown here).

>>> (

ips_df

.mp_pivot.run(entities.IpAddress.util.ip_type, column=”IP”, join=”inner”)

.query(“result == ‘Public'”).head(10)

.mp_pivot.run(entities.IpAddress.util.whois, column=”ip”, join=”left”)

.mp_pivot.run(entities.IpAddress.util.geoloc_mm, column=”ip”, join=”left”)

.mp_pivot.run(entities.IpAddress.AzureSentinel.SecurityAlert_list_alerts_for_ip, source_ip_list=”ip”, join=”left”)

).head(5)

|

TenantId

|

TimeGenerated

|

AlertDisplayName

|

AlertName

|

Severity

|

Description

|

ProviderName

|

VendorName

|

VendorOriginalId

|

SystemAlertId

|

AlertType

|

ConfidenceLevel

|

0

|

8ecf8077-cf51-4820-aadd-14040956f35d

|

2020-12-23 14:08:12+00:00

|

Microsoft Threat Intelligence Analytics

|

Microsoft Threat Intelligence Analytics

|

Medium

|

Microsoft threat intelligence analytic has detected Blocked communication to a known WatchList d…

|

Threat Intelligence Alerts

|

Microsoft

|

91d806d3-6b6f-4e5c-a78f-e674d602be51

|

625ff9af-dddc-0cf8-9d4b-e79067fa2e71

|

ThreatIntelligence

|

83

|

1

|

8ecf8077-cf51-4820-aadd-14040956f35d

|

2020-12-23 14:08:12+00:00

|

Microsoft Threat Intelligence Analytics

|

Microsoft Threat Intelligence Analytics

|

Medium

|

Microsoft threat intelligence analytic has detected Blocked communication to a known WatchList d…

|

Threat Intelligence Alerts

|

Microsoft

|

173063c4-10dd-4dd2-9e4f-ec5ed596ec54

|

c977f904-ab30-d57e-986f-9d6ebf72771b

|

ThreatIntelligence

|

83

|

2

|

8ecf8077-cf51-4820-aadd-14040956f35d

|

2020-12-23 14:08:12+00:00

|

Microsoft Threat Intelligence Analytics

|

Microsoft Threat Intelligence Analytics

|

Medium

|

Microsoft threat intelligence analytic has detected Blocked communication to a known WatchList d…

|

Threat Intelligence Alerts

|

Microsoft

|

58b2cda2-11c6-42b8-b6f1-72751cad8f38

|

9ee547e4-cba1-47d1-e1f9-87247b693a52

|

ThreatIntelligence

|

83

|

Other pipeline functions

In addition to run, the mp_pivot accessor also has the following functions:

- display – this simply displays the data at the point called in the pipeline. You can add an optional title, filtering and the number or rows to display

- tee – this forks a copy of the DataFrame at the point it is called in the pipeline. It will assign the forked copy to the name given in the var_name parameter. If there is an existing variable of the same name it will not overwrite it unless you add the clobber=True parameter.

In both cases the pipelined data is passed through unchanged.

See Pivot functions help for more details.

Use of these is shown below in this partial pipeline.

…

.mp_pivot.run(IpAddress.util.geoloc_mm, column=”ip”, join=”left”)

.mp_pivot.display(title=”Geo Lookup”, cols=[“IP”, “City”]) # << display an intermediate result

.mp_pivot.tee(var_name=”geoip_df”, clobber=True) # << save a copy called ‘geoip_df’

.mp_pivot.run(IpAddress.AzureSentinel.SecurityAlert_list_alerts_for_ip, source_ip_list=”ip”, join=”left”)

In the next release we’ve also implemented:

- tee_exec – this executes a function on a forked copy of the DataFrame The function must be a pandas function or custom accessor. A good example of the use of this might be creating a plot or summary table to display partway through the pipeline.

Extending Pivot – adding your own (or someone else’s) functions

You can add pivot functions of your own. You need to supply:

- the function

- some metadata that describes where the function can be found and how the function works

Full details of this are described here.

The current version of Pivot doesn’t let you add functions defined inline (i.e. written in the notebook itself) but this will be possible in the forthcoming release.

Let’s create a function in a Python module my_module.py. We can do this using the %%write_file magic function and running the cell.

%%writefile my_module.py

“””Upper-case and hash”””

from hashlib import md5

def my_func(input: str):

md5_hash = “-“.join(hex(b)[2:] for b in md5(input.encode(“utf-8”)).digest())

return {

“Title”: input.upper(),

“Hash”: md5_hash

}

We also need to create a YAML definition file for our pivot function. Again we can use %%write_file to create a local file in the current directory. We need to tell Pivot

- the name of the function and source module,

- the name of the container that the function will appear in,

- the input type expected by the function (“value”, “list” or “dataframe”)

- which entities to add the pivot to, along with a corresponding attribute of the entity. (The attribute is used in cases where you are passing an instance of an entity itself as an input parameter – if in doubt just use any valid attribute of the entity).

- The name of the input attribute of the underlying function.

- An (optional) new name to give the function.

%%writefile my_func.yml

pivot_providers:

my_func_defn:

src_func_name: my_func

src_module: my_module

entity_container_name: cyber

input_type: value

entity_map:

Host: HostName

func_input_value_arg: input

func_new_name: upper_hash_name

Now we can register the function we created as a pivot function.

>>> from msticpy.datamodel.pivot_register_reader import register_pivots

>>> register_pivots(“my_func.yml”)

An then run it.

>>> Host.cyber.upper_hash_name(“host_name”)

|

Title

|

Hash

|

input

|

0

|

HOST_NAME

|

5d-41-40-2a-bc-4b-2a-76-b9-71-9d-91-10-17-c5-92

|

host_name

|

In the next release, this will be available as a simple function that can be used to add a function defined in the notebook as shown here.

from hashlib import md5

def my_func2(input: str):

md5_hash = “-“.join(hex(b)[2:] for b in md5(input.encode(“utf-8”)).digest())

return {

“Title”: input.upper(),

“Hash”: md5_hash

}

Pivot.add_pivot_function(

func=my_func2,

container=”cyber”, # which container it will appear in on the entity

input_type=”value”,

entity_map={“Host”: “HostName”},

func_input_value_arg=”input”,

func_new_name=”il_upper_hash_name”,

)

Host.cyber.il_upper_hash_name(“host_name”)

|

Title

|

Hash

|

input

|

0

|

HOST_NAME

|

5d-41-40-2a-bc-4b-2a-76-b9-71-9d-91-10-17-c5-92

|

host_name

|

Conclusion

We’ve taken a short tour through the MSTICPy Pivot functions, looking at how they make the functionality in MSTICPy easier to discover and use.

I’m particularly excited about the pipeline functionality. In the next release we’re going to make it possible to define reusable pipelines in configuration files and execute them with a single function call. This should help streamline some common patterns in notebooks for Cyber hunting and investigation.

Please send any feedback or suggestions for improvements to msticpy@microsoft.com or create an issue on https://github.com/microsoft/msticpy.

Happy hunting!

Appendix – how do pivot wrappers work?

In Python you can create functions that return other functions. This is called wrapping the function.

It allows the outer function to do additional things to the input parameters and the return value of the inner function.

Take this simple function that just applies proper capitalization to an input string.

def print_me(arg):

print(arg.capitalize())

print_me(“hello”)

Hello

If we try to pass a list to this function we get an expected exception since the function only knows how to process a string

print_me([“hello”, “world”])

—————————————————————————

AttributeError Traceback (most recent call last)

<ipython-input-36-94b3e61eb86f> in <module>

…

AttributeError: ‘list’ object has no attribute ‘capitalize’

We could create a wrapper function that checked the input and iterated over the individual items if arg is a list. The works but we don’t want to have to do this for every function that we want to have flexible input!

def print_me_list(arg):

if isinstance(arg, list):

for item in arg:

print_me(item)

else:

print_me(arg)

print_me_list(“hello”)

print_me_list([“how”, “are”, “you”, “?”])

Hello

How

Are

You

?

Instead, we can create a function wrapper.

In the example below, the outer function dont_care_func defines an inner function – list_or_str – and then returns this function. The inner function list_or_str is what implements the same “is-this-a-string-or-list” logic that we saw in the previous example. Crucially though, it isn’t hard-coded to call print_me but calls whatever function is passed (the func parameter) to it from the outer function dont_care_func.

# Our magic wrapper

def dont_care_func(func):

def list_or_str(arg):

if isinstance(arg, list):

for item in arg:

func(item)

else:

func(arg)

return list_or_str

How do we use this?

We simply pass the function that we want to wrap to dont_care_func. Recall, that this function just returns an instance of the inner function. In this case the value func will have been replaced by the actual function print_me.

print_stuff = dont_care_func(print_me)

Now we have a wrapped version of print_me that can handle different types of input. Magic!

print_stuff(“hello”)

print_stuff([“how”, “are”, “you”, “?”])

Hello

How

Are

You

?

We can also define further functions and create wrapped versions of those by passing them to dont_care_func.

def shout_me(arg):

print(arg.upper(), “U0001F92C!”, end=” “)

shout_stuff = dont_care_func(shout_me)

shout_stuff(“hello”)

shout_stuff([“how”, “are”, “you”, “?”])

HELLO ?! HOW ?! ARE ?! YOU ?! ? ?!

The wrapper functionality in Pivot is a bit more complex than this but essentially operates this way.

by Contributed | Feb 23, 2021 | Technology

This article is contributed. See the original author and article here.

For most people, remote work—whether part-time or full-time—is the new way of working. That’s why over 115 million daily users rely on Microsoft Teams to connect and collaborate, and also why Teams was recognized as a Leader in the Gartner Magic Quadrant for Unified Communications as a Service. We designed Teams, your digital hub for teamwork, and OneDrive, your personal cloud storage that lets you access your files from anywhere, to work seamlessly and securely together. You can use Microsoft 365 Apps including Word, PowerPoint, Excel, or OneNote notebooks to create your work, store those files using OneDrive, and share them securely with colleagues in Teams, where you can co-author documents, meet with your teammates, and chat or meet with people inside or outside organization, all in one place.

Imagine a day in the life using Teams + OneDrive

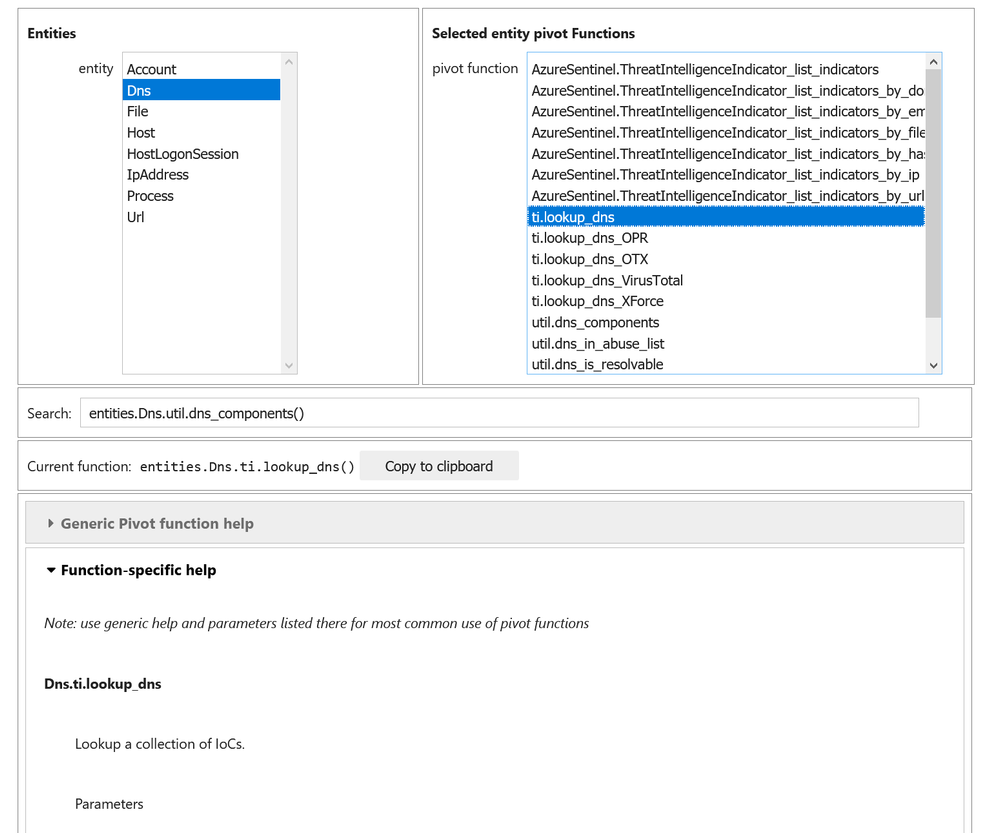

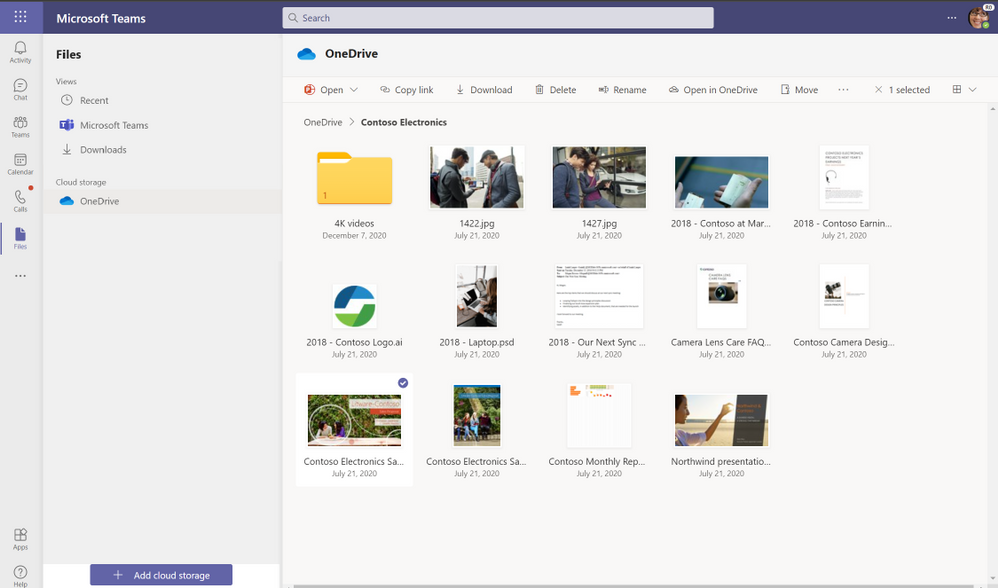

Let’s walk through a day of using OneDrive and Teams together for work. You’re preparing a PowerPoint presentation for a conference call with an important client, Contoso, later in the week. In the morning, you open Teams and select the Files list, which shows you Recent files you’ve been working on, your Microsoft Teams files, and Downloads, as well as any cloud storage services connected with Teams. OneDrive is the default files app for Microsoft 365. When you click on OneDrive, you can see all your individual files for work. You can quickly add, upload, and sync files right in Teams, or open in them directly OneDrive.

In the OneDrive view, you navigate to a folder called “Contoso Electronics” and open the Contoso Electronics Sales presentation draft you’ve been working on and recently shared with your manager for review via chat in Teams. When you share your OneDrive files in chat, each file has unique permissions granted based on the person or people in the chat—that way, you don’t have to worry about unintended recipients accessing your content.

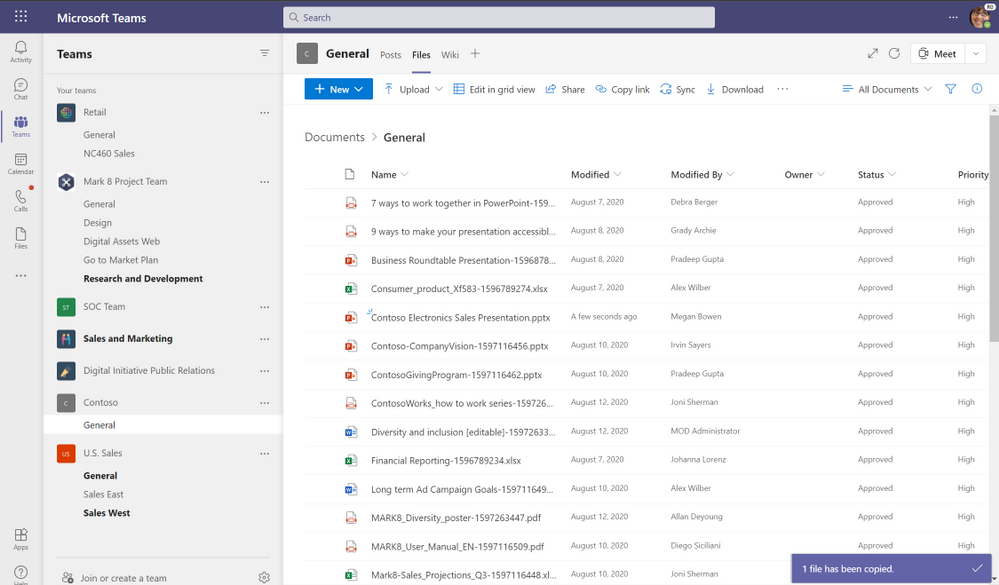

After you’ve reviewed your manager’s edits and updated the presentation, you decide it’s ready for the whole team to review. Your department has a team called “ Contoso” where you share and manage all deliverables for the client. Whenever a team is created, a corresponding Microsoft 365 Group and SharePoint shared library are also automatically created. All the files created in or uploaded to your Contoso folder are stored and backed up in the SharePoint library. You copy the presentation from your OneDrive to the documents folder within Contoso team and then select the Posts tab to leave a message to let everyone on your team know the file is ready for review. Leveraging OneDrive you can sync all the files in the Contoso shared library directly to your device so you can work on them while offline.

Due to the deep integration between OneDrive, Teams, and Office, your team can select from a variety of tools to annotate, highlight, and comment on content. They can use @mentions to flag comments and tasks for you or other reviewers, and they can also track version history and restore previous file versions as needed. And because all edits are synced and stored in the cloud, they can start editing a document on one device and finish it on another. When the afternoon team meeting to review the presentation begins, everyone can easily access the file to review each other’s comments and use co-authoring to finish editing the presentation together in real time.

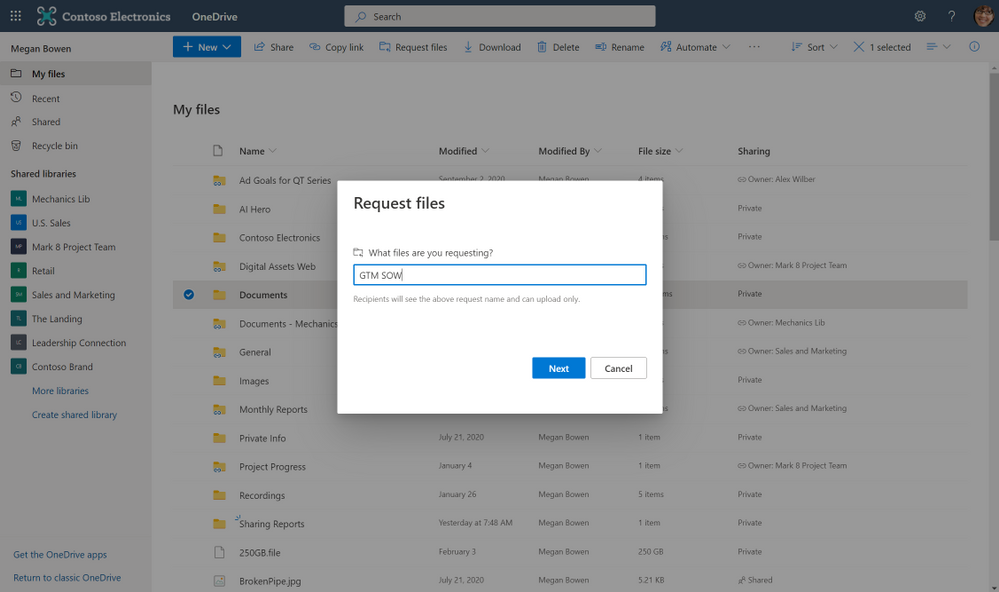

Later in the day, you’ve scheduled focus time to work on a project outlining the go-to market plan for a new product series. A co-worker has already started the outline and uploaded the document to the Go-To Market channel in Teams. You decide to work on the document at a park near your house, so you take your tablet. Since you had already added a shortcut to the Go To Market shared library using the Add to OneDrive feature, which brings all your shared content from OneDrive, Teams, and SharePoint into one place , you can swiftly fetch the outline and start working on the same right within your OneDrive app. You also realize that several GTM deliverables are missing approved vendor contracts. Using the file request feature in OneDrive you create an upload-only link and share the same in your “Partner Program“ team which consists of all your suppliers as guests members. This enables each vendor to upload their proposed scope of work directly to your OneDrive in a location that you chose—without having visibility to the other files in the folder.

Share files and collaborate securely, with peace of mind

While popular online storage apps integrate with Teams so you can access and share files, OneDrive provides a more secure sharing experience to help control data leakage and access to sensitive company information. Having your content in OneDrive enables you to share files as a link, internally and externally, so that every recipient has access to the most updated version. Depending on how your IT organization has configured sharing permissions in OneDrive and SharePoint, you can set permissions for who can access that link—anyone, people only inside the organization, specific people or people in the Teams group chat—and whether they can view or edit the file. You can also set expiration dates (for example, for outside vendors you don’t want accessing files or folders past a certain date) or set passwords to protect sensitive company or employee information. Blocking downloads on files also prevents recipients from saving files to their computers.

Sharing integration in Microsoft Teams

Sharing integration in Microsoft Teams

Work confidently, knowing that IT can protect your data

Exposure of sensitive company or client information can have serious legal and compliance implications. Today’s remote working environment can heighten these worries for IT, because people need to share information outside the bounds of a protected company environment. Teams and OneDrive not only provide coherent collaborative experiences for you but also bring consistency for the admins as they maintain the productivity apps. Instead of managing multiple third-party tools in silo the seamless integration between Teams and OneDrive empower admins to set governance and compliance policies at an organization level that can be extended to both OneDrive and Teams.

To keep you protected, IT can configure secure sharing policies in OneDrive which automatically gets adopted by Teams, ensuring you have the tools you need to collaborate securely and consistently.

IT can also use Microsoft Information Protection to create policies for automatic classification of sensitive data, so if you create a document that contains sensitive client data, that document will automatically be classified by the system and encrypted for additional protection. This takes the burden of worrying about security off you, letting you focus on getting work done. Using information barriers, IT can also restrict communication and collaboration in Teams or OneDrive between two departments or segments to avoid a conflict of interest from occurring or between certain people to safeguard internal information.

IT can also keep an eye on how you and your team interact with shared content, adding an extra layer of security and control. Through detailed audit logs and reports available in the Microsoft 365 Security and Compliance Center, IT can trace OneDrive activity at the folder, file, and user levels, so they can see at a glance if any unauthorized users have tried to access sensitive company or client information. Every user action, including changes and modifications made to files and folders, is recorded for a full audit trail. In addition, even remotely, IT has the device visibility and control that’s especially important for thwarting breaches and ransomware attacks.

To learn more about why Teams and OneDrive are better together and how you can streamline your workday, check out our latest episode on Sync Up- a OneDrive podcast where we talk with Cory Kincaid, a Customer Success Manager for Modern Work, who advises customers on how to use technologies like Teams and OneDrive to improve their business.

https://html5-player.libsyn.com/embed/episode/id/18023576/height/90/theme/custom/thumbnail/yes/direction/backward/render-playlist/no/custom-color/f99400/

Thank you for your time reading all about OneDrive,

Ankita Kirti

OneDrive

Carbon Negative

Under construction: Climeworks’ new large-scale direct air capture and storage plant, “Orca.” (Credit: Climeworks)

Avoiding worst case climate scenarios will require removing emissions from the atmosphere.

Like most organizations, Scope 3 accounts for the vast majority of Microsoft’s carbon emissions.

A deeper look at Microsoft’s FY21 Carbon Removal Portfolio

Recent Comments