by Contributed | Apr 29, 2021 | Technology

This article is contributed. See the original author and article here.

The Office Add-ins developer platform team has new updates to share this month on Office Add-ins Patterns and Practices. PnP is a community effort, so if you are interested in contributing, see our good first issue list.

Use Outlook event-based activation to set the signature (preview)

This sample uses event-based activation to run an Outlook add-in when the user creates a new message or appointment. The add-in can respond to events, even when the task pane is not open. It also uses the setSignatureAsync API. If no signature is set, the add-in prompts the user to set a signature, and can then open the task pane for the user.

Contributors

Thank you to our contributors who are actively helping each month with the PnP-OfficeAddins community effort.

Want to contribute?

PnP is a community effort by developers like you. Check out our good first issue list as a great place to help with some samples. Feel free to contribute to existing samples or create new ones.

About Office Add-ins Patterns & Practices (PnP)

Office Add-ins PnP is a Microsoft-led, community-driven effort that helps developers extend, build, and provision customizations on the Office platform the right way by providing guidance and help through official documentation and open-source initiatives. The source is maintained on GitHub, where anyone can participate. You can contribute to the samples, reusable components, and documentation. Office Add-ins PnP is owned and coordinated by Office engineering teams, but the work is done by the community for the community.

You can find code samples for Office Add-in development in the Office Add-ins PnP repo. Some samples are also documented in the Office Add-ins docs, such as Open in Excel.

Additional resources

Learn more by joining the monthly Office Add-ins community call.

by Contributed | Apr 29, 2021 | Technology

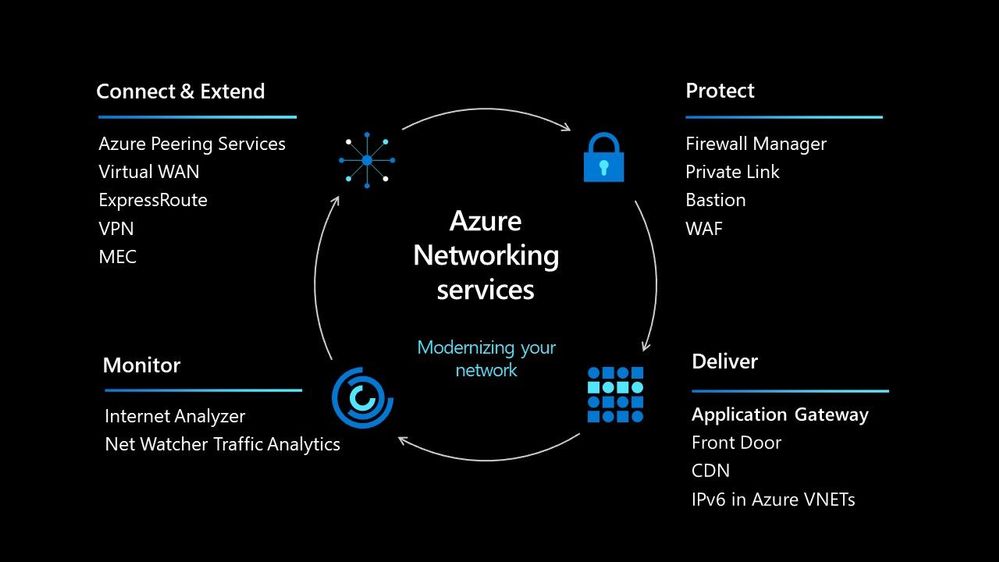

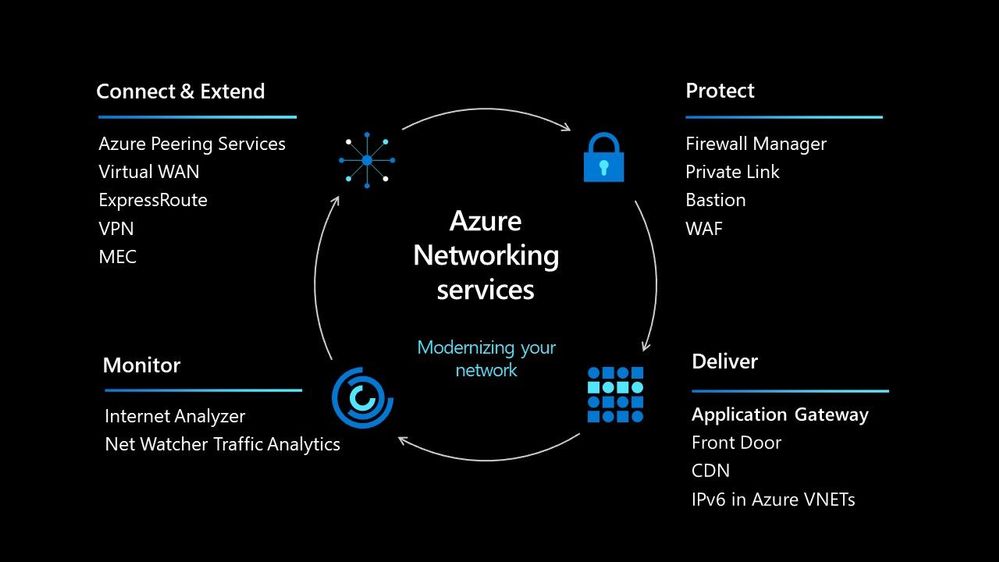

In today’s world we must be able to scale our workloads, whether that means an internal workload or an external workload serving your organisation’s customers. There are lots of options available for implementing that scale and handling the traffic to your workload. Let’s walk through some of the options within Azure and help you understand their use cases.

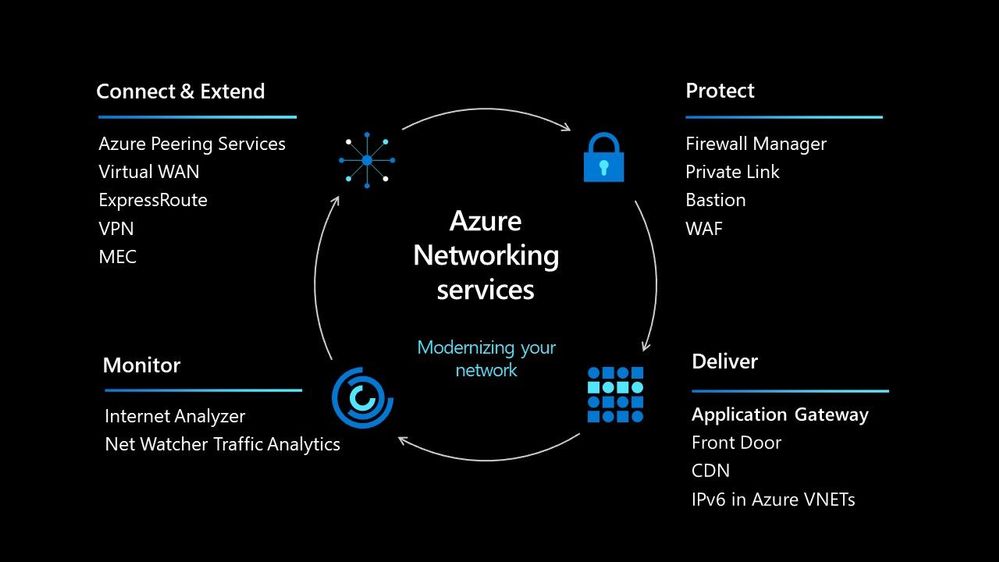

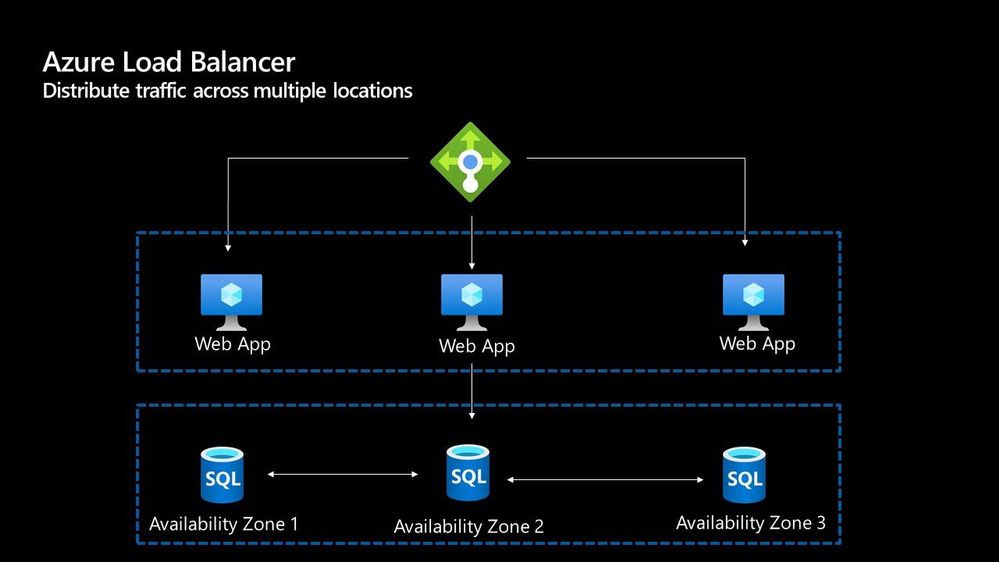

Azure Load Balancer

The Azure Load Balancer is one of the first options that you have to help deal with scaling your workload. It supports TCP/UDP based protocols such as HTTP, HTTPS and SMTP and other protocols used within real-time voice and video messaging applications. It helps you distribute traffic to your backend virtual machines and is a fully managed service.

With the load balancer you can distribute either external traffic or internal traffic. The Azure Load Balancer works at Layer 4 of the OSI model and is a transparent load balancer, meaning it won’t do anything to the packets that it receives and just sends them on to the endpoint chosen by the routing algorithm. The Azure Load Balancer has a lot of features. One that I love is the outbound connection feature, which allows you to configure all outbound traffic from your virtual network to the Internet so that it appears to come from the frontend IP of the load balancer.
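To make the Layer 4 behaviour concrete, here is a minimal sketch of hash-based distribution over a flow's five-tuple. It is an illustration only: the `Flow` type, the `pick_backend` function, the backend names, and the choice of SHA-256 are all assumptions for the example and do not reproduce how Azure Load Balancer computes its hash internally.

```python
import hashlib
from typing import NamedTuple, Sequence


class Flow(NamedTuple):
    """The five values that identify a TCP or UDP flow."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str  # "tcp" or "udp"


def pick_backend(flow: Flow, backends: Sequence[str]) -> str:
    """Choose a backend by hashing the flow's five-tuple.

    The packet is not modified; only the forwarding decision
    depends on the hash (hypothetical helper for illustration).
    """
    if not backends:
        raise ValueError("backend pool is empty")
    key = "|".join(str(value) for value in flow).encode("utf-8")
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


if __name__ == "__main__":
    pool = ["vm-a", "vm-b", "vm-c"]  # hypothetical backend names
    flow = Flow("203.0.113.7", 50123, "198.51.100.10", 443, "tcp")
    # Packets belonging to the same flow always map to the same backend.
    print(pick_backend(flow, pool) == pick_backend(flow, pool))
```

Because the decision depends only on the five-tuple, every packet of a given connection reaches the same virtual machine, while different connections spread across the pool.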

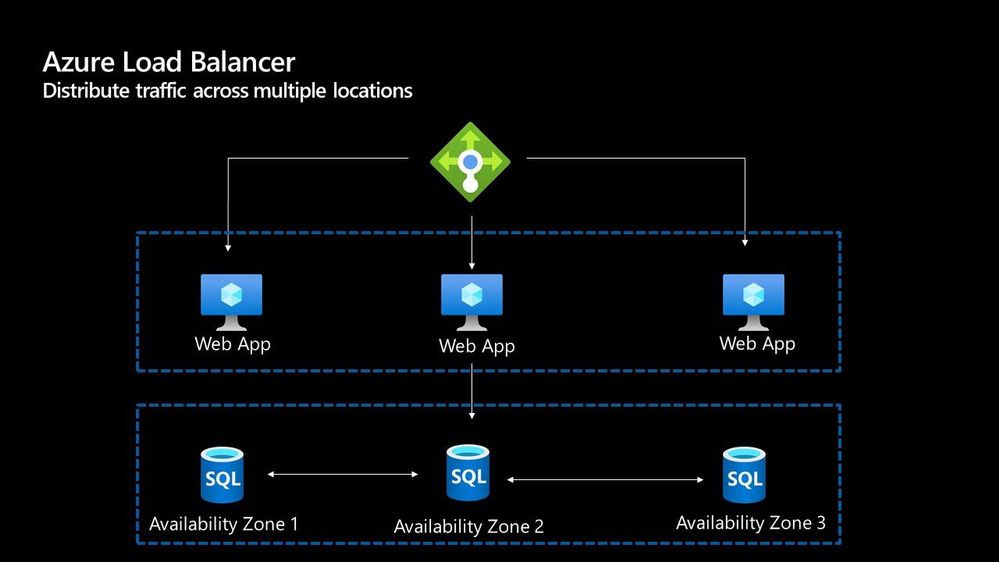

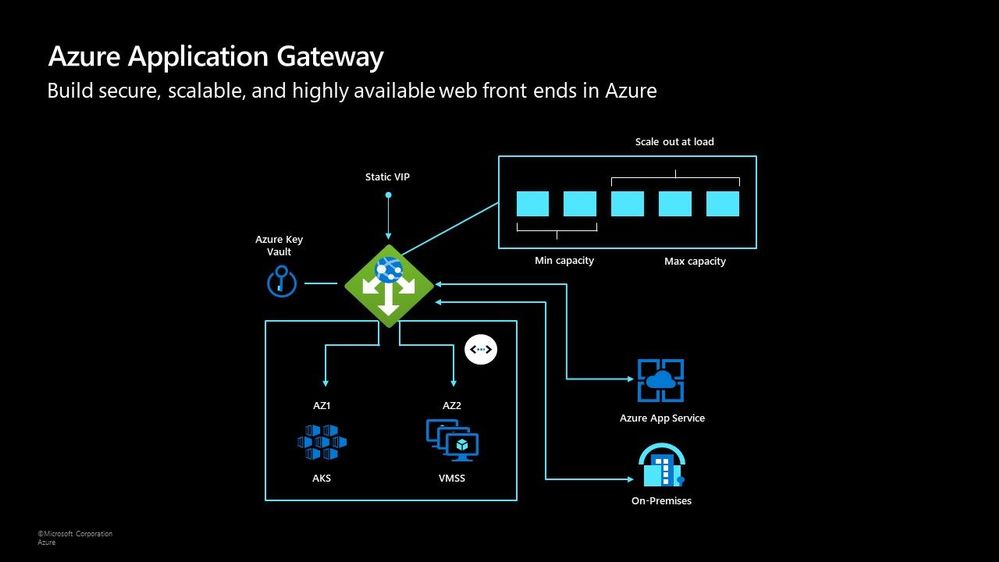

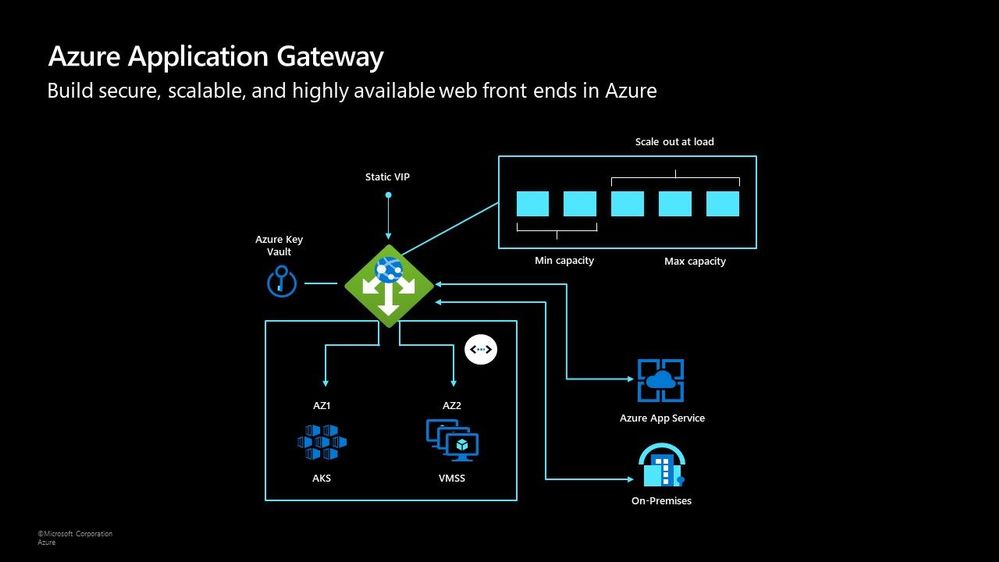

Azure Application Gateway

The Azure Application Gateway is an HTTP or HTTPS load balancer and behaves differently to the networking load balancer. It takes the connection coming into it, terminates it, and starts a new connection to the backend endpoint based on its routing algorithms. This opens up new possibilities such as redirections, URL rewrites, or header changes.

Two of my favourite features of the Azure Application Gateway are connection draining and autoscaling. Both help give you confidence of running your environment and help save on operational costs.

Connection draining helps you gracefully remove backend endpoints to carry out planned maintenance or upgrades. Autoscaling lets the Application Gateway scale out or in based on its traffic pattern. This not only helps to eliminate the guessing game of over-provisioning workloads, but also saves your operations teams from having to manually scale out or in when traffic spikes.
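The idea behind connection draining can be sketched in a few lines. This is a simplified model, not Application Gateway's implementation: the `Pool` and `Backend` classes and their method names are hypothetical, round-robin selection is an assumption, and a real gateway would also enforce a drain timeout, which is omitted here.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class Backend:
    name: str
    draining: bool = False
    active: int = 0  # number of in-flight connections


class Pool:
    """Hypothetical backend pool that supports draining."""

    def __init__(self, names: Iterable[str]) -> None:
        self.backends: Dict[str, Backend] = {n: Backend(n) for n in names}
        self._next = 0

    def start_drain(self, name: str) -> None:
        # Existing connections continue; new ones go elsewhere.
        self.backends[name].draining = True

    def open_connection(self) -> str:
        candidates = [b for b in self.backends.values() if not b.draining]
        if not candidates:
            raise RuntimeError("no backend is accepting new connections")
        chosen = candidates[self._next % len(candidates)]  # round-robin
        self._next += 1
        chosen.active += 1
        return chosen.name

    def close_connection(self, name: str) -> None:
        backend = self.backends[name]
        if backend.active == 0:
            raise ValueError(f"{name} has no open connections")
        backend.active -= 1

    def safe_to_remove(self, name: str) -> bool:
        backend = self.backends[name]
        return backend.draining and backend.active == 0


if __name__ == "__main__":
    pool = Pool(["web-1", "web-2"])  # hypothetical backend names
    first = pool.open_connection()
    pool.start_drain(first)
    print(pool.safe_to_remove(first))  # still serving one connection
    pool.close_connection(first)
    print(pool.safe_to_remove(first))  # drained, can be taken offline
```

The point of the model is the ordering: mark the backend as draining, let in-flight work finish, and only then take it out for maintenance.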

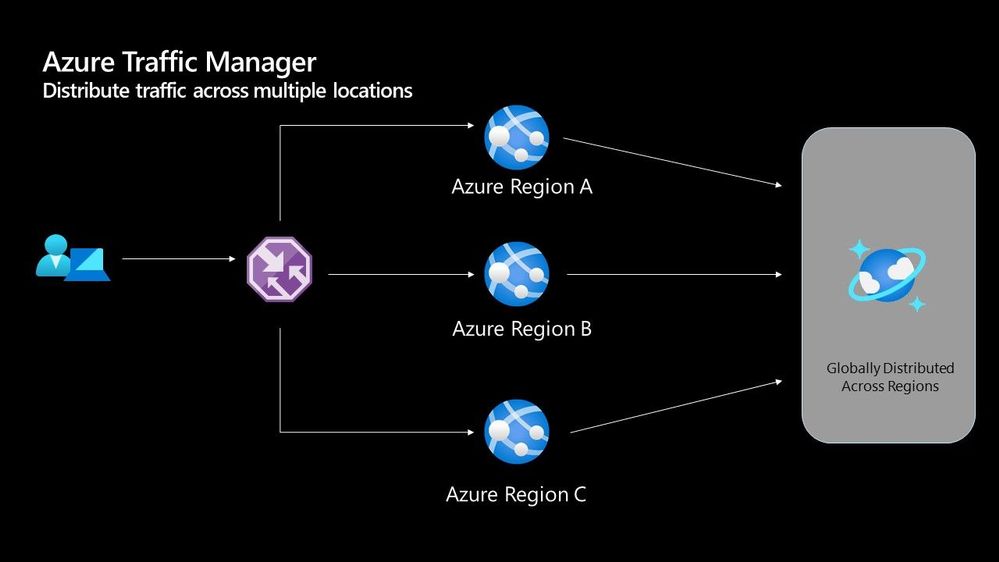

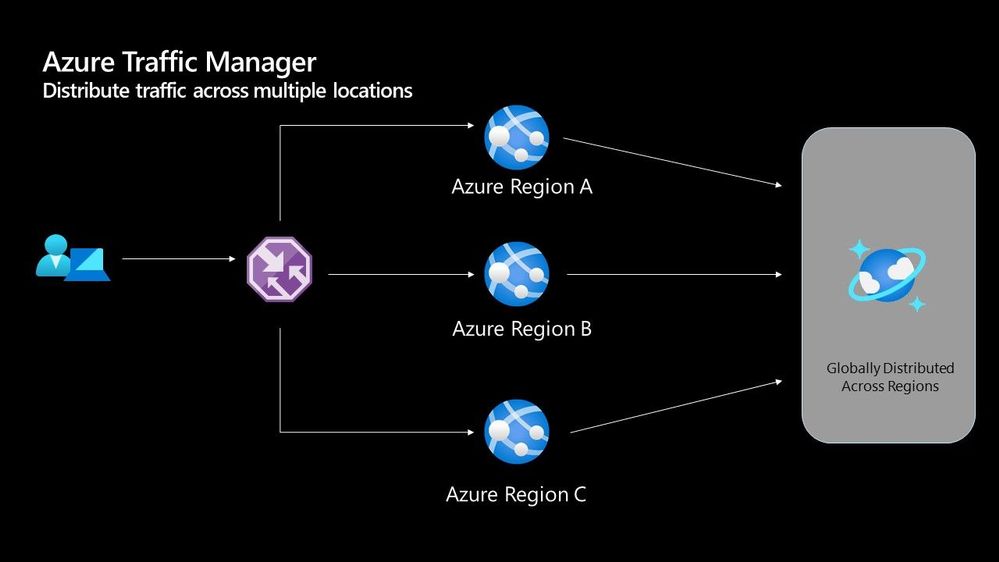

Azure Traffic Manager

The Azure Traffic Manager is a DNS-based load balancer that allows you to distribute traffic across your public-facing applications. Traffic Manager uses DNS to direct requests from your users to the appropriate endpoint based on the traffic-routing method that you have configured. Your endpoints can be any Internet-facing service hosted inside OR outside of Azure.
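As a rough illustration of DNS-based routing, the sketch below models one traffic-routing method, priority routing, where the healthy endpoint with the lowest priority number is returned to the client. The `Endpoint` type, the `resolve` function, and the hostnames are hypothetical; health probing is reduced to a boolean for the example.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Endpoint:
    hostname: str
    priority: int  # lower number means more preferred
    healthy: bool  # stand-in for the result of a health probe


def resolve(endpoints: Iterable[Endpoint]) -> Optional[str]:
    """Return the hostname a DNS query would be answered with.

    Only the name is returned; the client then connects to that
    endpoint directly (hypothetical helper for illustration).
    """
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        return None
    return min(healthy, key=lambda e: e.priority).hostname


if __name__ == "__main__":
    endpoints = [
        Endpoint("app-westeurope.example.com", priority=1, healthy=False),
        Endpoint("app-eastus.example.com", priority=2, healthy=True),
    ]
    # The primary is unhealthy, so the secondary is returned.
    print(resolve(endpoints))
```

Because the decision is made at DNS resolution time, failover depends on clients re-resolving the name, which is why DNS time-to-live settings matter for this kind of load balancing.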

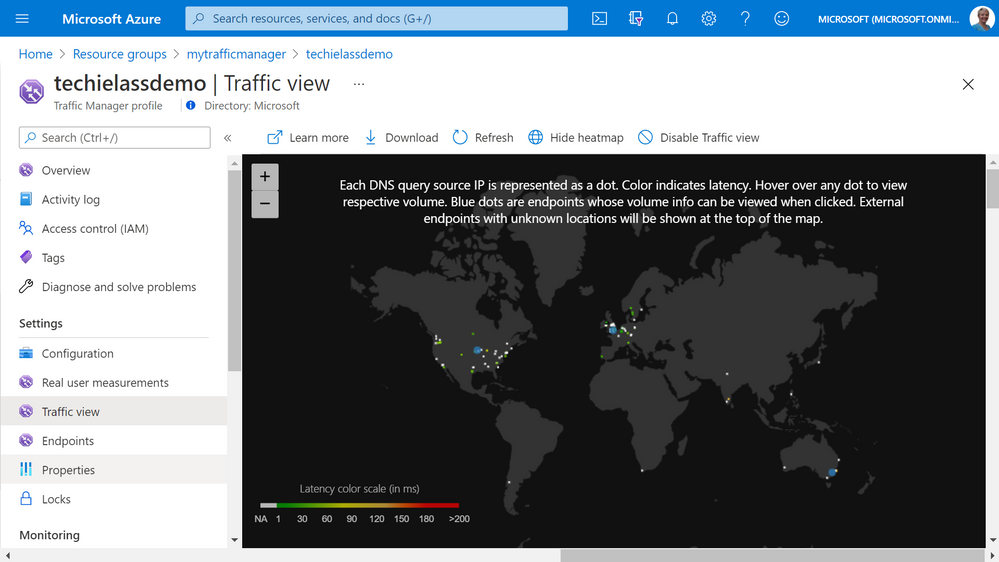

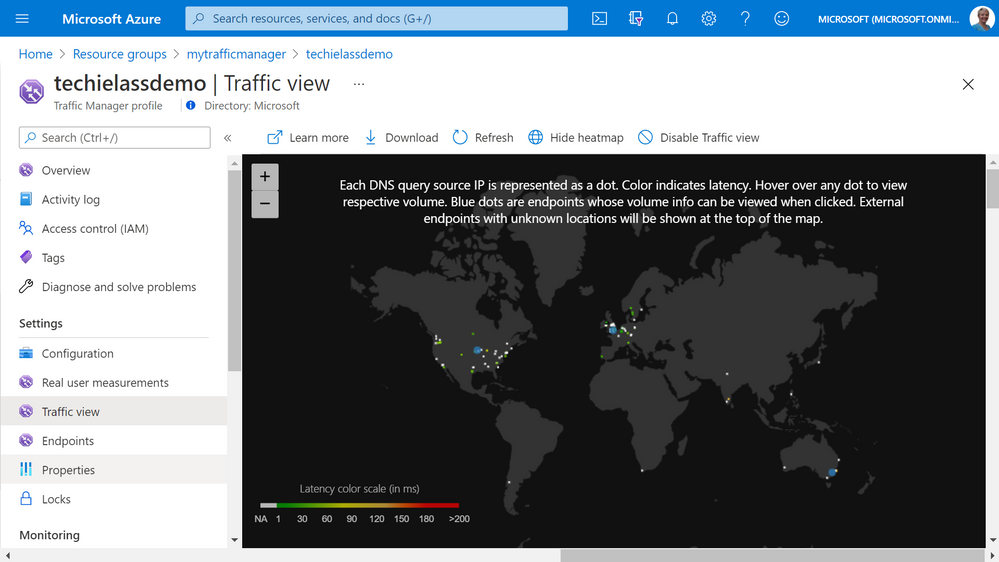

With Traffic Manager you can help to provide great performance to your end users with distributed endpoints throughout the globe. The features that I really enjoy are Real User Measurements and Traffic View. They can give you real insight into where your users are based and the performance they are experiencing, and the data that is collected can help you make informed decisions on how to develop your application in the future.

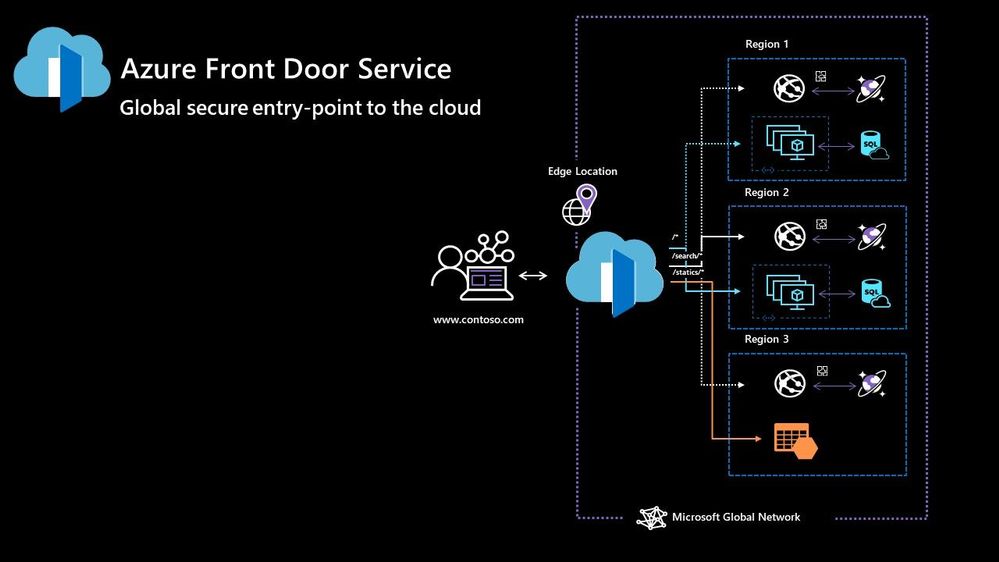

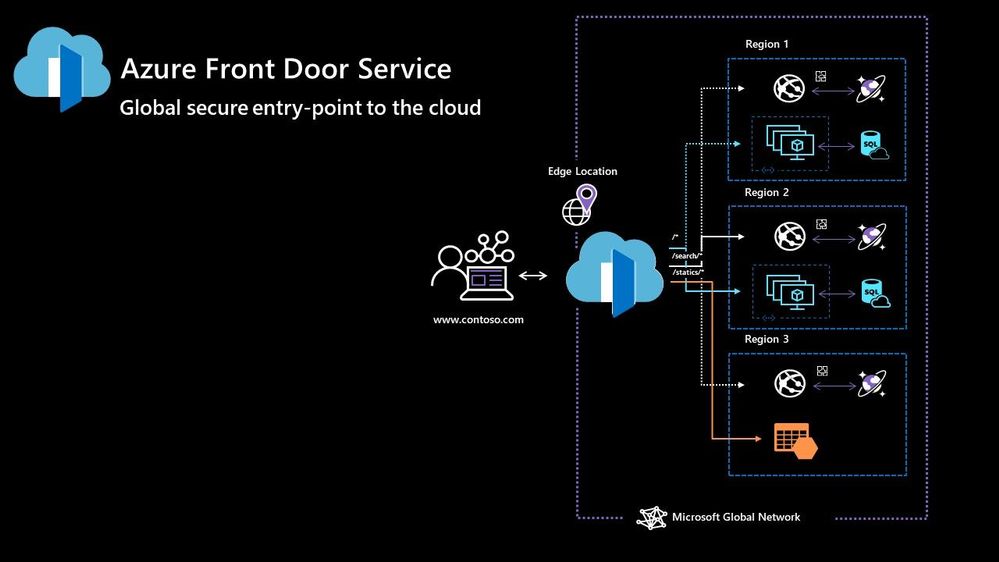

Azure Front Door

Azure Front Door works at Layer 7 of the OSI model and based on your routing method you can ensure that your customer is routed to the fastest and most available endpoint on offer. Like Traffic Manager, Front Door is resilient to failures, including failures to an entire Azure region. With Front Door you can offload SSL and certificate management, define a custom domain, and provide application security with integrated Web Application Firewall (WAF), with a ton of other features as well.

Your endpoints can be based within Azure or elsewhere. No matter where or what your endpoints are, Front Door will help provide the best experience for your end user.

Azure DDoS Protection Basic is integrated into the Front Door platform by default and helps to defend against the most common and frequently occurring Layer 7 DNS query floods and Layer 3 and 4 volumetric attacks that target public endpoints.

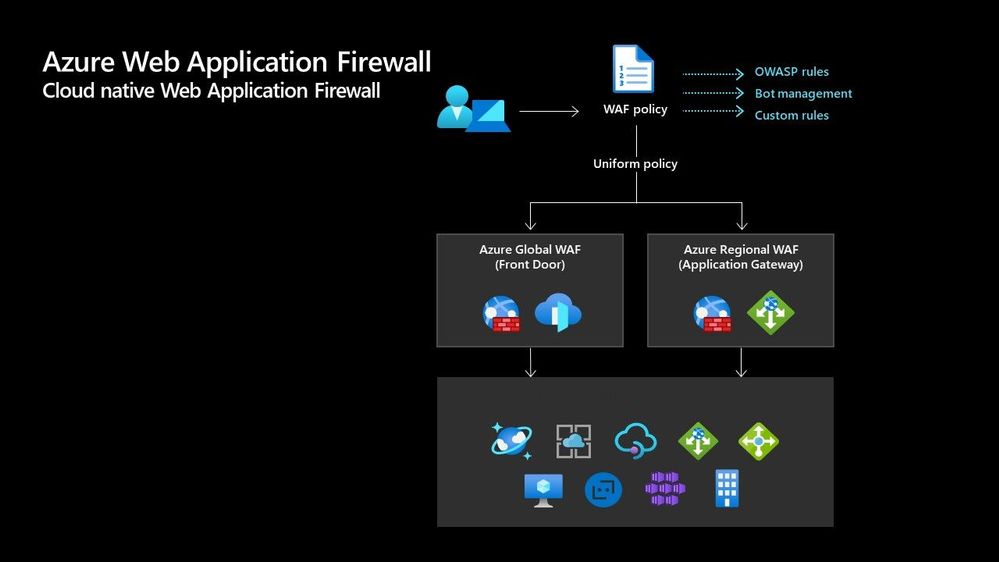

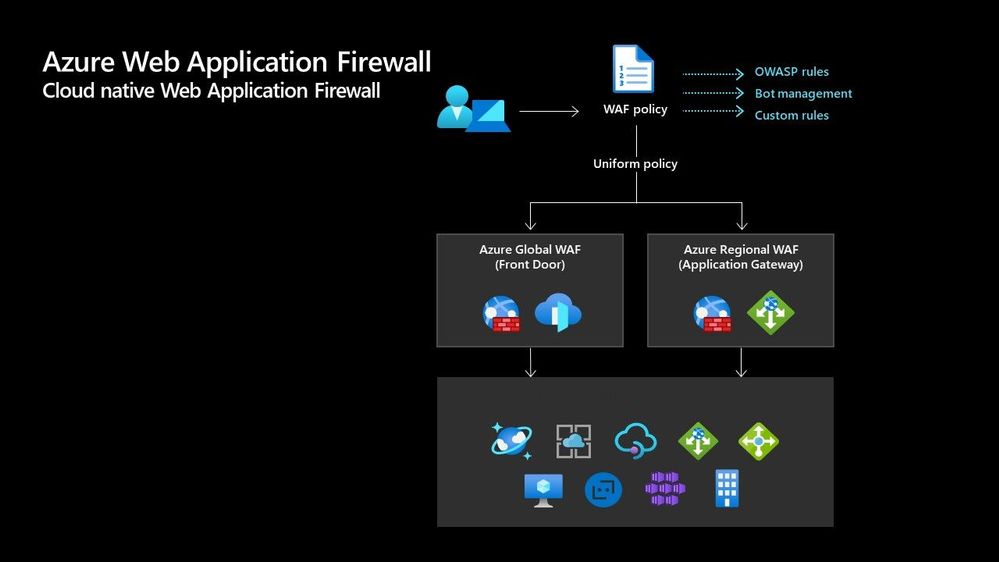

Azure Web Application Firewall

Azure Web Application Firewall (WAF) can also be used to protect your Front Door and Application Gateway implementations. Because the WAF policies are “global resources” you can apply the same policy to your Front Door and Application Gateway implementations and have a consistent approach to prevention and detection.

Which one?

Often it’s not a question of which one to use, because there are scenarios where you would use two of these options together to provide the best experience all round. A feature in preview within the Azure Portal walks you through some questions and gives advice on the best options for you: Load Balancing – help me choose.

Each of the load balancers has unique features and unique use cases and, as I said, they can often be used in combination. For examples of how to use them in different scenarios, be sure to check out the Azure Architecture Centre for reference architecture diagrams to get your imagination sparked!

by Contributed | Apr 28, 2021 | Technology

Hi All,

After 5 public previews I am thrilled to announce the release candidate build of AKS on Azure Stack HCI! If you have not tried out AKS on Azure Stack HCI there is no better time than the present. You can evaluate the AKS on Azure Stack HCI release candidate by registering for the Public Preview here: https://aka.ms/AKS-HCI-Evaluate (If you have already downloaded AKS on Azure Stack HCI – this evaluation link has now been updated with the release candidate).

The release candidate build has a number of fixes and improvements in response to the feedback that we have been receiving from y’all over the past months.

There is a lot to cover – but here are some highlights:

Networking improvements –

We have further extended our networking configuration options, so that you can now configure separate networks for each Kubernetes cluster you want to deploy. You can even place separate Kubernetes clusters on separate VLANs. With this release we are also now providing full support for Calico networking (in addition to our previous support for Flannel).

Storage improvements –

We are now including a new CSI storage driver that allows you to use SMB & NFS shares for read-write-many (ReadWriteMany) storage. This is in addition to our existing driver for VHDX-based storage. We have also made updates to our Linux worker nodes to enable the use of OpenEBS on top of AKS on Azure Stack HCI.

Updated Kubernetes Versions –

In this update we have updated the supported Kubernetes versions to:

- Linux: 1.17.13, 1.17.16, 1.18.10, 1.18.14, 1.19.6, 1.19.7

- Windows: 1.18.10, 1.18.14, 1.19.6, 1.19.7

We have done a lot of work “behind the scenes” to increase the reliability of deployment – and made numerous improvements to the usability of our PowerShell and Windows Admin Center based experiences.

Once you have downloaded and installed the AKS on Azure Stack HCI release candidate – you can report any issues you encounter, and track future feature work on our GitHub Project at https://github.com/Azure/aks-hci. And, like with past releases, if you do not have the hardware handy to evaluate AKS on Azure Stack HCI you can follow our guide for evaluating AKS-HCI inside an Azure VM: https://aka.ms/aks-hci-evalonazure.

I look forward to hearing from you all!

Cheers,

Ben

by Contributed | Apr 28, 2021 | Technology

Have you deployed multiple applications in your HCI cluster? Do you have application workloads in your cluster that need access to the Internet? Do you have remote users accessing application workloads in your HCI cluster? If the answer to any of these questions is yes, please read on to find out how you can protect your workloads from unauthorized access and cyber-attacks (Internal or External).

Problem: Need for network security and issues with traditional approaches

Network security is a top concern for organizations today. We are faced with increasing breaches, threats, and cyber risk. Cyber criminals are targeting high business impact data with sophisticated attacks designed to bypass perimeter controls. Once inside the company network, attackers are free to move from one system to another, in search of sensitive or personally identifiable information. These breaches can go unnoticed for a long time.

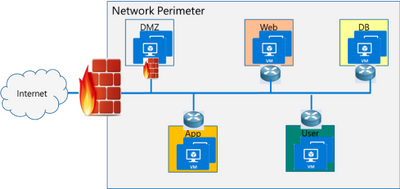

Network segmentation has been around for a long time as a way to isolate high-value data and systems. In traditional networks, security is set at the edge, where north-south communication takes place (interactions travelling in and out of the datacenter). This secures the intranet from the outside world, but offers little protection in the modern hybrid cloud world, where the perimeter has all but evaporated and a large portion of the traffic flows east-west, or server to server, between applications.

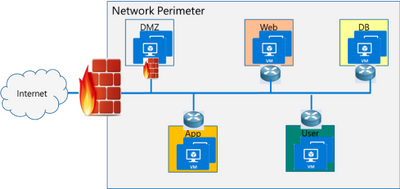

If you look at the topology below, endpoints can communicate with each other freely within VLANs or subnets behind a firewall. Infections on one of these servers are not contained and can easily spread to other servers.

Organizations may use physical firewalls to protect east-west traffic, but that has its own drawbacks. Sending east-west communication through a physical firewall creates significant network resource utilization bottlenecks. If firewall capacity is exhausted, security can be scaled only by replacing the firewall with a larger one or adding another physical firewall, which is cumbersome and expensive.

Moreover, the use of physical firewalls can also create additional latency for certain applications. All traffic must traverse a physical firewall to be segmented, even when residing on the same physical server.

Solution: Microsegmentation in Azure Stack HCI

The ideal solution for complete protection is to protect every traffic flow inside the data center with a firewall, allowing only the flows required for applications to function. This is the Zero Trust Model.

Microsegmentation is the concept of creating granular network policies between applications and services. This essentially reduces the security perimeter to a fence around each application or virtual machine. The fence can permit only necessary communication between application tiers or other logical boundaries, thus making it exceedingly difficult for cyber threats to spread laterally from one system to another. Logical boundaries can be completely custom. They can be your different environments: Dev, Test, Production. Or different types of applications, or different tiers within an application. This securely isolates networks from each other and reduces the total attack surface of a network security incident.

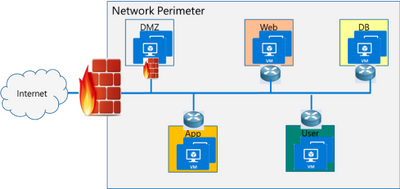

With Azure Stack HCI, you can define granular segmentation for your applications and workloads and protect them from both external and internal attacks. This is achieved through a distributed firewall, enabling administrators to define access control lists to restrict access for workloads attached to traditional VLAN networks and overlay networks. This is a network layer firewall, allowing or restricting access based on source and destination IP addresses, source and destination ports and network protocol. You can read more about this here.
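A network-layer ACL of this kind can be pictured as an ordered list of rules matched against each flow. The sketch below is a conceptual model only: the `AclRule` type, the `evaluate` function, the rule ordering (lowest priority number first), and the default action when nothing matches are assumptions for illustration, and source ports are left out for brevity. It is not the Network Controller API or its rule schema.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class AclRule:
    priority: int            # assumption: lower number is evaluated first
    action: str              # "Allow" or "Deny"
    protocol: str            # "TCP", "UDP" or "All"
    src_prefix: str          # CIDR such as "10.0.1.0/24", or "*" for any
    dst_prefix: str          # CIDR or "*"
    dst_port: Optional[int]  # None matches any destination port

    def matches(self, protocol: str, src_ip: str, dst_ip: str, dst_port: int) -> bool:
        if self.protocol != "All" and self.protocol != protocol:
            return False
        if self.dst_port is not None and self.dst_port != dst_port:
            return False
        return _in_prefix(src_ip, self.src_prefix) and _in_prefix(dst_ip, self.dst_prefix)


def _in_prefix(ip: str, prefix: str) -> bool:
    if prefix == "*":
        return True
    return ipaddress.ip_address(ip) in ipaddress.ip_network(prefix)


def evaluate(rules: Iterable[AclRule], protocol: str, src_ip: str,
             dst_ip: str, dst_port: int, default: str = "Deny") -> str:
    """Return the action of the first matching rule, else the default."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.matches(protocol, src_ip, dst_ip, dst_port):
            return rule.action
    return default


if __name__ == "__main__":
    # Hypothetical policy: only the web tier may reach the database tier on TCP 1433.
    rules = [
        AclRule(100, "Allow", "TCP", "10.0.1.0/24", "10.0.2.0/24", 1433),
        AclRule(200, "Deny", "All", "*", "10.0.2.0/24", None),
    ]
    print(evaluate(rules, "TCP", "10.0.1.5", "10.0.2.9", 1433))
    print(evaluate(rules, "TCP", "10.0.3.5", "10.0.2.9", 1433))
```

In this example the fence around the database tier admits exactly one flow from the web tier, which is the lateral-movement restriction that microsegmentation is meant to provide.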

IMPORTANT: The microsegmentation policies can be applied to all Azure Stack HCI workloads attached to traditional VLAN networks.

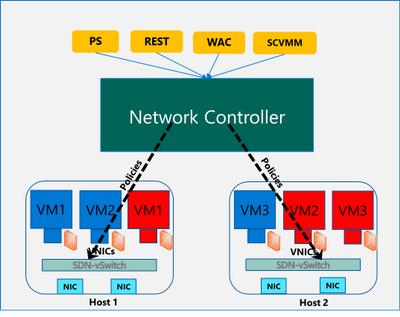

The firewall policies are configured through the management plane. You have multiple options here: the standard REST interface, PowerShell, Windows Admin Center (WAC) and System Center Virtual Machine Manager (SCVMM). The management plane sends the policies to a centralized control plane, Network Controller, which ships as a Server role in Azure Stack HCI OS. Network Controller pushes the policies to all the applicable Hyper-V hosts, and the policies are plumbed at the vSwitch port of virtual machines. Network Controller also ensures that the policies stay in sync, and any drift is remediated.

Configure and manage microsegmentation in Azure Stack HCI

There are two high-level steps to configure microsegmentation for HCI. First, you need to set up the Network Controller, and then configure microsegmentation policies.

Set up Network Controller

Network Controller can be set up using SDN Express PowerShell scripts, the Windows Admin Center (WAC), or System Center Virtual Machine Manager (SCVMM).

Windows Admin Center

If you are deploying HCI for the first time, you can use the Windows Admin Center deployment wizard to set up Network Controller. SDN deployment is Step 5 of the wizard. This deploys the Network Controller component of SDN. Detailed instructions for launching this wizard, setting up the HCI cluster and setting up Network Controller are provided here. In the default case, you need to provide only two pieces of input:

- Path to the Azure Stack HCI OS vhdx file. This is used to deploy the Network Controller VMs.

- Credentials to join the Network Controller VMs to the domain and local admin credentials.

If you do not have DHCP configured on your management network, you will need to provide static IP addresses for the Network Controller VMs. A demo of the SDN setup is provided below:

SDN Express Scripts

If you already have an existing HCI cluster, you cannot deploy SDN through Windows Admin Center today. This support is coming soon.

In this case, you can deploy the Network Controller (NC) component of SDN using SDN Express scripts. The scripts are available in the official Microsoft SDN GitHub repository. The scripts need to be downloaded and executed on a machine which has access to the HCI cluster management network. Detailed instructions for executing the script are provided here.

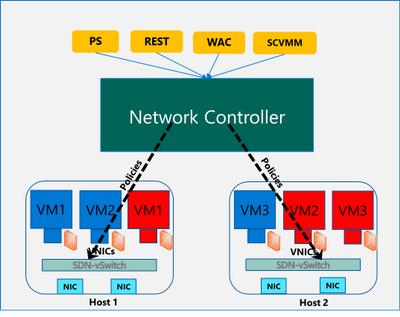

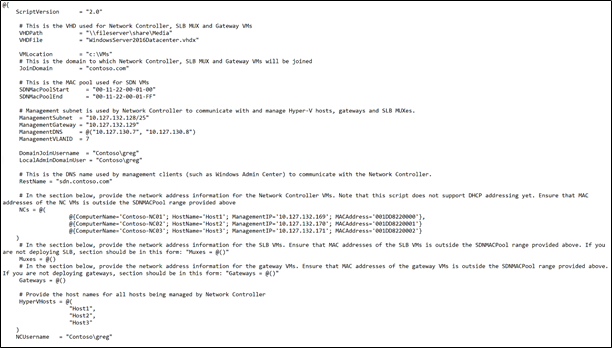

The script takes a configuration file as input. A template file can be found in the GitHub repository here. You will need to provide or change the following parameters to set up Network Controller for microsegmentation on traditional HCI VLAN networks:

- VHDPath: VHDX file path used by NC VMs. Script must have access to this file path.

- VHDFile: VHDX file name used by NC VMs.

- JoinDomain: domain to which NC VMs are joined.

- Management network details (ManagementSubnet, ManagementGateway, ManagementDNS, ManagementVLANID): This is the management network of the HCI cluster.

- DomainJoinUsername: Username to join NC VMs to the domain

- LocalAdminDomainUser: Domain user for NC VMs who is also local admin on the NC VMs

- RestName: DNS name used by management clients (such as Windows Admin Center) to communicate with NC

- Details of NC VMs (ComputerName, HostName, ManagementIP, MACAddress): Name of NC VMs, Host name of Server where NC VMs are located, management network IP Address of NC VMs, MAC address of NC VMs

- HyperVhosts: Host server names in the HCI cluster

- NCUserName: NC Administrator account. Should have permission to do everything needed by someone administering the NC (primarily configuration and remoting). Usually, this can be same as LocalAdminDomainUser account.

Some other important points:

- The parameters VMLocation, SDNMacPoolStart, SDNMacPoolEnd can use default values.

- The following sections should be blank: Muxes, Gateways (Muxes = @())

- If you are deploying microsegmentation for VLAN networks, you should keep the PA network details section blank. Otherwise, if you are deploying overlay networks, please fill in that section.

- The rest of the parameters below the PA network section can be commented out.

A sample file is shown below:

Configure Microsegmentation Policies

Once Network Controller is set up, you can go ahead and deploy your microsegmentation policies.

- The first step is to create a logical network for your workloads hosted on VLAN networks. This is documented here.

- Next, you need to create the security ACL rules that you want to apply to your workloads. This is documented here.

- Once the ACL rules have been created, you can apply them to the network or a network interface.

- For applying ACLs to a traditional VLAN network, see instructions here.

- For applying ACLs to a virtual network, see instructions here.

- For applying ACLs to a network interface, see instructions here.

After the ACL rules have been applied to the network, all virtual machines in that network will get the policies and will have restricted access based on the rules. If the ACL rule has been applied to a network interface, the network interface will get the policies and will have restricted access based on the rules.

So, as you can see, with microsegmentation, you can protect every traffic flow in your HCI cluster, allowing only the flows required for your applications to function. Please try this out and give us feedback at sdn_feedback@microsoft.com. Feel free to reach out for any questions as well.

by Contributed | Apr 28, 2021 | Technology

We have released a new early technical preview of the JDBC Driver for SQL Server which contains a few additions and changes.

Precompiled binaries are available on GitHub and also on Maven Central.

Below is a summary of the new additions and changes.

Added

- Added replication connection option #1566

Fixed

- Fixed an issue where trustStorePassword is null when using applicationIntent=ReadOnly #1565

- Fixed an issue where redirected token contains named instance in servername #1568

Getting the latest release

The latest bits are available on our GitHub repository, and Maven Central.

Add the JDBC preview driver to your Maven project by adding the following code to your POM file to include it as a dependency in your project (choose .jre8, .jre11, or .jre15 for your required Java version).

    <dependency>
        <groupId>com.microsoft.sqlserver</groupId>
        <artifactId>mssql-jdbc</artifactId>
        <version>9.3.1.jre11</version>
    </dependency>

Help us improve the JDBC Driver by taking our survey, filing issues on GitHub or contributing to the project.

Please also check out our tutorials to get started with developing apps in your programming language of choice and SQL Server.

David Engel
