Enable read-aloud for your application with Azure neural TTS


This post is co-authored with Yulin Li, Yinhe Wei, Qinying Liao, Yueying Liu, Sheng Zhao

Voice is becoming increasingly popular in providing useful and engaging experiences for customers and employees. The Text-to-Speech (TTS) capability of Speech on Azure Cognitive Services allows you to quickly create an intelligent read-aloud experience for your scenarios.

In this blog, we’ll walk through an exercise that you can complete in under two hours to get started with Azure neural TTS voices and enable your apps to read content aloud. We’ll provide high-level guidance and sample code to get you started, and we encourage you to play around with the code and get creative with your solution!

What is read-aloud

Read-aloud is a modern way to help people read and consume content such as emails and Word documents more easily. It is a popular feature in many Microsoft products and has received highly positive user feedback. A few recent examples:

- Play My Emails: In Outlook for iOS, users can listen to their incoming email during the commute to the office. They can choose between a female and a male voice to read the email aloud whenever their hands are busy with other things.

- Edge read aloud: In the recent Chromium-based Edge browser, people can listen to web pages or PDF documents while multitasking. The read-aloud voice quality has been enhanced with Azure neural TTS, and it has become the ‘favorite’ feature for many (Read the full article).

- Immersive Reader: A free tool that uses proven techniques to improve reading for people regardless of their age or ability. It has adopted Azure neural voices to read content aloud to students.

- Listen to Word documents on mobile: An eyes-off, potentially hands-off modern consumption experience for those who want to multitask on the go. Specifically, this feature supports a longer listening scenario for document consumption and is now available in Word on Android and iOS.

With all these examples and more, we’ve seen a clear trend toward providing voice experiences for users who consume content on the go, while multitasking, or who prefer listening to reading. With Azure neural TTS, it is easy to implement your own read-aloud experience that is pleasant for your users to listen to.

The benefit of using Azure neural TTS for read-aloud

Azure neural TTS allows you to choose from more than 140 highly realistic voices across 60 languages and variants. These voices enable fluid, natural-sounding speech and come with rich customization capabilities.

High AI quality

Why is neural TTS so much better? Traditional TTS is a complex, multi-step pipeline. Each step can involve human input, expert rules, or individual models. There is no end-to-end optimization across the steps, so the overall quality is not optimal. AI-based neural TTS technology simplifies the pipeline into three major components, each of which can be modeled by deep neural networks: a neural text analysis module, which generates more accurate pronunciations for TTS to speak; a neural acoustic model, such as uni-TTS, which predicts prosody much better than traditional TTS; and a neural vocoder, such as hifiNet, which creates audio with higher fidelity.

With all these components, Azure neural TTS makes the listening experience much more enjoyable than traditional TTS. Our studies repeatedly show that a read-aloud experience built on the highly natural voices of the Azure neural TTS platform significantly increases the time people spend listening to synthetic speech continuously, and greatly improves how effectively they consume the audio content.

Broad locale coverage

Usually, the reading content is available in many different languages. To read aloud more content and reach more users, TTS needs to support various locales. Azure neural TTS now supports more than 60 languages off the shelf. Check out the details in the full language list.

By offering more voices across more languages and locales, we anticipate developers across the world will be able to build applications that change experiences for millions. With our innovative voice models in the low-resource setting, we can also extend to new languages much faster than ever.

Rich speaking styles

Azure neural TTS provides a rich choice of styles that resonate with your content. For example, the newscast style is optimized for reading news content in a professional tone. The customer service style helps you create a friendlier reading experience for conversational content focused on customer support. In addition, various emotional styles and role-play capabilities can be used to create vivid audiobooks in synthetic voices.

Here are some examples of the voices and styles used for different types of content.

| Language | Content type | Sample | Note |
|---|---|---|---|
| English (US) | Newscast | Aria, in the newscast style | |
| English (US) | Newscast | Guy, in the general/default style | |
| English (US) | Conversational | Jenny, in the chat style | |
| English (US) | Audiobook | Jenny, in multiple styles | |
| Chinese (Mandarin, simplified) | Newscast | Yunyang, in the newscast style | |
| Chinese (Mandarin, simplified) | Conversational | Yunxi, in the assistant style | |
| Chinese (Mandarin, simplified) | Audiobook | Multiple voices used: Xiaoxiao and Yunxi. Different styles used: lyrical, calm, angry, disgruntled, angry, embarrassed, with different style degrees applied | |

These styles can be adjusted using SSML, together with other tuning capabilities, including rate, pitch, pronunciation, pauses, and more.
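As a minimal sketch of how a style and prosody settings might be combined in SSML, the helper below builds an SSML string for a given voice. The function names `escapeXml` and `buildSsml`, the option names, and the sample sentence are hypothetical and only for illustration; the voice name `en-US-AriaNeural` and the `newscast` style are assumed to be available in your region.

```javascript
// Escape characters that are special in XML so the text cannot break the SSML.
function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build an SSML document that applies an optional speaking style plus rate and pitch.
// buildSsml and its option names are hypothetical helpers for this sketch.
function buildSsml({ text, voice, lang = "en-US", style, rate = "+0%", pitch = "+0%" }) {
  const prosody =
    `<prosody rate="${rate}" pitch="${pitch}">${escapeXml(text)}</prosody>`;
  // Only wrap in mstts:express-as when a style was requested.
  const body = style
    ? `<mstts:express-as style="${style}">${prosody}</mstts:express-as>`
    : prosody;
  return (
    `<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" ` +
    `xmlns:mstts="https://www.w3.org/2001/mstts" xml:lang="${lang}">` +
    `<voice name="${voice}">${body}</voice></speak>`
  );
}

// Example: a slightly faster newscast reading (voice name is an assumption).
const newsSsml = buildSsml({
  text: "Markets closed higher today.",
  voice: "en-US-AriaNeural",
  style: "newscast",
  rate: "+10%",
});
console.log(newsSsml);
```

With the Speech SDK for JavaScript, a string like this would typically be passed to the synthesizer's `speakSsmlAsync` method instead of plain text.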

Powerful customization capabilities

Besides the rich choice of prebuilt neural voices, Azure TTS provides a powerful capability to create a one-of-a-kind custom voice that differentiates your brand from others. Using Custom Neural Voice, you can build a highly realistic voice with less than 30 minutes of audio as training data. You can then use your custom voice to create a unique read-aloud experience that reflects your brand identity or resonates with the characteristics of your content.

Next, we’ll walk you through the coding exercise of developing the read-aloud feature with Azure neural TTS.

How to build read-aloud features with your app

It is easy to add read-aloud to your application with Azure neural TTS and the Speech SDK. Below we describe two typical designs that enable read-aloud in different scenarios.

Prerequisites

If you don’t have an Azure subscription, create a free account before you begin. If you have a subscription, log in to the Azure Portal and create a Speech resource.

Client-side read-aloud

In this design, the client interacts directly with Azure TTS using the Speech SDK. The following steps and JavaScript code samples show the basic process for implementing read-aloud.

Step 1: Create the synthesizer

First, create the synthesizer with the selected language and voices. Make sure you select a neural voice to get the best quality.

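    // Create the speech config from an authorization token and the resource region.
    const config = SpeechSDK.SpeechConfig.fromAuthorizationToken("YourAuthorizationToken", "YourSubscriptionRegion");
    // `voice` holds the name of the selected neural voice.
    config.speechSynthesisVoiceName = voice;
    config.speechSynthesisOutputFormat = SpeechSDK.SpeechSynthesisOutputFormat.Riff24Khz16BitMonoPcm;

    // Route the synthesized audio to the default speaker through a player object.
    const player = new SpeechSDK.SpeakerAudioDestination();
    const audioConfig = SpeechSDK.AudioConfig.fromSpeakerOutput(player);
    const synthesizer = new SpeechSDK.SpeechSynthesizer(config, audioConfig);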

Then you can hook up events, such as the player's end-of-audio event shown here, to update the UX while read-aloud is on.

    // Called when the player has finished playing all the audio.
    player.onAudioEnd = function (_) {
        window.console.log("playback finished");
    };

Step 2: Collect word boundary events

The word boundary event is fired during synthesis. Usually, synthesis is much faster than audio playback, and the word boundary event is fired before you get the corresponding audio chunks. The application can collect the events, including the audio time stamp information, for the next step.

    // Collect word boundary events in the order they are fired.
    const wordBoundaryList = [];
    synthesizer.wordBoundary = function (s, e) {
        window.console.log(e);
        wordBoundaryList.push(e);
    };

Step 3: Highlight word boundary during audio playback

You can then highlight the word as the audio plays, using the code sample below.

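    // Every 50 ms, find the last word whose audio offset has been reached and highlight it.
    setInterval(function () {
        if (player !== undefined) {
            const currentTime = player.currentTime; // playback position in seconds
            let wordBoundary;
            for (const e of wordBoundaryList) {
                // audioOffset is in 100-nanosecond ticks; dividing by 10000 gives milliseconds.
                if (currentTime * 1000 > e.audioOffset / 10000) {
                    wordBoundary = e;
                } else {
                    break;
                }
            }
            if (wordBoundary !== undefined) {
                // Wrap the current word in a span; the "highlight" class is assumed to be styled in the page's CSS.
                highlightDiv.innerHTML = synthesisText.value.substr(0, wordBoundary.textOffset) +
                    "<span class='highlight'>" + wordBoundary.text + "</span>" +
                    synthesisText.value.substr(wordBoundary.textOffset + wordBoundary.wordLength);
            } else {
                highlightDiv.innerHTML = synthesisText.value;
            }
        }
    }, 50);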

See the full example here for more details.
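The lookup and rendering in Step 3 can also be factored into two small pure functions, which makes them easier to test outside the browser. This is only a sketch under stated assumptions: the names `findCurrentBoundary`, `escapeHtml`, and `renderHighlight` are hypothetical, the boundary list is assumed to be sorted by `audioOffset` (which holds when events are pushed in the order they fire), and the `highlight` CSS class is assumed to exist in your page. Escaping the text before it is written to `innerHTML` is an extra precaution that the step above does not take.

```javascript
// audioOffset is reported in 100-nanosecond ticks; 10,000 ticks make one millisecond.
const TICKS_PER_MS = 10000;

// Binary search for the last boundary whose audio offset lies before the playback
// position. Returns undefined if playback has not reached the first word yet.
function findCurrentBoundary(boundaries, currentTimeSeconds) {
  const currentMs = currentTimeSeconds * 1000;
  let lo = 0;
  let hi = boundaries.length - 1;
  let found;
  while (lo <= hi) {
    const mid = (lo + hi) >> 1;
    if (boundaries[mid].audioOffset / TICKS_PER_MS < currentMs) {
      found = boundaries[mid];
      lo = mid + 1; // a later boundary may also qualify
    } else {
      hi = mid - 1;
    }
  }
  return found;
}

// Escape text so that it is displayed literally when assigned to innerHTML.
function escapeHtml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Return HTML for the full text with the current word wrapped in a highlight span.
function renderHighlight(text, boundary) {
  if (!boundary) {
    return escapeHtml(text);
  }
  const start = boundary.textOffset;
  const end = start + boundary.wordLength;
  return (
    escapeHtml(text.slice(0, start)) +
    '<span class="highlight">' + escapeHtml(text.slice(start, end)) + "</span>" +
    escapeHtml(text.slice(end))
  );
}
```

Inside the timer callback, the two calls would be combined as `highlightDiv.innerHTML = renderHighlight(synthesisText.value, findCurrentBoundary(wordBoundaryList, player.currentTime))`.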

Server-side read-aloud

In this design, the client interacts with a middle layer service, which then interacts with Azure TTS through the Speech SDK. It suits the following scenarios:

- The authentication secret (e.g., the subscription key) must be kept on the server side.

- There is additional related business logic, such as text preprocessing or audio postprocessing.

- There is already a service that interacts with the client application.
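For the first scenario, one possible approach is for the middle layer to exchange the subscription key for a short-lived authorization token and hand only the token to the client, which can then use it with `fromAuthorizationToken`. The sketch below builds that request against the Speech service token endpoint. The helper names `buildTokenRequest` and `fetchSpeechToken` are hypothetical, and the code assumes a runtime with a global `fetch` (browsers or Node.js 18 and later).

```javascript
// Build the request that exchanges a Speech subscription key for an
// authorization token. Only the middle layer should ever see the key.
function buildTokenRequest(region, subscriptionKey) {
  return {
    url: `https://${region}.api.cognitive.microsoft.com/sts/v1.0/issueToken`,
    options: {
      method: "POST",
      headers: { "Ocp-Apim-Subscription-Key": subscriptionKey },
    },
  };
}

// Request a token. fetchImpl defaults to the global fetch and can be replaced
// with a stub in tests.
async function fetchSpeechToken(region, subscriptionKey, fetchImpl = fetch) {
  const { url, options } = buildTokenRequest(region, subscriptionKey);
  const response = await fetchImpl(url, options);
  if (!response.ok) {
    throw new Error(`Token request failed with status ${response.status}`);
  }
  // The token is returned as plain text in the response body.
  return response.text();
}
```

Because the token expires after a short time, the middle layer would need to issue a fresh one periodically; how often to refresh is a design choice left to your service.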

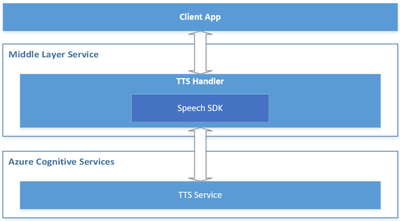

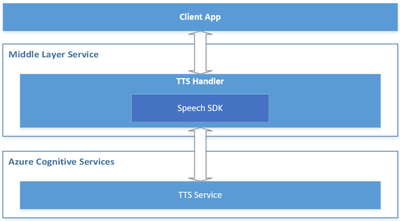

Below is a reference architecture for such a design:

Reference architecture design for the server-side read-aloud


The role of each component in this architecture is described below.

- Azure Cognitive Services – TTS: the cloud API provided by Microsoft Azure, which converts text to human-like natural speech.

- Middle Layer Service: the service built by you or your organization, which serves your client app by hosting the cross-device / cross-platform business logic.

- TTS Handler: the component that handles TTS-related business logic. It has the following responsibilities:

  - Wraps the Speech SDK to call the Azure TTS API.

  - Receives the text from the client app, preprocesses it if necessary, and sends it to the Azure TTS API through the Speech SDK.

  - Receives the audio stream and the TTS events (e.g., word boundary events) from Azure TTS, postprocesses them if necessary, and sends them to the client app.

- Client App: your app running on the client side, which interacts with end users directly. It has the following responsibilities:

  - Sends the text to your service (“Middle Layer Service”).

  - Receives the audio stream and TTS events from your service (“Middle Layer Service”), and plays the audio to your end users, with UI rendering such as real-time text highlighting driven by the word boundary events.

Check here for the sample code to call the Azure TTS API from a server.

Compared with the client-side read-aloud design, the server-side read-aloud is a more advanced solution. It can cost more, but it is better suited to handling complicated requirements.
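The TTS Handler responsibilities described above can be sketched as a small function that chains preprocessing, synthesis, and postprocessing. Everything here is hypothetical: `createTtsHandler` and its option names are illustrative, and `synthesize` stands in for your own wrapper around the Speech SDK, assumed to resolve to an object holding the audio and the collected word boundary events.

```javascript
// Create a handler that takes raw text from the client app and returns the
// synthesized result. The three steps are injected so that each can be
// replaced or tested on its own.
function createTtsHandler({
  synthesize,                          // async (text) => { audio, wordBoundaries }
  preprocess = (text) => text.trim(),  // for example, strip markup or normalize whitespace
  postprocess = (result) => result,    // for example, transcode audio or filter events
}) {
  return async function handle(text) {
    const cleaned = preprocess(text);
    if (!cleaned) {
      throw new Error("Nothing to synthesize");
    }
    const result = await synthesize(cleaned);
    return postprocess(result);
  };
}

// Example wiring with a stub in place of the real Speech SDK wrapper.
const demoHandler = createTtsHandler({
  synthesize: async (text) => ({ audio: new Uint8Array(0), wordBoundaries: [], text }),
});
demoHandler("  Hello from the middle layer.  ").then((result) => {
  console.log(result.text);
});
```

Keeping the Speech SDK behind an injected function is one design option among several; it lets the surrounding service logic be exercised without calling Azure TTS.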

Recommended practices for building a read-aloud experience

The section above shows you how to build a read-aloud feature in the client-side and server-side scenarios. Below are some recommended practices that can make your development more efficient and improve your service experience.

Segmentation

When the content to read is long, it’s good practice to segment it into sentences or short paragraphs and send one segment per request. Such segmentation has several benefits.

- The response is faster for shorter content.

- Long synthesized audio consumes more memory.

- Azure speech synthesis API requires the synthesized audio length to be less than 10 minutes. If your audio exceeds 10 minutes, it will be truncated to 10 minutes.

Using the Speech SDK’s PullAudioOutputStream, the synthesized audio from each turn can be easily merged into one stream.
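As a minimal sketch of the segmentation step, the function below splits text at sentence-ending punctuation and groups sentences into segments of at most `maxChars` characters. The name `segmentText` and the default limit of 500 characters are assumptions for illustration, not values recommended by the service. The splitting rule is deliberately naive: abbreviations and decimal numbers are treated as sentence ends, and a single sentence longer than the limit is kept whole.

```javascript
// Split text into segments of at most maxChars characters, breaking only
// after sentence-ending punctuation (Latin or CJK).
function segmentText(text, maxChars = 500) {
  // Each match is either a run ending in punctuation (plus trailing spaces)
  // or the unpunctuated tail of the text.
  const sentences = text.match(/[^.!?。！？]*[.!?。！？]+\s*|[^.!?。！？]+$/g) || [];
  const segments = [];
  let current = "";
  for (const sentence of sentences) {
    // Start a new segment when adding this sentence would exceed the limit.
    if (current && current.length + sentence.length > maxChars) {
      segments.push(current.trim());
      current = "";
    }
    current += sentence;
  }
  if (current.trim()) {
    segments.push(current.trim());
  }
  return segments;
}

// Example: each returned segment would be sent as one synthesis request.
console.log(segmentText("First sentence. Second sentence! A third one?", 20));
```

Each segment can then be synthesized in order, with the resulting audio played back to back or merged as described above.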

Streaming

Streaming is critical for lowering latency. When the first audio chunk is available, you can start the playback or start forwarding the audio chunks to your clients immediately. The Speech SDK provides PullAudioOutputStream, PushAudioOutputStream, the Synthesizing event, and AudioDataStream for streaming. You can select the one that best suits the architecture of your application. Find the samples here.

In addition, with the stream objects of the Speech SDK, you can get a seekable in-memory audio stream, which works easily with any downstream services.
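As one illustration of the Synthesizing event option, the sketch below forwards each audio chunk to a callback as soon as it arrives. The helper name `forwardAudioChunks` and its callbacks are hypothetical. The `synthesizer` argument is assumed to be a Speech SDK for JavaScript `SpeechSynthesizer`, whose `synthesizing` and `synthesisCompleted` handlers receive an event with `result.audioData`; the exact content of each chunk (for example, whether a container header is included) depends on the SDK version and output format, so check the SDK reference before relying on it.

```javascript
// Attach handlers that pass every audio chunk to onChunk while synthesis is
// still running, and call onDone once synthesis has completed.
function forwardAudioChunks(synthesizer, onChunk, onDone) {
  synthesizer.synthesizing = function (_sender, event) {
    // audioData is an ArrayBuffer holding the newly received audio.
    onChunk(event.result.audioData);
  };
  synthesizer.synthesisCompleted = function (_sender, _event) {
    if (onDone) {
      onDone();
    }
  };
}

// Example with a stand-in object; a real SpeechSynthesizer would fire these
// handlers itself after speakTextAsync or speakSsmlAsync is called.
const fakeSynthesizer = {};
forwardAudioChunks(
  fakeSynthesizer,
  (chunk) => console.log(`forwarding ${chunk.byteLength} bytes`),
  () => console.log("synthesis completed")
);
fakeSynthesizer.synthesizing(fakeSynthesizer, { result: { audioData: new ArrayBuffer(1024) } });
fakeSynthesizer.synthesisCompleted(fakeSynthesizer, {});
```

In a middle layer service, `onChunk` would typically write the bytes to the open response or socket for the client, so that playback can begin before the whole segment has been synthesized.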

Tell us your experiences!

Whether you are building a voice-enabled chatbot or IoT device, an IVR solution, adding read-aloud features to your app, converting e-books to audio books, or even adding Speech to a translation app, you can make all these experiences natural sounding and fun with Neural TTS.

Let us know how you are using or plan to use Neural TTS voices in this form. If you prefer, you can also contact us at mstts [at] microsoft.com. We look forward to hearing about your experience and developing more compelling services together with you for the developers around the world.

Get started

Add voice to your app in 15 minutes

Explore the available voices in this demo

Deploy Azure TTS voices on prem with Speech Containers

Learn more about other Speech scenarios
