by Contributed | Apr 27, 2021 | Technology

This article is contributed. See the original author and article here.

Final Update: Wednesday, 28 April 2021 02:39 UTC

We’ve confirmed that all systems are back to normal with no customer impact as of 04/27, 02:10 UTC. Our logs show the incident started on 04/27, 01:46 UTC and that during the 24 minutes that it took to resolve the issue 5% of customers experienced data access issues and delayed or missed alerts in South East Australia.

- Root Cause: Engineers determined that a backend storage device became unhealthy.

- Mitigation: Engineers determined that the service was automatically recovered by the Azure platform.

- Incident Timeline: 24 minutes – 04/27, 01:46 UTC through 04/27, 02:10 UTC
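
As a quick check of the timeline above, the 24-minute duration follows directly from the two timestamps. The snippet below is a minimal sketch, assuming the year 2021 from the update header; the variable names are illustrative only.

```python
from datetime import datetime, timezone

# Incident start and end as reported in the timeline (UTC).
start = datetime(2021, 4, 27, 1, 46, tzinfo=timezone.utc)
end = datetime(2021, 4, 27, 2, 10, tzinfo=timezone.utc)

# Whole minutes between the two timestamps.
duration_minutes = int((end - start).total_seconds() // 60)
print(duration_minutes)  # prints 24
```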

We understand that customers rely on Azure Log Analytics as a critical service and apologize for any impact this incident caused.

-Vincent

by Contributed | Apr 27, 2021 | Technology


Welcome to your single resource for ways to learn and communities to join to always stay up-to-date on Azure Storage.

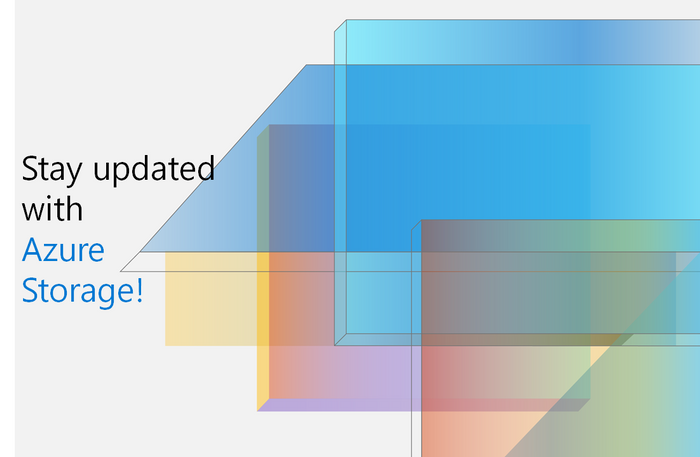

Microsoft Learn

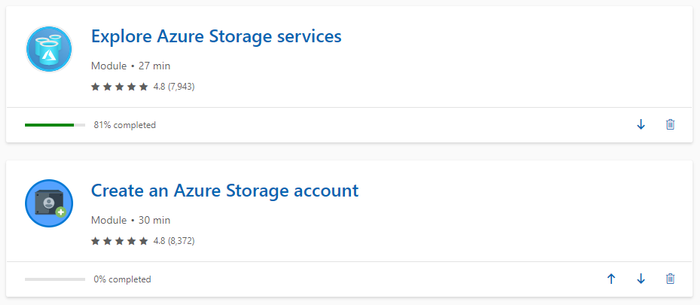

Azure Storage Learning Paths & Modules

Explore Azure Storage in-depth through guided paths and learn how to accomplish a specific task through individual modules.

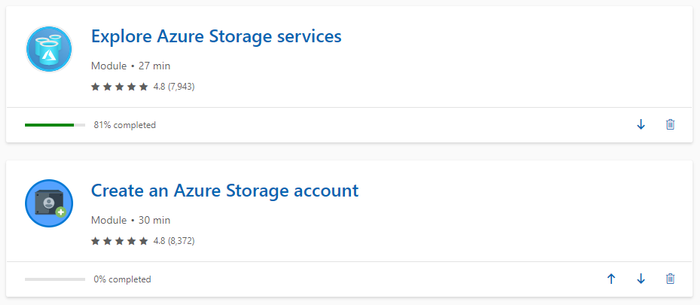

Technical Videos

Azure Storage on the Microsoft Azure YouTube Channel

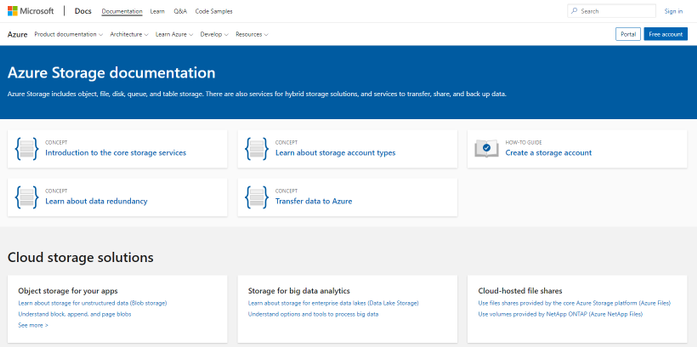

Azure Storage Documentation

Check out technical documentation, frequently asked questions, and learning resources on the Azure Storage Documentation page.

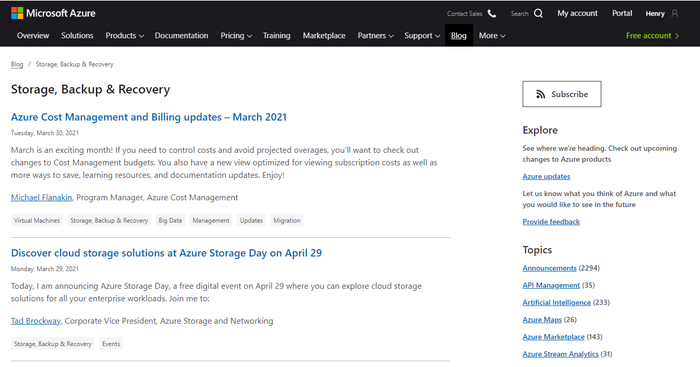

Azure Storage Blogs

Stay up to date on the latest news, product updates and announcements on the Azure Storage blog.

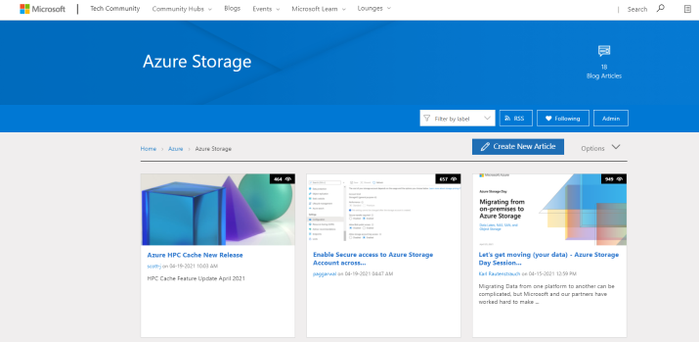

Azure Storage Tech Community

Share best practices, get the latest news, and learn from experts about Azure Storage here.

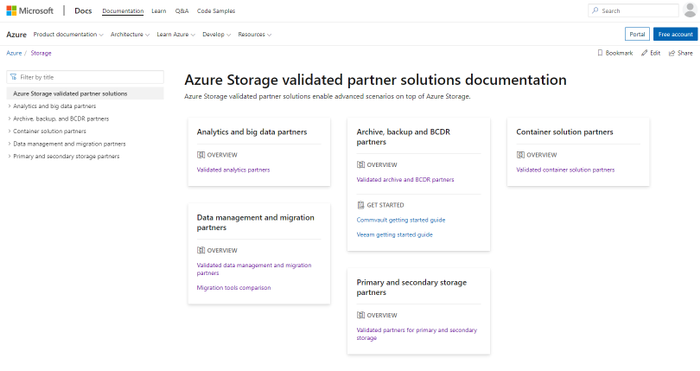

Azure Storage Partners

Discover the wide range of Azure Storage partner solutions that enable advanced scenarios on top of Azure Storage services.

With all these resources and content, we are excited to see what you build with Azure Storage!

by Contributed | Apr 27, 2021 | Technology


According to a recent study, cloud misconfigurations take an average of 25 days to fix. This number can be even higher if you are managing the cloud security posture across multiple providers without an aggregate view of the current security state of all cloud workloads. Not only does it become a challenge to understand the current security state, but you also have to manage multiple dashboards and prioritize which issues should be resolved first.

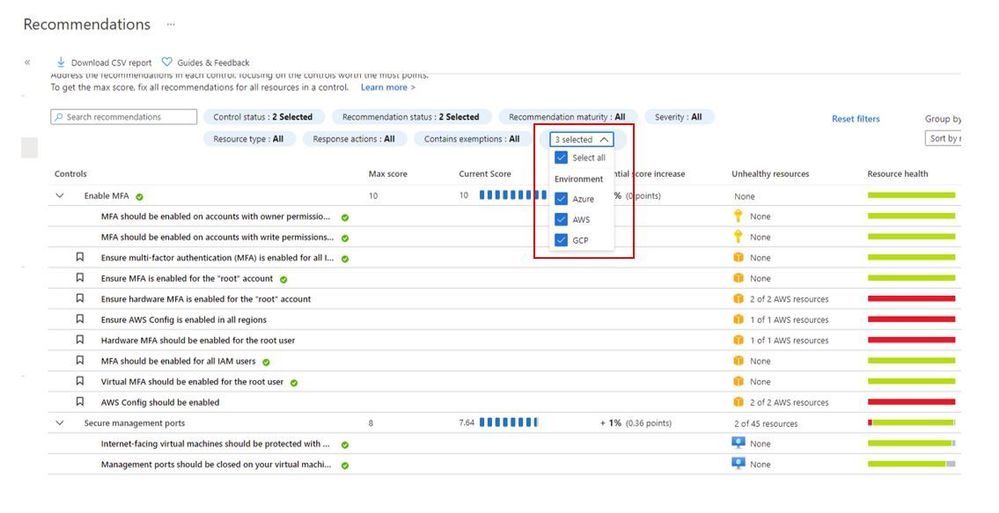

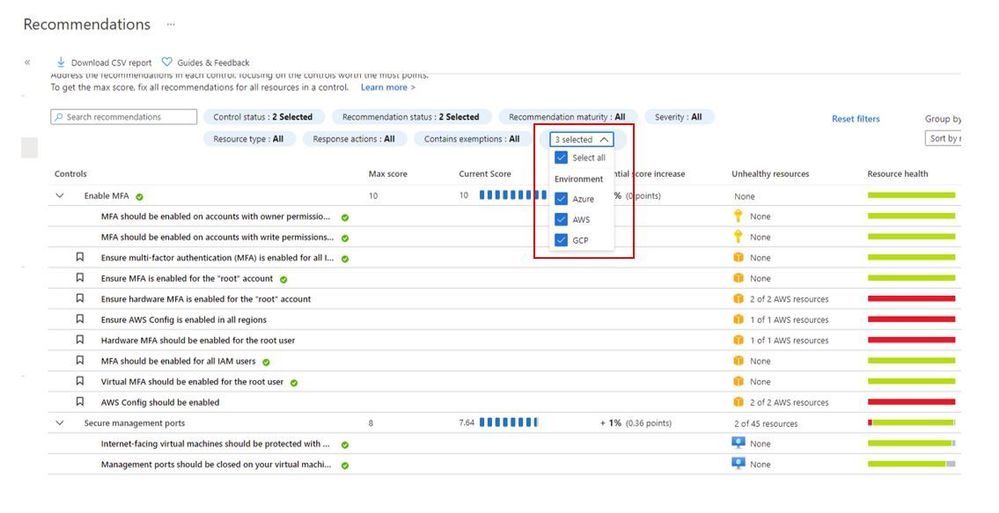

When you upgrade from the Azure Security Center free tier to Azure Defender, you will be able to connect to AWS and GCP using native Azure Defender connectors. Once you connect to each cloud provider, you can use the Security Recommendations to quickly filter the environment and see only the recommendations that are relevant for the cloud provider that you want, as shown below:

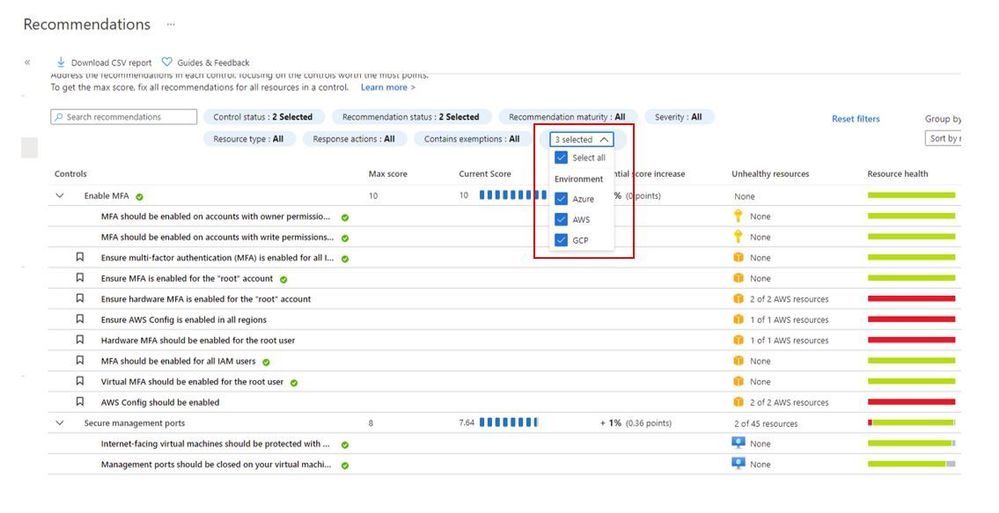

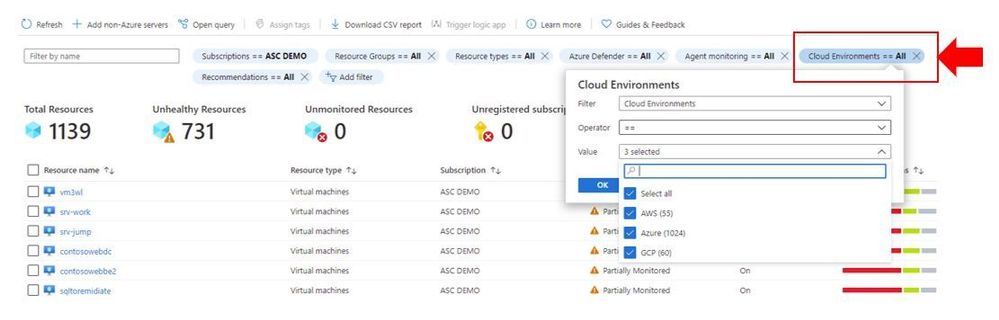

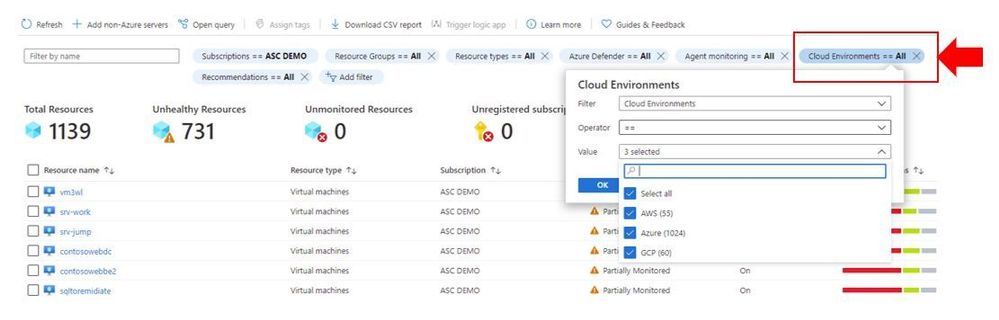

You can also quickly identify resources on each cloud provider in the Inventory dashboard by using the Cloud Environment filter, as shown below:

In addition to all that, you can also take advantage of centralized automation by leveraging the Workflow Automation feature to automate response for security recommendations generated in Azure, AWS or GCP.

Cloud security posture management and workload protection

The security recommendations are relevant for the cloud security posture management scenario, which means that you drive the enhancement of your security posture across multiple cloud providers by remediating those recommendations. However, this is not the only scenario available for multi-cloud: you can also use Azure Defender plans to enhance your workload protection. When planning cloud workload protection for workloads in AWS and GCP, make sure to first enable the VMs to use Azure Arc. Once you do that, the following Azure Defender plans will be available across Azure, AWS and GCP:

The alerts generated by workloads protected by those plans are surfaced in the Security Alerts dashboard in Azure Defender, which means that you again have a single dashboard to visualize alerts across different cloud providers. These alerts can be streamed to your SIEM platform using the Continuous Export feature in Azure Security Center.

Design considerations

Prior to implementing your multi-cloud adoption using Azure Defender, it is important to consider the following aspects:

When connecting with AWS

- An account is onboarded to a subscription, and that subscription must have Azure Defender for Servers enabled

- The VMs under this account will automatically be onboarded to Azure using Azure Arc, and will be covered by Defender (list of supported OS)

- Arc cost is included with Defender (you won’t pay twice)

- To receive the security recommendations, you will need to enable AWS Security Hub on the accounts you want to onboard

- Security Hub is a paid service whose cost can vary depending on how many accounts and regions it is enabled on (please refer to AWS official pricing)

When connecting with GCP

- Same requirement for Defender enabled on the subscription

- Servers are not onboarded automatically, and will need to be onboarded through Arc (Arc onboarding guide)

- To receive security recommendations, you will need to enable GCP Security Command Center

- Google Security Command Center has two pricing tiers: standard (free) and premium

- Free tier includes ~12 recommendations, premium around 120

- Premium tier costs 5% of annual spending in GCP (please refer to GCP official pricing)

Additional Resources

The resources below will be useful for you to implement this multi-cloud capability in Azure Defender:

Reviewer

Or Serok Jeppa, Program Manager

by Contributed | Apr 27, 2021 | Technology


Active geo-replication for Azure SQL Hyperscale now in preview

Business continuity is a key requirement for any business-critical system. Not having a disaster recovery plan in place can expose organizations to great financial loss, reputation damage and customer churn.

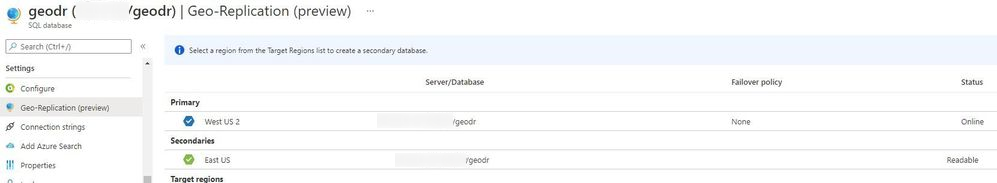

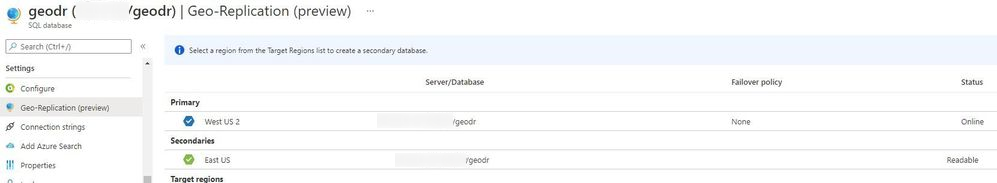

We are excited to announce the preview release of Active geo-replication for the Azure SQL Database Hyperscale tier. The Azure SQL geo-replication feature provides the ability to create a readable secondary database in the same or in a different region. In the case of a regional disaster, failover to the secondary can be initiated to maintain business continuity.

The Hyperscale service tier supports up to 100 TB of database size, rapid scale (out and up) and nearly instantaneous database backups, removing the limits traditionally seen in cloud databases.

How does geo-replication work for Hyperscale?

When you create a geo-replica for Hyperscale, all data is copied from the primary to a different set of page servers. A geo-replica does not share page servers with the primary, even if they are in the same region. This provides the necessary redundancy for geo-failovers.

Current preview limitations:

- Only one geo-secondary in the same or a different region

- Only forced failover supported

- Using a geo-replica as the source database for Database Copy, or as the primary for another geo-secondary is not supported

- Restore database from geo-secondary not supported

- Currently no support for Auto-failover groups

We are working on addressing these limitations to have Hyperscale with the same Active geo-replication capabilities that we have for other Azure SQL service tiers including Auto-failover groups support.

Available regions

Active Geo-replication for Hyperscale will be supported in all regions where Azure SQL Hyperscale is supported.

Quick Start

a. Configure from Portal using the Geo Replication blade

b. Configure using Azure CLI

c. Configure using PowerShell
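
For the Azure CLI option in the list above, the following is a minimal sketch of how the command line might be assembled from Python. It assumes the `az sql db replica create` command with its `--resource-group`, `--server`, `--name`, `--partner-server` and `--partner-resource-group` options; the article does not show the exact command, so treat this as an illustration rather than the documented Hyperscale procedure. The function name and all resource names are hypothetical, and the preview may require options not shown here.

```python
import shlex


def build_geo_replica_command(resource_group, server, database,
                              partner_server, partner_resource_group=None):
    """Build the argument list for creating a geo-secondary with Azure CLI.

    Hypothetical helper: it only assembles the command and does not run it.
    """
    argv = [
        "az", "sql", "db", "replica", "create",
        "--resource-group", resource_group,
        "--server", server,
        "--name", database,
        "--partner-server", partner_server,
    ]
    # The partner resource group is only needed when it differs from the primary's.
    if partner_resource_group:
        argv += ["--partner-resource-group", partner_resource_group]
    return argv


if __name__ == "__main__":
    # All names below are placeholders, not real resources.
    cmd = build_geo_replica_command(
        "primary-rg", "primary-server", "hyperscale-db",
        "secondary-server", "secondary-rg",
    )
    print(shlex.join(cmd))
```

Returning an argument list rather than a single string keeps quoting out of the picture; the list can be passed to `subprocess.run` once you have confirmed the options against the current Azure CLI reference.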

To learn more, see:

https://aka.ms/activegeoreplication

https://docs.microsoft.com/en-us/azure/azure-sql/database/service-tier-hyperscale

by Contributed | Apr 27, 2021 | Technology


Hello and welcome back to another blog post about new improvements with Microsoft Exact Data Match (EDM). I am going to first cover improvements launching today and upcoming, then I will circle back on some previously released improvements.

First up and launching today is the ability to test EDM-based SITs just like you can currently do with all other SITs (All clouds)! Being able to do a quick test to ensure your EDM SITs are set up correctly and that your data was correctly imported can help you get going rapidly. There are a lot of moving parts in EDM: schema, data uploads, SITs / rule package, and then policy setup. Trying to troubleshoot a SIT and a DLP policy relying on an EDM SIT at the same time is difficult. This will enable you to confirm EDM is working as expected before moving to use it in your DLP or auto labeling rules, and help you keep any required troubleshooting focused by excluding what you know is working correctly.

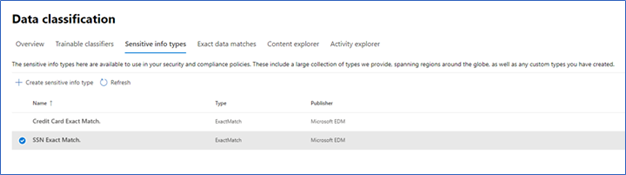

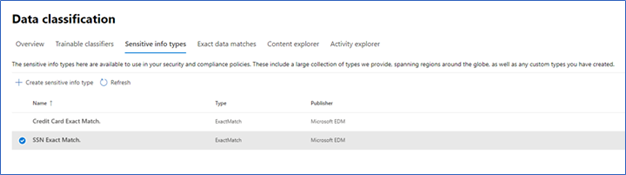

Figure 1. Choose EDM SIT


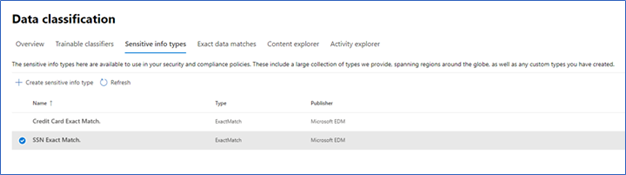

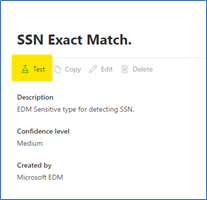

Figure 2. Select Test


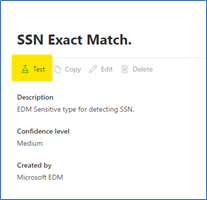

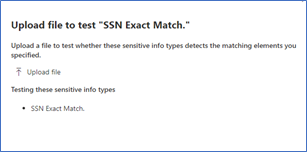

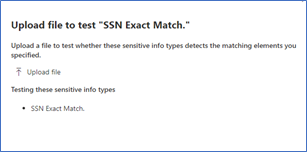

Figure 3. Upload file containing test data


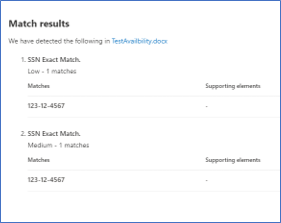

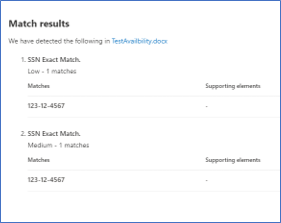

Figure 4. Review test results


The ability to apply a sensitivity label to content automatically using EDM Sensitive Information Types (SITs) will be coming soon (initially Commercial Cloud only)! This will allow compliance admins to scan the company's SharePoint Online and OneDrive for Business repositories and apply sensitivity labels, with or without encryption, to some of the most important and highly sensitive data they hold.

Automatic labeling using regular Sensitive Information Types has been available for some time, but bulk labeling with this type of content detection can lead to some false positives. False positives may not be a big issue when they occur in front of a user who can notice and fix an incorrect labeling action, but they are considerably more problematic when labeling is done in bulk over a large number of documents without interactive human supervision. This is where EDM shines: its ability to detect matches to specific, actual sensitive data with minimal or no false positives is a great match for this scenario. This is important for our Regulated Industry customers, like my Health and Life Sciences (HLS) customers. Electronic Medical Records (EMR) contain extremely sensitive information about every single patient a medical facility, company or doctor has had contact with. Strict regulations and certification standards, such as HIPAA and HITRUST, require close control of Protected Health Information (PHI), and being able to easily identify and label data at rest will help everyone!

Another new feature that is in Public Preview right now is the use of Customer Key for Microsoft 365 at the tenant level to protect additional elements in your tenant, including your EDM sensitive information tables. This is a broad preview and includes many more data points than just EDM, but the fact that protection of EDM data is included in this preview shows it is now a first-class citizen in the Microsoft Compliance world.

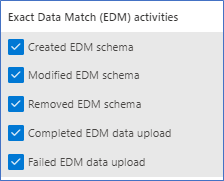

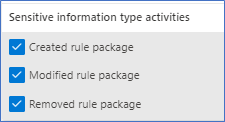

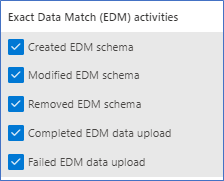

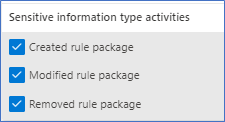

The next two items are covered together: Improved Auditability and Upload Notifications are GA (All clouds). This gives compliance admins the ability to audit and be alerted when these EDM-related activities happen:

Figure 5. EDM Audit Activities

Along with the Sensitive Information Type activities:

Figure 6. SIT Audit Activities

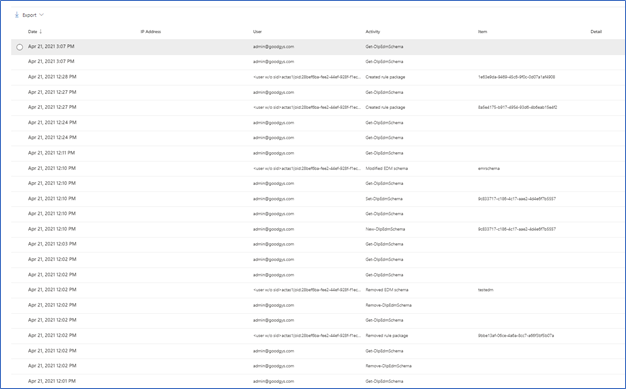

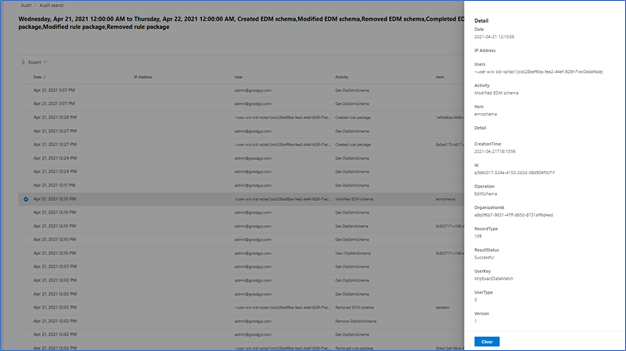

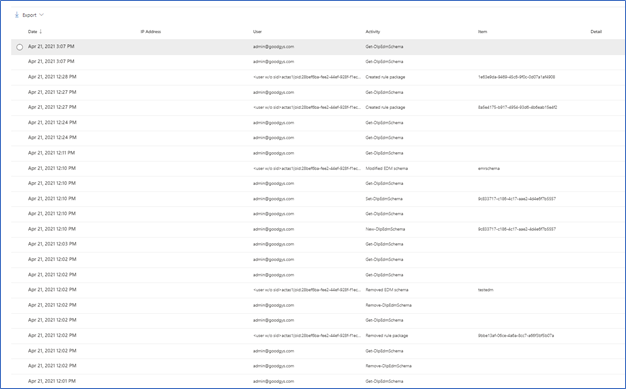

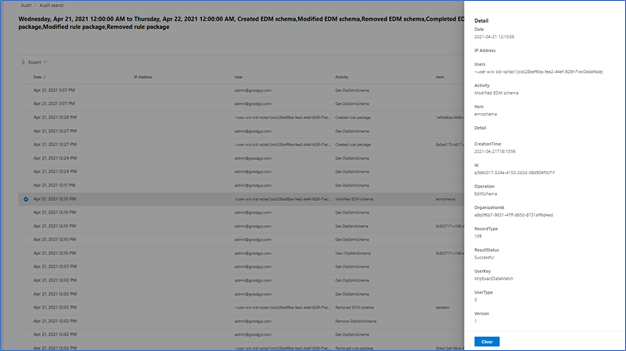

To check out the new auditing features, I decided to do some cleanup of an EDM datastore I set up for fun and created a new EDM datastore and SITs. Now let’s go check out what this looks like in the Audit logs.

Figure 7. Audit Items

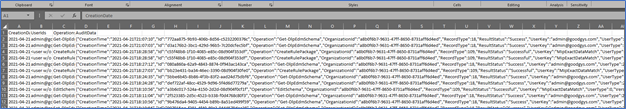

As you can see above, starting from the bottom up are the actions I took yesterday related to SITs. Now let’s take a closer look at some of these. One way to take a closer look is to download the results. In Figure 5 you can see the Export item at the top left.

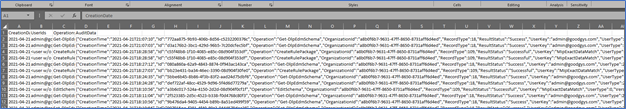

Figure 8. Sample export of audit items

You can also select one of the alerts to look at it in the interface.

Figure 9. Sample details of Audit Item

Audit data should appear in the log within 30 minutes to 2 hours. This data is also available through the Office 365 Management Activity API (see the Office 365 Management Activity API reference on Microsoft Docs).
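
To make the timing and the API mention above concrete, here is a minimal sketch that works out when an audit record can be expected and builds a content-listing URL for the Office 365 Management Activity API. Several things here are assumptions rather than statements from this post: that EDM events are delivered under the `Audit.General` content type, that a subscription for that content type has already been started, and that the tenant ID shown is a placeholder. The helper names are hypothetical, and authentication is deliberately left out.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import urlencode

API_ROOT = "https://manage.office.com/api/v1.0"


def availability_window(action_time):
    """Return (earliest, latest) times an audit record should appear,
    using the 30-minute to 2-hour range quoted in the text."""
    return (action_time + timedelta(minutes=30),
            action_time + timedelta(hours=2))


def content_listing_url(tenant_id, start, end, content_type="Audit.General"):
    """Build the URL that lists available audit content for a time range.

    The content type is an assumption; check which feed carries EDM events.
    """
    fmt = "%Y-%m-%dT%H:%M:%S"
    query = urlencode({
        "contentType": content_type,
        "startTime": start.strftime(fmt),
        "endTime": end.strftime(fmt),
    })
    return f"{API_ROOT}/{tenant_id}/activity/feed/subscriptions/content?{query}"


if __name__ == "__main__":
    action = datetime(2021, 4, 27, 12, 0, tzinfo=timezone.utc)
    earliest, latest = availability_window(action)
    # "example-tenant-id" is a placeholder, not a real tenant.
    print(content_listing_url("example-tenant-id", action, latest))
```

Querying from the time of the action up to the end of the two-hour window is one simple way to avoid concluding too early that an event was not logged.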

I think this covers it for today. If you would like to learn more about EDM you can check out my previous blogs, Implementing Microsoft Exact Data Match (EDM) Part 1 – Microsoft Tech Community and Enhancements to Microsoft Exact Data Match – Microsoft Tech Community.
