by Contributed | Apr 26, 2021 | Technology

This article is contributed. See the original author and article here.

Transfer your group chat emails to Teams with Power Automate

I recently covered this idea on my blog, but it is a Cloud Flow I use often, so I thought I would share it with you all. My organisation is well into its Teams adoption journey, and whilst a large proportion of users use Teams as their first port of call for a group chat, it still often surprises me when a conversation is struck up via Outlook email and we very quickly get lost between Reply All and Reply to sender. Could Power Automate help you move that conversation, group or one-to-one, to Teams with a mouse click before it gets out of control?

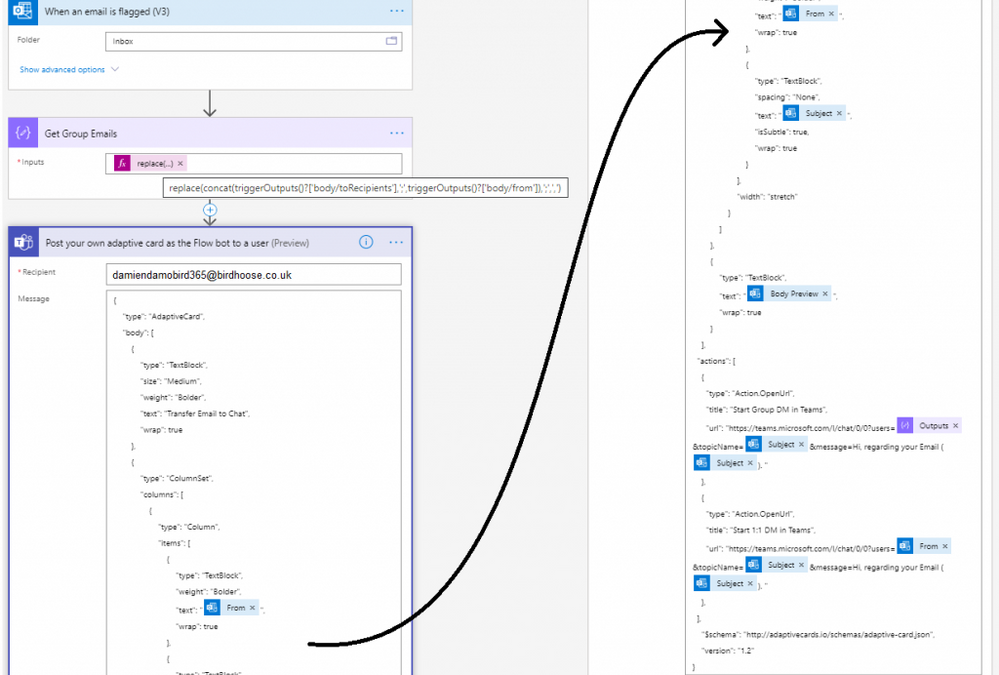

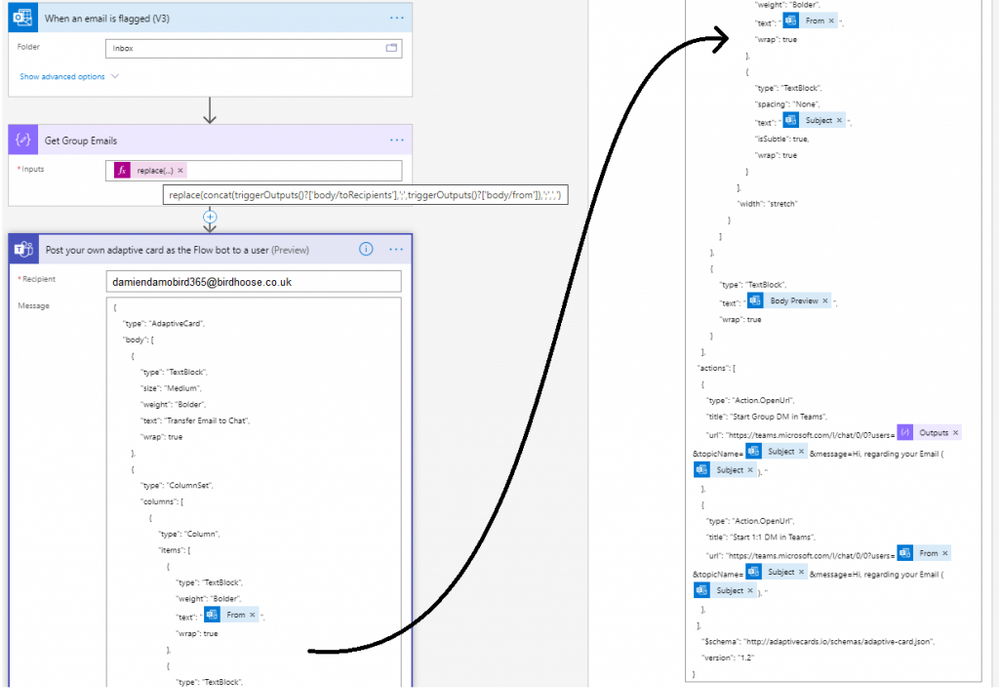

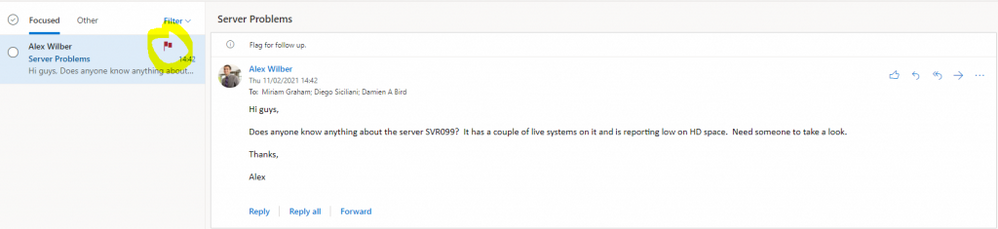

The simplest way for me to transfer the conversation from Outlook and trigger a flow was to use the “When an email is flagged” trigger. That way, I can simply flag an email in Outlook and take the conversation onto Teams.

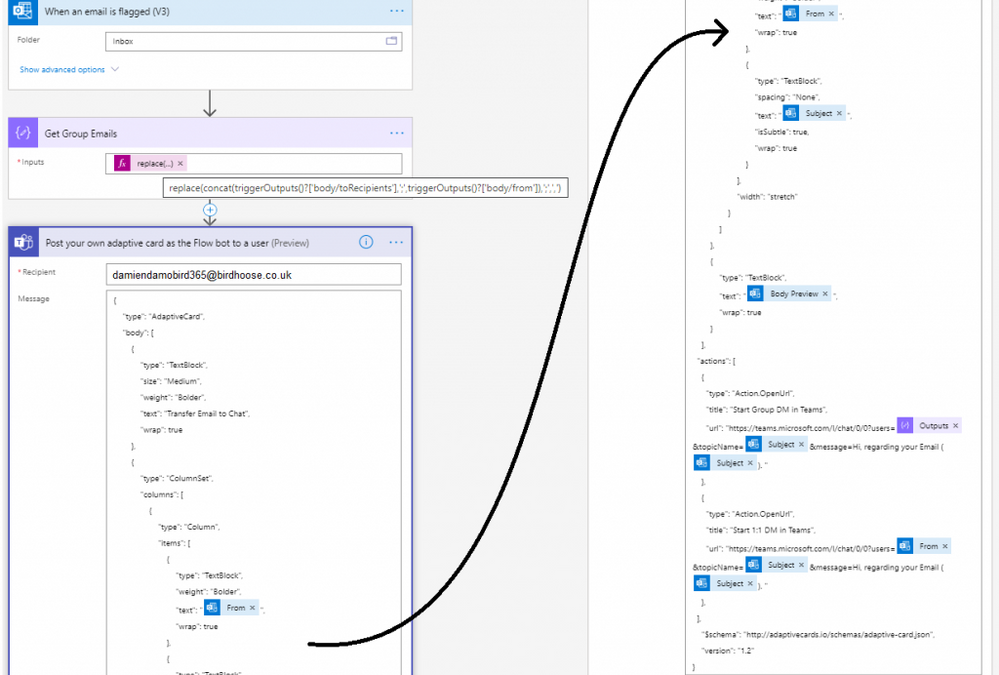

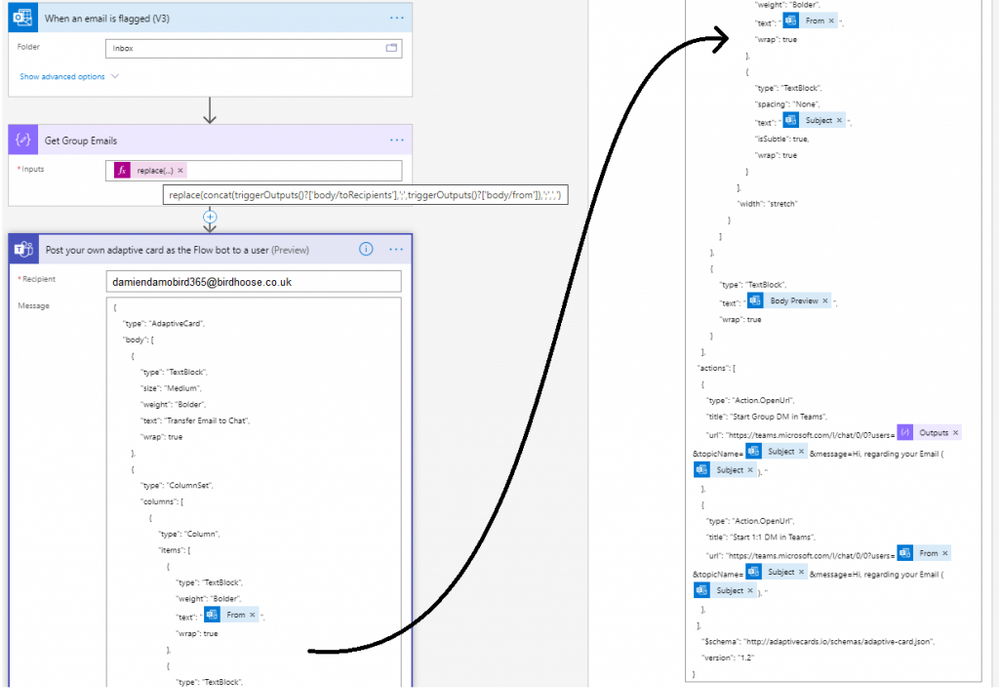

Using the dynamic content provided by the email trigger, I use a Compose action to combine the To and From fields (by default) in order to get all mail recipients (you may want to include the CC'd recipients too) and replace each semicolon with a comma, which is the format needed by the final and only other step.

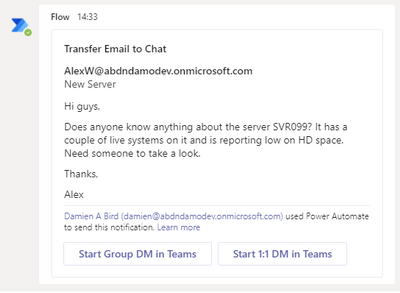

Using the “Post your own adaptive card as the Flow bot to a user” action, I send myself an adaptive card notification in Teams that summarises the email I am transferring (sender, subject and body preview) and, in my case, offers the option to start a one-to-one chat with the sender or a group chat if the email has more than one recipient. This is done using the Teams deep link feature.

I have supplied a copy/paste option at the end of my post that will allow you to replicate this in your own Power Automate / Teams environment in a matter of seconds.

**NOTE:** make sure you update the To field in the “Post your own adaptive card” action, as it's currently set to youremail[at]yourdomain[dot]com.

So, what does the Cloud Flow look like?

The cloud flow only has 2 actions

The flow consists of a Compose action that structures the list of email addresses from the email trigger; as mentioned above, I don't include the CC'd addresses. You therefore have a couple of options for this Compose action: do you want to include CC'd recipients in the group chat deep link or not? The sample expression below includes ccRecipients, but feel free to adjust it as necessary.

```
replace(tolower(concat(triggerOutputs()?['body/toRecipients'], ';', triggerOutputs()?['body/from'], ';', triggerOutputs()?['body/ccRecipients'])), ';', ',')
```
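To see what that expression produces, here is a minimal Python sketch that mirrors it step by step. It is an illustration only, not part of the flow, and it assumes the trigger's To, From and CC values arrive as semicolon-separated address strings; the function names and contoso.com addresses are hypothetical. Note how an empty CC field leaves a trailing comma; the optional `tidy_users` helper (also not in the original flow) drops blank and duplicate entries.

```python
def group_chat_users(to_recipients: str, sender: str, cc_recipients: str = "") -> str:
    """Mirror of replace(tolower(concat(to, ';', from, ';', cc)), ';', ',')."""
    # concat(...) with ';' separators, then tolower(...)
    combined = ";".join([to_recipients, sender, cc_recipients]).lower()
    # replace(..., ';', ',') gives the comma-separated list a deep link expects
    return combined.replace(";", ",")


def tidy_users(users: str) -> str:
    """Optional clean-up: drop empty entries and duplicates, keeping the original order."""
    seen = []
    for address in users.split(","):
        address = address.strip()
        if address and address not in seen:
            seen.append(address)
    return ",".join(seen)


if __name__ == "__main__":
    raw = group_chat_users("Me@contoso.com;Bea@contoso.com", "Alex@contoso.com")
    print(raw)              # me@contoso.com,bea@contoso.com,alex@contoso.com,
    print(tidy_users(raw))  # me@contoso.com,bea@contoso.com,alex@contoso.com
```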

The second and final step is an adaptive card built with the Adaptive Cards Designer. There is a steep learning curve, but the Designer site gives you plenty of sample cards to experiment with. This is where you include the deep links which, when clicked, launch a new Teams conversation with these users.
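A Teams chat deep link follows the pattern `https://teams.microsoft.com/l/chat/0/0?users=...&topicName=...&message=...`, which is what the card's two Action.OpenUrl buttons use. The Python sketch below rebuilds those URLs outside Power Automate to show how the pieces fit together; the function name is hypothetical. One deliberate difference, which is a suggestion rather than part of the original flow: the topic and message are URL-encoded, so a subject containing `&` or `#` cannot cut the link short. Inside the flow, wrapping the subject in the `encodeUriComponent()` expression function should have a similar effect.

```python
from urllib.parse import quote

# Base address of a Teams chat deep link, as used in the card's OpenUrl actions
TEAMS_CHAT_BASE = "https://teams.microsoft.com/l/chat/0/0"


def chat_deep_link(users: str, subject: str) -> str:
    """Build a chat deep link with a topic and a pre-filled (draft) opening message.

    users: comma-separated email addresses; a single address gives a 1:1 chat.
    """
    message = f"Hi, regarding your Email ({subject}). "
    return (
        f"{TEAMS_CHAT_BASE}?users={quote(users, safe='@,')}"  # keep '@' and ',' readable
        f"&topicName={quote(subject)}"
        f"&message={quote(message)}"
    )


if __name__ == "__main__":
    print(chat_deep_link("alex@contoso.com,bea@contoso.com", "Q3 plan & budget"))
```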

The User Experience

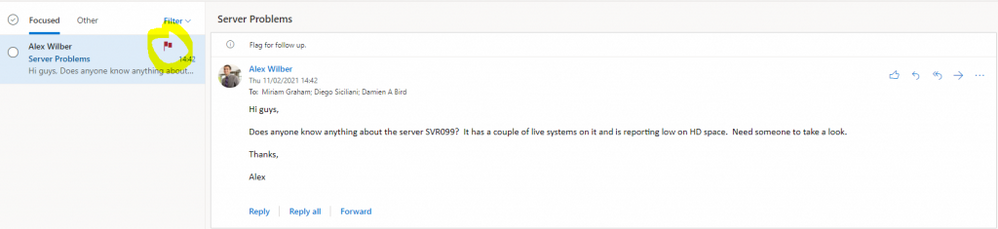

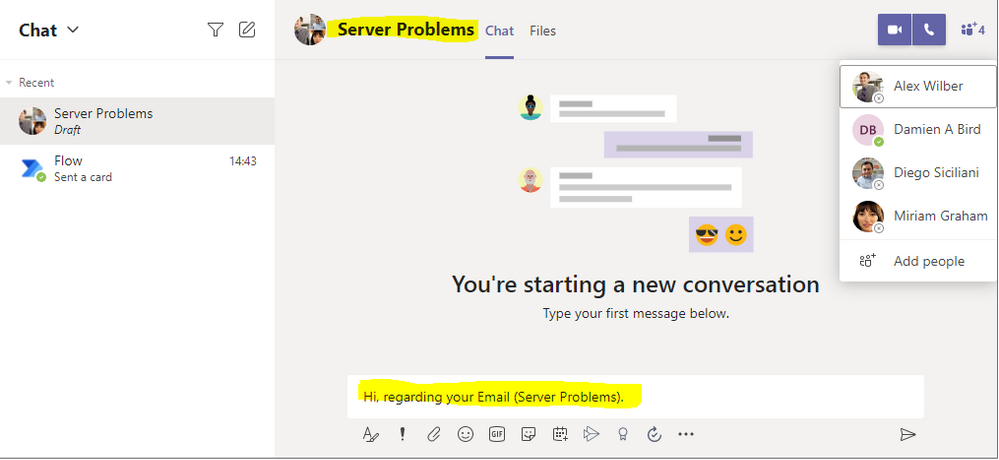

My colleague Alex sends me an email with a list of colleagues in the To field. Before the conversation gets out of control, I simply flag the email to move it to a Teams group chat.

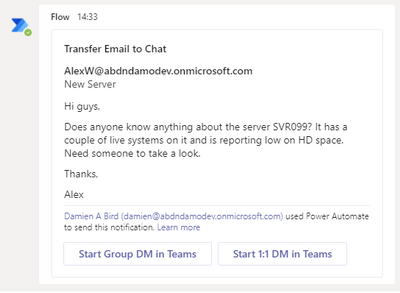

Within a matter of seconds (basically as fast as your flow is triggered), a Flow bot message arrives in my Teams app with the subject and a summary of the email body, including links to both a one-to-one chat and a group chat.

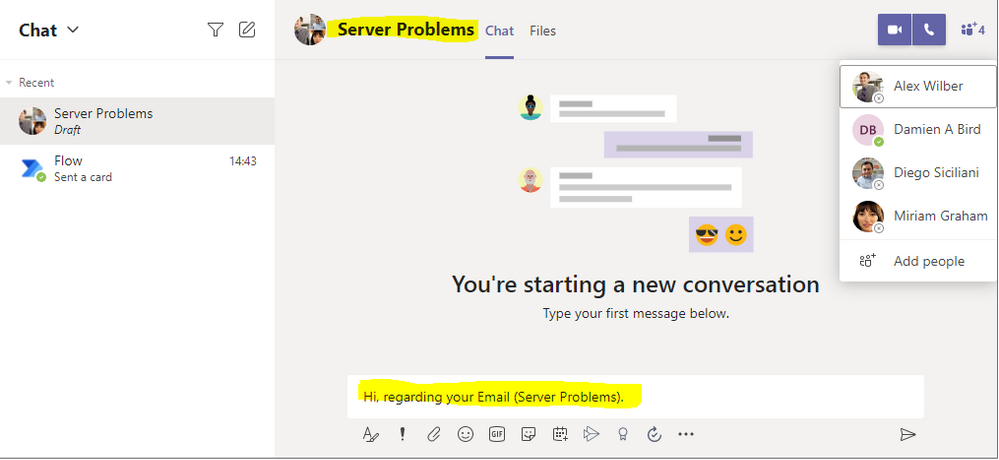

Clicking the “Start Group DM in Teams” button opens a new group chat with the conversation subject and an opening line already drafted, all courtesy of the deep link. Now it's time to get the conversation going.

Want to try the solution quickly?

Did you know that you can copy and paste Cloud Flow actions between environments really easily? Create a new Cloud Flow with the “When an email is flagged” trigger, copy the code below to your clipboard, then click New step, select My clipboard and paste with Ctrl + V. The only field you need to change is the adaptive card recipient, which by default is set to youremail[at]yourdomain[dot]com; set this to your own email address to receive the adaptive card.

```json
{"id":"f616b2c7-1645-4360-aff5-1710-a2bfb6a1","brandColor":"#8C3900","connectionReferences":{"shared_office365":{"connection":{"id":"/providers/Microsoft.PowerApps/apis/shared_office365/connections/shared-office365-2c7a215d-616e-4cc2-9dab-9d05f14c21a5"}},"shared_teams_1":{"connection":{"id":"/providers/Microsoft.PowerApps/apis/shared_teams/connections/shared-teams-c32e6b36-e3dd-4ca6-806d-5969ba7e6dee"}}},"connectorDisplayName":"Control","icon":"data:image/svg+xml;base64,PHN2ZyB3aWR0aD0iMzIiIGhlaWdodD0iMzIiIHZlcnNpb249IjEuMSIgdmlld0JveD0iMCAwIDMyIDMyIiB4bWxucz0iaHR0cDovL3d3dy53My5vcmcvMjAwMC9zdmciPg0KIDxwYXRoIGQ9Im0wIDBoMzJ2MzJoLTMyeiIgZmlsbD0iIzhDMzkwMCIvPg0KIDxwYXRoIGQ9Im04IDEwaDE2djEyaC0xNnptMTUgMTF2LTEwaC0xNHYxMHptLTItOHY2aC0xMHYtNnptLTEgNXYtNGgtOHY0eiIgZmlsbD0iI2ZmZiIvPg0KPC9zdmc+DQo=","isTrigger":false,"operationName":"DamoBird365_Transfer_Email_To_Teams","operationDefinition":{"type":"Scope","actions":{"Get_All_To_and_From_Emails":{"type":"Compose","inputs":"@replace(tolower(concat(triggerOutputs()?['body/toRecipients'], ';', triggerOutputs()?['body/from'])), ';', ',')","runAfter":{}},"Post_your_own_adaptive_card_as_the_Flow_bot_to_a_user":{"type":"OpenApiConnection","inputs":{"host":{"connectionName":"shared_teams_1","operationId":"PostUserAdaptiveCard","apiId":"/providers/Microsoft.PowerApps/apis/shared_teams"},"parameters":{"PostAdaptiveCardRequest/recipient/to":"youremail[at]yourdomain[dot]com;","PostAdaptiveCardRequest/messageBody":"{\n \"type\": \"AdaptiveCard\",\n \"body\": [\n {\n \"type\": \"TextBlock\",\n \"size\": \"Medium\",\n \"weight\": \"Bolder\",\n \"text\": \"Transfer Email to Chat\",\n \"wrap\": true\n },\n {\n \"type\": \"ColumnSet\",\n \"columns\": [\n {\n \"type\": \"Column\",\n \"items\": [\n {\n \"type\": \"TextBlock\",\n \"weight\": \"Bolder\",\n \"text\": \"@{triggerOutputs()?['body/from']}\",\n \"wrap\": true\n },\n {\n \"type\": \"TextBlock\",\n \"spacing\": \"None\",\n \"text\": \"@{triggerOutputs()?['body/subject']}\",\n \"isSubtle\": true,\n \"wrap\": true\n }\n ],\n \"width\": \"stretch\"\n }\n ]\n },\n {\n \"type\": \"TextBlock\",\n \"text\": \"@{triggerOutputs()?['body/bodyPreview']}\",\n \"wrap\": true\n }\n ],\n \"actions\": [\n {\n \"type\": \"Action.OpenUrl\",\n \"title\": \"Start Group DM in Teams\",\n \"url\": \"https://teams.microsoft.com/l/chat/0/0?users=@{outputs('Get_All_To_and_From_Emails')}&topicName=@{triggerOutputs()?['body/subject']}&message=Hi, regarding your Email (@{triggerOutputs()?['body/subject']}). \"\n },\n {\n \"type\": \"Action.OpenUrl\",\n \"title\": \"Start 1:1 DM in Teams\",\n \"url\": \"https://teams.microsoft.com/l/chat/0/0?users=@{triggerOutputs()?['body/from']}&topicName=@{triggerOutputs()?['body/subject']}&message=Hi, regarding your Email (@{triggerOutputs()?['body/subject']}). \"\n }\n ],\n \"$schema\": \"http://adaptivecards.io/schemas/adaptive-card.json\",\n \"version\": \"1.2\"\n}","PostAdaptiveCardRequest/messageTitle":"Transfer Email To Teams"},"authentication":"@parameters('$authentication')"},"runAfter":{"Get_All_To_and_From_Emails":["Succeeded"]}}},"runAfter":{},"description":"***Please make sure you update the TO: in the Adaptive Card***"}}
```

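If you prefer to set the recipient before pasting rather than editing the action afterwards, here is a small hypothetical Python helper that rewrites the `PostAdaptiveCardRequest/recipient/to` parameter in the flow JSON. It assumes you have the JSON as a string (for example, read from a file you saved it to); the helper and constant names are illustrative, while the action key and parameter name are copied from the JSON itself.

```python
import json

# Action key and parameter name as they appear in the flow JSON
CARD_ACTION = "Post_your_own_adaptive_card_as_the_Flow_bot_to_a_user"
RECIPIENT_PARAM = "PostAdaptiveCardRequest/recipient/to"


def set_card_recipient(clipboard_json: str, email: str) -> str:
    """Return the clipboard JSON with the adaptive card recipient set to `email`."""
    scope = json.loads(clipboard_json)
    actions = scope["operationDefinition"]["actions"]
    params = actions[CARD_ACTION]["inputs"]["parameters"]
    # Keep the trailing ';' used by the original placeholder value
    params[RECIPIENT_PARAM] = f"{email};"
    return json.dumps(scope)
```

You would then copy the returned string to your clipboard and paste it via My clipboard as described above.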
Summary

Get that internal conversation moved from traditional email into modern Teams. I accept that not every conversation is better suited to Teams, but it's often far more productive to have the order of conversation in front of you, especially if you have had a couple of days off. If a colleague takes the initiative to shift a conversation into Teams, it saves the group a lot of effort sifting through that email thread and potentially allows you to work in real time, seeing whether someone is available or online, rather than waiting for the next email to come in, or for a couple of people to reply to that email at the same time with different opinions.

I've enjoyed working with you, email, but Teams messaging is my preferred internal conversation route these days, if I can't start a call, of course.

What trigger would suit you best?

Would you include only To/From, or CC'd members too, in your group chat?

Have you used Teams Deep Links before?

Check out my blog for more ideas or if you have an idea of your own but don’t know where to start, give me a shout via the various platforms – DamoBird365

by Contributed | Apr 26, 2021 | Technology

This article is contributed. See the original author and article here.

Hello, dear readers! My name is Hélder Pinto and I am sharing here some tips about how to leverage NSG Flow Logs and Traffic Analytics to improve your Azure network security hygiene and, at the end, simplify your NSG rules and, more importantly, uncover security vulnerabilities.

Introduction

Traffic Analytics is an Azure-native service that gives you insights into the Azure Virtual Network flows originating from or destined to your applications. For example, identifying network activity hot spots, security threats or network usage patterns is made very easy by the several ready-made Traffic Analytics dashboards. This service depends on the Flow Logs generated by the network activity evaluated by Network Security Group (NSG) rules: whenever a network flow tries to go from A to B in your network, it generates a log entry for the NSG rule that allows or denies the flow. Traffic Analytics is not enabled by default and you must turn it on for each NSG. You can read more details about Traffic Analytics here.

Traffic Analytics dashboards are built on top of the logs that are collected at least every hour into a Log Analytics workspace. If you drill down into some of its charts, you'll notice that everything depends on the AzureNetworkAnalytics_CL custom log table. Of course, these flow logs can be used for other purposes besides feeding Traffic Analytics dashboards. In this article, I'll demonstrate how to leverage Traffic Analytics logs to improve your Azure network security hygiene and, ultimately, simplify your NSG rules and, more importantly, uncover security vulnerabilities.

The problem

When managing NSGs, inbound/outbound rules are added or updated as new services are onboarded into the Virtual Network. Sometimes, rules are changed temporarily to debug a failing flow. Meanwhile, services and network interfaces are decommissioned and temporary rules are no longer needed, but too often NSG rules are not updated after those changes. As time goes by, it is not uncommon to find NSG rules whose justification we don't know at all or, in many cases, that contain IP address-based sources/destinations that are not easy to identify. As a result, fearing that we might break something, we leave those rules hanging around.

This lack of network security hygiene sometimes creates vulnerabilities that can have a dire impact on your Azure assets. Some examples:

- An outbound allow rule for Azure SQL that is not needed anymore and that can be exploited for data exfiltration.

- A deny rule that is never hit because someone inserted a permissive allow rule with higher priority.

- An RDP allow rule for a specific source/destination that is never hit because, again, someone added a higher priority rule that suppresses its effect.

Even if an unneeded NSG rule is innocuous, it is a general best practice to keep your Azure resources as optimized and clean as possible: the cleaner your environment, the more manageable it is.
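The second and third examples above come down to rule ordering: NSG rules are processed in priority order (lower number first), and processing stops at the first rule that matches. As a rough static complement to the flow-log approach used by the workbook, here is a hypothetical Python sketch that flags a rule as shadowed when an earlier rule in the same direction matches everything it matches. It is not how the workbook works, and it simplifies heavily: each match field is either an exact value or `*`, whereas real rules use CIDR ranges, port ranges and service tags.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Rule:
    """Hypothetical, simplified NSG rule: each match field is an exact value or '*'."""
    name: str
    priority: int     # lower number = evaluated first
    direction: str    # "Inbound" or "Outbound"
    protocol: str
    source: str
    destination: str
    port: str


MATCH_FIELDS = ("protocol", "source", "destination", "port")


def covers(broad: str, narrow: str) -> bool:
    """True if the field value `broad` matches everything that `narrow` matches."""
    return broad == "*" or broad == narrow


def shadowed_rules(rules: List[Rule]) -> List[Tuple[str, str]]:
    """Return (shadowed rule, earlier rule that shadows it) pairs."""
    ordered = sorted(rules, key=lambda r: r.priority)
    pairs = []
    for i, rule in enumerate(ordered):
        for earlier in ordered[:i]:
            same_direction = earlier.direction == rule.direction
            if same_direction and all(
                covers(getattr(earlier, f), getattr(rule, f)) for f in MATCH_FIELDS
            ):
                pairs.append((rule.name, earlier.name))
                break  # one shadowing rule is enough to make this rule unreachable
    return pairs
```

For instance, an inbound allow-all rule at priority 100 shadows an inbound RDP deny rule at priority 300, which is exactly the permissive-rule scenario described above.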

NSG Optimization Workbook

Based on Azure Resource Graph and on the AzureNetworkAnalytics_CL Log Analytics table generated by Traffic Analytics, I built an Azure Monitor workbook that helps you spot NSG rules that haven’t been used for a given period, i.e., good candidates for cleanup or maybe a symptom that something is wrong with your NSG setup.
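The core idea can be sketched in a few lines: take the rule inventory (which the workbook gets from Azure Resource Graph), take the rules that actually appear in flow records for the chosen period, and report the difference. The Python below is a hypothetical, simplified illustration; the workbook itself does this with KQL queries, and `FlowRecord` is a stand-in, not the real AzureNetworkAnalytics_CL schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, Iterable, List, Set, Tuple


@dataclass(frozen=True)
class FlowRecord:
    """Hypothetical flow-log entry: which NSG rule a flow hit, and when."""
    nsg: str
    rule: str
    time: datetime


def _hits(flows: Iterable[FlowRecord], cutoff: datetime) -> Set[Tuple[str, str]]:
    # (nsg, rule) pairs seen since the cutoff, compared case-insensitively
    return {(f.nsg.lower(), f.rule.lower()) for f in flows if f.time >= cutoff}


def inactive_rules(rules_by_nsg: Dict[str, List[str]], flows: Iterable[FlowRecord],
                   now: datetime, lookback: timedelta) -> List[Tuple[str, str]]:
    """Rules defined on an NSG that produced no flow records in the lookback window."""
    hits = _hits(flows, now - lookback)
    return sorted(
        (nsg, rule)
        for nsg, rules in rules_by_nsg.items()
        for rule in rules
        if (nsg.lower(), rule.lower()) not in hits
    )


def inactive_nsgs(rules_by_nsg: Dict[str, List[str]], flows: Iterable[FlowRecord],
                  now: datetime, lookback: timedelta) -> List[str]:
    """NSGs that produced no flow records at all in the lookback window."""
    active = {nsg for nsg, _ in _hits(flows, now - lookback)}
    return sorted(nsg for nsg in rules_by_nsg if nsg.lower() not in active)
```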

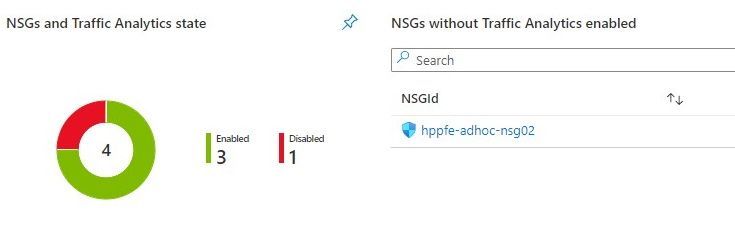

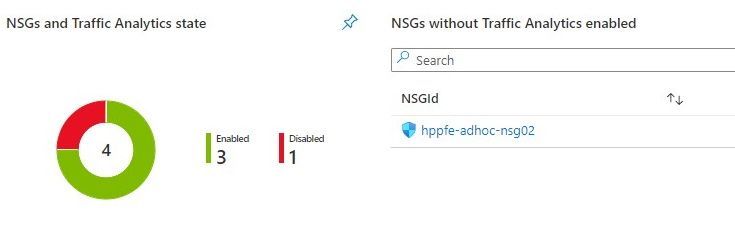

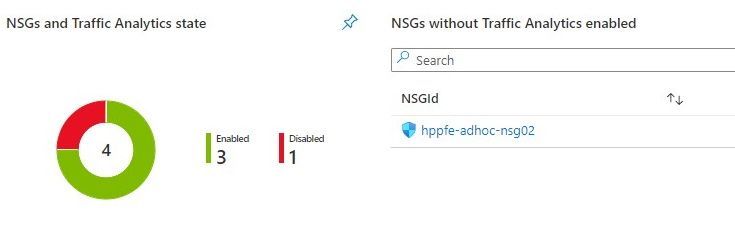

The workbook starts by showing you:

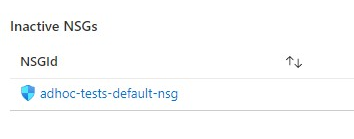

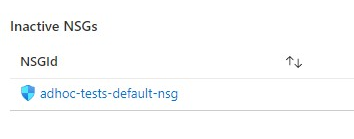

- which NSGs do not have Traffic Analytics enabled – this means no visibility over their flows.

- which NSGs have been inactive, i.e., not generating any flow logs; maybe these NSGs were associated with subnets or network interfaces that were decommissioned.

Workbook tiles with a summary of Traffic Analytics state in all NSGs


Workbook tile listing NSGs that have not been generating flow logs (inactive)


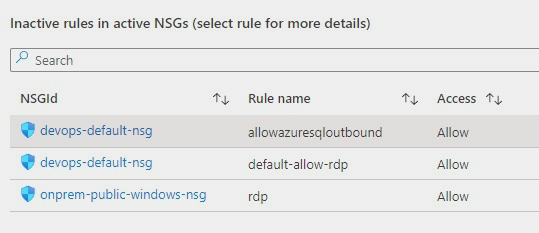

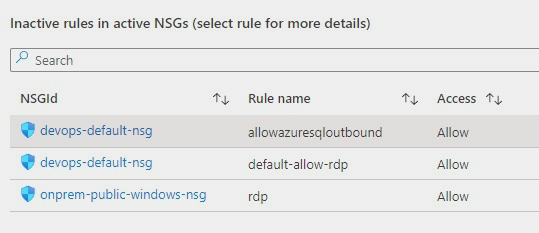

If you scroll down a bit more, you'll also get details about NSG rules that have been inactive for the period specified as a parameter. These rules are not generating NSG Flow Logs, and this may be a symptom of some misconfiguration or, at least, of some lack of security hygiene.

Workbook tile with a list of NSG rules that have not been used by network traffic


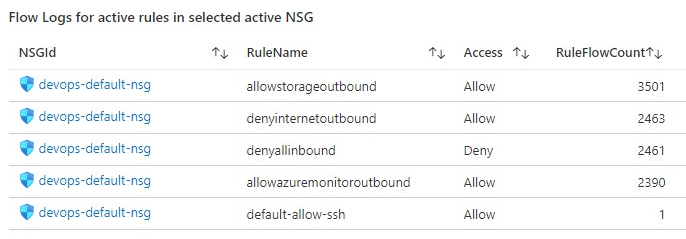

If you select a rule from the list, you get statistics about other rules in the same NSG that are generating flows, which may give you a hint for the reason why that rule has not generated any log lately.

Workbook tile with the statistics for all the NSG rules that have been generating traffic in the NSG where the inactive rule sits


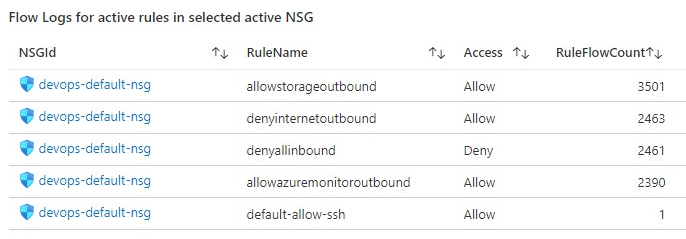

Deploying the Workbook

The NSG Optimization workbook can be found at the address below:

https://github.com/helderpinto/azure-wellarchitected-toolkit/blob/main/security/workbooks/nsg-optimization.json

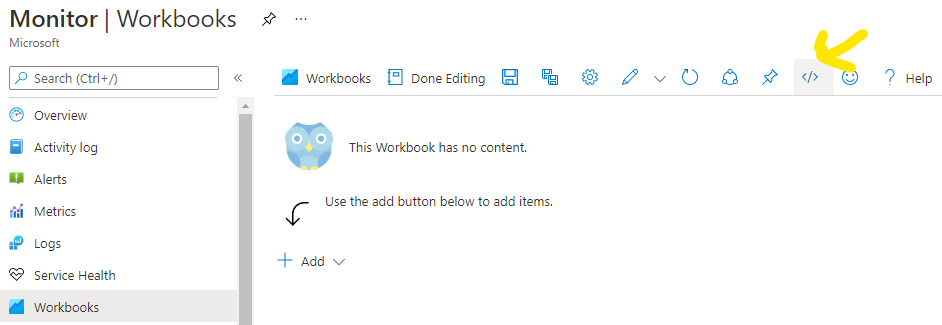

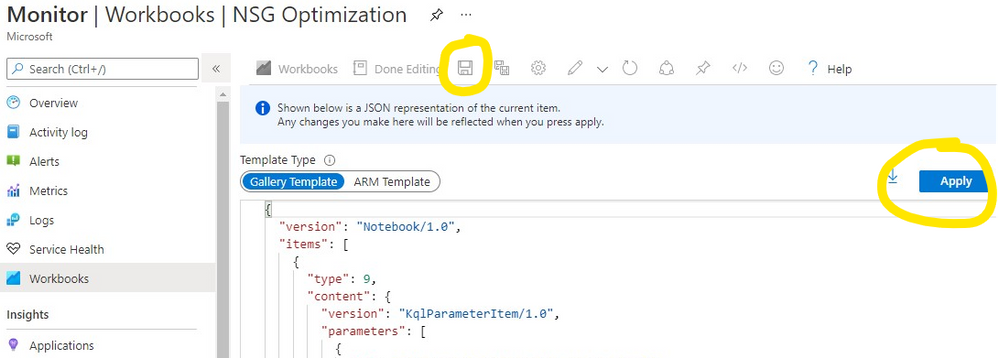

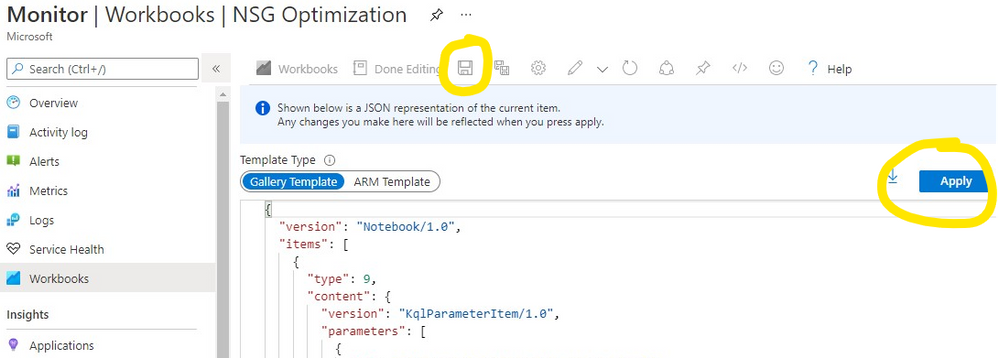

For instructions on how to set up a workbook, check the Azure Monitor documentation. Once in Azure Monitor Workbooks, create a new workbook and, in edit mode, click the Advanced Editor button (see picture below) and replace the gallery template code with the code you find at the GitHub URL above. After applying the workbook code, you should see the dashboard described in this article. The workbook looks quite simple, but the logic behind it is somewhat complex and, depending on the volume of your NSG Flow Logs and on the NSG activity time range you choose, it may take a while to fully load each table. Don't forget to save your workbook!

Screenshot of the Workbooks user interface for creating a new workbook from existing code


Screenshot of the Workbooks user interface for applying and saving a new workbook from existing code


Conclusion

As we saw in this article, good network security hygiene can save you from trouble. Some of these potential issues come from unneeded or obsolete network security rules defined in your NSGs. The NSG Optimization Workbook described here will help you uncover NSGs or NSG rules that have not been used over a specific time range, based on Traffic Analytics logs. An NSG rule that has not generated flow logs is probably a symptom of some misconfiguration. So, go turn on Traffic Analytics, deploy the NSG Optimization Workbook and kick off your NSG spring cleanup!

by Contributed | Apr 26, 2021 | Technology

This article is contributed. See the original author and article here.

Which community project do you maintain?

yo teams

How does it help people?

yo teams helps developers kickstart their Teams extensibility projects by providing an easy way to create the first Teams App all the way to scaffolding a solution that can be deployed, scaled and maintained.

What have you been working on lately?

yo teams is continuously evolving based on real-world feedback and experience from the community. Lately, we've been focused on making it easier and faster to create a project while keeping a high level of flexibility for developers who want to control and manage everything themselves.

What do you do at work?

I’m the global innovation lead for Modern Workplace at Avanade

Why are you a part of the M365 community?

Because I learn new stuff all the time: the benefit of a community is the diversity of voices and opinions. This eventually makes me better at what I do, and I hope that others learn from what I do.

What was your first community contribution?

My first true open source community contribution was in the form of a web part for SharePoint 2007/WSS 3.0, called the ChartPart

One tip for someone who’d like to start contributing

Just do it. You never know what you might learn and who you might get to know.

by Contributed | Apr 26, 2021 | Technology

This article is contributed. See the original author and article here.

Pssst! You may notice the Round Up looks different – we’re rolling out a new, concise way to show you what’s been going on in the Tech Community week by week.

Instead of scrolling through every blog post in the Round Up, you can browse them all on our blog page here.

Top news this week:

- iPad support now available in Microsoft Lists for iOS

- #VisualGreenTech Challenge – EarthDay 2021

- Learn to debug your Power Apps

- Dashboard in a Day with Power BI

- Realtime analytics from SQL Server to Power BI with Debezium

- Azure Percept enables simple AI and computing on the edge

- Export Power Apps and Power Automate user licenses

- Getting started and Learn PowerShell on Microsoft Learn!

- Localize your website with Microsoft Translator

Important Events:

by Contributed | Apr 25, 2021 | Technology

This article is contributed. See the original author and article here.

In the previous post, I introduced Project Bicep at a very early stage. At that time, it was at version 0.1.x, but it has now been updated to 0.3.x. You can use it for production, and new features keep being introduced. Throughout this post, I'm going to discuss the features added since the last post.

Azure CLI Integration

While Bicep CLI works as a stand-alone tool, it has been integrated with Azure CLI since v2.20.0. Therefore, you can run Bicep either way.

```bash
# Bicep CLI
bicep build azuredeploy.bicep

# Azure CLI
az bicep build --file azuredeploy.bicep
```

NOTE: Although Bicep CLI could build multiple files at once up to v0.2.x, from v0.3.x it can only build one file at a time. Therefore, if you want to build multiple files, you need to loop over them. Here's a sample PowerShell script, for example.

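```powershell
# Find every .bicep file below the current folder and build each one individually
Get-ChildItem -Path . -Filter *.bicep -Recurse | ForEach-Object {
    az bicep build --file $_.FullName
}
```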
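If you are not on PowerShell, the same loop can be sketched in Python. This is a hypothetical cross-platform alternative, not part of the Bicep tooling: it finds every `.bicep` file under a folder and runs `az bicep build --file` once per file, since v0.3.x builds one file at a time. It only prints the commands unless `dry_run=False`, and it assumes Azure CLI 2.20.0 or later is on your PATH.

```python
import subprocess
from pathlib import Path
from typing import Iterable, List


def find_bicep_files(root: str = ".") -> List[Path]:
    """All .bicep files under root, recursively (like Get-ChildItem -Filter *.bicep -Recurse)."""
    return sorted(Path(root).rglob("*.bicep"))


def build_commands(files: Iterable) -> List[List[str]]:
    """One `az bicep build --file <path>` command per file."""
    return [["az", "bicep", "build", "--file", str(f)] for f in files]


def build_all(root: str = ".", dry_run: bool = True) -> None:
    """Print each build command and, unless dry_run is set, run it and stop on the first failure."""
    for cmd in build_commands(find_bicep_files(root)):
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

Calling `build_all(".", dry_run=False)` from the repository root would build every file in turn.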

Because of the Azure CLI integration, you can also provision resources through the bicep file like below:

```bash
# ARM template file
az deployment group create \
  --name <DEPLOYMENT_NAME> \
  --resource-group <RESOURCE_GROUP_NAME> \
  --template-file azuredeploy.json \
  --parameters @azuredeploy.parameters.json

# Bicep file
az deployment group create \
  --name <DEPLOYMENT_NAME> \
  --resource-group <RESOURCE_GROUP_NAME> \
  --template-file azuredeploy.bicep \
  --parameters @azuredeploy.parameters.json
```

Bicep Decompiling

From v0.2.59, Bicep CLI can convert ARM templates to Bicep files. This is particularly important because many existing ARM templates are still running and need maintenance. Run the following command to decompile.

```bash
# Bicep CLI
bicep decompile azuredeploy.json

# Azure CLI
az bicep decompile --file azuredeploy.json
```

NOTE: If your ARM template contains a copy attribute, Bicep can't decompile it as of this writing. This should become possible in a later version.

Decorators on Parameters

Writing parameters has become more expressive than in v0.1.x thanks to decorators. For example, only a few SKU values are available for a Storage Account, so using the @allowed decorator like below makes the code more readable.

```bicep
// Without decorators
param storageAccountSku string {
  allowed: [
    'Standard_GRS'
    'Standard_LRS'
  ]
  default: 'Standard_LRS'
}

// With decorators
@allowed([
  'Standard_GRS'
  'Standard_LRS'
])
param storageAccountSku string = 'Standard_LRS'
```

Conditional Resources

You can already use ternary operations for attributes, but what if you could declare a resource itself conditionally? Let's have a look. The following code provisions the Azure App Service resource only if the location is Korea Central.

```bicep
param location string = resourceGroup().location

resource webapp 'Microsoft.Web/sites@2020-12-01' = if (location == 'koreacentral') {
  …
}
```

Loops

While ARM templates use the copy attribute together with the copyIndex() function for iterations, Bicep uses the for…in loop. Have a look at the code below: you can declare Azure App Service instances from an array parameter through the for…in loop.

```bicep
param webapps array = [
  'dev'
  'test'
  'prod'
]

// Use array only
resource webapp 'Microsoft.Web/sites@2020-12-01' = [for name in webapps: {
  name: 'my-webapp-${name}'
  …
}]
```

You can also use both array and index at the same time.

```bicep
// Use both array and index
resource webapp 'Microsoft.Web/sites@2020-12-01' = [for (name, index) in webapps: {
  name: 'my-webapp-${name}-${index + 1}'
  …
}]
```

Instead of the array, you can use the range() function in the loop.

```bicep
// Use range
resource webapp 'Microsoft.Web/sites@2020-12-01' = [for i in range(0, 10): {
  name: 'my-webapp-${i + 1}'
  …
}]
```

Please note that you MUST use the array expression ([…]) outside the for…in loop because it declares the array of the resources. Bicep will do the rest.
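To check which names those three loops produce, here is a small Python model of the expansion. It is only an analogy (Bicep does not run Python), but two details carry over: the loop index is zero-based, hence `index + 1`, and Bicep's `range(startIndex, count)` takes a count of items rather than an end value, unlike Python's built-in `range`. The function names are hypothetical.

```python
from typing import List

webapps = ["dev", "test", "prod"]


def names_from_array(items: List[str]) -> List[str]:
    # [for name in webapps: ...] -> one name per array element
    return [f"my-webapp-{name}" for name in items]


def names_from_array_and_index(items: List[str]) -> List[str]:
    # [for (name, index) in webapps: ...] -> index starts at 0
    return [f"my-webapp-{name}-{index + 1}" for index, name in enumerate(items)]


def names_from_range(start: int, count: int) -> List[str]:
    # [for i in range(start, count): ...] -> Bicep's second argument is a count,
    # so the equivalent Python range stops at start + count
    return [f"my-webapp-{i + 1}" for i in range(start, start + count)]
```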

Modules

Personally, I love this part. While ARM templates use linked templates, Bicep uses the module keyword for modularisation. Here's an example of provisioning an Azure Function app. For this, you need at least a Storage Account, a Consumption Plan and an Azure Functions resource. Each resource can be declared individually as a module, and the orchestration Bicep file calls each module. Each module should, of course, work independently.

Storage Account

```bicep
// storageAccount.bicep
param resourceName string
param location string = resourceGroup().location

resource st 'Microsoft.Storage/storageAccounts@2021-02-01' = {
  name: resourceName
  location: location
  …
}

output id string = st.id
output name string = st.name
```

Consumption Plan

```bicep
// consumptionPlan.bicep
param resourceName string
param location string = resourceGroup().location

resource csplan 'Microsoft.Web/serverfarms@2020-12-01' = {
  name: resourceName
  location: location
  …
}

output id string = csplan.id
output name string = csplan.name
```

Azure Functions

```bicep
// functionApp.bicep
param resourceName string
param location string = resourceGroup().location
param storageAccountId string
param storageAccountName string
param consumptionPlanId string

resource fncapp 'Microsoft.Web/sites@2020-12-01' = {
  name: resourceName
  location: location
  …
  properties: {
    serverFarmId: consumptionPlanId
    …
    siteConfig: {
      appSettings: [
        {
          name: 'AzureWebJobsStorage'
          value: 'DefaultEndpointsProtocol=https;AccountName=${storageAccountName};EndpointSuffix=${environment().suffixes.storage};AccountKey=${listKeys(storageAccountId, '2021-02-01').keys[0].value}'
        }
        …
      ]
    }
  }
}

output id string = fncapp.id
output name string = fncapp.name
```

Modules Orchestration

Here’s the orchestration bicep file to combine modules. All you need to do is to declare a module, refer to the module location and pass parameters. Based on the references between modules, dependencies are automatically calculated.

```bicep
// azuredeploy.bicep
param resourceName string
param location string = resourceGroup().location

module st './storageAccount.bicep' = {
  name: 'StorageAccountProvisioning'
  params: {
    resourceName: resourceName
    location: location
  }
}

module csplan './consumptionPlan.bicep' = {
  name: 'ConsumptionPlanProvisioning'
  params: {
    resourceName: resourceName
    location: location
  }
}

module fncapp './functionApp.bicep' = {
  name: 'FunctionAppProvisioning'
  params: {
    resourceName: resourceName
    location: location
    storageAccountId: st.outputs.id
    storageAccountName: st.outputs.name
    consumptionPlanId: csplan.outputs.id
  }
}
```
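Because `fncapp` reads `st.outputs` and `csplan.outputs`, Bicep adds the corresponding dependencies for you, so there is no `dependsOn` to write by hand. To illustrate the idea (this is not Bicep's actual implementation), here is a Python sketch that infers the dependencies from those output references and groups the modules into deployment "waves"; modules in the same wave do not depend on each other and could be deployed in parallel. The dictionary and function names are hypothetical, and it needs Python 3.9+ for `graphlib`.

```python
import re
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, List, Set

# A subset of the module params in azuredeploy.bicep, written as the expressions appear in the file
MODULE_PARAMS: Dict[str, Dict[str, str]] = {
    "st": {"location": "location"},
    "csplan": {"location": "location"},
    "fncapp": {
        "location": "location",
        "storageAccountId": "st.outputs.id",
        "storageAccountName": "st.outputs.name",
        "consumptionPlanId": "csplan.outputs.id",
    },
}

# Matches a reference such as "st.outputs.id" and captures the module name "st"
OUTPUT_REF = re.compile(r"\b(\w+)\.outputs\.")


def infer_dependencies(params_by_module: Dict[str, Dict[str, str]]) -> Dict[str, Set[str]]:
    """module -> set of modules whose outputs it references"""
    return {
        module: {m.group(1) for value in params.values() for m in OUTPUT_REF.finditer(value)}
        for module, params in params_by_module.items()
    }


def deployment_waves(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group modules into waves; every module's dependencies sit in an earlier wave."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves
```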

NOTE: Unfortunately, as of this writing, referencing a module by an external URL is not supported yet, unlike linked ARM templates.

So far, we’ve briefly looked at the new features of Project Bicep. As Bicep is one of the most rapidly growing toolsets in Azure, keep using it for your resource management.

This article was originally published on Dev Kimchi.
