by Contributed | Apr 23, 2021 | Technology

This article is contributed. See the original author and article here.

The combination of artificial intelligence and computing on the edge enables new high-value digital operations across every industry: retail, manufacturing, healthcare, energy, shipping/logistics, automotive, etc. Azure Percept is a new zero-to-low-code platform that includes sensory hardware accelerators, AI models, and templates to help you build and pilot secured, intelligent AI workloads and solutions for edge IoT devices. This post is a companion to the new Azure Percept technical deep-dive on YouTube and provides more detail on the topics covered in the video.

Hardware Security

The Azure Percept DK hardware is an inexpensive and powerful 15-watt AI device you can easily pilot in many locations. It includes a hardware root of trust to protect AI data and privacy-sensitive sensors like cameras and microphones. This added hardware security is based on a Trusted Platform Module (TPM) version 2.0, an industry-wide ISO standard from the Trusted Computing Group. See the Trusted Computing Group website for the complete TPM 2.0 and ISO/IEC 11889 specifications. The Azure Percept boot ROM ensures the integrity of the firmware between the ROM and the operating system (OS) loader, which in turn ensures the integrity of the other software components, creating a chain of trust.
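The chain-of-trust idea can be made concrete with TPM 2.0-style measurement. Each boot stage is hashed and folded into a Platform Configuration Register (PCR) with the extend operation, new PCR = SHA-256(old PCR || SHA-256(stage)). The Python sketch below illustrates measured boot in general, not the Azure Percept boot ROM's actual verification logic. The stage contents are hypothetical placeholders.

```python
import hashlib
from typing import List


def extend(pcr: bytes, component: bytes) -> bytes:
    """Model a TPM 2.0 PCR extend: new = SHA-256(old || SHA-256(component))."""
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + measurement).digest()


def measure_boot_chain(stages: List[bytes]) -> bytes:
    """Fold each boot stage, in order, into a single PCR value."""
    pcr = bytes(32)  # a SHA-256 PCR starts as 32 zero bytes after reset
    for stage in stages:
        pcr = extend(pcr, stage)
    return pcr


# Hypothetical boot images; a real device measures the actual binaries.
good_chain = [b"firmware-v1", b"os-loader-v1", b"os-kernel-v1"]
expected = measure_boot_chain(good_chain)

tampered_chain = [b"firmware-v1", b"os-loader-EVIL", b"os-kernel-v1"]
print(measure_boot_chain(good_chain) == expected)      # True
print(measure_boot_chain(tampered_chain) == expected)  # False
```

Each value depends on every earlier one. Changing or reordering any stage therefore changes the final PCR, and a verifier that knows the expected value can detect tampering anywhere in the chain.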

The Azure IoT Hub Device Provisioning Service (DPS) uses this chain of trust to authenticate and authorize each Azure Percept device to Azure cloud components. This enables a more secure AI lifecycle for Azure Percept: AI models and business logic containers can be encrypted in the cloud, downloaded, and executed at the edge, with properly signed output sent back to the cloud. This signing attestation makes all AI inferencing results tamper-evident, providing a more trustworthy environment. More information on how the Azure Percept DK is authenticated and authorized via the TPM can be found here: Azure IoT Hub Device Provisioning Service – TPM Attestation.
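A keyed signature over each inference result can illustrate the tamper-evidence idea. This sketch rests on stated assumptions. It uses a shared HMAC-SHA256 key from the Python standard library in place of the TPM-protected keys and signing scheme the device actually uses. `DEVICE_KEY`, `sign_result`, and `verify_result` are hypothetical names.

```python
import hashlib
import hmac
import json

# Assumption: a symmetric key stands in for the TPM-protected device key.
# This is not Azure Percept's actual signing scheme.
DEVICE_KEY = b"hypothetical-device-key"


def sign_result(result: dict, key: bytes = DEVICE_KEY) -> dict:
    """Serialize an inference result canonically and attach an HMAC-SHA256 tag."""
    payload = json.dumps(result, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": tag}


def verify_result(message: dict, key: bytes = DEVICE_KEY) -> bool:
    """Recompute the tag on the receiving side and compare in constant time."""
    expected = hmac.new(key, message["payload"].encode("utf-8"), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["signature"])


msg = sign_result({"label": "person", "confidence": 0.91, "bbox": [0.1, 0.2, 0.3, 0.4]})
print(verify_result(msg))  # True

# Any edit to the payload after signing invalidates the tag.
tampered = dict(msg, payload=msg["payload"].replace("0.91", "0.99"))
print(verify_result(tampered))  # False
```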

Example AI Models

This example showcases a Percept DK semantic segmentation AI model (GitHub source link) based on U-Net, trained to recognize the volume of bananas in a grocery store. In the accompanying video, the bright green highlights are the inferencing results from the U-Net AI model running on the Azure Percept DK.

Semantic segmentation AI models label each pixel in a video frame with the class of object they were trained to recognize. This makes it possible to compute the two-dimensional size of irregularly shaped objects in the real world, such as the size of an excavation at a construction site, the volume of bananas in a bin, or the density of packages in a delivery truck. Because you can run AI inferencing on these items periodically, you can build time-series data on how the shapes of the detected objects change. How fast is the hole being excavated? How efficiently is the cargo space in the delivery truck being used? In the banana example above, this time-series data allows retailers to reduce food waste by creating more efficient supply chains with less safety stock. In turn, this reduces CO2 emissions through less transportation of fresh food and less fertilizer required in the soil.
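The size computation described above comes down to counting labeled pixels per frame. The following Python sketch makes several assumptions. The segmentation mask is a 2D list of class ids. `BANANA` is a hypothetical class id. The area per pixel is a constant; in practice it depends on camera geometry, which the post does not cover. The sketch then turns periodic samples into a rate of change.

```python
from typing import List, Tuple

Mask = List[List[int]]  # one class id per pixel


def class_area(mask: Mask, class_id: int, area_per_pixel: float = 1.0) -> float:
    """Count pixels labeled class_id and scale by an assumed area per pixel."""
    pixels = sum(row.count(class_id) for row in mask)
    return pixels * area_per_pixel


def rate_of_change(samples: List[Tuple[float, float]]) -> List[float]:
    """Given (timestamp_seconds, area) samples, return area change per second."""
    return [
        (a1 - a0) / (t1 - t0)
        for (t0, a0), (t1, a1) in zip(samples, samples[1:])
    ]


BANANA = 1  # hypothetical class id

# Three tiny 3x4 masks captured 60 seconds apart (illustrative data only).
masks = [
    [[1, 1, 1, 0], [1, 1, 1, 0], [1, 1, 0, 0]],
    [[1, 1, 0, 0], [1, 1, 0, 0], [1, 0, 0, 0]],
    [[1, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]],
]
series = [(60.0 * i, class_area(m, BANANA)) for i, m in enumerate(masks)]
print(series)  # [(0.0, 8.0), (60.0, 5.0), (120.0, 1.0)]
print(rate_of_change(series))
```

A negative rate means the labeled area is shrinking, as when stock is being depleted.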

This example also showcases the Bring Your Own Model (BYOM) capabilities of Azure Percept. BYOM allows you to bring your own custom computer vision pipeline to your Azure Percept DK. This tutorial shows how to convert your own TensorFlow, ONNX, or Caffe models for execution on the Intel® Movidius™ Myriad™ X within the Azure Percept DK, and then how to subclass the video pipeline IoT container to integrate your inferencing output. Many of the free, pre-trained open-source AI models in the Intel Model Zoo will run on the Myriad X.

People Counting Reference Application

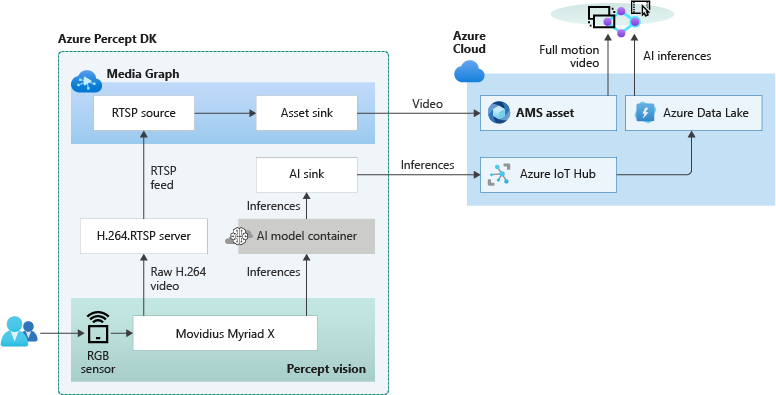

Combining edge-based AI inferencing and video with cloud-based business logic can be complex. Egress, storage, and synchronization of the edge AI output and H.264 video streams in the cloud make it even harder. Azure Percept Studio includes a free, open-source reference application that detects people and generates their {x, y} coordinates in a real-time video stream. The application also counts the people in a user-defined polygon region within the camera’s viewport. This application showcases best practices for security- and privacy-sensitive AI workloads.
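Counting people inside a user-defined region is essentially a point-in-polygon test on each detected {x, y} coordinate. The sketch below uses the standard ray-casting algorithm in Python. It is not taken from the reference application. The normalized coordinates, zone, and detections are made-up example values.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def point_in_polygon(p: Point, polygon: List[Point]) -> bool:
    """Ray casting: toggle 'inside' each time a rightward ray from p crosses an edge."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through p can be crossed.
        if (y1 > y) != (y2 > y):
            # x coordinate where this edge meets that horizontal line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_people_in_zone(detections: List[Point], zone: List[Point]) -> int:
    """Count detected {x, y} positions that fall inside the polygon zone."""
    return sum(point_in_polygon(d, zone) for d in detections)


zone = [(0.2, 0.2), (0.8, 0.2), (0.8, 0.8), (0.2, 0.8)]  # normalized coordinates
people = [(0.5, 0.5), (0.1, 0.5), (0.75, 0.3), (0.9, 0.9)]
print(count_people_in_zone(people, zone))  # 2
```

Ray casting handles arbitrary simple polygons, concave ones included. A user-drawn region therefore does not have to be a rectangle.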

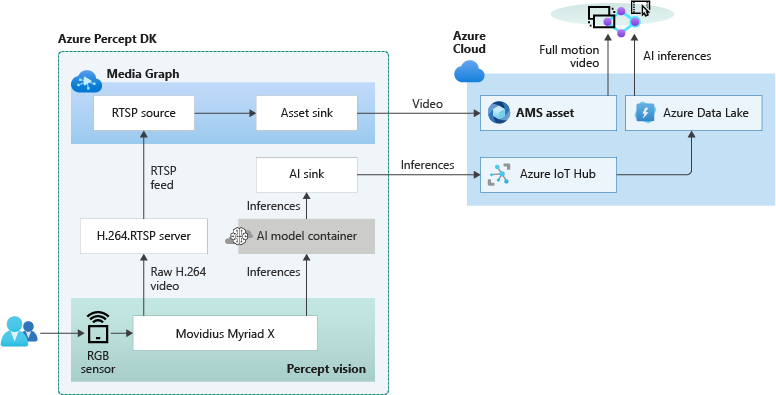

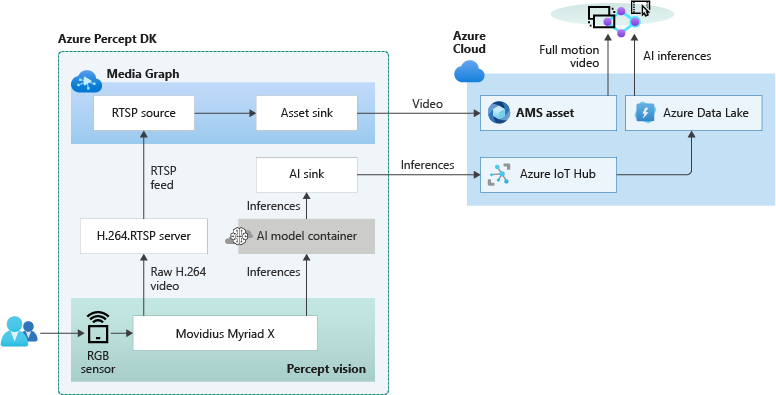

The overall topology of the reference application is shown below. The left side of the illustration contains the components that run at the edge within the Azure Percept DK. The H.264 video stream and the AI inferencing results are then sent in real time to the right side, which runs in the Azure public cloud.

Because the Myriad X performs hardware encoding of both the compressed full-motion H.264 video stream and the AI inferencing results, hardware-synchronized timestamps in each stream make it possible to provide downstream frame-level synchronization for applications running in the public cloud. The Azure Websites application provided in this example is fully stateless. It simply reads the video and AI streams from their separate storage accounts in the cloud and composes the output into a single user interface:

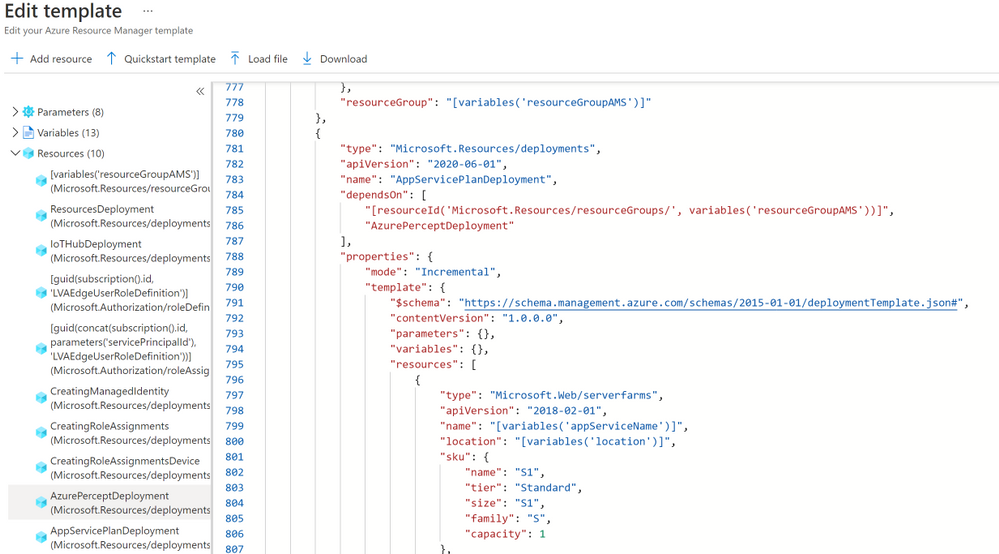

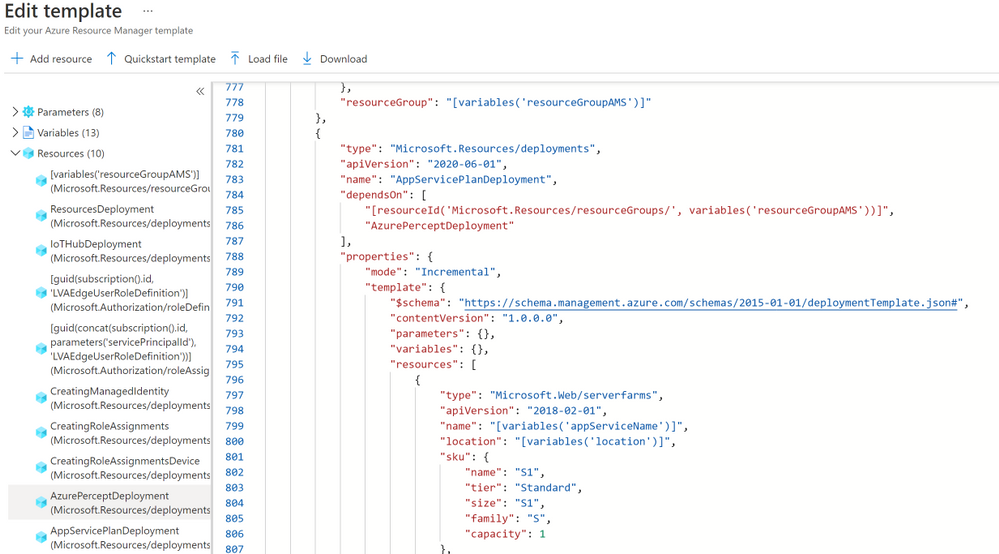
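Frame-level synchronization can be sketched as matching each video frame timestamp to the nearest inference timestamp. This Python example assumes that both streams are sorted, share one clock, and use integer millisecond timestamps. The `align` helper and the tolerance value are hypothetical and are not part of the reference application.

```python
import bisect
from typing import Dict, List, Optional, Tuple


def align(frame_ts: List[int], inferences: List[Tuple[int, dict]],
          tolerance: int) -> Dict[int, Optional[dict]]:
    """Map each frame timestamp to the inference result nearest in time.

    Assumes both lists are sorted by timestamp and share one clock.
    Frames with no inference within `tolerance` map to None.
    """
    inf_ts = [t for t, _ in inferences]
    aligned: Dict[int, Optional[dict]] = {}
    for ts in frame_ts:
        i = bisect.bisect_left(inf_ts, ts)
        best = None
        # Only the neighbors just before and at/after ts can be nearest.
        for j in (i - 1, i):
            if 0 <= j < len(inf_ts) and abs(inf_ts[j] - ts) <= tolerance:
                if best is None or abs(inf_ts[j] - ts) < abs(inf_ts[best] - ts):
                    best = j
        aligned[ts] = inferences[best][1] if best is not None else None
    return aligned


frames = [0, 33, 66, 100]                         # video frame timestamps (ms)
results = [(2, {"count": 1}), (65, {"count": 2})]  # inference timestamps (ms)
aligned = align(frames, results, tolerance=10)
print(aligned)  # {0: {'count': 1}, 33: None, 66: {'count': 2}, 100: None}
```

The binary search keeps each lookup logarithmic in the number of inference results. A stateless front end can therefore re-align both streams on every read without keeping its own index.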

The reference application also includes a fully automated deployment model that uses an Azure ARM template. If you click the “Deploy to Azure” button and then “Edit Template”, you can view the template code.

This ARM template deploys the containers to the Azure Percept DK, creates the storage accounts in the public cloud, connects the IoT Hub message routes to the storage locations, deploys the stateless Azure Websites, and then connects the website to the storage locations. This example can be used to accelerate the development of your own hybrid edge/cloud workloads.

Get Started Today

Order your Percept DK today and get started with our easy-to-understand samples and tutorials. You can rapidly solve your business modernization scenarios no matter your skill level, from beginner to experienced data scientist. With Azure Percept, you can put the cloud and years of Microsoft AI solutions to work as you pilot your own edge AI solutions.

by Contributed | Apr 23, 2021 | Technology

This article is contributed. See the original author and article here.

The Ultimate How To Guide for Presenting Content in Microsoft Teams

Vesku Nopanen is a Principal Consultant in Office 365 and Modern Work and is passionate about Microsoft Teams. He helps and coaches customers to find benefits and value when adopting new tools, methods, ways of working, and practices into their daily work-life equation. He focuses especially on Microsoft Teams and how it can change organizations’ work. He lives in Turku, Finland. Follow him on Twitter: @Vesanopanen

Backup all WSP SharePoint Solutions using PowerShell

Mohamed El-Qassas is a Microsoft MVP, SharePoint StackExchange (StackOverflow) Moderator, C# Corner MVP, Microsoft TechNet Wiki Judge, Blogger, and Senior Technical Consultant with 10+ years of experience in SharePoint, Project Server, and BI. On SharePoint StackExchange, he was elected as the first moderator in the GCC, Middle East, and Africa, and is ranked as the second top contributor of all time. Check out his blog here.

Getting started with Azure Bicep

Tobias Zimmergren is a Microsoft Azure MVP from Sweden. As the Head of Technical Operations at Rencore, Tobias designs and builds distributed cloud solutions. He has been the co-founder and co-host of the Ctrl+Alt+Azure Podcast since 2019, and was the co-founder and organizer of the Sweden SharePoint User Group from 2007 to 2017. For more, check out his blog, newsletter, and Twitter @zimmergren

Azure: Talk about Private Links

George Chrysovalantis Grammatikos is based in Greece and works for Tisski Ltd. as an Azure Cloud Architect. He has more than 10 years’ experience in technologies such as BI and SQL Server professional-level solutions, Azure technologies, networking, and security. He writes technical posts for his blog “cloudopszone.com” and TechNet Wiki articles, and also participates in discussions on TechNet and other technical blogs. Follow him on Twitter @gxgrammatikos.

Teams Real Simple with Pictures: Hyperlinked email addresses in Lists within Teams

Chris Hoard is a Microsoft Certified Trainer Regional Lead (MCT RL), Educator (MCEd) and Teams MVP. With over 10 years of cloud computing experience, he is currently building an education practice for Vuzion (Tier 2 UK CSP). His focus areas are Microsoft Teams, Microsoft 365 and entry-level Azure. Follow Chris on Twitter at @Microsoft365Pro and check out his blog here.

by Contributed | Apr 23, 2021 | Technology

This article is contributed. See the original author and article here.

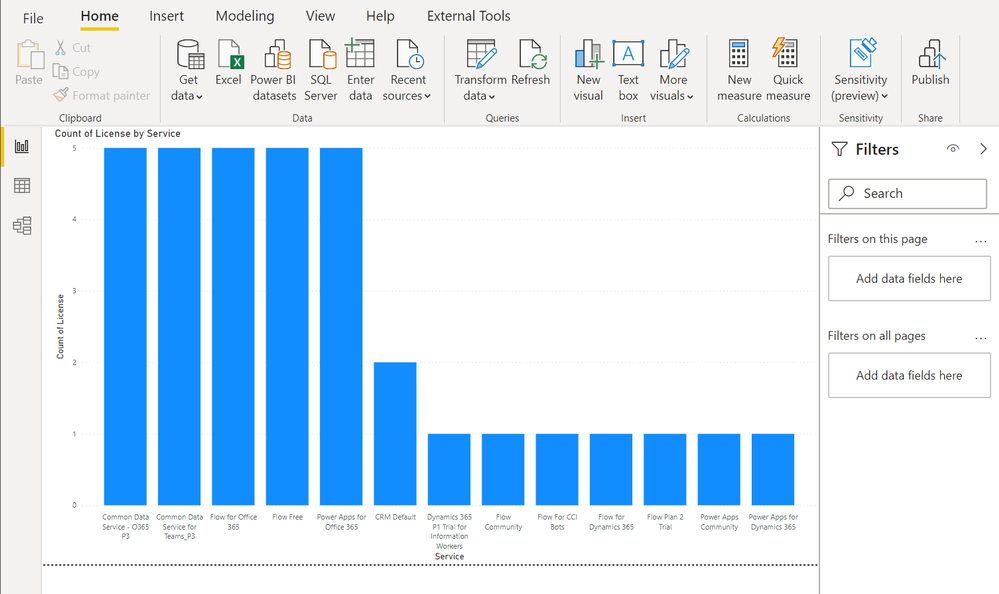

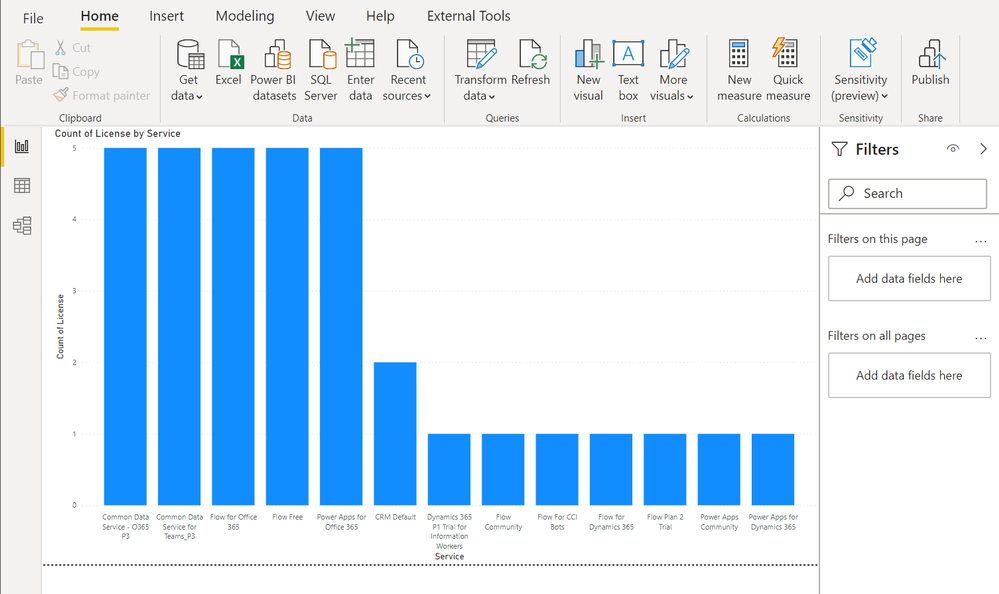

Context

During a Power Platform audit for a customer, I wanted to export all user license data into a single file to analyze it with Power BI. In this article, I’ll show you an easy way to export Power Apps and Power Automate user licenses with PowerShell!

Download user licenses

To do this, we use PowerApps PowerShell, and more particularly the Power Apps admin module.

To install this module, execute the following command as a local administrator:

Install-Module -Name Microsoft.PowerApps.Administration.PowerShell

Note: if this module is already installed on your machine, you can use the Update-Module command to update it to the latest version available.

Then, to export user license data, run the following command, replacing <PATH-TO-CSV-FILE> with the target file path:

Get-AdminPowerAppLicenses -OutputFilePath <PATH-TO-CSV-FILE>

Note: you will be prompted for your Microsoft 365 tenant credentials. You need to sign in as a Power Platform Administrator or Global Administrator for this command to succeed.

After this, you can easily load the generated CSV file into Power BI Desktop for further data analysis.

Happy reporting everyone!

You can read this article on my blog here.

Resources

https://docs.microsoft.com/en-us/powershell/powerapps/get-started-powerapps-admin

https://docs.microsoft.com/en-us/powershell/module/microsoft.powerapps.administration.powershell/get-adminpowerapplicenses

by Contributed | Apr 23, 2021 | Technology

This article is contributed. See the original author and article here.

The team is excited this week to share what we’ve been working on based on all your input. News to be covered includes Application Gateway URL Rewrite General Availability, End of Support for Ubuntu 16.04, Using Azure Migrate with Private Endpoints, an Overview of HoloLens 2 Deployment, and a security-focused Microsoft Learn module of the week.

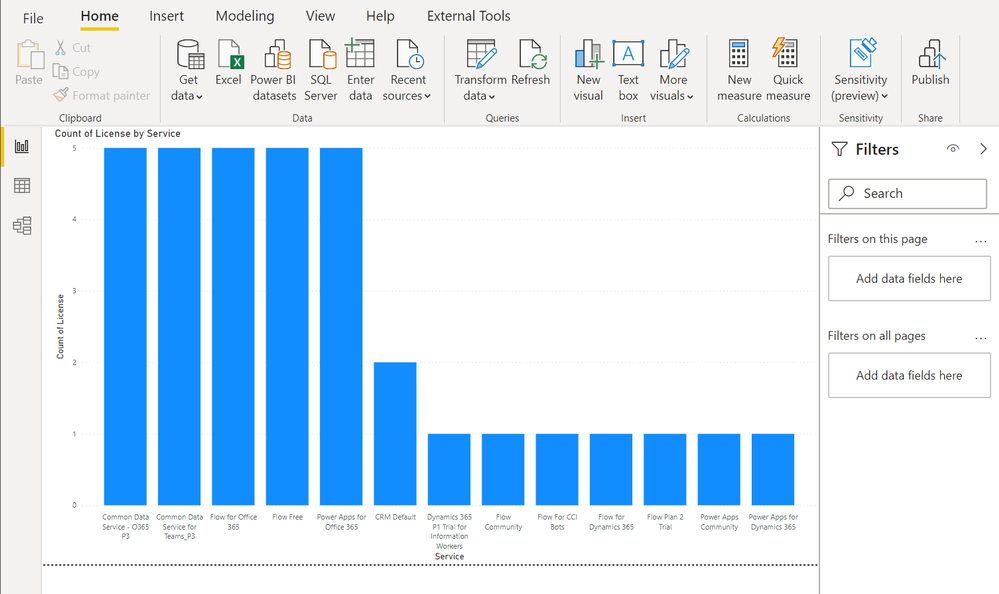

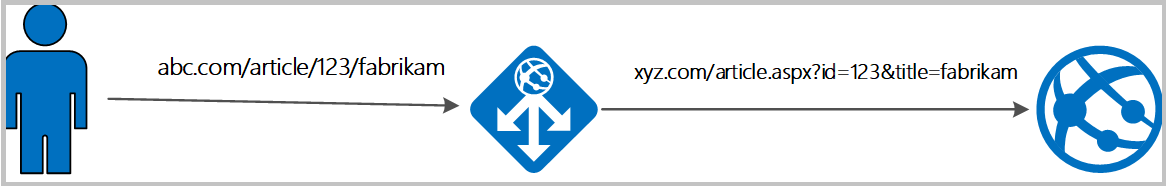

Application Gateway URL Rewrite General Availability

Azure Application Gateway can now rewrite the host name, path, and query string of the request URL. You can also rewrite the URL of all or some client requests based on one or more matching conditions. Administrators can now choose to route the request based on either the original URL or the rewritten URL. This feature enables several important scenarios, such as path-based routing on query string values and support for hosting friendly URLs.

Learn more here: Rewrite HTTP Headers and URL with Application Gateway
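A conditional rewrite that enables path-based routing on a query string value can be roughly illustrated with the Python sketch below, which moves a `category` query parameter into the path. Application Gateway rewrites are configured as rewrite rule sets rather than written as code, so this only models the logic. The `category` parameter and the `contoso.example` host are hypothetical.

```python
from urllib.parse import parse_qs, quote, urlencode, urlsplit, urlunsplit


def rewrite(url: str) -> str:
    """Hypothetical rule: if the query string has `category`, move it into the path.

    /listing?category=shoes&page=2  ->  /listing/shoes?page=2
    URLs that do not meet the condition are returned unchanged.
    """
    parts = urlsplit(url)
    query = parse_qs(parts.query)
    if "category" not in query:
        return url  # condition not met: leave the request alone
    category = query.pop("category")[0]
    new_path = parts.path.rstrip("/") + "/" + quote(category, safe="")
    new_query = urlencode(query, doseq=True)  # keep the remaining parameters
    return urlunsplit((parts.scheme, parts.netloc, new_path, new_query, parts.fragment))


print(rewrite("https://contoso.example/listing?category=shoes&page=2"))
# https://contoso.example/listing/shoes?page=2
print(rewrite("https://contoso.example/listing?page=2"))
# https://contoso.example/listing?page=2
```

A path-based routing rule can then match `/listing/shoes*` directly, which would not be possible while the value lived only in the query string.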

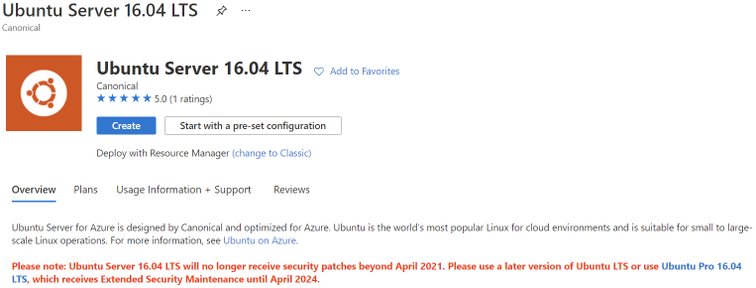

Upgrade your Ubuntu server to Ubuntu 18.04 LTS by 30 April 2021

Ubuntu is ending standard support for Ubuntu 16.04 LTS on 30 April 2021. Microsoft will replace the Ubuntu 16.04 LTS image with an Ubuntu 18.04 LTS image for new compute instances and clusters to ensure continued security updates and support from the Ubuntu community. If you have long-running compute instances or non-autoscaling clusters (min nodes > 0), please follow the instructions here to migrate them manually before 30 April 2021. After that date, support for Ubuntu 16.04 ends and no further security updates will be provided. Please migrate to Ubuntu 18.04 before 30 April 2021, and note that Microsoft will not be responsible for any security breaches after the deprecation.

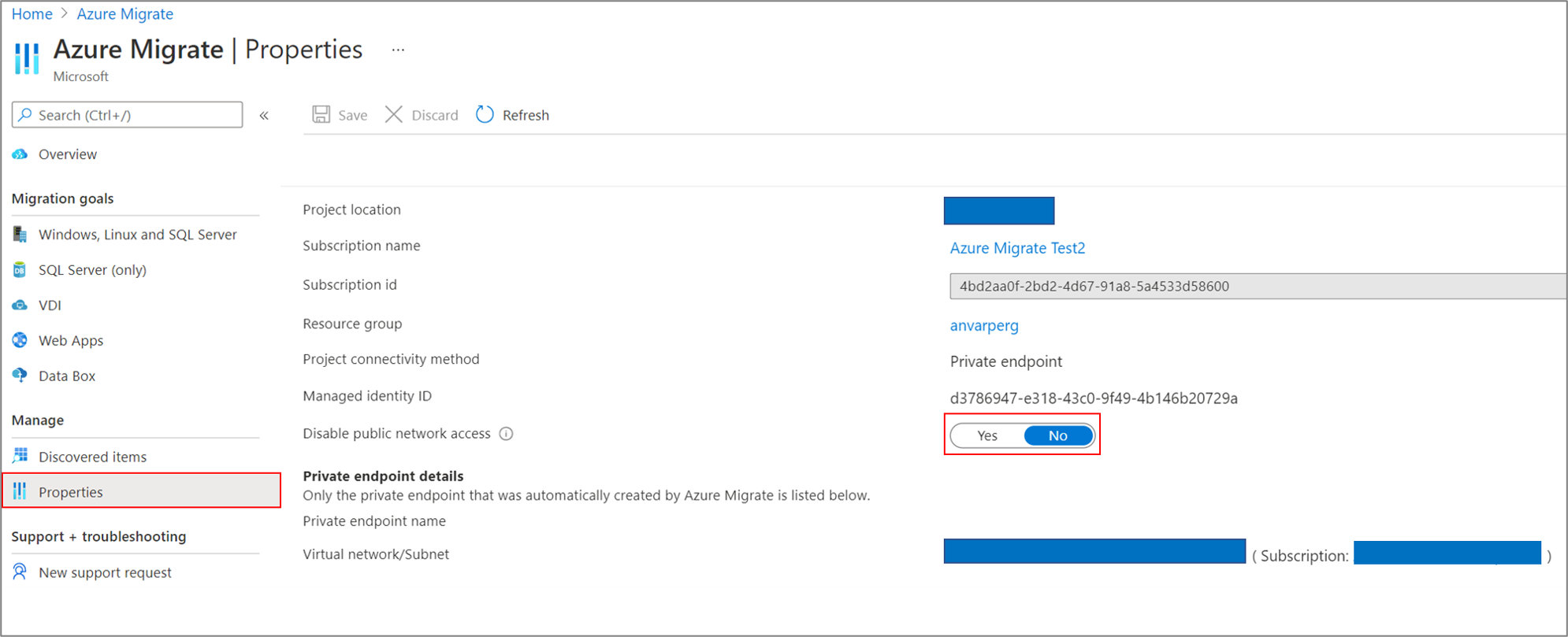

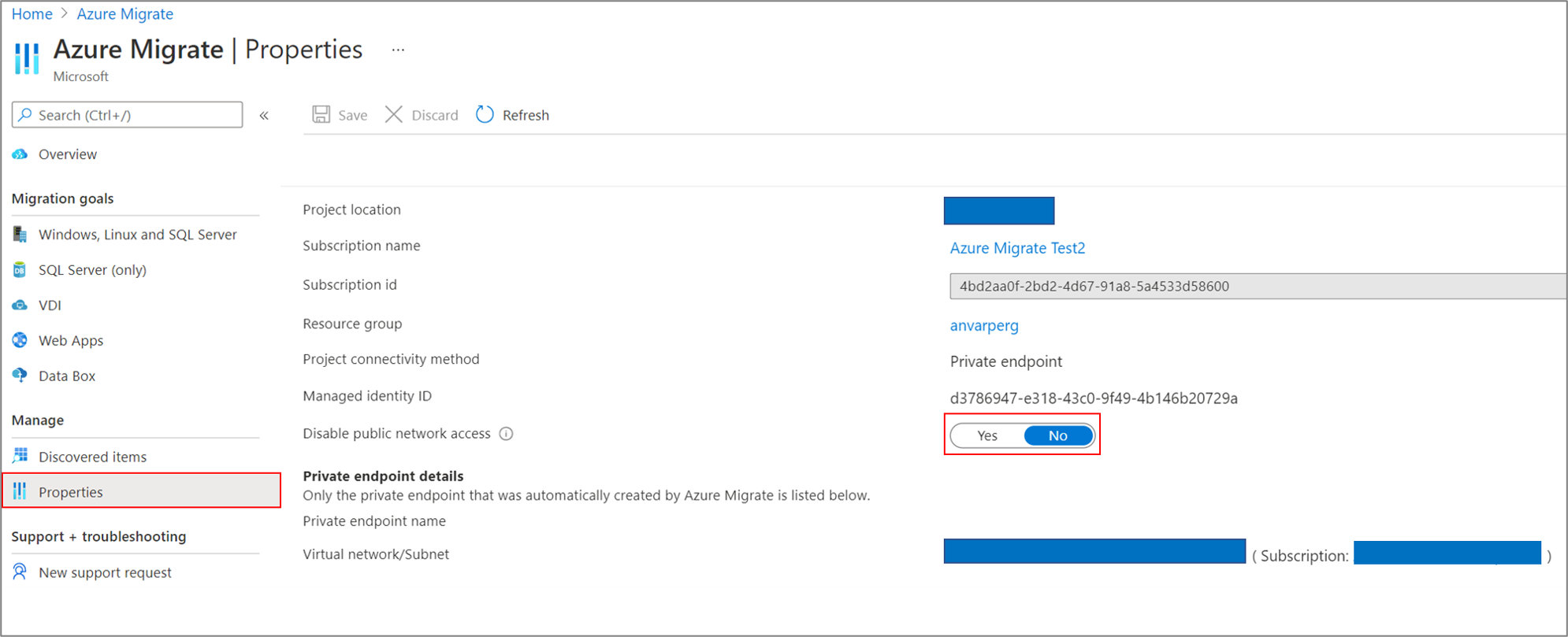

Azure Migrate with private endpoints

You can now use the Azure Migrate: Discovery and Assessment and Azure Migrate: Server Migration tools to connect privately and securely to the Azure Migrate service over an ExpressRoute private peering or a site-to-site VPN connection via Azure Private Link. The private endpoint connectivity method is recommended when there is an organizational requirement not to cross public networks to access the Azure Migrate service and other Azure resources. You can also use the Private Link support to use existing ExpressRoute private peering circuits to meet bandwidth or latency requirements.

Learn more here: Using Azure Migrate with private endpoints

HoloLens 2 Deployment Overview

Our team recently partnered with the HoloLens team to collaborate on and curate documentation about HoloLens 2 deployment. Just like any device that requires access to an organization’s network and data, HoloLens in most cases needs to be managed by that organization’s IT department.

This write-up covers the services required to get started: An Overview of How to Deploy HoloLens 2

Community Events

MS Learn Module of the Week

AZ-400: Develop a security and compliance plan

Build strategies around security and compliance that enable you to authenticate and authorize your users, handle sensitive information, and enforce proper governance.

Modules include:

- Secure your identities by using Azure Active Directory

- Create Azure users and groups in Azure Active Directory

- Authenticate apps to Azure services by using service principals and managed identities for Azure resources

- Configure and manage secrets in Azure Key Vault

- and more

Learn more here: Develop a security and compliance plan

Let us know in the comments below if there are any news items you would like to see covered in the next show. Be sure to catch the next AzUpdate episode and join us in the live chat.

by Contributed | Apr 23, 2021 | Technology

This article is contributed. See the original author and article here.

I remember running my first commands and building my first automation using Windows PowerShell back in 2006. Since then, PowerShell has become one of my daily tools to build, deploy, and manage IT environments. With the release of PowerShell 6, and now PowerShell 7, PowerShell has become cross-platform. This means you can now use it on even more systems, like Linux and macOS. With PowerShell becoming more and more powerful (you see what I did there ;)), more people are asking me how they can get started and learn PowerShell. Luckily, we just released five new modules for PowerShell on Microsoft Learn.

Learn PowerShell on Microsoft Learn

Microsoft Learn Introduction to PowerShell

Learn about the basics of PowerShell. This cross-platform command-line shell and scripting language is built for task automation and configuration management. You’ll learn basics like what PowerShell is, what it’s used for, and how to use it.

Learning objectives

- Understand what PowerShell is and what you can use it for.

- Use commands to automate tasks.

- Leverage the core cmdlets to discover commands and learn how they work.

PowerShell learn module: Introduction to PowerShell

Connect commands into a pipeline

In this module, you’ll learn how to connect commands into a pipeline. You’ll also learn about filtering left, formatting right, and other important principles.

Learning objectives

- Explore cmdlets further and construct a sequence of them in a pipeline.

- Apply sound principles to your commands by using filtering and formatting.

PowerShell learn module: Connect commands into a pipeline

Introduction to scripting in PowerShell

This module introduces you to scripting with PowerShell. It introduces various concepts to help you create script files and make them as robust as possible.

Learning objectives

- Understand how to write and run scripts.

- Use variables and parameters to make your scripts flexible.

- Apply flow-control logic to make intelligent decisions.

- Add robustness to your scripts by adding error management.

PowerShell learn module: Introduction to scripting in PowerShell

Write your first PowerShell code

Get started by writing code examples to learn the basics of programming in PowerShell!

Learning objectives

- Manage PowerShell inputs and outputs

- Diagnose errors when you type code incorrectly

- Identify different PowerShell elements like cmdlets, parameters, inputs, and outputs.

PowerShell learn module: Write your first PowerShell code

Automate Azure tasks using scripts with PowerShell

Install Azure PowerShell locally and use it to manage Azure resources.

Learning objectives

- Decide if Azure PowerShell is the right tool for your Azure administration tasks

- Install Azure PowerShell on Linux, macOS, and/or Windows

- Connect to an Azure subscription using Azure PowerShell

- Create Azure resources using Azure PowerShell

PowerShell learn module: Automate Azure tasks using scripts with PowerShell

Conclusion

I hope this helps you get started and learn PowerShell! If you have any questions feel free to leave a comment!
