by Contributed | Sep 17, 2021 | Technology

This article is contributed. See the original author and article here.

In the July update of Azure Kubernetes Service (AKS) on Azure Stack HCI, we introduced automatic distribution of virtual machine data across multiple cluster shared volumes, which makes clusters more resilient to shared storage outages. This post covers how this works and why it’s important for reliability.

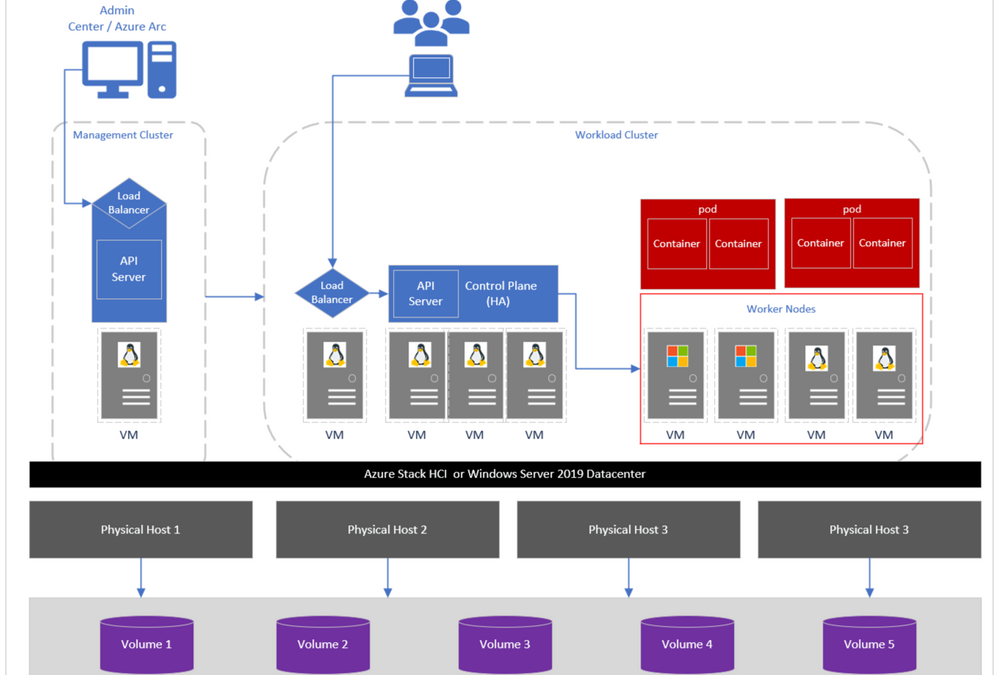

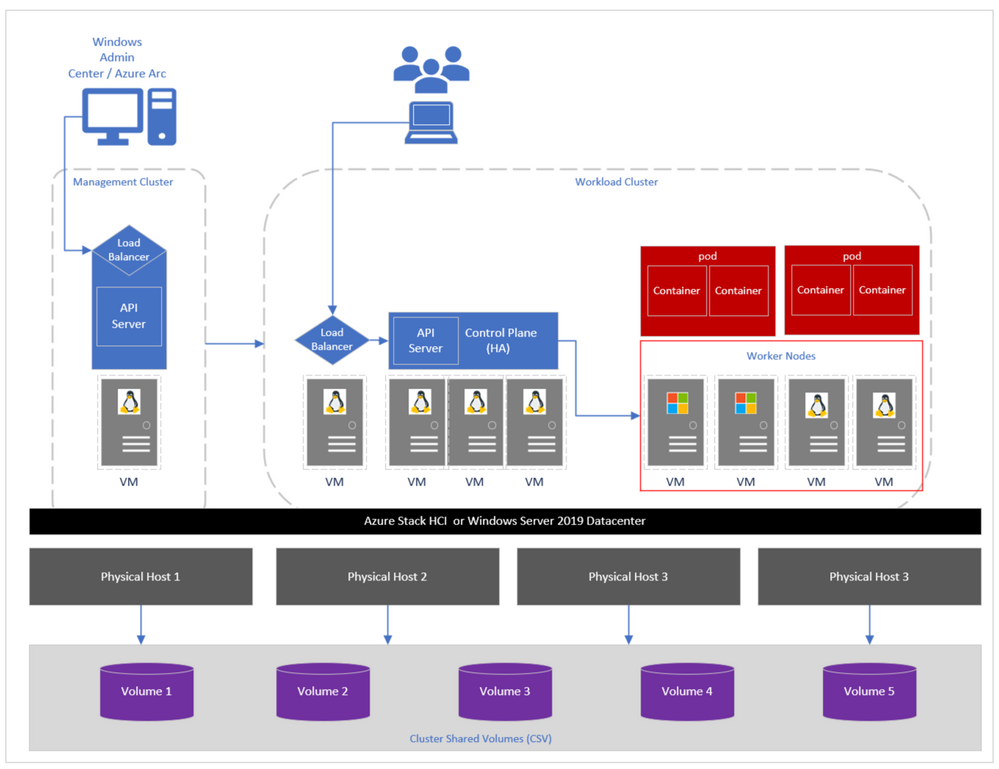

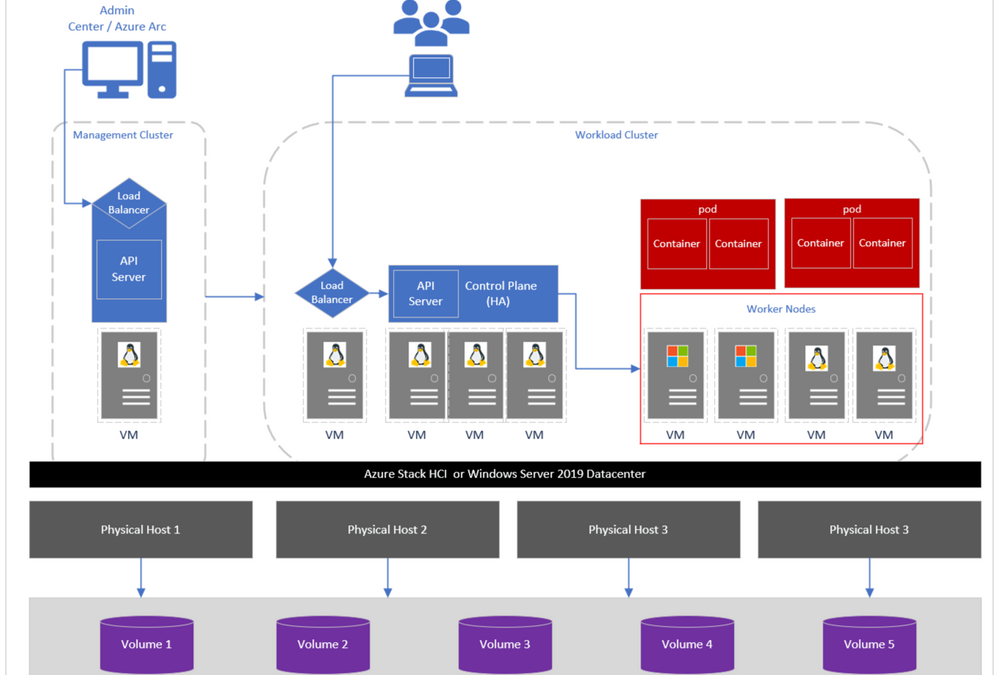

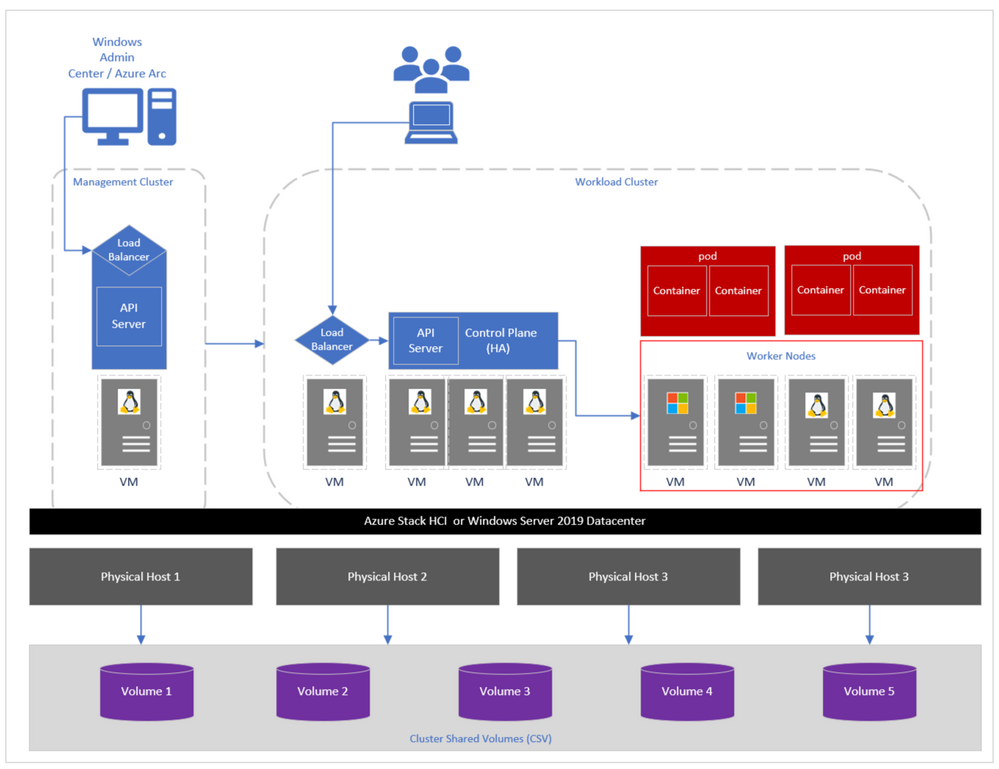

To recap, AKS-HCI is a turnkey solution that lets administrators easily deploy and manage Kubernetes clusters in datacenters and edge locations, and lets developers run and manage modern applications much as they would on the cloud-based Azure Kubernetes Service. The architecture supports running virtualized Windows and Linux workloads on top of Azure Stack HCI or Windows Server 2019 Datacenter. It comprises several layers, including a management cluster, a load balancer, workload clusters that run customer workloads, and Cluster Shared Volumes (CSVs), as shown in Figure 1. For detailed information on each of these layers, see the AKS-HCI documentation.

Figure 1: AKS-HCI cluster components.

Cluster Shared Volumes allow multiple nodes in a Windows Server failover cluster or Azure Stack HCI to simultaneously have read-write access to the same disk that is provisioned as an NTFS volume. In AKS-HCI, we use CSVs to persist virtual hard disk (VHD/VHDX) files and other configuration files required to run clusters.

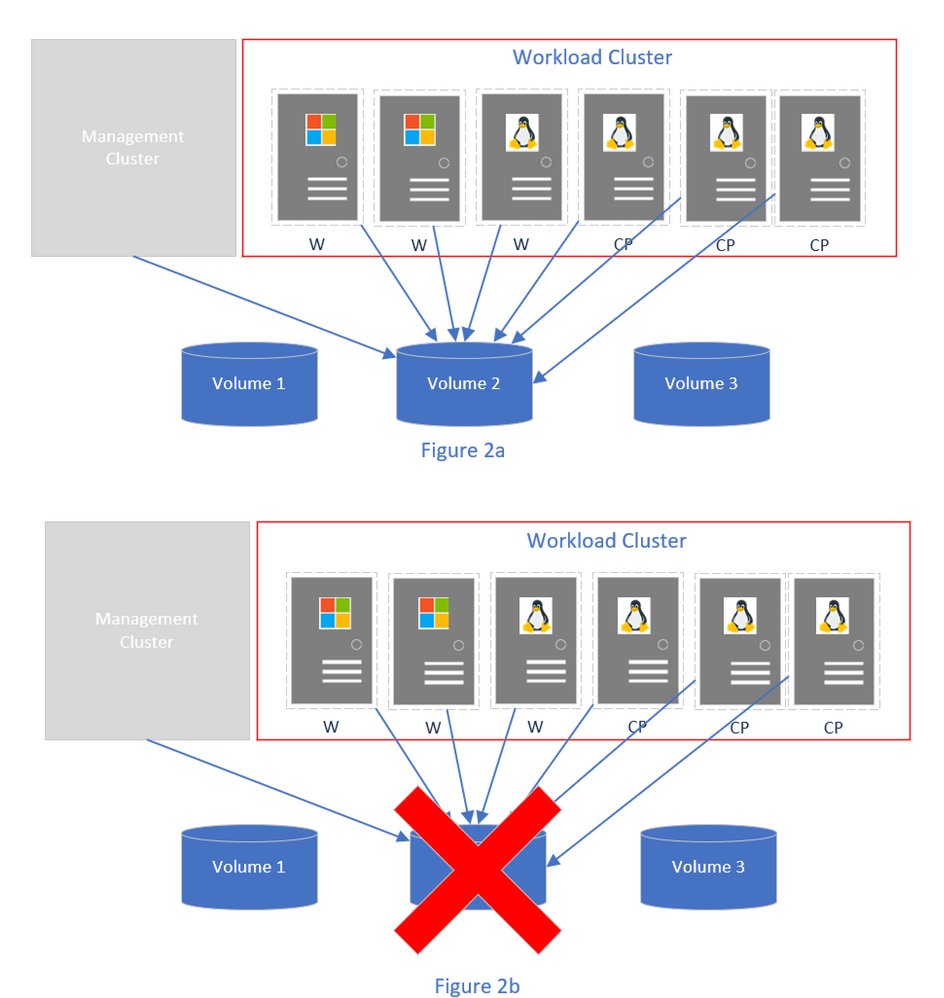

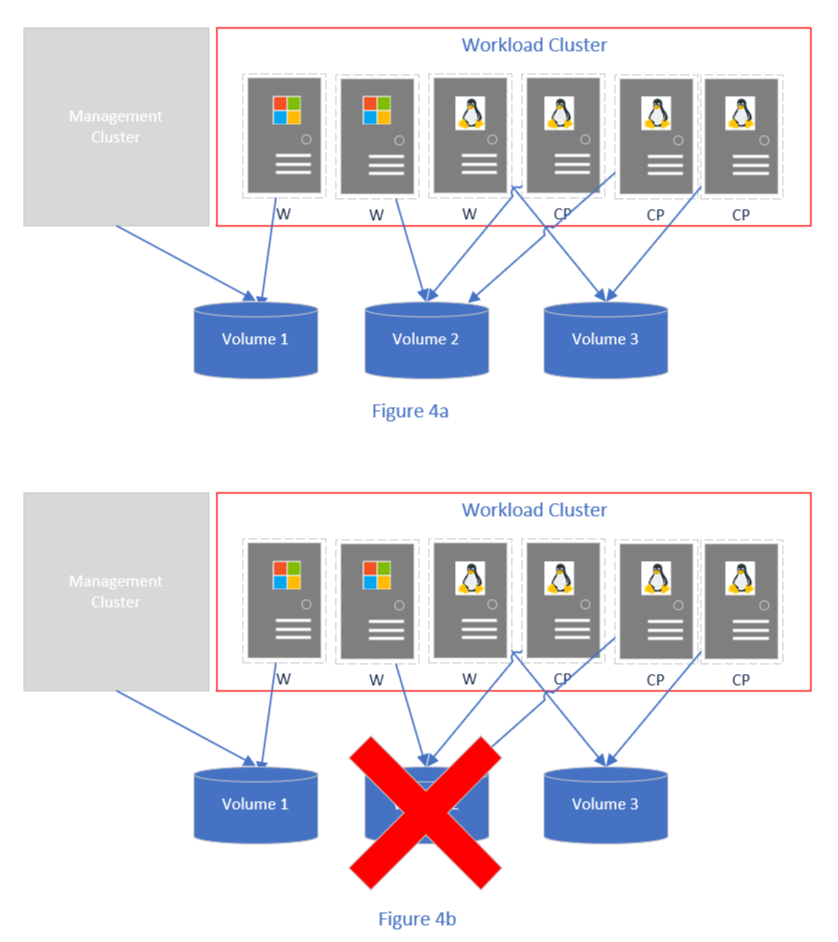

In past releases of AKS-HCI, virtual machine data was saved on a single volume in the system. This architecture created a single point of failure: the volume hosting all VM data, as shown in Figure 2a. In the event of an outage or failure in this volume, the entire cluster would be unreachable, impacting application and pod availability, as illustrated in Figure 2b.

Figure 2: Virtual machines on a single volume.

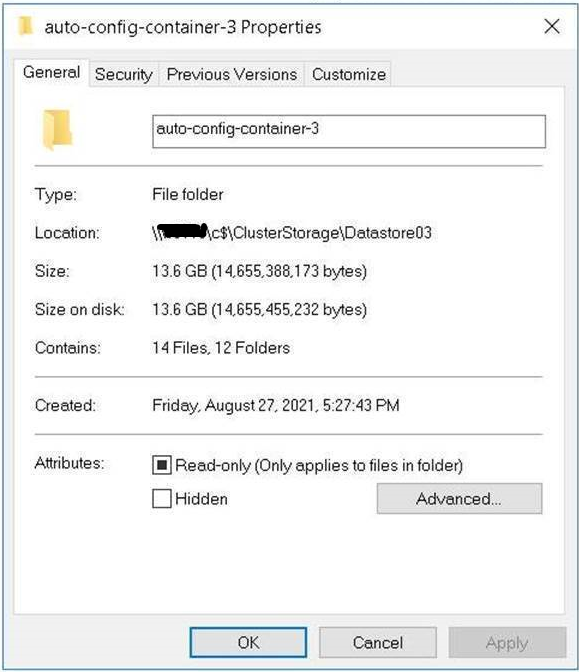

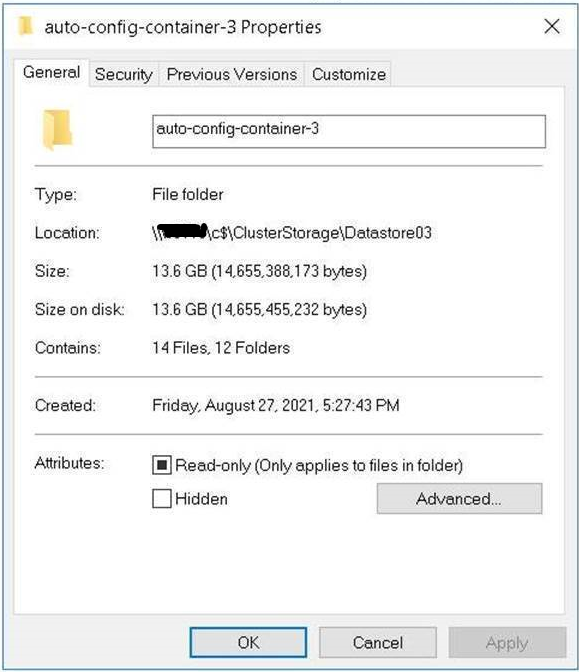

Starting with the July release, for customers running multiple Cluster Shared Volumes (CSVs) in their Azure Stack HCI clusters, a new installation of AKS-HCI automatically spreads virtual machine data across all available CSVs in the cluster by default. You will notice folders with names prefixed auto-config-container-N created on each cluster shared volume in the system.

Figure 3: Sample of an auto-config-container-X folder generated by AKS-HCI deployment.

Most customers may not have noticed this behavior as it required no changes in the cluster creation user experience; this happens behind the scenes during initial cluster installation. Note that for customers running clusters based on the June or prior releases, an update and clean installation is required for this functionality to be available.

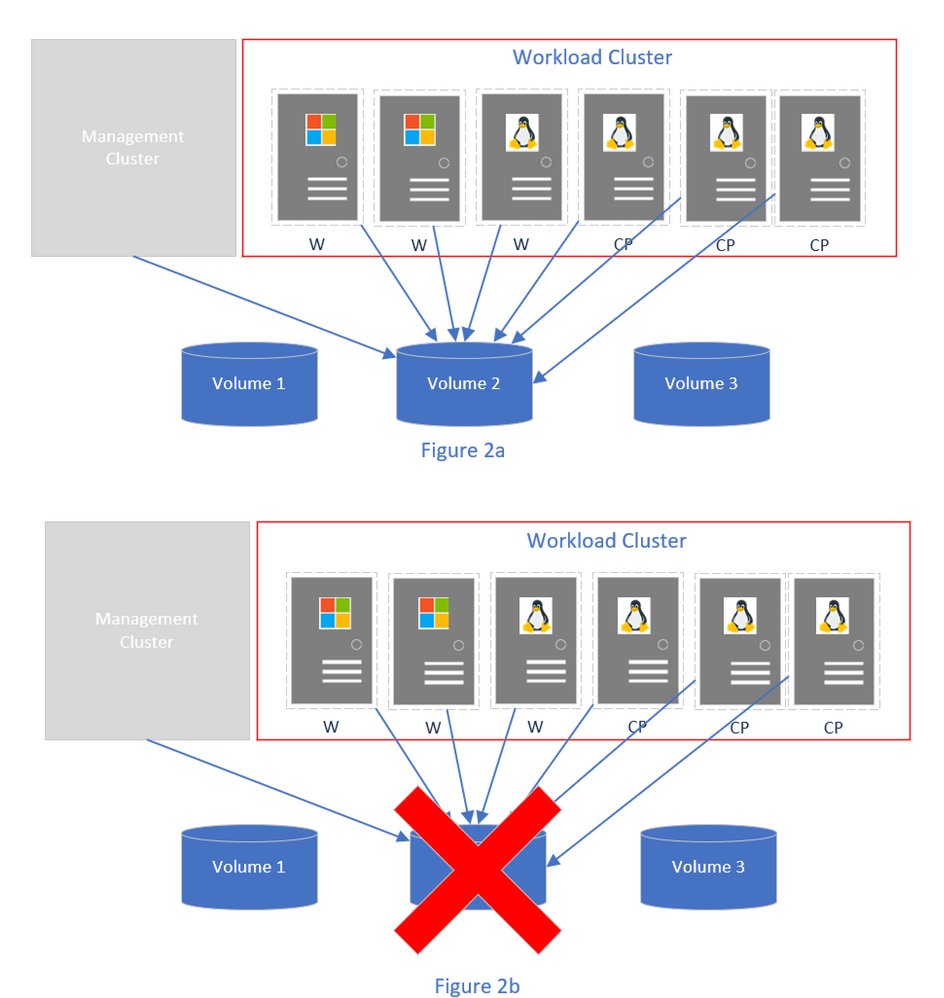

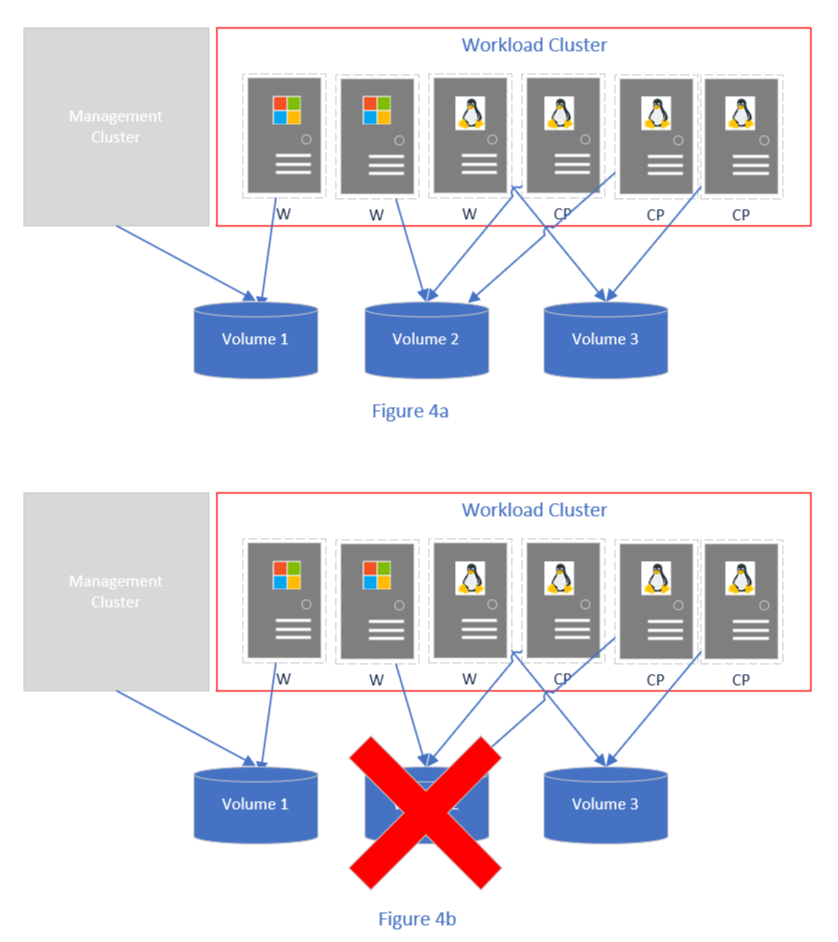

To illustrate how this improves the reliability of the system, assume you have three volumes and deploy a cluster with VM data spread out as illustrated in Figure 4a. In the event of an outage or failure in volume 2, the cluster would still be operational, as workloads would continue running in the remaining VMs (Figure 4b).

Figure 4: Virtual machines distributed across multiple cluster shared volumes.
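The effect described above can be sketched with a small simulation. This is a minimal illustration only: the post does not say how AKS-HCI chooses a volume for each VM, so the round-robin policy, the VM names, and the volume names below are all assumptions made for the example.

```python
def place_round_robin(vms, volumes):
    """Assign each VM to a volume in round-robin order.

    Round-robin is an assumed policy for illustration; it is not
    necessarily how AKS-HCI places VM data.
    """
    if not volumes:
        raise ValueError("at least one volume is required")
    return {vm: volumes[i % len(volumes)] for i, vm in enumerate(vms)}


def surviving_vms(placement, failed_volume):
    """Return the VMs whose data does not live on the failed volume."""
    return sorted(vm for vm, vol in placement.items() if vol != failed_volume)


# Hypothetical VM and volume names.
vms = [f"vm-{n}" for n in range(1, 7)]
single = place_round_robin(vms, ["volume1"])
spread = place_round_robin(vms, ["volume1", "volume2", "volume3"])

# Single volume (Figure 2): losing it takes every VM down.
print(surviving_vms(single, "volume1"))
# Three volumes (Figure 4): losing volume2 leaves the other VMs running.
print(surviving_vms(spread, "volume2"))
```

With a single volume, no VM survives the outage. With three volumes, only the VMs placed on the failed volume are lost.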

To learn more about high availability on AKS-HCI, please visit our documentation for a range of topics.

Useful links:

Try for free: https://aka.ms/AKS-HCI-Evaluate

Tech Docs: https://aka.ms/AKS-HCI-Docs

Issues and Roadmap: https://github.com/azure/aks-hci

Evaluate on Azure: https://aka.ms/AKS-HCI-EvalOnAzure

by Scott Muniz | Sep 16, 2021 | Security, Technology

The Australian Cyber Security Centre (ACSC) has released its annual report on key cyber security threats and trends for the 2020–21 financial year.

The report lists the exploitation of the pandemic environment, the disruption of essential services and critical infrastructure, ransomware, the rapid exploitation of security vulnerabilities, and the compromise of business email as last year’s most significant threats.

CISA encourages users and administrators to review ACSC’s Annual Cyber Threat Report July 2020 to June 2021 and CISA’s Stop Ransomware webpage for more information.

by Contributed | Sep 16, 2021 | Technology

Today at Windows Server Summit, Microsoft announced a new Windows Server Hybrid Administrator Associate certification, a certification that members of the team responsible for this blog have been highly involved in developing.

To obtain this certification, you need to pass two exams: AZ-800 (Administering Windows Server Hybrid Core Infrastructure) and AZ-801 (Configuring Windows Server Hybrid Advanced Services). The exam objectives address knowledge of configuring and administering core and advanced Windows Server roles and features, from AD DS, DNS, DHCP, File, Storage, and Compute through to Security, High Availability, DR, Monitoring, and Troubleshooting. The objectives cover both the traditional on-premises elements of these roles and features and their interaction with hybrid cloud technologies.

We’ve created two study guides to help you prepare for each exam. In these study guides you will find links to relevant MS Learn modules and learning paths and docs.microsoft.com articles. You can find them here:

https://aka.ms/az-800studyguide (Administering Windows Server Hybrid Core Infrastructure)

https://aka.ms/az-801studyguide (Configuring Windows Server Hybrid Advanced Services)

If you just want to get a good overview of the content of each exam, I ran through the contents of each in briefings to Jeff Woolsey from the Windows Server & Azure Stack HCI product team. Each briefing is about 20 minutes in length and watching both should give you a great idea of what each exam and the certification is all about:

AZ-800 https://youtu.be/yI8BRar8xJY

AZ-801 https://youtu.be/T-JSpxZp8xk

How these exams and the certification came about is directly related to this team’s role as Cloud Advocates and our responsibility of advocating to and on behalf of the IT Operations audience. Certification has always been important to us, and many of us got our grounding in core Microsoft technologies through preparing to take certification exams.

A good number of us first got certified on Windows NT 4, and my first book was a Microsoft Press training kit for the Windows Server 2003 admin exam. When Rick Claus made the first post on this blog introducing the team back in 2018, one of the first comments we got asked us about future Windows Server training and certification. We know the topic is important to you, our audience, because it has regularly come up when we present to audiences at Ignite or user groups, on Twitter, or in casual conversation at the supermarket.

Over the last 18 months, Cloud Advocates have worked with World Wide Learning, Marketing, and the Windows Server and Azure Stack HCI product teams to design and develop MS Learn and instructor-led training content covering the fundamental technologies addressed by the AZ-800 and AZ-801 exams. These modules, paths, and courses laid the path for the certification announced today.

It’s not a stretch to say that over the last few years cloud technologies have increasingly interacted with the on-premises world. Just as WINS was critical to NT4, AD was critical to Windows 2000, and virtualization critical to Windows Server 2008 and Windows Server 2012, cloud technologies are an important element of today’s on-premises Windows Server deployments.

Role-based certifications address the tasks that people perform in the course of their jobs. Any new certification around Windows Server had to address not only the core on-premises roles, but also how those roles are extended by technologies hosted in the cloud. Through our regular interactions with our audience, we’ve seen time and time again that we’re all living in a hybrid world, even if the degree to which we’re living in that world varies from organization to organization.

Windows Server 2022 has been designed to work in hybrid cloud environments, something you see in everything from Windows Admin Center to the extended capabilities made available through Azure Arc and Azure File Sync. The description for each exam indicates that exam candidates should have experience with the technologies they are being tested on. Whereas a few years ago the hybrid story wasn’t as comprehensive or compelling, the release of Windows Server 2022 provided an opportunity to return to a certification that attests to how people do and will use the operating system today and into the future.

The AZ-800 and AZ-801 exams will go into beta towards the end of 2021. An announcement will be made when the betas are available and we expect that uptake of available seats on the beta will be swift. The exams are likely to RTM early in 2022. By providing you with a lot of information now, we hope you’ll have a good amount of time to get prepared for this brand new certification.

by Scott Muniz | Sep 16, 2021 | Security, Technology

Summary

This Joint Cybersecurity Advisory uses the MITRE Adversarial Tactics, Techniques, and Common Knowledge (ATT&CK®) framework, Version 8. See the ATT&CK for Enterprise framework for referenced threat actor tactics and techniques.

This joint advisory is the result of analytic efforts between the Federal Bureau of Investigation (FBI), United States Coast Guard Cyber Command (CGCYBER), and the Cybersecurity and Infrastructure Security Agency (CISA) to highlight the cyber threat associated with active exploitation of a newly identified vulnerability (CVE-2021-40539) in ManageEngine ADSelfService Plus—a self-service password management and single sign-on solution.

CVE-2021-40539, rated critical by the Common Vulnerability Scoring System (CVSS), is an authentication bypass vulnerability affecting representational state transfer (REST) application programming interface (API) URLs that could enable remote code execution. The FBI, CISA, and CGCYBER assess that advanced persistent threat (APT) cyber actors are likely among those exploiting the vulnerability. The exploitation of ManageEngine ADSelfService Plus poses a serious risk to critical infrastructure companies, U.S.-cleared defense contractors, academic institutions, and other entities that use the software. Successful exploitation of the vulnerability allows an attacker to place webshells, which enable the adversary to conduct post-exploitation activities, such as compromising administrator credentials, conducting lateral movement, and exfiltrating registry hives and Active Directory files.

Zoho ManageEngine ADSelfService Plus build 6114, which Zoho released on September 6, 2021, fixes CVE-2021-40539. FBI, CISA, and CGCYBER strongly urge users and administrators to update to ADSelfService Plus build 6114. Additionally, FBI, CISA, and CGCYBER strongly urge organizations to ensure ADSelfService Plus is not directly accessible from the internet.

The FBI, CISA, and CGCYBER have reports of malicious cyber actors using exploits against CVE-2021-40539 to gain access [T1190] to ManageEngine ADSelfService Plus, as early as August 2021. The actors have been observed using various tactics, techniques, and procedures (TTPs), including:

- Frequently writing webshells [T1505.003] to disk for initial persistence

- Obfuscating and Deobfuscating/Decoding Files or Information [T1027 and T1140]

- Conducting further operations to dump user credentials [T1003]

- Living off the land by only using signed Windows binaries for follow-on actions [T1218]

- Adding/deleting user accounts as needed [T1136]

- Stealing copies of the Active Directory database (NTDS.dit) [T1003.003] or registry hives

- Using Windows Management Instrumentation (WMI) for remote execution [T1047]

- Deleting files to remove indicators from the host [T1070.004]

- Discovering domain accounts with the net Windows command [T1087.002]

- Using Windows utilities to collect and archive files for exfiltration [T1560.001]

- Using custom symmetric encryption for command and control (C2) [T1573.001]

The FBI, CISA, and CGCYBER are proactively investigating and responding to this malicious cyber activity.

- FBI is leveraging specially trained cyber squads in each of its 56 field offices and CyWatch, the FBI’s 24/7 operations center and watch floor, which provides around-the-clock support to track incidents and communicate with field offices across the country and partner agencies.

- CISA offers a range of no-cost cyber hygiene services to help organizations assess, identify, and reduce their exposure to threats. By requesting these services, organizations of any size could find ways to reduce their risk and mitigate attack vectors.

- CGCYBER has deployable elements that provide cyber capability to marine transportation system critical infrastructure in proactive defense or response to incidents.

Sharing technical and/or qualitative information with the FBI, CISA, and CGCYBER helps empower and amplify our capabilities as federal partners to collect and share intelligence and engage with victims while working to unmask and hold accountable those conducting malicious cyber activities. See the Contact Information section below for details.

Technical Details

Successful compromise of ManageEngine ADSelfService Plus, via exploitation of CVE-2021-40539, allows the attacker to upload a .zip file containing a JavaServer Pages (JSP) webshell masquerading as an x509 certificate: service.cer. Subsequent requests are then made to different API endpoints to further exploit the victim’s system.

After the initial exploitation, the JSP webshell is accessible at /help/admin-guide/Reports/ReportGenerate.jsp. The attacker then attempts to move laterally using Windows Management Instrumentation (WMI), gain access to a domain controller, dump NTDS.dit and SECURITY/SYSTEM registry hives, and then, from there, continues the compromised access.

Confirming a successful compromise of ManageEngine ADSelfService Plus may be difficult—the attackers run clean-up scripts designed to remove traces of the initial point of compromise and hide any relationship between exploitation of the vulnerability and the webshell.

Targeted Sectors

APT cyber actors have targeted academic institutions, defense contractors, and critical infrastructure entities in multiple industry sectors—including transportation, IT, manufacturing, communications, logistics, and finance. Illicitly obtained access and information may disrupt company operations and subvert U.S. research in multiple sectors.

Indicators of Compromise

Hashes:

068d1b3813489e41116867729504c40019ff2b1fe32aab4716d429780e666324

49a6f77d380512b274baff4f78783f54cb962e2a8a5e238a453058a351fcfbba
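One way to use the hashes above is to compare them against files on a suspect host. The sketch below assumes the two values are SHA-256 digests (they are 64 hexadecimal characters long; the advisory text here does not name the algorithm). The function names and the directory argument are hypothetical.

```python
import hashlib
from pathlib import Path

# Hash values copied from the advisory; assumed to be SHA-256 digests.
KNOWN_BAD_SHA256 = {
    "068d1b3813489e41116867729504c40019ff2b1fe32aab4716d429780e666324",
    "49a6f77d380512b274baff4f78783f54cb962e2a8a5e238a453058a351fcfbba",
}


def sha256_of(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_known_bad(root, known_bad=KNOWN_BAD_SHA256):
    """Return files under root whose SHA-256 digest is in known_bad."""
    hits = []
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            if sha256_of(path) in known_bad:
                hits.append(path)
        except OSError:
            # Unreadable files are skipped; a real sweep should log them.
            continue
    return sorted(hits)
```

A hash match is strong evidence, but a lack of matches proves little, since a webshell can be modified to change its hash.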

File paths:

C:\ManageEngine\ADSelfService Plus\webapps\adssp\help\admin-guide\reports\ReportGenerate.jsp

C:\ManageEngine\ADSelfService Plus\webapps\adssp\html\promotion\adap.jsp

C:\ManageEngine\ADSelfService Plus\work\Catalina\localhost\ROOT\org\apache\jsp\help

C:\ManageEngine\ADSelfService Plus\jre\bin\SelfSe~1.key (filename varies with an epoch timestamp of creation, extension may vary as well)

C:\ManageEngine\ADSelfService Plus\webapps\adssp\Certificates\SelfService.csr

C:\ManageEngine\ADSelfService Plus\bin\service.cer

C:\Users\Public\custom.txt

C:\Users\Public\custom.bat

C:\ManageEngine\ADSelfService Plus\work\Catalina\localhost\ROOT\org\apache\jsp\help (including subdirectories and contained files)
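A quick presence check for some of the file paths listed above might look like the following sketch. It assumes the product is installed under a single root directory (the advisory's paths suggest C:\ManageEngine\ADSelfService Plus) and covers only a subset of the listed files. The function name is hypothetical.

```python
from pathlib import Path

# Subset of the advisory's file paths, relative to the install directory.
# pathlib accepts forward slashes on Windows as well.
RELATIVE_IOC_PATHS = [
    "webapps/adssp/help/admin-guide/reports/ReportGenerate.jsp",
    "webapps/adssp/html/promotion/adap.jsp",
    "webapps/adssp/Certificates/SelfService.csr",
    "bin/service.cer",
]


def present_iocs(install_root, relative_paths=RELATIVE_IOC_PATHS):
    """Return the relative paths that exist under install_root."""
    root = Path(install_root)
    return [rel for rel in relative_paths if (root / rel).exists()]


if __name__ == "__main__":
    # Assumed default install location, taken from the paths above.
    for hit in present_iocs(r"C:\ManageEngine\ADSelfService Plus"):
        print("found:", hit)
```

The two files under C:\Users\Public can be checked the same way with their absolute paths. Any hit is a reason to investigate further, not proof of compromise on its own.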

Webshell URL Paths:

/help/admin-guide/Reports/ReportGenerate.jsp

/html/promotion/adap.jsp

Check log files located at C:\ManageEngine\ADSelfService Plus\logs for evidence of successful exploitation of the ADSelfService Plus vulnerability:

- In access* logs:

/help/admin-guide/Reports/ReportGenerate.jsp

/ServletApi/../RestApi/LogonCustomization

/ServletApi/../RestAPI/Connection

- In serverOut_* logs:

Keystore will be created for "admin"

The status of keystore creation is Upload!

- In adslog* logs:

Java traceback errors that include references to NullPointerException in addSmartCardConfig or getSmartCardConfig
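The log checks above could be scripted roughly as follows. This sketch makes several assumptions: the access-log indicator is treated as three separate request paths, the serverOut indicator as two separate messages, log files are matched by file name prefix, and matching is a plain substring test per line. The function name is hypothetical.

```python
from pathlib import Path

# File name prefix -> substrings to look for, based on the list above.
LOG_INDICATORS = {
    "access": [
        "/help/admin-guide/Reports/ReportGenerate.jsp",
        "/ServletApi/../RestApi/LogonCustomization",
        "/ServletApi/../RestAPI/Connection",
    ],
    "serverOut_": [
        'Keystore will be created for "admin"',
        "The status of keystore creation is Upload!",
    ],
    # For adslog hits, review the surrounding traceback for
    # NullPointerException; the two may be on different lines.
    "adslog": ["addSmartCardConfig", "getSmartCardConfig"],
}


def scan_logs(log_dir):
    """Return (file name, line number, indicator) for each matching line."""
    hits = []
    for path in sorted(Path(log_dir).iterdir()):
        if not path.is_file():
            continue
        needles = [
            needle
            for prefix, strings in LOG_INDICATORS.items()
            if path.name.startswith(prefix)
            for needle in strings
        ]
        if not needles:
            continue
        with open(path, encoding="utf-8", errors="replace") as handle:
            for number, line in enumerate(handle, start=1):
                for needle in needles:
                    if needle in line:
                        hits.append((path.name, number, needle))
    return hits
```

Because the advisory notes that the actors delete specific log lines, an empty result should not be read as a clean bill of health.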

TTPs:

- WMI for lateral movement and remote code execution (wmic.exe)

- Using plaintext credentials acquired from compromised ADSelfService Plus host

- Using pg_dump.exe to dump ManageEngine databases

- Dumping NTDS.dit and SECURITY/SYSTEM/NTUSER registry hives

- Exfiltration through webshells

- Post-exploitation activity conducted with compromised U.S. infrastructure

- Deleting specific, filtered log lines

Yara Rules:

rule ReportGenerate_jsp {
    strings:
        $s1 = "decrypt(fpath)"
        $s2 = "decrypt(fcontext)"
        $s3 = "decrypt(commandEnc)"
        $s4 = "upload failed!"
        $s5 = "sevck"
        $s6 = "newid"
    condition:
        filesize < 15KB and 4 of them
}

rule EncryptJSP {
    strings:
        $s1 = "AEScrypt"
        $s2 = "AES/CBC/PKCS5Padding"
        $s3 = "SecretKeySpec"
        $s4 = "FileOutputStream"
        $s5 = "getParameter"
        $s6 = "new ProcessBuilder"
        $s7 = "new BufferedReader"
        $s8 = "readLine()"
    condition:
        filesize < 15KB and 6 of them
}
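For readers without a YARA engine at hand, the logic of the two rules can be approximated in plain Python. This is a sketch, not a replacement for YARA. It mirrors the conditions as written (file size under 15 KB, counted as 15 × 1024 bytes, and at least N of the listed strings present) and treats the strings as case-sensitive ASCII. The function and constant names are hypothetical.

```python
MAX_SIZE = 15 * 1024  # "filesize < 15KB"

REPORT_GENERATE_STRINGS = [
    b"decrypt(fpath)", b"decrypt(fcontext)", b"decrypt(commandEnc)",
    b"upload failed!", b"sevck", b"newid",
]
ENCRYPT_JSP_STRINGS = [
    b"AEScrypt", b"AES/CBC/PKCS5Padding", b"SecretKeySpec",
    b"FileOutputStream", b"getParameter", b"new ProcessBuilder",
    b"new BufferedReader", b"readLine()",
]


def rule_matches(data, strings, minimum):
    """True if data is under 15 KB and contains at least `minimum` strings."""
    if len(data) >= MAX_SIZE:
        return False
    return sum(1 for s in strings if s in data) >= minimum


def matches_report_generate(data):
    """Approximates rule ReportGenerate_jsp (4 of 6 strings)."""
    return rule_matches(data, REPORT_GENERATE_STRINGS, 4)


def matches_encrypt_jsp(data):
    """Approximates rule EncryptJSP (6 of 8 strings)."""
    return rule_matches(data, ENCRYPT_JSP_STRINGS, 6)
```

Pass each function the raw bytes of a candidate file, for example the contents of a .jsp file read in binary mode.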

Mitigations

Organizations that identify any activity related to ManageEngine ADSelfService Plus indicators of compromise within their networks should take action immediately.

Zoho ManageEngine ADSelfService Plus build 6114, which Zoho released on September 6, 2021, fixes CVE-2021-40539. FBI, CISA, and CGCYBER strongly urge users and administrators to update to ADSelfService Plus build 6114. Additionally, FBI, CISA, and CGCYBER strongly urge organizations to ensure ADSelfService Plus is not directly accessible from the internet.

Additionally, FBI, CISA, and CGCYBER strongly recommend domain-wide password resets and double Kerberos Ticket Granting Ticket (TGT) password resets if any indication is found that the NTDS.dit file was compromised.

Actions for Affected Organizations

Immediately report as an incident to CISA or the FBI (refer to Contact Information section below) the existence of any of the following:

- Identification of indicators of compromise as outlined above.

- Presence of webshell code on compromised ManageEngine ADSelfService Plus servers.

- Unauthorized access to or use of accounts.

- Evidence of lateral movement by malicious actors with access to compromised systems.

- Other indicators of unauthorized access or compromise.

Contact Information

Recipients of this report are encouraged to contribute any additional information that they may have related to this threat.

For any questions related to this report or to report an intrusion and request resources for incident response or technical assistance, please contact:

- To report suspicious or criminal activity related to information found in this Joint Cybersecurity Advisory, contact your local FBI field office at https://www.fbi.gov/contact-us/field-offices, or the FBI’s 24/7 Cyber Watch (CyWatch) at (855) 292-3937 or by e-mail at CyWatch@fbi.gov. When available, please include the following information regarding the incident: date, time, and location of the incident; type of activity; number of people affected; type of equipment used for the activity; the name of the submitting company or organization; and a designated point of contact.

- To request incident response resources or technical assistance related to these threats, contact CISA at Central@cisa.gov.

- To report cyber incidents to the Coast Guard pursuant to 33 CFR Subchapter H, Part 101.305, please contact the USCG National Response Center (NRC) by phone at 1-800-424-8802 or by email at NRC@uscg.mil.

Revisions

September 16, 2021: Initial Version

by Scott Muniz | Sep 16, 2021 | Security, Technology

The Federal Bureau of Investigation (FBI), CISA, and Coast Guard Cyber Command (CGCYBER) have released a Joint Cybersecurity Advisory (CSA) detailing the active exploitation of an authentication bypass vulnerability (CVE-2021-40539) in Zoho ManageEngine ADSelfService Plus—a self-service password management and single sign-on solution. The FBI, CISA, and CGCYBER assess that advanced persistent threat (APT) cyber actors are likely among those exploiting the vulnerability. The exploitation of this vulnerability poses a serious risk to critical infrastructure companies, U.S.-cleared defense contractors, academic institutions, and other entities that use the software.

CISA strongly encourages users and administrators to review Joint FBI-CISA-CGCYBER CSA: APT Actors Exploiting Newly Identified Vulnerability in ManageEngine ADSelfService Plus and immediately implement the recommended mitigations, which include updating to ManageEngine ADSelfService Plus build 6114.
