Unlock new value with your Dynamics 365 data in Microsoft 365 and Fabric, while safeguarding your data and assets


Navigating the complex world of business data in the age of AI presents unique challenges to enterprises. Organizations grapple with harnessing disparate data sources and addressing security and compliance risks while remaining cost-effective. Left unaddressed, these challenges lead to inefficiencies and missed opportunities. Dynamics 365 and Dataverse offer a unified platform for managing data effectively and securely at hyperscale, while empowering low-code makers and business users of Microsoft Dynamics 365 and Power Platform.

Customers in industries from finance to retail have trusted Microsoft Dynamics 365 and Power Platform with their data and processes for years. We are excited to announce multiple features that help IT administrators effectively navigate rising AI, data, and security challenges. With Microsoft Purview integration, data governance and compliance risks are significantly reduced. Microsoft Sentinel provides vigilant monitoring against threats, while enhanced logging to the Microsoft 365 unified audit log tackles insider threats head-on. With Dataverse Link to Fabric, administrators can enable simpler data integration for low-code makers, without the need to build and govern complex data pipelines. Moreover, Dataverse elastic tables and long-term data retention promise a substantial improvement in both hyperscale management and ROI, reinforcing a robust, secure, and cost-efficient data ecosystem.

Protect your data and assets in the age of AI

Growing cyber risks and increased corporate liability exposure for breaches have driven an increased focus on security in many organizations. To address this, Dataverse provides a comprehensive platform to secure your data and assets. At Ignite 2023 we are announcing several new security capabilities:

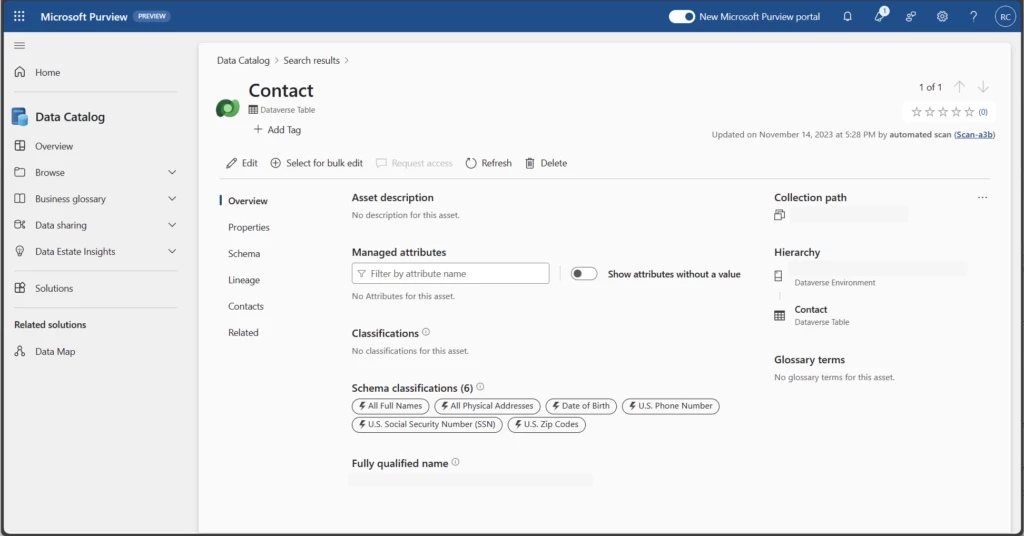

Govern Dynamics 365 and Power Platform data in Dataverse through Microsoft Purview integration

Dataverse integration with Microsoft Purview’s Data Map, available shortly in public preview, enables automated data discovery and sensitive data classification. The integration will help your organization understand and govern its business application data estate, safeguard that data, and improve its risk and compliance posture. Learn more here: http://aka.ms/DataversePurviewIntegration

Monitor and react to threats with Sentinel

The Microsoft Sentinel solution for Microsoft Power Platform will be in public preview across regions over the next few weeks. Microsoft Sentinel is also integrated with Dynamics 365, with recently added out-of-the-box (OOB) analytics rules. With Sentinel integration, customers can detect suspicious activities such as Microsoft Power Apps execution from unauthorized geographies, suspicious data destruction by Power Apps, mass deletion of Power Apps, phishing attacks via Power Apps, Power Automate flow activity by departing employees, Microsoft Power Platform connectors added to an environment, and the update or removal of Microsoft Power Platform data loss prevention policies. Learn more here: http://aka.ms/DataverseSentinelIntegration

Manage the risk of insider threats via enhanced logging to the Microsoft 365 Unified Audit Log

To manage the risk of insider threats, all administrator actions in Power Platform are logged to the Microsoft 365 unified audit log. This gives the security teams that manage compliance, and the insider risk management teams that act on these events, the ability to mitigate risks in the organization. Learn more here: http://aka.ms/PowerPlatformAdminAuditLogging

Seamlessly integrate your Dynamics 365 data with Fabric and Microsoft 365

Empower your users to do more with Link to Fabric

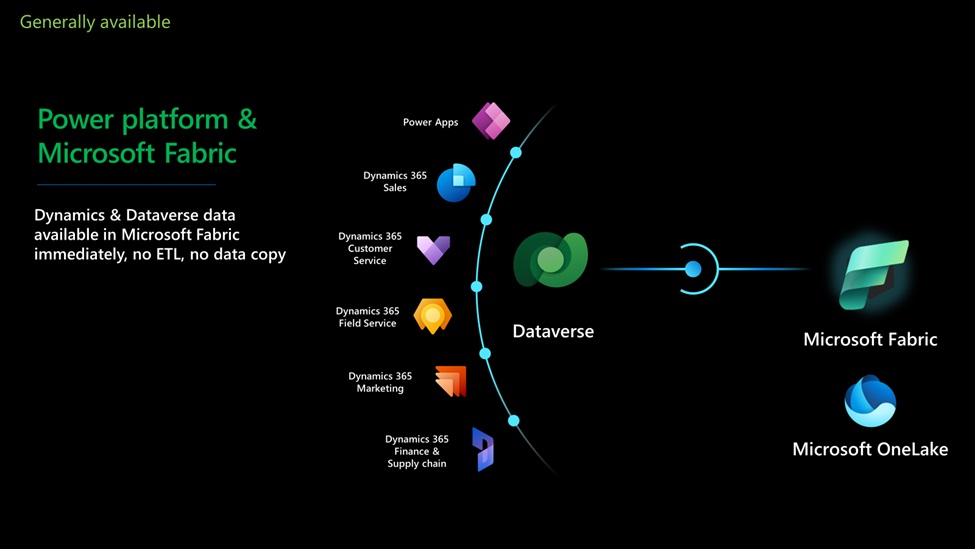

We are excited to announce the general availability of Link to Fabric, a no-copy, no-ETL direct link between Dataverse and Microsoft Fabric. If your organization uses Dynamics 365 or Power Platform with Power BI, Link to Fabric simplifies data integration and allows low-code makers to securely derive AI-driven insights across business apps and data sources, and then act on them.

Low-code makers can link to Microsoft Fabric from the Analyze menu on the Power Apps maker portal command bar. The system validates the configuration and lets you choose a Fabric workspace without leaving the maker portal. When you confirm, the system securely links all your tables into a system-generated Synapse Lakehouse in the Fabric workspace you selected. Your data stays in Dataverse while you work with all Fabric workloads, such as SQL, Python, and Power BI, without making copies or building pipelines. As data is updated in Dataverse, changes are reflected in Fabric in near real time.

We are also excited to announce joint partner and ISV solutions with Dynamics 365, Power Platform, and Microsoft Fabric. Partners and system integrators are using Dataverse Link to Fabric to provide value-added solutions that combine business functionality with insights and built-in actions.

MECOMS, a top recommended solution for energy and utility companies across the globe, enables next-generation cities with smart energy and utility solutions that manage consumption from “meter to cash”. MECOMS 365, built on Dynamics 365 and Microsoft Fabric, gathers smart meter data from homes and businesses, processes billing and reconciliation in Dynamics 365, and integrates with Dynamics 365 for customer engagement. Smart cities can not only provide billing and excellent service, but also give customers insights into how they can lower consumption and save money.

Ian Bruyninckx, Lead Product Architect, MECOMS, A Ferranti Company

ERP customers can extend their insights and reduce TCO by upgrading to Synapse Link

If you are a Dynamics 365 for Finance and Operations (F&O) customer, we have exciting news to share. Synapse Link for Dataverse, a service built into Power Apps and the successor to the Export to Data Lake feature in finance and operations apps, is now generally available. By upgrading from the Export to Data Lake feature in F&O to Synapse Link, you benefit from improved configuration and enhanced performance, which translate into a lower total cost of ownership (TCO).

To learn more about upgrading to Synapse Link, refer to https://aka.ms/TransitiontoSynapseLinkVideos.

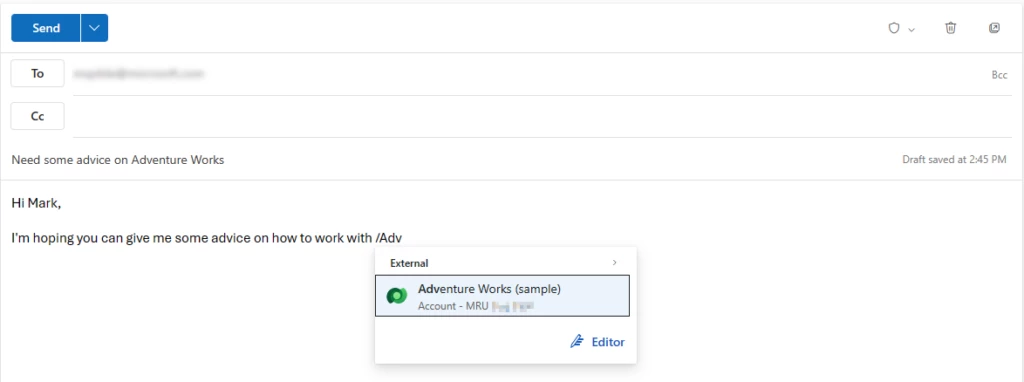

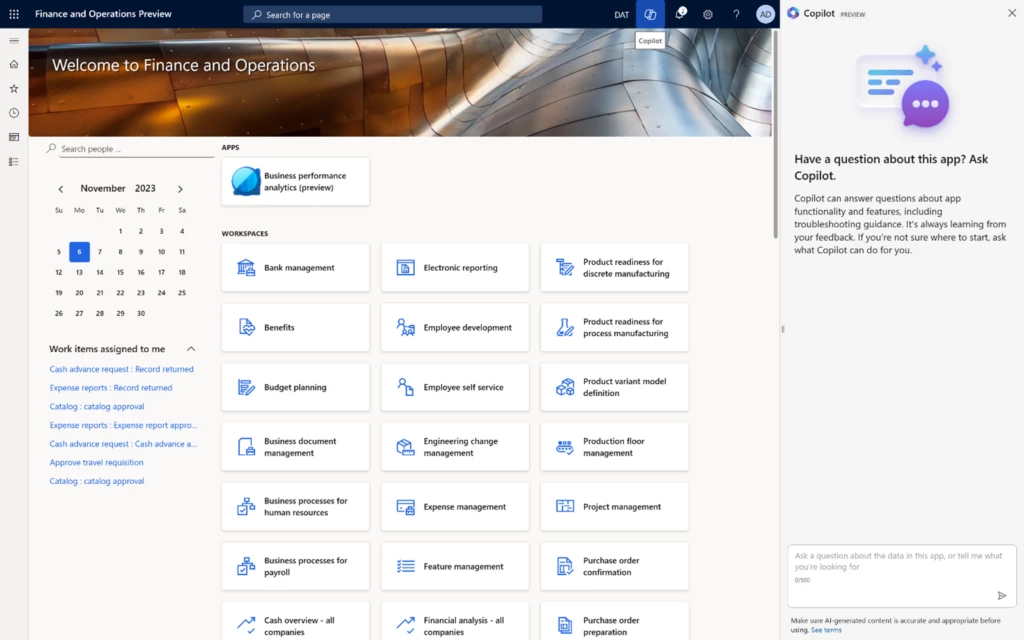

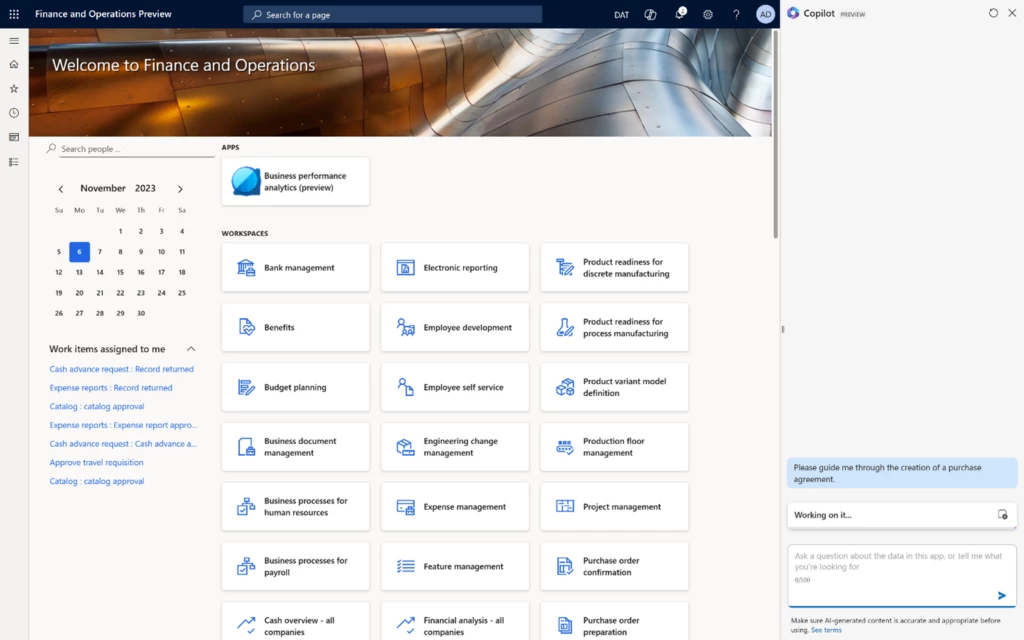

Reference Dataverse records with Microsoft 365 Context IQ to efficiently access enterprise data

One of the most time-consuming tasks for anyone who uses email is sharing information from line-of-business applications with colleagues. You must leave the Outlook web client, open your line-of-business app, navigate to a record, and then copy and paste its link into your email.

We are excited to announce the general availability of Dataverse integration with Microsoft 365 Context IQ, a new feature that lets users access their most recently used data directly from the Outlook web client with a simple /mention gesture.

For setup details, see “Use /mention to link to records in Outlook web client” in the Power Platform documentation on Microsoft Learn.

Efficiently hyper(scale) your business applications

In the age of AI, customers are challenged with managing data at hyperscale from a plethora of sources. As a polyglot hyperscale data platform for structured relational and unstructured non-relational data, Dataverse can support all your business scenarios with security, governance, and life cycle management, with no storage limitations.

For very high-scale data scenarios, such as using third-party data for time-sensitive marketing campaigns or driving efficiency with predictive maintenance for your IoT business, Dataverse elastic tables are a powerful addition to standard tables, supporting both relational and non-relational data. You can also optimize large-volume data storage with a time-defined auto-delete capability.
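To make the time-defined auto-delete idea concrete, here is a minimal sketch that builds the JSON body for creating one row in an elastic table. It assumes the table exposes the `ttlinseconds` (time-to-live) column, so the row becomes eligible for automatic deletion after that many seconds. The function name `build_elastic_row_payload` and the `contoso_*` column names are hypothetical placeholders, and the HTTP call to the Dataverse Web API, authentication, and the table's entity set name are deliberately left out.

```python
import json
from datetime import timedelta


def build_elastic_row_payload(fields: dict, retain_for: timedelta) -> str:
    """Return a JSON body for creating one row in a Dataverse elastic table.

    Assumption: the elastic table exposes the `ttlinseconds` column, so the
    row is deleted automatically once that many seconds have elapsed.
    The keys in `fields` are hypothetical placeholders for your own columns.
    """
    ttl_seconds = int(retain_for.total_seconds())
    if ttl_seconds <= 0:
        raise ValueError("retain_for must be a positive duration")
    body = dict(fields)  # copy so the caller's dict is not mutated
    body["ttlinseconds"] = ttl_seconds
    return json.dumps(body)


if __name__ == "__main__":
    # Hypothetical IoT reading that should be kept for 30 days, then auto-deleted.
    payload = build_elastic_row_payload(
        {"contoso_deviceid": "pump-17", "contoso_temperature": 71.4},
        timedelta(days=30),
    )
    print(payload)
```

For a 30-day retention window the payload carries `ttlinseconds` of 2,592,000 (30 × 86,400). Expressing retention as a `timedelta` keeps the policy readable in code while the conversion to seconds happens in one place.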

Additionally, while Dataverse supports your business growth with no limit on active data, you can meet your company’s compliance, regulatory, or other organizational policies by retaining inactive data with Dataverse long-term data retention and save 50% or more on storage capacity.

Click here to learn more about Microsoft Dataverse.

The post Unlock new value with your Dynamics 365 data in Microsoft 365 and Fabric, while safeguarding your data and assets appeared first on Microsoft Dynamics 365 Blog.

