by Contributed | Jun 21, 2024 | Technology

This article is contributed. See the original author and article here.

As spring turns to summer, and sprouts appear in my garden, I think about all the preparation I’ve made for their environment—turning the soil, setting up the watering system, adding peat moss—and know that the yield will be greater and the harvest better. Such is the case with Microsoft Intune. As we continue to enhance the capabilities, each one an investment, the cumulative result is a richer and more robust management experience. Below, we highlight some of the newest features.

New troubleshooting tool for mobile devices

Part of diagnosing an issue is not only defining what is wrong but also what is not wrong. Our customers asked for a simple way to temporarily remove apps and configurations from a device managed in Microsoft Intune as part of the troubleshooting process. The result is a feature we call Remove apps and configuration (RAC). Before RAC, removing settings involved excluding devices from policy assignments or removing users from groups, and then waiting for devices to check in. After diagnosing the device, those assignments and group memberships would need to be restored one by one. Now, RAC affords a set of useful troubleshooting steps:

- Real-time monitoring of which policies and apps are removed/restored

- Selective restore of individual apps and policies

- Temporary removal of apps and policies with an automated restore in 8 to 24 hours

- Policy assignments and group membership remain unchanged

This initial release will be distributed in early July. It will support iOS/iPadOS and Android corporate-owned devices, and it will be available to GCC, GCC High, and DoD environments on release. For more information on this tool, follow the update on the Microsoft 365 roadmap.

Windows enrollment attestation preview is here

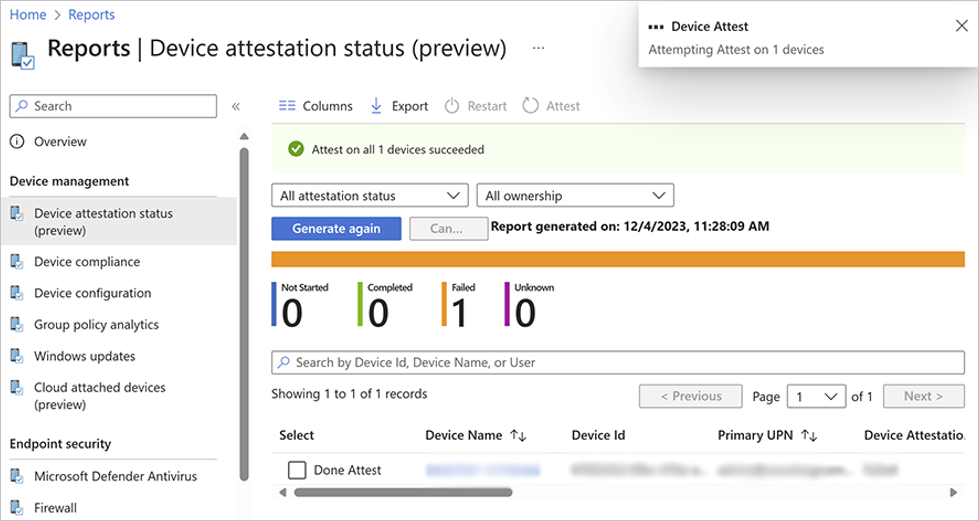

Last month we talked about device attestation capabilities coming to Intune. This month, the public preview of Windows enrollment attestation is starting to roll out with the new reporting and Device Attest action.

This feature builds on attestation by applying it to enrollment. Applicable Windows devices have their enrollment credentials stored in the device hardware, in this case Trusted Platform Module (TPM) 2.0, and Intune can then attest to this storage, meaning that the device enrolled securely. Successful devices show as Completed in the report. Devices that are Not Started or Failed can be retried using the new Device Attest action at the top of the report. This will be available in public preview by the end of June.

Screenshot of the preview of the device attestation status report in the Intune admin center listing the name, ID, and primary UPN of a device that failed device attestation.

Stay up to date on the release of this capability on the public Microsoft 365 roadmap.

More granular endpoint security access controls

Role-based access control (RBAC) enhances organizations’ ability to configure access to specific workloads while maintaining a robust security posture. Our customers asked for even more granular controls to help scope security work across geographic areas, business units, or different teams to only relevant information and features. In this latest release, we are adding specific permission sets to enable more flexibility in creating custom roles for:

- Endpoint detection and response

- Application control

- Attack surface reduction

We plan to have new permission sets for all endpoint security workloads in the future.

We know that many of our customers use a custom role with the Security baselines permission to manage security workloads, so we are automatically adding the new permissions to this role. This way, no permissions will be lost for existing users. New custom roles that are granted the Security baselines permission will not include the new permissions by default; they will cover only the workloads that do not yet have specific permission sets.

This update also applies to customers using the Microsoft Defender console to manage security policies, and it is available in GCC, GCC High, and DoD environments. Read more about granular RBAC permissions.

So much of what we do is a direct result of customer feedback. Please join our community, visit the Microsoft Feedback portal, or leave a comment on this post. We value all your input, so please share it, especially after working with these exciting new capabilities.

Stay up to date! Bookmark the Microsoft Intune Blog and follow us @MSIntune on X and on LinkedIn to continue the conversation.

by Contributed | Jun 21, 2024 | Technology

The latest edition of Sync Up is live now in your favorite podcast app! This month, Arvind Mishra and I explored all the announcements and energy of the Microsoft 365 Community Conference! Even better, we had on-site interviews with a host of OneDrive experts!

Mark Kashman (you read that right, the Mark Kashman, of Intrazone!) talked about our experiences at the conference, including the 🤖 Age of Copilots, the magic of in-person conferences, and some of the amazing SharePoint-related features that were shown off!

Lesha Bhansali shared how OneDrive is available everywhere, from Windows to Mac to Teams to Outlook, making it easy for your users to be productive inside the apps that they already use!

Carter Green talked about the latest improvements we’re bringing to the OneDrive desktop app, including 🌈 colored folders and Sync health report exports!

Vishal Lodha made a second consecutive appearance on Sync Up to talk about the amazing customer interactions we had, including an amazing panel where customers shared their experiences with OneDrive and how they’ve unlocked that power for their users!

Show: https://aka.ms/SyncUp | Apple Podcasts: https://aka.ms/SyncUp/Apple | Spotify: https://aka.ms/SyncUp/Spotify | RSS: https://aka.ms/SyncUp/RSS

But wait, there’s more! Our last episode of Sync Up on Data Migration is now available on YouTube! Be sure to check it out & subscribe!

If you weren’t able to attend the conference, here’s just a sneak peek of the experience!

That’s all for this week! Thank you all for listening!

by Contributed | Jun 20, 2024 | Technology

We wanted to provide you with an important update to the deprecation schedule for the two Admin Audit Log cmdlets, as part of our ongoing commitment to improve security and compliance capabilities within our services. The two Admin Audit Log cmdlets are:

- Search-AdminAuditLog

- New-AdminAuditLogSearch

As communicated in a previous blog post, the deprecation of Admin Audit Log (AAL) and Mailbox Audit Log (MAL) cmdlets was initially planned to occur simultaneously on April 30th, 2024. However, to ensure a smooth transition and to accommodate the feedback from our community, we have revised the deprecation timeline.

We would like to inform you that the Admin Audit Log cmdlets will now be deprecated separately from the Mailbox Audit Log cmdlets, with the final date set for September 15, 2024.

This change allows for a more phased approach, giving you additional time to adapt your processes to the new Unified Audit Log (UAL) cmdlets, which offer enhanced functionality and a more unified experience.

What This Means for You

- The Admin Audit Log cmdlets will be deprecated on September 15, 2024.

- The Mailbox Audit Log cmdlets will have a separate deprecation date, which will be announced early next year.

- We encourage customers to begin transitioning to the Unified Audit Log (UAL) cmdlet, Search-UnifiedAuditLog, as soon as possible. Alternatively, you can explore using the Audit Search Graph API, which is currently in Public Preview and is expected to become Generally Available by early July 2024.

Next Steps

If you are currently using either or both of the Admin Audit Log cmdlets mentioned above, you will need to take the following actions before September 15, 2024:

- For Search-AdminAuditLog, you will need to replace it with Search-UnifiedAuditLog in your scripts or commands. To get the same results as Search-AdminAuditLog, you will need to set the RecordType parameter to ExchangeAdmin. For example, if you want to search for all Exchange admin actions in the last 30 days, you can use the following command:

Search-UnifiedAuditLog -RecordType ExchangeAdmin -StartDate (Get-Date).AddDays(-30) -EndDate (Get-Date)

- For New-AdminAuditLogSearch, you will need to use the Microsoft Purview Compliance Portal to download your audit log report. The portal allows you to specify the criteria for your audit log search, such as date range, record type, user, and action. You can also choose to receive the report by email or download it directly from the portal. You can access the portal here: Home Microsoft Purview. More details on using the Compliance portal for audit log searching can be found here.

Differences between UAL and AAL cmdlets

As you move from AAL to UAL cmdlets, you may notice some minor changes between them. In this section, we will show you some important differences in the Input and Output of the UAL cmdlet from the AAL cmdlets.

Input Parameter Differences

Admin Audit Log (AAL) cmdlets include certain parameters that are not directly available in the Unified Audit Log (UAL) cmdlets. However, we have identified suitable alternatives for most of them within the UAL that will allow you to achieve similar functionality.

Below are the 4 parameters that are supported in the AAL and their alternatives in UAL (if present).

| AAL Parameter | Current AAL use example | New UAL equivalent example | Note |
| --- | --- | --- | --- |
| Cmdlets | Search-AdminAuditLog -StartDate 05/20/2024 -EndDate 05/28/2024 -Cmdlets Set-Mailbox | Search-UnifiedAuditLog -StartDate 05/20/2024 -EndDate 05/28/2024 -Operations Set-Mailbox | The “Cmdlets” parameter in AAL can be substituted with the “Operations” parameter in UAL. This will allow you to filter audit records based on the operations performed. |
| ExternalAccess | Search-AdminAuditLog -StartDate 05/20/2024 -EndDate 05/28/2024 -ExternalAccess $false | Search-UnifiedAuditLog -RecordType ExchangeAdmin -StartDate 05/20/2024 -EndDate 05/28/2024 -FreeText "ExternalAccess-false" | While UAL does not have a direct “ExternalAccess” parameter, you can use the “FreeText” parameter to filter for external access by including relevant keywords and terms associated with external user activities. |
| IsSuccess | Search-AdminAuditLog -Cmdlets Set-Mailbox -Parameters MaxSendSize,MaxReceiveSize -StartDate 01/24/2024 -EndDate 02/12/2024 -IsSuccess $true | Not Supported | This property was always True in AAL because only the logs that succeeded were returned, so using or omitting this parameter made no difference to the returned result set. It is therefore no longer supported in the Search-UnifiedAuditLog cmdlet. |
| StartIndex | Search-AdminAuditLog -StartDate 05/20/2024 -EndDate 05/28/2024 -ResultSize 100 -StartIndex 99 | Not Supported | In AAL, you can use the “StartIndex” parameter to pick the starting index for the results. UAL doesn’t support this parameter. Instead, you can use the pagination feature of the Search-UnifiedAuditLog cmdlet to get a specific number of objects with the SessionId, SessionCommand, and ResultSize parameters. |
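As a rough aid when reviewing existing scripts, the parameter mapping in the table above can be sketched in Python. The helper names `translate_aal_params` and `to_command` and the dictionary representation are assumptions made for illustration and are not part of any Microsoft module; only the parameter names and the FreeText pattern come from the table.

```python
# Hypothetical helpers: map Search-AdminAuditLog parameters to their
# Search-UnifiedAuditLog counterparts as described in the table above.

SHARED = {"StartDate", "EndDate", "ResultSize"}   # same name in both cmdlets
UNSUPPORTED = {"IsSuccess", "StartIndex"}         # no UAL equivalent


def translate_aal_params(aal_params):
    """Return (ual_params, needs_review) for a dict of AAL parameters."""
    ual = {"RecordType": "ExchangeAdmin"}  # needed to match AAL results
    needs_review = []
    for name, value in aal_params.items():
        if name == "Cmdlets":
            ual["Operations"] = value
        elif name == "ExternalAccess":
            # The table filters with FreeText, e.g. "ExternalAccess-false".
            ual["FreeText"] = f"ExternalAccess-{str(value).lower()}"
        elif name in SHARED:
            ual[name] = value
        else:
            # Unsupported or unknown parameters are flagged, not guessed.
            needs_review.append(name)
    return ual, needs_review


def to_command(ual_params):
    """Render the parameters as a Search-UnifiedAuditLog command line."""
    parts = ["Search-UnifiedAuditLog"]
    for name, value in ual_params.items():
        text = f'"{value}"' if name == "FreeText" else str(value)
        parts.append(f"-{name} {text}")
    return " ".join(parts)
```

Anything returned in `needs_review` (for example IsSuccess or StartIndex) has to be handled by hand, following the notes in the table.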

Please note: The SessionId returned in the output of Search-AdminAuditLog is a system-set value, whereas the SessionId passed as input to the Search-UnifiedAuditLog cmdlet is a user-set value. The parameter has the same name in both cmdlets but serves a different function in each.

Output Differences

There are differences in how the audit log output is displayed by the AAL and UAL cmdlets. UAL returns a richer set of results, with additional properties in JSON format. In this section, we point out a few major differences that should ease your migration journey.

| Property in AAL | Equivalent Property in UAL |
| --- | --- |
| CmdletName | Operations |
| ObjectModified | ObjectId |
| Caller | UserId |
| Parameters | AuditData > Parameters. NOTE: All the parameters and the values passed will be present as JSON. |
| ModifiedProperties | AuditData > ModifiedProperties. NOTE: Modified values will be present only if verbose mode is enabled using the Set-AdminAuditLogConfig cmdlet. |
| ExternalAccess | AuditData > ExternalAccess |
| RunDate | CreationDate |
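For scripts that post-process exported results, the property mapping above can be sketched as a small Python function. This is a minimal sketch, assuming each UAL record has been exported as a plain dictionary whose AuditData value is a JSON string; the function name `to_aal_shape` and the sample values are hypothetical, and the exact placement of fields in real output may differ from this simplified shape.

```python
import json

# AAL property -> where the table above places it in a UAL record.
# A tuple means the value lives inside the AuditData JSON payload.
AAL_TO_UAL = {
    "CmdletName": "Operations",
    "ObjectModified": "ObjectId",
    "Caller": "UserId",
    "RunDate": "CreationDate",
    "Parameters": ("AuditData", "Parameters"),
    "ModifiedProperties": ("AuditData", "ModifiedProperties"),
    "ExternalAccess": ("AuditData", "ExternalAccess"),
}


def to_aal_shape(ual_record):
    """Rebuild an AAL-style dict from one UAL record (a plain dict here)."""
    audit_data = json.loads(ual_record.get("AuditData") or "{}")
    result = {}
    for aal_name, source in AAL_TO_UAL.items():
        if isinstance(source, tuple):
            result[aal_name] = audit_data.get(source[1])
        else:
            result[aal_name] = ual_record.get(source)
    return result


# Hypothetical sample record, for illustration only.
sample = {
    "Operations": "Set-Mailbox",
    "ObjectId": "user1",
    "UserId": "admin@contoso.com",
    "CreationDate": "2024-05-21T10:00:00",
    "AuditData": json.dumps({"ExternalAccess": False}),
}
print(to_aal_shape(sample))
```

Properties that are missing from a record come back as `None`, which makes it easy to spot cases such as ModifiedProperties when verbose mode is not enabled.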

We are here to help

We are committed to providing you with the best tools and services to manage your Exchange Online environment and welcome your questions or feedback about this change. Please feel free to contact us by commenting on this blog post or by emailing AdminAuditLogDeprecation[at]service.microsoft.com. We are always happy to hear from you and assist in any way we can.

The Exchange Online Team

by Contributed | Jun 19, 2024 | Technology

Have you ever gone toe to toe with the threat actor known as Octo Tempest? This increasingly aggressive threat actor group has evolved their targeting, outcomes, and monetization over the past two years to become a dominant force in the world of cybercrime. But what exactly defines this entity, and why should we proceed with caution when encountering them?

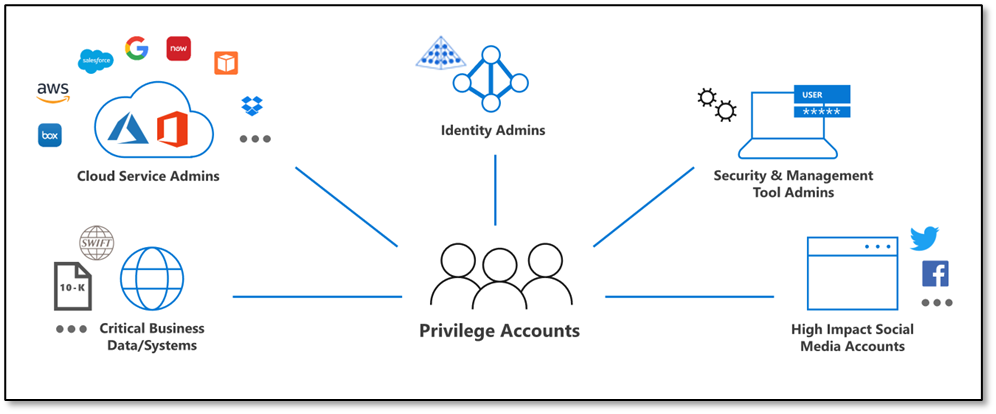

Octo Tempest (formerly DEV-0875) is a group known for employing social engineering, intimidation, and other human-centric tactics to gain initial access into an environment, granting themselves privilege to cloud and on-premises resources before exfiltrating data and unleashing ransomware across an environment. Their ability to penetrate and move around identity systems with relative ease encapsulates the essence of Octo Tempest and is the purpose of this blog post. Their activities have been closely associated with:

- SIM swapping scams: Seize control of a victim’s phone number to circumvent multifactor authentication.

- Identity compromise: Initiate password spray attacks or phishing campaigns to gain initial access and create federated backdoors to ensure persistence.

- Data breaches: Infiltrate the networks of organizations to exfiltrate confidential data.

- Ransomware attacks: Encrypt a victim’s data and demand primary, secondary, or tertiary ransom fees to refrain from disclosing any information or to release the decryption key to enable recovery.

Figure 1: The evolution of Octo Tempest’s targeting, actions, outcomes, and monetization.

Some key considerations to keep in mind for Octo Tempest are:

- Language fluency: Octo Tempest purportedly operates predominantly in native English, heightening the risk for unsuspecting targets.

- Dynamic: They have been known to pivot quickly and change their tactics depending on the target organization’s response.

- Broad attack scope: They target diverse businesses ranging from telecommunications to technology enterprises.

- Collaborative ventures: Octo Tempest may forge alliances with other cybercrime cohorts, such as ransomware syndicates, amplifying the impact of their assaults.

As our adversaries adapt their tactics to match the changing defense landscape, it’s essential for us to continually define and refine our response strategies. This requires us to promptly utilize forensic evidence and efficiently establish administrative control over our identity and access management services. In pursuit of this goal, Microsoft Incident Response has developed a response playbook that has proven effective in real-world situations. Below, we present this playbook to empower you to tackle the challenges posed by Octo Tempest, ensuring the smooth restoration of critical business services such as Microsoft Entra ID and Active Directory Domain Services.

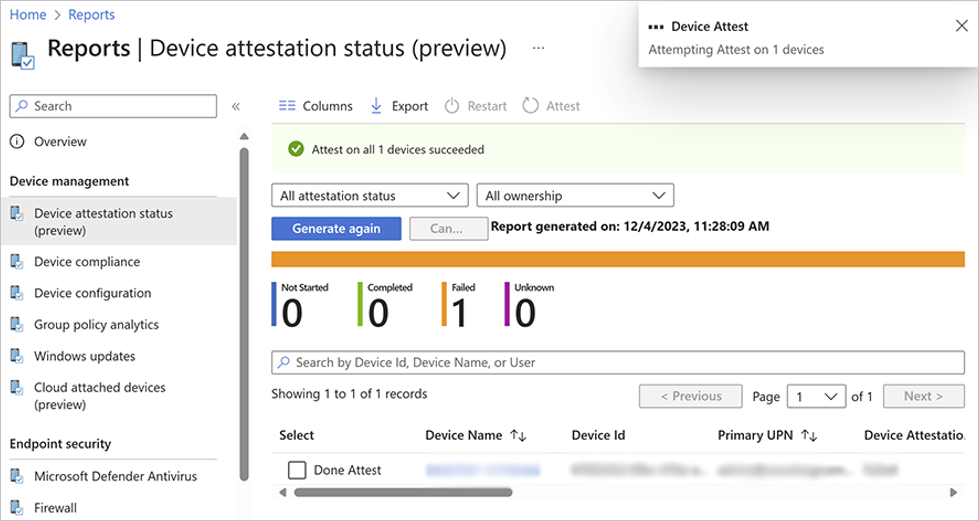

Cloud eviction

We begin with the cloud eviction process. If any actor takes control of the identity plane in Microsoft Entra ID, a set of steps should be followed to hit reset and take back administrative control of the environment. Here are some tactical measures employed by the Microsoft Incident Response team to ensure the security of the cloud identity plane:

Figure 2: Cloud response playbook.

Break glass accounts

Emergency scenarios require emergency access. For this purpose, one or two administrative accounts should be established. These accounts should be exempted from Conditional Access policies to ensure access in critical situations, monitored to verify their non-use, and passwords should be securely stored offline whenever feasible.

More information on emergency access accounts can be found here: Manage emergency access admin accounts – Microsoft Entra ID | Microsoft Learn.

Federation

Octo Tempest leverages cloud-born federation features to take control of a victim’s environment, allowing for the impersonation of any user inside the environment, even if multifactor authentication (MFA) is enabled. While this is a damaging technique, it is relatively simple to mitigate by logging in via the Microsoft Graph PowerShell module and setting the domain back from Federated to Managed. Doing so breaks the relationship and prevents the threat actor from minting further tokens.

Connect to your Azure/Office 365 tenant by running the following PowerShell cmdlet and entering your Global Admin Credentials:

Connect-MgGraph

Change federation authentication from Federated to Managed by running this cmdlet:

Update-MgDomain -DomainId "test.contoso.com" -BodyParameter @{AuthenticationType="Managed"}
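Before switching a domain back to Managed, it can help to confirm which domains are currently federated. The following is a minimal Python sketch, assuming the tenant's domain list has already been exported as JSON in the shape returned by the Microsoft Graph domains collection (a `value` array whose items carry `id` and `authenticationType`). The function name and the sample domains are illustrative only.

```python
import json


def federated_domains(domains_json):
    """Return the ids of domains whose authenticationType is Federated."""
    payload = json.loads(domains_json)
    return [
        domain["id"]
        for domain in payload.get("value", [])
        if domain.get("authenticationType", "").lower() == "federated"
    ]


# Hypothetical export, for illustration only.
export = json.dumps({
    "value": [
        {"id": "contoso.com", "authenticationType": "Managed"},
        {"id": "test.contoso.com", "authenticationType": "Federated"},
    ]
})
print(federated_domains(export))
```

Any domain in the result that your organization did not deliberately federate is a candidate for the reset described above.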

Service principals

Service principals have their own identities, credentials, roles, and permissions, and can be used to access resources or perform actions on behalf of the applications or services they represent. These have been used by Octo Tempest for persistence in compromised environments. Microsoft Incident Response recommends reviewing all service principals and removing or reducing permissions as needed.

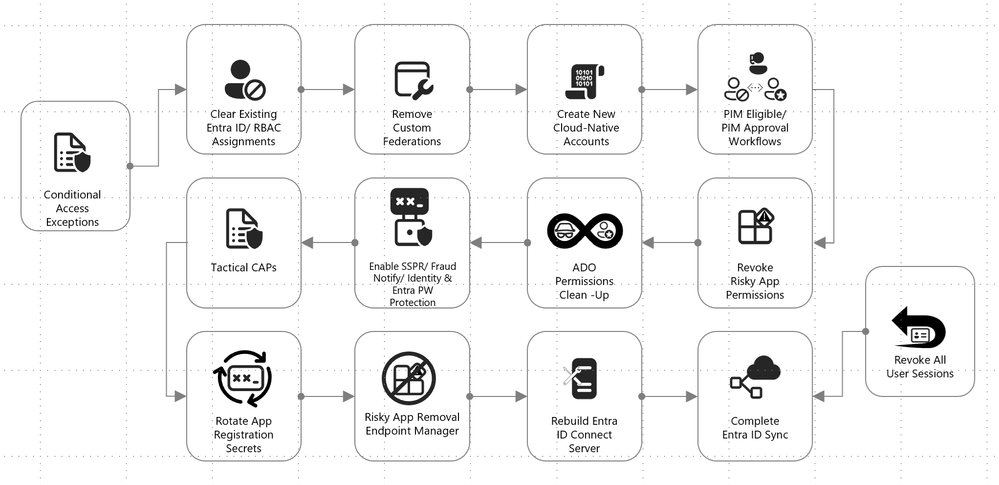

Conditional Access policies

These policies govern how an application or identity can access Microsoft Entra ID or your organization’s resources. Configuring them appropriately ensures that only authorized users are accessing company data and services. Microsoft provides template policies that are simple to implement. Microsoft Incident Response recommends using the following set of policies to secure any environment.

Note: Any administrative account used to make a policy will be automatically excluded from it. These accounts should be removed from exclusions and replaced with a break glass account.

Figure 3: Conditional Access policy templates.

Conditional Access policy: Require multifactor authentication for all users

This policy is used to enhance the security of an organization’s data and applications by ensuring that only authorized users can access them. Octo Tempest is often seen performing SIM swapping and social engineering attacks, and MFA is now more of a speed bump than a roadblock to many threat actors. This step is essential.

Conditional Access policy: Require phishing-resistant multifactor authentication for administrators

This policy is used to safeguard access to portals and admin accounts. It is recommended to use a modern phishing-resistant MFA type which requires an interaction between the authentication method and the sign-in surface such as a passkey, Windows Hello for Business, or certificate-based authentication.

Note: Exclude the Entra ID Sync account. This account is essential for the synchronization process to function properly.

Conditional Access policy: Block legacy authentication

Implementing a Conditional Access policy to block legacy access prohibits users from signing in to Microsoft Entra ID using vulnerable protocols. Keep in mind that this could block valid connections to your environment. To avoid disruption, follow the steps in this guide.

Conditional Access policy: Require password change for high-risk users

By implementing a user risk Conditional Access policy, administrators can tailor access permissions or security protocols based on the assessed risk level of each user. Read more about user risk here.

Conditional Access policy: Require multifactor authentication for risky sign-ins

This policy can be used to block or challenge suspicious sign-ins and prevent unauthorized access to resources.

Segregate Cloud admin accounts

Administrative accounts should always be segregated to ensure proper isolation of privileged credentials. This is particularly true for cloud admin accounts to prevent the vertical movement of privileged identities between on-premises Active Directory and Microsoft Entra ID.

In addition to the enforced controls provided by Microsoft Entra ID for privileged accounts, organizations should establish process controls to restrict password resets and manipulation of MFA mechanisms to only authorized individuals.

During a tactical takeback, it’s essential to revoke permissions from old admin accounts, create entirely new accounts, and ensure that the new accounts are secured with modern MFA methods, such as device-bound passkeys managed in the Microsoft Authenticator app.

Figure 4: CISO series: Secure your privileged administrative accounts with a phased roadmap | Microsoft Security Blog.

Review Azure resources

Octo Tempest has a history of manipulating resources such as Network Security Groups (NSGs) and Azure Firewall, and of granting themselves privileged roles within Azure Management Groups and Subscriptions using the ‘Elevate Access’ option in Microsoft Entra ID.

It’s imperative to conduct regular and thorough reviews of these services, carefully evaluating all changes to them, to effectively remove Octo Tempest from a cloud environment.

Of particular importance are the Azure SQL Server local admin accounts and the corresponding firewall rules. These areas warrant special attention to mitigate any potential risks posed by Octo Tempest.

Intune Multi-Administrator Approval (MAA)

Intune access policies can be used to implement two-person control of key changes to prevent a compromised admin account from maliciously using Intune, causing additional damage to the environment while mitigation is in progress.

Access policies are supported by the following resources:

- Apps – Applies to app deployments but doesn’t apply to app protection policies.

- Scripts – Applies to deployment of scripts to devices that run Windows.

Octo Tempest has been known to leverage Intune to deploy ransomware at scale. This risk can be mitigated by enabling the MAA functionality.

Review of MFA registrations

Octo Tempest has a history of registering MFA devices on behalf of standard users and administrators, enabling account persistence. As a precautionary measure, review all MFA registrations during the suspected compromise window and prepare for the potential re-registration of affected users.

On-premises eviction

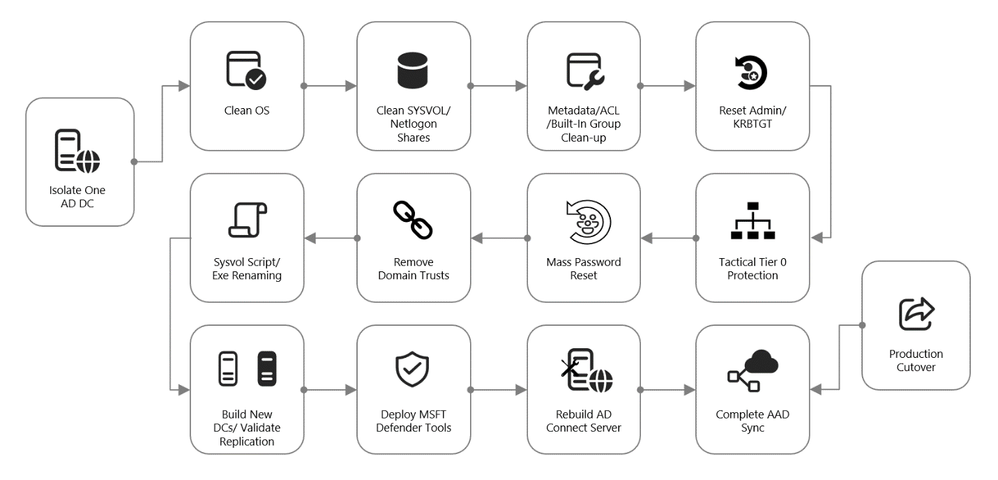

Additional containment efforts include the on-premises identity systems. There are tried and tested procedures for rebuilding and recovering on-premises Active Directory, post-ransomware, and these same techniques apply to an Octo Tempest intrusion.

Figure 5: On-premises recovery playbook.

Active Directory Forest Recovery

If a threat actor has taken administrative control of an Active Directory environment, complete compromise of all identities in Active Directory, and of their credentials, should be assumed. In this scenario, on-premises recovery follows this Microsoft Learn article on full forest recovery:

Active Directory Forest Recovery – Procedures | Microsoft Learn

If there are good backups of at least one Domain Controller for each domain in the compromised forest, these should be restored. If this option is not available, there are other methods to isolate Domain Controllers for recovery. This can be accomplished with snapshots or by moving one good Domain Controller from each domain into an isolated network so that Active Directory sanitization can begin in a protective bubble.

Once this has been achieved, domain recovery can begin. The steps are identical for every domain in the forest:

- Metadata cleanup of all other Domain Controllers

- Seizing the Flexible Single Master Operations (FSMO) roles

- Raising the RID Pool and invalidating the RID Pool

- Resetting the Domain Controller computer account password

- Resetting the password of KRBTGT twice

- Resetting the built-in Administrator password twice

- If Read-Only Domain Controllers existed, removing their instance of krbtgt_xxxxx

- Resetting inter-domain trust account (ITA) passwords on each side of the parent/child trust

- Removing external trusts

- Performing an authoritative restore of the SYSVOL content

- Cleaning up DNS records for Domain Controllers removed by metadata cleanup

- Resetting the Directory Services Restore Mode (DSRM) password

- Removing Global Catalog and promoting to Global Catalog

When these actions have been completed, new Domain Controllers can be built in the isolated environment. Once replication is healthy, the original systems restored from backup can be demoted.

Octo Tempest is known for targeting Key Vaults and Secret Servers. Special attention will need to be paid to these secrets to determine if they were accessed and, if so, to sanitize the credentials contained within.

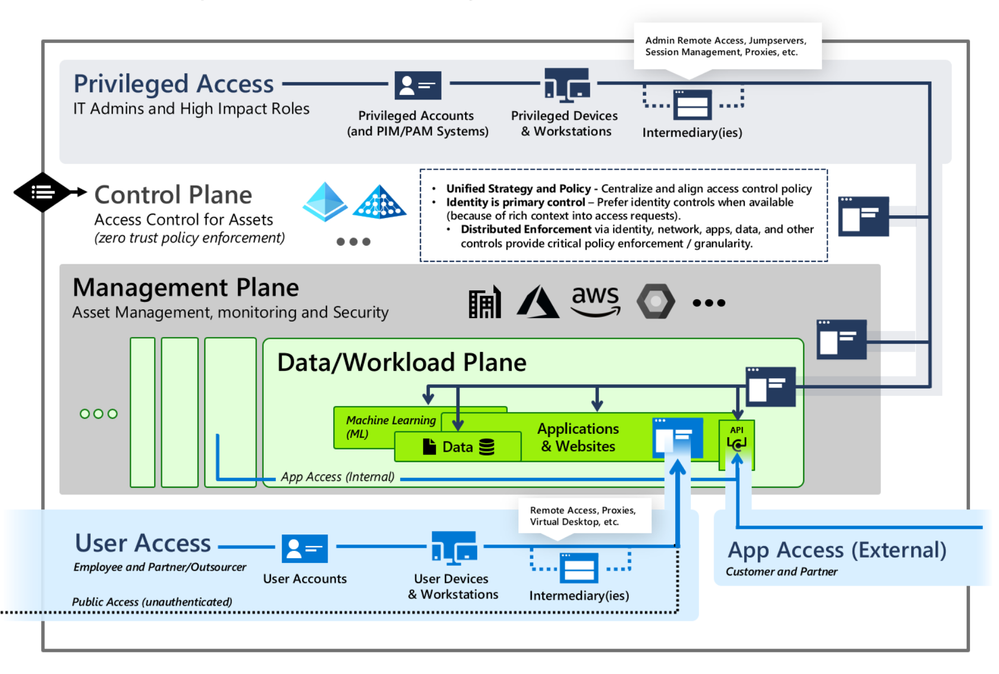

Tiering model

Restricting privilege escalation is critical to containing any attack since it limits the scope and damage. Identity systems in control of privileged access, and critical systems that identity administrators log on to, are both under the scope of protection.

Microsoft’s official documentation guides customers towards implementing the enterprise access model (EAM) that supersedes the “legacy AD tier model”. The EAM serves as an all-encompassing means of addressing where and how privileged access is used. It includes controls for cloud administration, and even network policy controls to protect legacy systems that lack accounts entirely.

However, the EAM has several limitations. First, it can take months, or even years, for an organization’s architects to map out and implement. Secondly, it spans disjointed controls and operating systems. Lastly, not all of it is relevant to the immediate concern of mitigating Pass-the-Hash (PtH) as outlined here.

Our customers with on-premises systems are often looking to implement PtH mitigations yesterday. The AD Tiering model is a good starting point for domain-joined services to satisfy this requirement. It:

- Is easier to conceptualize

- Has practical implementation guidance

- Can be rolled out with partial automation

The EAM is still a valuable strategy to work towards in an organization’s journey to security; but this is a better goal for after the fires and smoldering embers have been extinguished.

Figure 6: Securing privileged access Enterprise access model – Privileged access | Microsoft Learn.

Segregated privileged accounts

Accounts should be created for each tier of access, and processes should be put in place to ensure that these remain correctly isolated within their tiers.

Control plane isolation

Identify all systems that fall under the control plane. The key rule to follow is that anything that accesses or can manipulate an asset must be treated at the same level as the asset it manipulates. At this stage of eviction, the control plane is the key focus area. As an example, SCCM being used to patch Domain Controllers must be treated as a control plane asset.

Backup accounts are particularly sensitive targets and must be managed appropriately.
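The rule in this section is transitive: a system that manages a control plane asset becomes control plane itself, and so does anything that manages that system. A minimal Python sketch of that reasoning follows, assuming you can describe your environment as a map from each system to the assets it can access or manipulate. The function name and the sample inventory are hypothetical.

```python
from collections import deque


def control_plane(tier0_assets, controls):
    """Return every system that must be treated as control plane.

    controls maps a system to the set of assets it can access or manipulate.
    """
    # Invert the edges: for each asset, which systems can manipulate it?
    controlled_by = {}
    for system, assets in controls.items():
        for asset in assets:
            controlled_by.setdefault(asset, set()).add(system)

    # Breadth-first walk outward from the known Tier 0 assets.
    result = set(tier0_assets)
    queue = deque(tier0_assets)
    while queue:
        asset = queue.popleft()
        for system in controlled_by.get(asset, ()):
            if system not in result:
                result.add(system)
                queue.append(system)
    return result


# Hypothetical inventory, for illustration only.
inventory = {
    "SCCM": {"DC01"},
    "BackupServer": {"DC01", "FileServer"},
    "SCCM-Admin-Workstation": {"SCCM"},
    "HelpdeskTool": {"FileServer"},
}
print(sorted(control_plane({"DC01"}, inventory)))
```

In this sample, the workstation used to administer SCCM ends up in scope even though it never touches a Domain Controller directly, which is the kind of indirect path that is easy to miss in a manual review.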

Account disposition

The next phase of on-premises recovery and containment consists of a procedure known as account disposition in which all privileged or sensitive groups are emptied except for the account that is performing the actions. These groups include, but are not limited to:

- Built-In Administrators

- Domain Admins

- Enterprise Admins

- Schema Admins

- Account Operators

- Server Operators

- DNS Admins

- Group Policy Creator Owners

Any identity that gets removed from these groups goes through the following steps:

- Password is reset twice

- Account is disabled

- Account is marked with the Smart card is required for interactive logon flag

- Access control lists (ACLs) are reset to the default values and the adminCount attribute is cleared

Once this is done, build new accounts as per the tiering model. Create new Tier 0 identities for only the few staff that require this level of access, with a complex password and marked with the Account is sensitive and cannot be delegated flag.

Access Control List (ACL) review

Microsoft Incident Response has found a plethora of overly-permissive access control entries (ACEs) within critical areas of Active Directory of many environments. These ACEs may be at the root of the domain, on AdminSDHolder, or on Organizational Units that hold critical services. A review of all the ACEs in the access control lists (ACLs) of these sensitive areas within Active Directory is performed, and unnecessary permissions are removed.

Mass password reset

In the event of a domain compromise, a mass password reset will need to be conducted to ensure that Octo Tempest does not have access to valid credentials. The method in which a mass password reset occurs will vary based on the needs of the organization and acceptable administrative overhead. If we simply write a script that gets all user accounts (other than the person executing the code) and resets the password twice to a random password, no one will know their own password and, therefore, will open tickets with the helpdesk. This could lead to a very busy day for those members of the helpdesk (who also don’t know their own password).

Some examples of mass password reset methods that we have seen in the field include, but are not limited to:

- All at once: Get every single user (other than the newly created tier 0 accounts) and reset the password twice to a random password. Have enough helpdesk staff to be able to handle the administrative burden.

- Phased reset by OU, geographic location, department, etc.: This method targets a community of individuals in a more phased approach, which is less of an initial hit to the helpdesk.

- Service account password resets first, humans second: Some organizations start with the service account passwords first and then move to the human user accounts in the next phase.
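The phased options above can be sketched as a planning step in Python. This is only an illustration of how reset waves might be ordered, not a tool that performs resets: the account fields `name`, `ou`, and `is_service`, the wave labels, and the password alphabet are all assumptions, and the actual resets (performed twice per account) would be done with your directory tooling.

```python
import secrets
import string
from collections import defaultdict

ALPHABET = string.ascii_letters + string.digits + "!@#$%^&*-_"


def random_password(length=24):
    """Generate a random password from a cryptographically secure source."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def plan_waves(accounts, exclude=()):
    """Group accounts into waves: service accounts first, then one wave per OU."""
    excluded = set(exclude)
    service = []
    by_ou = defaultdict(list)
    for account in accounts:
        if account["name"] in excluded:
            continue  # e.g. the newly created Tier 0 accounts
        if account.get("is_service"):
            service.append(account["name"])
        else:
            by_ou[account["ou"]].append(account["name"])
    waves = [("service-accounts", service)] if service else []
    waves += [(ou, names) for ou, names in sorted(by_ou.items())]
    return waves
```

Ordering the waves up front makes it easier to estimate helpdesk load for each phase before any password is changed.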

Whichever method you choose to use for your mass password resets, ensure that you have an attestation mechanism in place to be able to accurately confirm that the person calling the helpdesk to get their new password (or enable Self-Service Password Reset) can prove they are who they say they are. An example of attestation would be a video conference call with the end user and the helpdesk and showing some sort of identification (for instance a work badge) on the screen.

It is recommended to also deploy and leverage Microsoft Entra ID Password Protection to prevent users from choosing weak or insecure passwords during this event.

Conclusion

The battle against Octo Tempest underscores the importance of a multi-faceted and proactive approach to cybersecurity. By understanding a threat actor’s tactics, techniques, and procedures and by implementing the outlined incident response strategies, organizations can safeguard their identity infrastructure against this adversary and ensure all pervasive traces are eliminated. Incident Response is a continuous process of learning, adapting, and securing environments against ever-evolving threats.

by Contributed | Jun 18, 2024 | Technology


Seattle, June 18, 2024. Today, we are happy to announce new releases and enhancements to the Azure AI Translator service. We are introducing a new endpoint that unifies the asynchronous batch and synchronous document translation operations, and the SDKs have been updated to match. You can now use and deploy document translation features in your organization to translate documents through Azure AI Studio and SharePoint without writing any code. The Azure AI Translator container has also been enhanced to translate both text and documents.

Overview

Document translation offers two operations: asynchronous batch and synchronous. Depending on the scenario, customers may use either operation or a combination of both. Today, we are delighted to announce that both operations have been unified and will share the same endpoint.

Asynchronous batch operation:

- Asynchronous batch translation supports the processing of multiple documents and large files. The batch operation reads source documents from Azure Blob Storage and uploads the translated documents back to it.

The endpoint for the asynchronous batch operation is getting updated to:

{your-document-translation-endpoint}/translator/document/batches?api-version=[Date]

The service will continue to support the deprecated endpoint for backward compatibility. We recommend that new customers adopt the latest endpoint, because future functionality will be added there.

Synchronous operation:

- The synchronous operation translates a single document. It accepts the source document as part of the request body, processes the document in memory, and returns the translated document as part of the response body.

The endpoint for the synchronous operation is:

{your-document-translation-endpoint}/translator/document:translate?api-version=[Date]

This unification aims to give customers consistency and simplicity when using either of the document translation operations.
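To make the shared base concrete, here is a minimal sketch that composes both request URLs from one resource endpoint. The host name, the `YYYY-MM-DD` version placeholder, and the `BuildRoute` helper are assumptions made for illustration only; substitute your own endpoint and an api-version your resource supports.

```csharp
using System;

// Hypothetical placeholder values: the announcement does not specify an
// api-version, so "YYYY-MM-DD" stands in for whichever one you use.
string endpoint = "https://my-translator.example.com/";
string apiVersion = "YYYY-MM-DD";

Uri batchUri = BuildRoute(endpoint, "translator/document/batches", apiVersion);
Uri syncUri = BuildRoute(endpoint, "translator/document:translate", apiVersion);

Console.WriteLine($"Asynchronous batch: {batchUri}");
Console.WriteLine($"Synchronous:        {syncUri}");

// Joins the resource endpoint, the operation path, and the api-version query.
static Uri BuildRoute(string baseEndpoint, string path, string version)
{
    string root = baseEndpoint.TrimEnd('/');
    return new Uri($"{root}/{path}?api-version={Uri.EscapeDataString(version)}");
}
```

Only the path segment after the endpoint differs between the two operations, which is the consistency the unified endpoint is meant to provide.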

Updated SDK

The updated document translation SDK supports both asynchronous batch operation and synchronous operation. Here’s how you can leverage it:

To run a translation operation for a document, you need a Translator endpoint and credentials. You can use DefaultAzureCredential to try a number of common authentication methods optimized for both running as a service and development. The samples below use a Translator API key credential by creating an AzureKeyCredential object. You can set endpoint and apiKey from an environment variable, a configuration setting, or any other way that works for your application.
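As one way to supply those two values, the following sketch reads them from environment variables and checks them before a client is created. The variable names `TRANSLATOR_ENDPOINT` and `TRANSLATOR_API_KEY` and the `Validate` helper are hypothetical choices for this example, not names defined by the SDK.

```csharp
#nullable enable
using System;

// Hypothetical variable names; use whatever your deployment defines.
const string EndpointVariable = "TRANSLATOR_ENDPOINT";
const string KeyVariable = "TRANSLATOR_API_KEY";

var endpoint = Environment.GetEnvironmentVariable(EndpointVariable);
var apiKey = Environment.GetEnvironmentVariable(KeyVariable);

string problem = Validate(endpoint, apiKey);
Console.WriteLine(problem.Length == 0
    ? "Translator settings look usable."
    : $"Configuration problem: {problem}");

// Returns an empty string when both settings are usable,
// otherwise a short description of what is wrong.
static string Validate(string? endpointValue, string? keyValue)
{
    if (string.IsNullOrWhiteSpace(keyValue))
    {
        return "API key is missing.";
    }
    if (!Uri.TryCreate(endpointValue, UriKind.Absolute, out Uri? uri)
        || uri.Scheme != Uri.UriSchemeHttps)
    {
        return "Endpoint must be an absolute https URI.";
    }
    return string.Empty;
}
```

Validating up front keeps a missing or malformed setting from surfacing later as a less obvious failure when the client is constructed.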

Asynchronous batch method:

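Creating a DocumentTranslationClient

    using System;
    using Azure;
    using Azure.AI.Translation.Document;

    // Set these to your Translator resource endpoint and API key.
    string endpoint = "";
    string apiKey = "";

    // The batch operation uses DocumentTranslationClient (not the single-document client).
    DocumentTranslationClient client = new DocumentTranslationClient(new Uri(endpoint), new AzureKeyCredential(apiKey));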

To start a translation operation for documents in a blob container, call StartTranslationAsync. The result is a long-running operation of type DocumentTranslationOperation, which polls the API for the status of the translation operation. To call StartTranslationAsync, you need to initialize an object of type DocumentTranslationInput, which contains the information needed to translate the documents.

    // Replace the empty strings with the URIs of your source and target blob containers.
    Uri sourceUri = new Uri("");
    Uri targetUri = new Uri("");

    // "es" is the target language code (Spanish).
    var input = new DocumentTranslationInput(sourceUri, targetUri, "es");

    DocumentTranslationOperation operation = await client.StartTranslationAsync(input);

    // Poll until the whole batch has finished.
    await operation.WaitForCompletionAsync();

    Console.WriteLine($" Status: {operation.Status}");
    Console.WriteLine($" Created on: {operation.CreatedOn}");
    Console.WriteLine($" Last modified: {operation.LastModified}");
    Console.WriteLine($" Total documents: {operation.DocumentsTotal}");
    Console.WriteLine($" Succeeded: {operation.DocumentsSucceeded}");
    Console.WriteLine($" Failed: {operation.DocumentsFailed}");
    Console.WriteLine($" In Progress: {operation.DocumentsInProgress}");
    Console.WriteLine($" Not started: {operation.DocumentsNotStarted}");

    // Report the outcome for each document in the batch.
    await foreach (DocumentStatusResult document in operation.Value)
    {
        Console.WriteLine($"Document with Id: {document.Id}");
        Console.WriteLine($" Status: {document.Status}");

        if (document.Status == DocumentTranslationStatus.Succeeded)
        {
            Console.WriteLine($" Translated Document Uri: {document.TranslatedDocumentUri}");
            Console.WriteLine($" Translated to language code: {document.TranslatedToLanguageCode}.");
            Console.WriteLine($" Document source Uri: {document.SourceDocumentUri}");
        }
        else
        {
            Console.WriteLine($" Error Code: {document.Error.Code}");
            Console.WriteLine($" Message: {document.Error.Message}");
        }
    }

Synchronous method:

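Creating a SingleDocumentTranslationClient

    using System;
    using System.IO;
    using System.Text;
    using Azure;
    using Azure.AI.Translation.Document;

    // Set these to your Translator resource endpoint and API key.
    string endpoint = "";
    string apiKey = "";

    SingleDocumentTranslationClient client = new SingleDocumentTranslationClient(new Uri(endpoint), new AzureKeyCredential(apiKey));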

To start a synchronous translation operation for a single document, call DocumentTranslate. To call DocumentTranslate, you need to initialize an object of type MultipartFormFileData, which holds the document to translate, and wrap it in a DocumentTranslateContent. You also need to specify the target language for the translation.

    try
    {
        string filePath = Path.Combine("TestData", "test-input.txt");
        using Stream fileStream = File.OpenRead(filePath);

        // Wrap the source file in the multipart content that the request expects.
        var sourceDocument = new MultipartFormFileData(Path.GetFileName(filePath), fileStream, "text/html");
        DocumentTranslateContent content = new DocumentTranslateContent(sourceDocument);

        // "hi" is the target language code (Hindi).
        var response = client.DocumentTranslate("hi", content);

        var requestString = File.ReadAllText(filePath);
        var responseString = Encoding.UTF8.GetString(response.Value.ToArray());

        Console.WriteLine($"Request string for translation: {requestString}");
        Console.WriteLine($"Response string after translation: {responseString}");
    }
    catch (RequestFailedException exception)
    {
        Console.WriteLine($"Error Code: {exception.ErrorCode}");
        Console.WriteLine($"Message: {exception.Message}");
    }

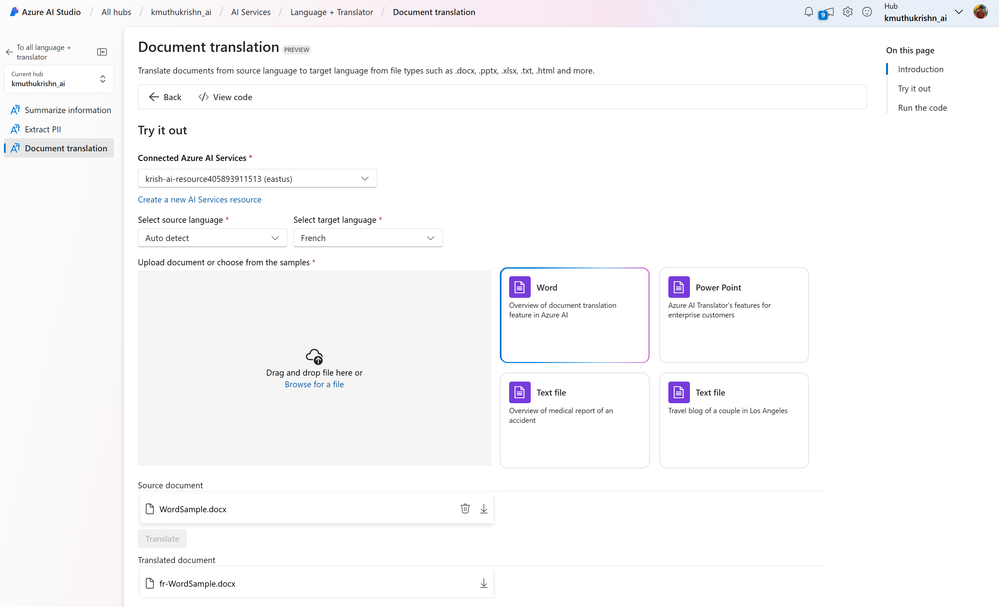

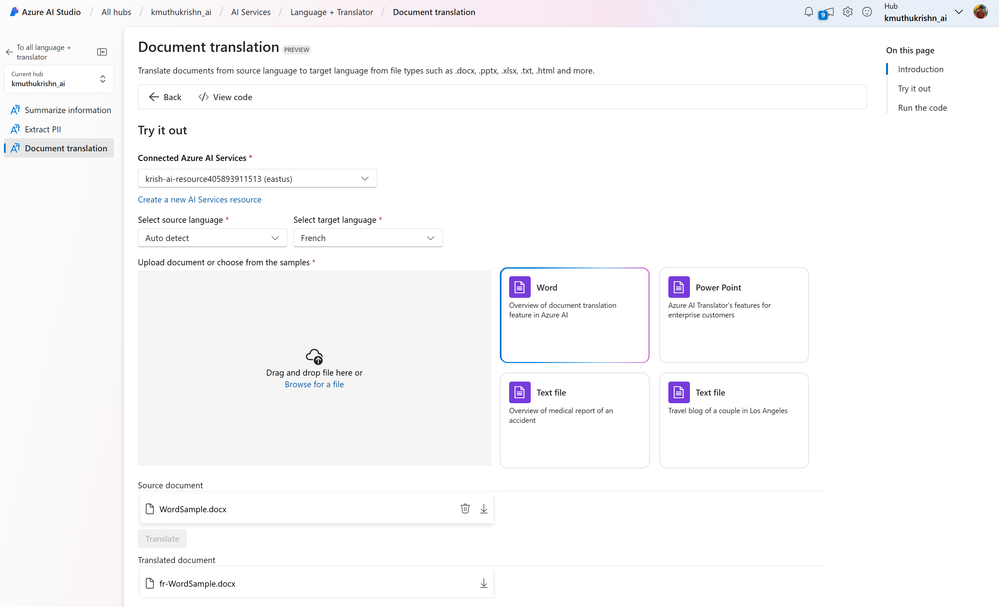

Ready to use solution in Azure AI Studio

Customers can easily build apps for their document translation needs using the SDK. One such example is the document translation tool in Azure AI Studio, which was announced as generally available at //build 2024. Here is a glimpse of how you can translate documents in this user interface:

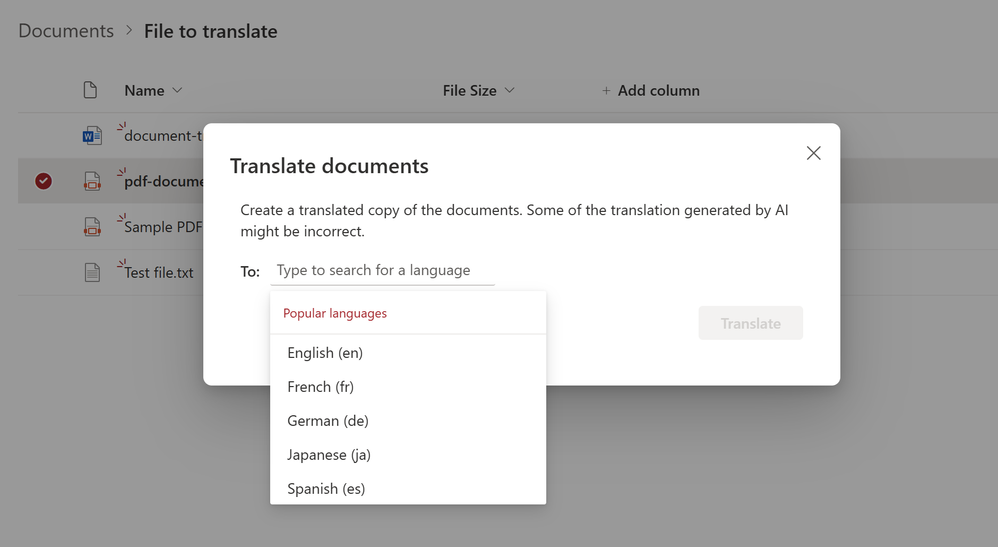

SharePoint document translation

The document translation integration in SharePoint lets you easily translate a selected file or a set of files in a SharePoint document library. You can translate files of different types either manually or automatically by creating a rule.

Learn more about the SharePoint integration here.

You can also use the translation feature for translating video transcripts and closed captioning files. More information here.

Document translation in container is generally available

In addition to the above updates, earlier this year, we announced the release of document translation and transliteration features for Azure AI Translator containers as preview. Today, both capabilities are generally available. All Translator container customers will get these new features automatically as part of the update.

Translator containers provide users with the capability to host the Azure AI Translator API on their own infrastructure and include all libraries, tools, and dependencies needed to run the service in any private, public, or personal computing environment. They are isolated, lightweight, portable, and are great for implementing specific security or data governance requirements.

With this update, Azure AI Translator containers now support the following operations:

- Text translation: Translates text phrases between supported source and target languages in real time.

- Text transliteration: Converts text in a language from one script to another in real time.

- Document translation: Translates a document between supported source and target languages while preserving the original document’s content structure and format.
