by Contributed | Oct 10, 2024 | Technology

This article is contributed. See the original author and article here.

Welcome to the October 2024 edition of our Microsoft Credentials roundup, where we highlight the latest portfolio news and updates to inspire and support your training and career journey.

In this article

What’s new with Microsoft Applied Skills?

- Explore the latest Applied Skills scenarios

- Take the Microsoft Learn AI Skills Challenge

What’s new with Microsoft Certifications?

- Announcing the new Microsoft Certified: Fabric Data Engineer Associate Certification (Exam DP-700)

- New pricing for Microsoft Certification exams effective November 1, 2024

- Microsoft Certifications to be retired

- Build your confidence with exam prep resources

Let’s talk about Applied Skills

Want to have a bigger impact in your career? Explore Microsoft Applied Skills credentials to showcase your skills. According to a recent IDC study*, 50% of organizations find that micro-credentials like Microsoft Applied Skills help employees get promoted faster!

*Source: IDC InfoBrief, sponsored by Microsoft, Skilling Up! Leveraging Full and Micro-Credentials for Optimal Skilling Solutions, Doc. #US52019124, June 2024

Explore the latest Applied Skills scenarios

We’re all about keeping you up to date with in-demand, project-based skills. Here are the new scenarios we’ve launched:

Coming soon

Stay tuned for the following Applied Skills and more at aka.ms/BrowseAppliedSkills:

- Implement retention, eDiscovery, and Communication Compliance in Microsoft Purview

- Develop data-driven applications by using Microsoft Azure SQL Database

Applied Skills updates

We ensure that Applied Skills credentials stay aligned with the latest product updates and trends. Our commitment to helping you build relevant, in-demand skills means that we periodically update or retire older assessments as we introduce new ones.

Now available

These assessments have been updated and are now back online:

If you have been preparing for these credentials, go ahead and take the assessment today!

Retirements

Keep an eye out for new credentials in these areas.

- Retired: Build collaborative apps for Microsoft Teams

- Retiring on October 31st: Develop generative AI solutions with Azure OpenAI Service

Take the Microsoft Learn AI Skills Challenge

Dive into the latest AI technologies like Microsoft Copilot, GitHub, Microsoft Azure, and Microsoft Fabric. Explore six curated topics designed to elevate your skills, participate in interactive community events, and gain insights from expert-led sessions. Take your learning to the next level with a selection of the topics to earn a Microsoft Applied Skills Credential and showcase your expertise to potential employers. Start your AI journey today!

Share Your Story

Have you earned an Applied Skills credential that has made a difference in your career or education? Bala Venkata Veturi (see below) earned an Applied Skills credential through the #WICxSkillsReadyChallenge.

We’d love to hear from you! Share your story with us and inspire others with your journey. We could feature your success story next!

What’s new with Microsoft Certifications?

Announcing the new Microsoft Certified: Fabric Data Engineer Associate Certification (Exam DP-700)

These days, as organizations strive to harness the power of AI, data engineering skills are indispensable. Data engineers play a pivotal role in designing and implementing the foundational elements for any successful data and AI initiative. To support learners who want to build and validate these skills, Microsoft Learn is pleased to announce the new Microsoft Certified: Fabric Data Engineer Associate Certification, along with its related Exam DP-700: Implementing data engineering solutions using Microsoft Fabric (beta), both of which will be available in late October 2024. Read all about this exciting news and explore other Microsoft Credentials for analytics and data science, in our blog post Prove your data engineering skills, and be part of the AI transformation.

New pricing for Microsoft Certification exams effective November 1, 2024

Microsoft Learn continually reviews and evolves its portfolio of Microsoft Certifications to help learners around the world stay up to date with the latest technologies, especially as AI and cybersecurity skills become increasingly important in the workplace. We also regularly update our exam content, format, and delivery. To reflect their current market value, we’re updating pricing for Microsoft Certification exams, effective November 1, 2024. The new prices will vary depending on the country or region where you take the exam. For most areas, there will be no change in the price. For some areas, the price will decrease to make the exams more affordable. For a few areas, the price will increase to align with global and regional standards. The goal is to make Microsoft Certification exam pricing simpler and more consistent across geographies while still offering a fair and affordable value proposition. Check out all the details in New pricing for Microsoft Certification exams effective November 1, 2024.

Microsoft Certifications to be retired

As announced in Evolving Microsoft Credentials for Dynamics 365, the following Certifications will be retired on November 30, 2024:

If you’re currently preparing for Exam MB-210, Exam MB-220, or Exam MB-260, we recommend that you take the exam before November 30, 2024. If you’ve already earned one of the Certifications being retired, it will remain on the transcript in your profile on Microsoft Learn. If you’re eligible to renew your certification before November 30, 2024, we recommend that you consider doing so.

Build your confidence with exam prep resources

To better prepare you to earn a new Microsoft Certification, we’ve added new Practice Assessments on Microsoft Learn, including:

Watch this space for the next Microsoft Credentials roundup as we continue to evolve our portfolio to help support your career growth.

Follow us on X and LinkedIn, and make sure you’re subscribed to The Spark, our LinkedIn newsletter.

Previous editions of the Microsoft Credentials roundup

by Contributed | Oct 9, 2024 | Technology

The Microsoft Security team is excited to connect with you next week at the Authenticate 2024 Conference, taking place October 14 to 16 in Carlsbad, CA! With the rise in identity attacks targeting passwords and MFA credentials, it’s becoming increasingly clear that phishing-resistant authentication is critical to counteract these attacks. As the world shifts towards stronger, modern authentication methods, Microsoft is proud to reaffirm our commitment to passwordless authentication and to expanding our support for passkeys across products like Microsoft Entra, Microsoft Windows, and Microsoft consumer accounts (MSA).

To enhance security for both consumers and enterprise customers, we’re excited to showcase some of our latest innovations at this event:

We look forward to demonstrating these new advancements and discussing how to take a comprehensive approach to modern authentication at Authenticate Conference 2024.

Where to find Microsoft Security at Authenticate 2024 Conference

Please stop by our booth to chat with our product team or join us at the following sessions:

| Session Title | Session Description | Time |
| --- | --- | --- |
| Passkeys on Windows: Paving the way to a frictionless future! | UX Fundamentals. Discover the future of passkey authentication on Windows. Explore our enhanced UX, powered by Microsoft AI and designed for seamless experiences across platforms. Join us as we pave the way towards a passwordless world. Speakers: Sushma K., Principal Program Manager, Microsoft; Ritesh Kumar, Software Engineer, Microsoft | October 14th, 12:00 – 12:25 PM |
| Passkeys on Windows: New platform features | Technical Fundamentals and Features. This is an exciting year for us as we’re bringing some great passkey features to Windows users. In this session, I’ll discuss our new capabilities for synced passkeys protected by Windows Hello, and I’ll walk through a plugin model for third-party passkey providers to integrate with our Windows experience. Taken together, these features make passkeys more readily available wherever users need them, with the experience, flexibility, and durability that users should expect when using their passkeys on Windows. Speaker: Bob Gilbert, Software Engineering Manager, Microsoft | October 14th, 2:30 – 2:55 PM |
| We love passkeys – but how can we convince a billion users? | Keynote. It’s clear that passkeys will be a core component of a passwordless future. The usability and security advantages are clear. What isn’t as clear is how we actually convince billions of users to step away from a decades-long relationship with passwords and move to something new. Join us as we share insights on how to accelerate adoption when user, platform, and application needs are constantly evolving. We will share practical UX patterns and practices, including messaging, security implications, and how going passwordless changes the concept of account recovery. Speakers: Scott Bingham, Principal Product Manager, Microsoft; Sangeeta Ranjit, Group Product Manager, Microsoft | October 14th, 5:05 – 5:25 PM |
| Stop counting actors… Start describing authentication events | Vision and Future. We began deploying multifactor authentication because passwords provided insufficient security. More factors equal more security, right? Yes, but we continue to see authentication attacks such as credential stuffing and phishing! The identity industry needs to stop thinking in terms of the quantity of authentication factors and start thinking about the properties of the authentication event. As we transition into the era of passkeys, it’s time to consider how we describe the properties of our authentication event. In this talk, we’ll demonstrate how identity providers and relying parties can communicate a consistent, composable collection of authentication properties. To raise the security bar and provide accountability, these properties must communicate not only about the authentication event, but about the security primitives underlying the event itself. These properties can be used to drive authentication and authorization decisions in standalone and federated environments, enabling clear, consistent application of security controls. Speakers: Pamela Dingle, Director of Identity Standards, Microsoft; Dean H. Saxe, Principal Engineer, Office of the CTO, Beyond Identity | October 16th, 10:00 – 10:25 AM |
| Bringing passkeys into your passwordless journey | Passkeys in the Enterprise. Most of our enterprise customers are deploying some form of passwordless credential or planning to in the next few years. However, the industry is all abuzz with excitement about passkeys. What do passkeys mean for your organization’s passwordless journey? Join the Microsoft Entra ID product team as we explore the impact of passkeys on the passwordless ecosystem and share insights from Microsoft’s own passkey implementation and customer experiences. Speakers: Tim Larson, Senior Product Manager, Identity Network and Access, Security, Microsoft; Micheal Epping, Senior Product Manager, Microsoft | October 16th, 11:00 – 11:25 AM |

Stop by our booth #402 to speak with our product team in person!

We can’t wait to see you in Carlsbad, CA, for the Authenticate 2024 Conference!

Jarred Boone, Senior Product Marketing Manager, Identity Security

Read more on this topic

Learn more about Microsoft Entra

Prevent identity attacks, ensure least privilege access, unify access controls, and improve the experience for users with comprehensive identity and network access solutions across on-premises and clouds.

by Contributed | Oct 8, 2024 | Technology

In our continued effort to equip developers and organizations with advanced search tools, we are thrilled to announce the launch of several new features in the latest Preview API for Azure AI Search. These enhancements are designed to optimize vector index size and provide more granular control and understanding of your search index to build Retrieval-Augmented Generation (RAG) applications.

MRL Support for Quantization

Matryoshka Representation Learning (MRL) is a new technique that introduces a different form of vector compression, which complements and works independently of existing quantization methods. MRL enables the flexibility to truncate embeddings without significant semantic loss, offering a balance between vector size and information retention.

This technique works by training embedding models so that information density increases towards the beginning of the vector. As a result, even when using only a prefix of the original vector, much of the key information is preserved, allowing for shorter vector representations without a substantial drop in performance.
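The prefix idea can be sketched in a few lines. This is a minimal illustration, not the service's implementation: the 8-dimensional vectors are made-up toy data, the helper names `truncate_embedding` and `cosine` are hypothetical, and rescaling the prefix to unit length is an assumption (a common step before cosine comparison) rather than something this article specifies.

```python
import math


def truncate_embedding(vector, dims):
    """Keep the first `dims` components and rescale the prefix to unit length."""
    if not 0 < dims <= len(vector):
        raise ValueError("dims must be between 1 and len(vector)")
    prefix = vector[:dims]
    norm = math.sqrt(sum(x * x for x in prefix))
    if norm == 0.0:
        return list(prefix)  # nothing to rescale for an all-zero prefix
    return [x / norm for x in prefix]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors (hypothetical data) whose largest values sit at the front,
# mimicking the "information density towards the beginning" property.
doc = [0.9, 0.4, 0.1, 0.05, 0.02, 0.01, 0.01, 0.0]
query = [0.8, 0.5, 0.2, 0.0, 0.03, 0.0, 0.02, 0.01]

full_similarity = cosine(doc, query)
short_similarity = cosine(truncate_embedding(doc, 4), truncate_embedding(query, 4))
print(f"full: {full_similarity:.4f}, truncated to 4 dims: {short_similarity:.4f}")
```

With vectors shaped like this, the similarity computed from the first half of the components stays close to the full-length value, which is the behavior MRL-trained models are designed to provide.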

OpenAI has integrated MRL into their ‘text-embedding-3-small’ and ‘text-embedding-3-large’ models, making them adaptable for use in scenarios where compressed embeddings are needed while maintaining high retrieval accuracy. You can read more about the underlying research in the official paper [1] or learn about the latest OpenAI embedding models in their blog.

Storage Compression Comparison

Table 1.1 below highlights the different configurations for vector compression, comparing standard uncompressed vectors, Scalar Quantization (SQ), and Binary Quantization (BQ) with and without MRL. The compression ratio demonstrates how efficiently the vector index size can be optimized, yielding significant cost savings. You can find more about our Vector Index Size Limits here: Service limits for tiers and skus – Azure AI Search | Microsoft Learn.

Table 1.1: Vector Index Size Compression Comparison

| Configuration | *Compression Ratio |
| --- | --- |
| Uncompressed | – |
| SQ | 4x |
| BQ | 28x |
| **MRL + SQ (1/2 and 1/3 truncation dimension respectively) | 8x-12x |
| **MRL + BQ (1/2 and 1/3 truncation dimension respectively) | 64x – 96x |

Note: Compression ratios depend on embedding dimensions and truncation. For instance, using “text-embedding-3-large” with 3072 dimensions truncated to 1024 dimensions can result in 96x compression with Binary Quantization.

*All compression methods listed above may experience slightly lower compression ratios due to overhead introduced by the index data structures. See “Memory overhead from selected algorithm” for more details.

**The compression impact when using MRL depends on the value of the truncation dimension. We recommend using either 1/2 or 1/3 of the original dimensions to preserve embedding quality (see below).
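The arithmetic behind these ratios can be reproduced with a short calculation. The sketch below assumes uncompressed vectors are stored as 32-bit floats, scalar quantization stores 8 bits per component, and binary quantization stores 1 bit per component; these bit widths and the function name `compression_ratio` are assumptions for illustration, and index overhead is not modeled.

```python
def compression_ratio(original_dims, stored_dims, bits_per_component, original_bits=32):
    """Theoretical ratio of uncompressed vector size to compressed vector size."""
    original_size = original_dims * original_bits
    compressed_size = stored_dims * bits_per_component
    return original_size / compressed_size


SQ_BITS = 8  # assumed: scalar quantization to 8-bit integers
BQ_BITS = 1  # assumed: binary quantization to 1 bit per component

# text-embedding-3-large has 3072 dimensions (from the note above).
ratios = {
    "SQ": compression_ratio(3072, 3072, SQ_BITS),
    "MRL 1/2 + SQ": compression_ratio(3072, 1536, SQ_BITS),
    "MRL 1/3 + SQ": compression_ratio(3072, 1024, SQ_BITS),
    "MRL 1/2 + BQ": compression_ratio(3072, 1536, BQ_BITS),
    "MRL 1/3 + BQ": compression_ratio(3072, 1024, BQ_BITS),
}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.0f}x")
```

These theoretical figures line up with the SQ and MRL rows of Table 1.1. The same arithmetic gives 32x for BQ without truncation, whereas the table lists 28x; this sketch does not account for that difference.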

Quality Retention Table:

Table 1.2 provides a detailed view of quality retention when using MRL with quantization across different models and configurations. The results indicate the impact on Mean NDCG@10 across a subset of MTEB datasets, showing that high levels of compression can still preserve up to 99% of search quality, particularly with BQ and MRL.

Table 1.2: Impact of MRL on Mean NDCG@10 Across MTEB Subset

| Model Name | Original Dimension | MRL Dimension | Quantization Algorithm | No Rerank (% Δ) | Rerank 2x Oversampling (% Δ) |
| --- | --- | --- | --- | --- | --- |
| OpenAI text-embedding-3-small | 1536 | 512 | SQ | -2.00% (Δ = 1.155) | -0.0004% (Δ = 0.0002) |
| OpenAI text-embedding-3-small | 1536 | 512 | BQ | -15.00% (Δ = 7.5092) | -0.11% (Δ = 0.0554) |
| OpenAI text-embedding-3-small | 1536 | 768 | SQ | -2.00% (Δ = 0.8128) | -1.60% (Δ = 0.8128) |
| OpenAI text-embedding-3-small | 1536 | 768 | BQ | -10.00% (Δ = 5.0104) | -0.01% (Δ = 0.0044) |
| OpenAI text-embedding-3-large | 3072 | 1024 | SQ | -1.00% (Δ = 0.616) | -0.02% (Δ = 0.0118) |
| OpenAI text-embedding-3-large | 3072 | 1024 | BQ | -7.00% (Δ = 3.9478) | -0.58% (Δ = 0.3184) |
| OpenAI text-embedding-3-large | 3072 | 1536 | SQ | -1.00% (Δ = 0.3184) | -0.08% (Δ = 0.0426) |
| OpenAI text-embedding-3-large | 3072 | 1536 | BQ | -5.00% (Δ = 2.8062) | -0.06% (Δ = 0.0356) |

Table 1.2 compares the point differences in Mean NDCG@10, relative to an uncompressed index, when using different MRL dimensions (1/3 and 1/2 of the original dimensions) across OpenAI text-embedding models.

Key Takeaways:

- 99% Search Quality with BQ + MRL + Oversampling: Combining Binary Quantization (BQ) with Oversampling and Matryoshka Representation Learning (MRL) retains 99% of the original search quality in the datasets and embeddings combinations we tested, even with up to 96x compression, making it ideal for reducing storage while maintaining high retrieval performance.

- Flexible Embedding Truncation: MRL enables dynamic embedding truncation with minimal accuracy loss, providing a balance between storage efficiency and search quality.

- No Latency Impact Observed: Our experiments also indicated that using MRL had no noticeable latency impact, supporting efficient performance even at high compression rates.

For more details on how MRL works and how to implement it, visit the MRL Support Documentation.

Targeted Vector Filtering

Targeted Vector Filtering allows you to apply filters specifically to the vector component of hybrid search queries. This fine-grained control ensures that your filters enhance the relevance of vector search results without inadvertently affecting keyword-based searches.
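The idea can be illustrated without any service call. The sketch below is purely conceptual: the function `apply_vector_only_filter`, the document fields, and the hit lists are hypothetical, and it does not show the Azure AI Search request syntax. It only demonstrates that the filter narrows the vector candidates while the keyword candidates pass through unchanged.

```python
def apply_vector_only_filter(documents, keyword_hits, vector_hits, vector_filter):
    """Return (keyword_hits, filtered_vector_hits); only the vector side is filtered."""
    by_id = {doc["id"]: doc for doc in documents}
    filtered_vector_hits = [
        doc_id for doc_id in vector_hits if vector_filter(by_id[doc_id])
    ]
    return list(keyword_hits), filtered_vector_hits


# Hypothetical corpus and result lists, ordered best-first.
documents = [
    {"id": "a", "category": "manual"},
    {"id": "b", "category": "blog"},
    {"id": "c", "category": "manual"},
]
keyword_hits = ["b", "a"]
vector_hits = ["b", "c", "a"]

kept_keyword, kept_vector = apply_vector_only_filter(
    documents,
    keyword_hits,
    vector_hits,
    vector_filter=lambda doc: doc["category"] == "manual",
)
print(kept_keyword)  # keyword results are untouched
print(kept_vector)   # vector results keep only "manual" documents
```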

Sub-Scores

Sub-Scores provide granular scoring information for each recall set contributing to the final search results. In hybrid search scenarios, where multiple factors like vector similarity and text relevance play a role, Sub-Scores offer transparency into how each component influences the overall ranking.
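To make the notion of per-component scores concrete, here is a minimal sketch that fuses two ranked lists and keeps each list's contribution next to the final score. It assumes the lists are merged with Reciprocal Rank Fusion, which this article does not state; the function name, the constant `k=60`, and the result shape are illustrative assumptions, not the fields the service returns.

```python
def rrf_with_subscores(rankings, k=60):
    """Fuse ranked lists with Reciprocal Rank Fusion, keeping per-list contributions.

    rankings maps a component name (for example "text" or "vector") to a list
    of document ids ordered best-first.
    """
    results = {}
    for name, doc_ids in rankings.items():
        for rank, doc_id in enumerate(doc_ids, start=1):
            entry = results.setdefault(doc_id, {"score": 0.0, "subscores": {}})
            contribution = 1.0 / (k + rank)
            entry["subscores"][name] = contribution
            entry["score"] += contribution
    # Highest fused score first.
    return sorted(results.items(), key=lambda item: item[1]["score"], reverse=True)


fused = rrf_with_subscores({
    "text": ["a", "b", "c"],
    "vector": ["b", "c", "a"],
})
for doc_id, info in fused:
    print(doc_id, round(info["score"], 5), info["subscores"])
```

Seeing the two contributions side by side shows why a document ranked first by keyword relevance can still land below one that does well in both lists.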

Text Split Skill by Tokens

The Text Split Skill by Tokens feature enhances your ability to process and manage large text data by splitting text based on token counts. This gives you more precise control over passage (chunk) length, leading to more targeted indexing and retrieval, particularly for documents with extensive content.
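As a rough illustration of splitting by token count rather than by characters, the sketch below chunks text into passages of at most `max_tokens` tokens with an optional overlap. It is not the skill itself: whitespace splitting stands in for a real model tokenizer, and the function name and parameters are hypothetical.

```python
def split_by_tokens(text, max_tokens, overlap=0):
    """Split text into chunks of at most max_tokens tokens, sharing `overlap` tokens."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")
    tokens = text.split()  # assumption: whitespace tokens stand in for model tokens
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(" ".join(tokens[start:start + max_tokens]))
        if start + max_tokens >= len(tokens):
            break  # the last chunk already reaches the end of the text
    return chunks


chunks = split_by_tokens("one two three four five six seven", max_tokens=3, overlap=1)
print(chunks)
```

A token budget matters because embedding models limit input length in tokens, so chunks sized by tokens map more directly onto those limits than chunks sized by characters.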

For any questions or to share your feedback, feel free to reach out through our Azure Search · Community.

Getting started with Azure AI Search

References:

[1] Kusupati, A., Bhatt, G., Rege, A., Wallingford, M., Sinha, A., Ramanujan, V., Howard-Snyder, W., Chen, K., Kakade, S., Jain, P., & Farhadi, A. (2024). Matryoshka Representation Learning. arXiv preprint arXiv:2205.13147. Retrieved from https://arxiv.org/abs/2205.13147

by Contributed | Oct 7, 2024 | Technology

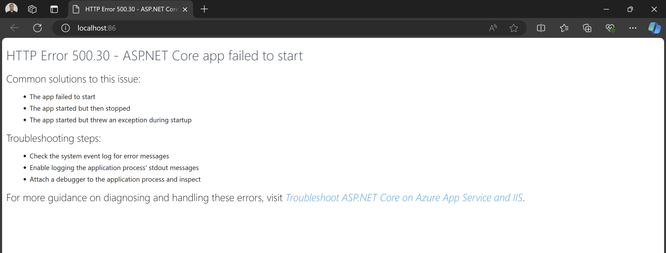

Introduction

When deploying an ASP.NET Core application, “HTTP Error 500.30 – ASP.NET Core app failed to start” is one of the most common errors you can encounter. This error typically indicates an issue within the application startup process, often triggered by misconfigurations, missing dependencies, or environment mismatches.

Problem

The HTTP 500.30 error occurs when the ASP.NET Core application fails to launch successfully. Unlike other HTTP errors that might relate to client-side or application issues, this error signifies that the server was unable to initiate the application due to a problem during startup. This failure may result from misconfigurations in the hosting environment, incorrect settings in the app configuration, or missing dependencies required for the application to run.

Solution

Since the issue could stem from various factors, it’s essential to verify each of them in turn. First, ensure that the correct ASP.NET Core runtime is installed. The ASP.NET Core Hosting Bundle includes everything needed to run web or server apps: the .NET runtime, the ASP.NET Core runtime, and, when installed on a machine with IIS, the ASP.NET Core IIS Module. If it is not installed, download and install the correct Hosting Bundle from this link: Download ASP.NET Core 8.0 Runtime (v8.0.8) – Windows Hosting Bundle Installer (microsoft.com). If you are using an earlier version, use this link to find the relevant downloads: Download .NET 8.0 (Linux, macOS, and Windows) (microsoft.com).

Note: If the Hosting Bundle is installed before IIS, the bundle installation must be repaired. Run the Hosting Bundle installer again after installing IIS.

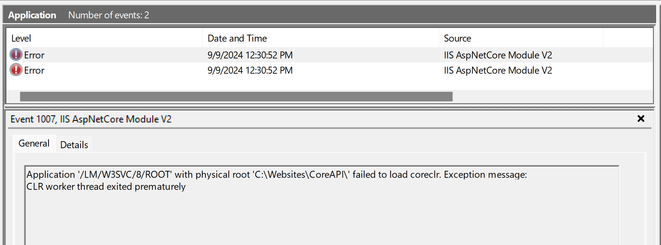

You should also review the application event logs. These can often reveal more specific errors, such as missing configuration files, connection string issues, or runtime errors. To access these logs, go to: Event Viewer -> Windows Logs -> Application. An error you might encounter could look something like this –

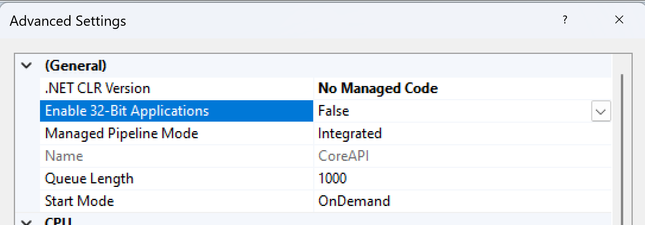

Here, the application failed to start because it could not load coreclr, and the CLR worker thread exited prematurely. This issue commonly occurs when an application is built for a 32-bit runtime, but the application pool is set to 64-bit. To resolve this, set the “Enable 32-Bit Applications” option to true. For more details on this setting, you can refer to this article: How to Host 32-Bit Applications in IIS: Complete Step-by-Step Guide & In-Depth Analysis (microsoft.com)

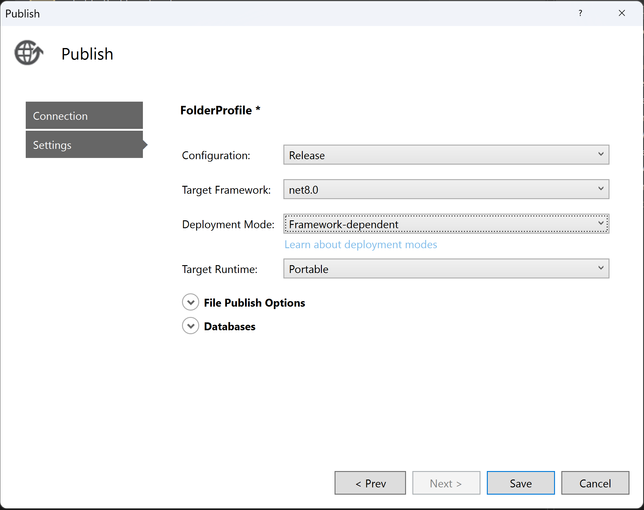

Also be mindful when publishing your application. Make sure to select the correct configuration (Debug/Release). Always choose “Release” unless you have a specific debugging need.

The target framework should match the installed Hosting Bundle version. Also, ensure you select the appropriate target runtime and deployment mode.
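One way to check the framework match is to compare the app's target framework with the output of `dotnet --list-runtimes`. The Python sketch below parses that output and looks for a matching `Microsoft.AspNetCore.App` major.minor version. The sample output, the function names, and the exact-match rule are assumptions for illustration; real roll-forward behavior is more nuanced and is ignored here.

```python
import re

# Matches lines such as:
# Microsoft.AspNetCore.App 8.0.8 [C:\Program Files\dotnet\shared\Microsoft.AspNetCore.App]
RUNTIME_LINE = re.compile(r"^(?P<name>\S+)\s+(?P<version>\d+\.\d+\.\d+\S*)\s+\[")


def installed_runtimes(list_runtimes_output):
    """Map each runtime name to the set of (major, minor) versions found."""
    found = {}
    for line in list_runtimes_output.splitlines():
        match = RUNTIME_LINE.match(line.strip())
        if match:
            major, minor = match.group("version").split(".")[:2]
            found.setdefault(match.group("name"), set()).add((int(major), int(minor)))
    return found


def has_aspnetcore_runtime(list_runtimes_output, target_framework):
    """Check for an ASP.NET Core runtime matching a target such as 'net8.0'."""
    major, minor = target_framework.removeprefix("net").split(".")
    runtimes = installed_runtimes(list_runtimes_output)
    return (int(major), int(minor)) in runtimes.get("Microsoft.AspNetCore.App", set())


# Hypothetical sample of what the command might print on a server.
sample_output = """\
Microsoft.AspNetCore.App 8.0.8 [C:\\Program Files\\dotnet\\shared\\Microsoft.AspNetCore.App]
Microsoft.NETCore.App 8.0.8 [C:\\Program Files\\dotnet\\shared\\Microsoft.NETCore.App]
"""

print(has_aspnetcore_runtime(sample_output, "net8.0"))
print(has_aspnetcore_runtime(sample_output, "net6.0"))
```

On a real server, the text could come from running `dotnet --list-runtimes` (for example through `subprocess.run`) instead of the sample string.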

Lastly, if your ASP.NET Core application relies on dependencies or third-party libraries, verify that they are installed on the server. If your application is 64-bit but the dependencies are 32-bit, enable the “Enable 32-Bit Applications” setting in the application pool.

Other Potential Causes

The failure to load coreclr is one possible cause of the 500.30 exception. In the context of hosting ASP.NET Core applications on IIS, a 500.30 – Start Failure generally indicates that the application was unable to start correctly. There are many other factors that could contribute to this exception. Some of these include:

Missing or Incorrect .NET Runtime

The required ASP.NET Core runtime may not be installed on the server. Ensure that the correct version of the runtime is installed. This is already discussed above.

Startup Errors in the Application

Issues in the Startup.cs or Program.cs files (for example, incorrect configurations, middleware issues, dependency injection failures, or unhandled exceptions) could prevent the application from starting.

App Misconfiguration

Incorrect configurations in appsettings.json, web.config, or environment-specific settings files, such as invalid database connection strings or service misconfigurations, could lead to startup failures.
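When reviewing web.config, it can help to print the attributes of the `aspNetCore` element, since they control how IIS launches the app and whether stdout logging is on. The sketch below is a hedged illustration: the sample web.config and the `MyApp.dll` name are hypothetical, and the helper `describe_aspnetcore_settings` only reads values; it does not validate them.

```python
import xml.etree.ElementTree as ET


def describe_aspnetcore_settings(web_config_xml):
    """Return the key attributes of the <aspNetCore> element, or None if absent."""
    root = ET.fromstring(web_config_xml)
    element = root.find(".//aspNetCore")
    if element is None:
        return None
    return {
        "processPath": element.get("processPath"),
        "arguments": element.get("arguments"),
        "hostingModel": element.get("hostingModel"),
        "stdoutLogEnabled": element.get("stdoutLogEnabled", "false").lower() == "true",
        "stdoutLogFile": element.get("stdoutLogFile"),
    }


# Hypothetical web.config for a framework-dependent app named MyApp.
sample_web_config = """<configuration>
  <location path="." inheritInChildApplications="false">
    <system.webServer>
      <handlers>
        <add name="aspNetCore" path="*" verb="*" modules="AspNetCoreModuleV2" resourceType="Unspecified" />
      </handlers>
      <aspNetCore processPath="dotnet" arguments=".\\MyApp.dll" stdoutLogEnabled="false" stdoutLogFile=".\\logs\\stdout" hostingModel="inprocess" />
    </system.webServer>
  </location>
</configuration>"""

settings = describe_aspnetcore_settings(sample_web_config)
for key, value in settings.items():
    print(f"{key}: {value}")
```

If `stdoutLogEnabled` is false, temporarily enabling it is a common way to capture the startup exception text while troubleshooting.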

File Permission

The application may not have sufficient file system permissions to access required directories or files.

Environment Variable Issues

Missing or misconfigured environment variables, such as ASPNETCORE_ENVIRONMENT, can cause the app to crash or behave unexpectedly during startup.
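A quick sanity check of this variable can be scripted. The sketch below is an illustration only: the function name and messages are hypothetical, and treating Development, Staging, and Production as the conventional names is an assumption (custom environment names are also valid).

```python
import os

# Conventional environment names; custom names are allowed as well.
CONVENTIONAL_ENVIRONMENTS = {"Development", "Staging", "Production"}


def check_aspnetcore_environment(env=None):
    """Return a list of notes about ASPNETCORE_ENVIRONMENT in the given mapping."""
    env = os.environ if env is None else env
    value = env.get("ASPNETCORE_ENVIRONMENT")
    if value is None:
        return ["ASPNETCORE_ENVIRONMENT is not set; ASP.NET Core defaults to Production."]
    notes = []
    if value != value.strip():
        notes.append("Value has leading or trailing whitespace.")
    known = {name.lower() for name in CONVENTIONAL_ENVIRONMENTS}
    if value.strip().lower() not in known:
        notes.append(
            f"'{value.strip()}' is a custom environment name; "
            "check that matching settings files exist."
        )
    return notes


print(check_aspnetcore_environment({"ASPNETCORE_ENVIRONMENT": "Production"}))
print(check_aspnetcore_environment({"ASPNETCORE_ENVIRONMENT": "Prod "}))
print(check_aspnetcore_environment({}))
```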

Conclusion

HTTP Error 500.30 signifies a failure in the ASP.NET Core application’s startup process. By investigating the stdout logs and the Windows Application and System event logs, validating the environment, checking dependency versions, ensuring proper configuration, and tracing the startup process, you can identify the specific cause of the 500.30 error.

by Contributed | Oct 4, 2024 | Technology

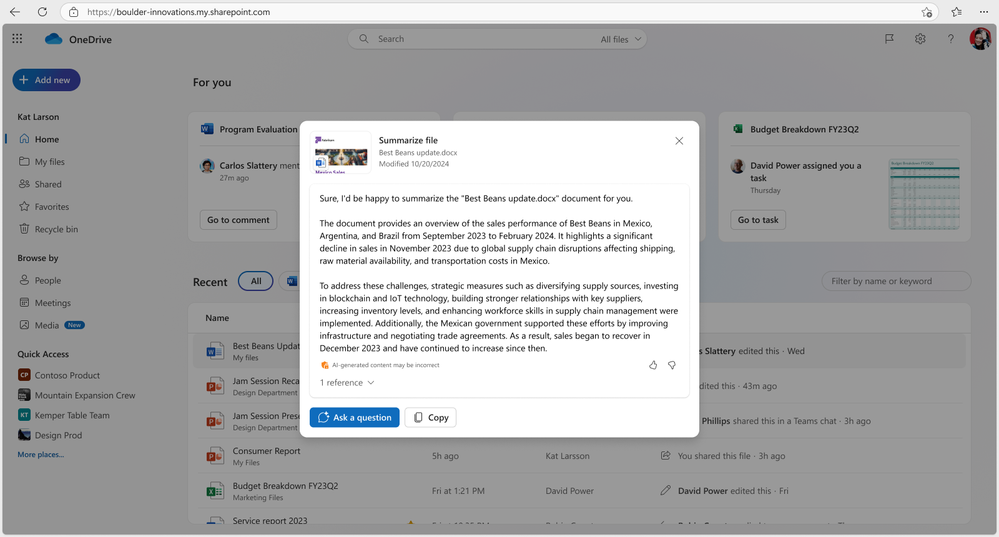

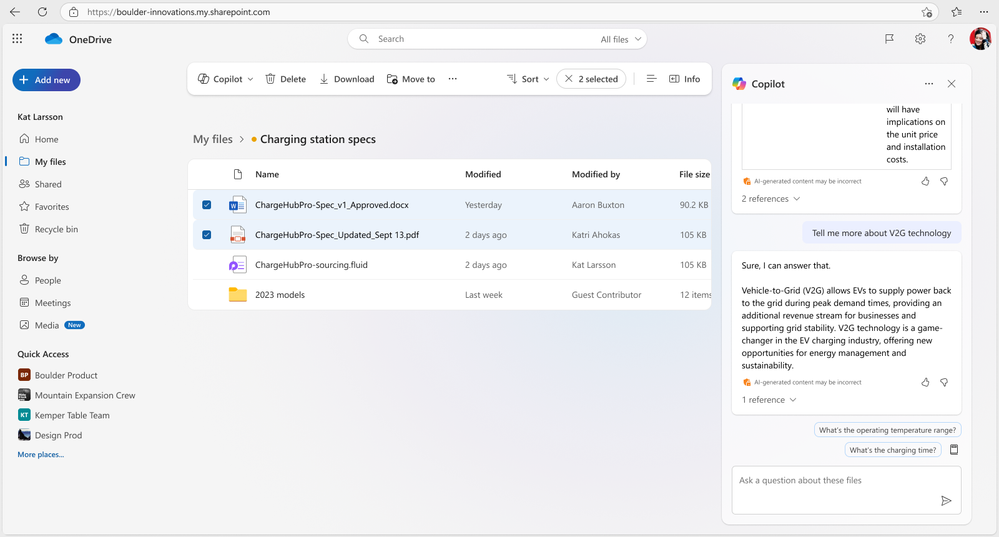

Copilot brings the power of AI right into OneDrive to help you work more efficiently and effectively. And it’s now available on OneDrive for the web to all our Copilot-licensed commercial users, marking a significant milestone in the way you work with files in OneDrive. So, it’s time to get to know Copilot in OneDrive and what it can do for you. This article brings together a playlist of important recent resources to give you the what, how, and why of bringing Copilot into the OneDrive experience.

To start, here is a nice, short demo video from Mike Tholfsen (Principal Group Product Manager – Microsoft Education) showcasing “4 new Copilot in OneDrive features” (within Microsoft 365). Watch now:

A few use cases to discover what Copilot in OneDrive can do:

- Generate summaries for large documents.

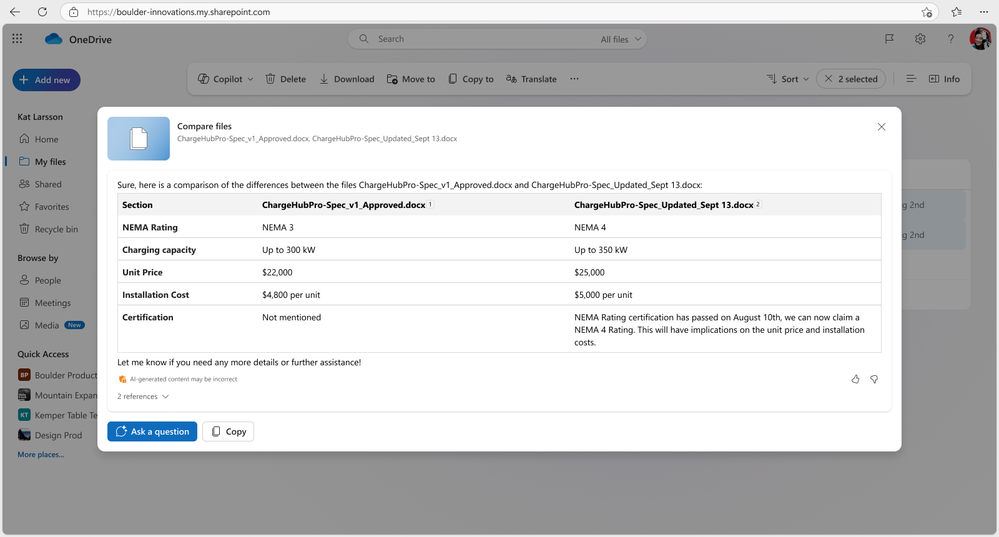

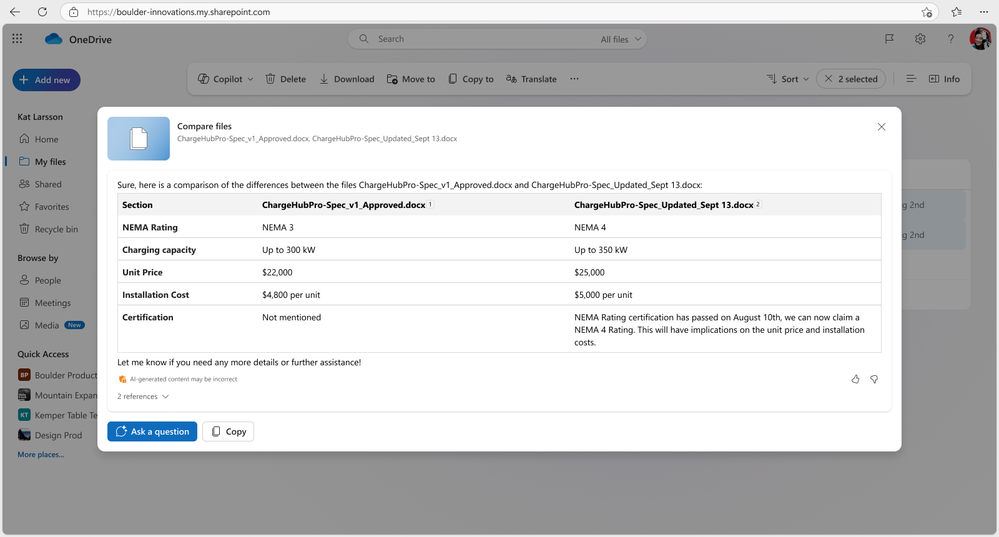

- Compare differences between multiple documents.

- Answer complex questions using files you have access to.

- Generate ideas for new documents.

Now, let’s go behind the scenes with Sync Up and hear from OneDrive designer, Ben Truelove.

In this episode of the Sync Up podcast, hosts Stephen Rice and Arvind Mishra dive into the design of Copilot in OneDrive with special guest Ben Truelove, a veteran designer at Microsoft. Ben shares insights from his 27-year journey at Microsoft, including how the team designed and iterated to deliver the best Copilot experience to our customers. Tune in to learn about the innovative features and user-centric design that make Copilot a game-changer for OneDrive users.

“Designing OneDrive for Copilot” | Latest video episode from Sync Up – The OneDrive podcast. Watch now:

Listen to and subscribe: Sync Up – The OneDrive podcast. And explore other Microsoft podcasts.

OK, let’s move into the finer details with the recent general availability (GA) blog.

Copilot isn’t just a tool; it’s a productivity companion that works alongside you, making everyday tasks easier and empowering you to achieve more. Arjun Tomar (Principal Product Manager on the OneDrive web team) published a recent blog to dig deeper into what you can do with Copilot in OneDrive. He shares how you can get started, including the new getting started guide. Finally, the OneDrive team wants your feedback, which you can send in-app, and a helpful set of FAQs is just a link away.

You can now select up to five files―even mixing formats such as Word docs, PowerPoints, and PDFs―and have Copilot offer a detailed comparison across all the files.

Review Arjun Tomar‘s full blog, “Introducing Copilot in OneDrive: Now Generally Available.”

To get a broader perspective, watch the “OneDrive: AI at your Fingertips” training video.

Learn about and see demos of new and important capabilities coming to OneDrive — giving you the best file experiences across Microsoft 365: Enhancing collaboration, powered by AI and Copilot, fast, reliable sync, and more. You’ll get the latest news, roadmap, plus a few tips and tricks along the way.

Take time to get to know all aspects of how the OneDrive team is leveraging AI. Arjun Tomar and Ben Truelove co-present “OneDrive: AI at your fingertips.” Watch now:

Before it’s too late, register for the upcoming OneDrive webinar (Oct. 8th, 10am PDT)

Discover what’s here and coming for Copilot in OneDrive, new enhancements to the mobile app, photos experience, and more!

Register for the upcoming webinar | Oct 8, 2024, 10am PDT | “OneDrive: AI Innovations for a New Era of Work and Home,” presented by Jeff Teper (President, Collab Apps & Platform), Jason Moore (VP, Product Management), Arwa Tyebkhan (Principal Product Group Manager), Carlos Perez (Principal Design Director), Gaia Carini (Principal Group Product Manager), and Arjun Tomar (Senior Product Manager).

BONUS | Copilot Wave 2 – AI innovations for OneDrive and SharePoint.

Adam Harmetz and Jason Moore, Microsoft VPs for SharePoint and OneDrive respectively, recently posted a related blog that highlights the public preview of Copilot agents in SharePoint, a new experience that enables any user to quickly create and share agents for specific purposes right from within SharePoint. Plus, they included insights about Copilot in OneDrive, highlighting numerous use cases such as summarizing, getting answers from, and comparing your files in OneDrive faster than ever.

Review their full blog, “Microsoft 365 Copilot Wave 2: AI Innovations in SharePoint and OneDrive“; scroll below to see two of the highlighted Copilot in OneDrive innovations:

With Copilot in OneDrive, you can summarize one or multiple files in your OneDrive web app without opening each file.

Ask Copilot questions about the information you need from the documents you choose to gain insights and do your best work.

If you’re new to Copilot or want to learn more, check out our guide, Get started with Copilot in OneDrive (support.microsoft.com), for detailed instructions and tips on how to make the most of this AI-powered assistant designed to revolutionize the way you interact with your files and streamline your workflow.

Follow the OneDrive community blog, subscribe to Sync Up – The OneDrive podcast, stay up to date on Microsoft OneDrive adoption on adoption.microsoft.com, see what they’re tweeting, and join the product team for their monthly OneDrive Office Hours | Register and join live: https://aka.ms/OneDriveCommunityCall.

Cheers, Mark “OneAI” Kashman
