by Contributed | Nov 17, 2020 | Azure, Microsoft, Technology

This article is contributed. See the original author and article here.

Abstract:

Azure virtual machine scale set agents are a new form of self-hosted agents that can be auto scaled to meet customer demands.

This elasticity reduces the need to run dedicated agents all the time. Unlike Microsoft-hosted agents, customers have flexibility over the size and the image of machines on which agents run.

In this session we will introduce you to the Azure DevOps VMSS agents and offer insights into the setup, working and also basic troubleshooting of the agents.

Webinar Date & Time : November 30, 2020. Time 4.00 PM IST (10.30 AM GMT)

Invite : Download the Calendar Invite

Speaker Bio :

Muni Karthik currently works as a Support Engineer at Microsoft, India. His day-to-day responsibilities include helping customers overcome the challenges they face with Team Foundation Server and the Azure DevOps services. His interests are exploring the latest DevOps concepts and providing a good support experience to customers. He partners with field teams and product engineering groups to help our customers and developers.

Kirthish Kotekar Tharanatha works as a Support Escalation Engineer on the Azure DevOps team at Microsoft, where he helps customers resolve their most complex Azure DevOps issues day to day. His areas of interest include the CI and CD parts of Azure DevOps and the integration of other open CI/CD tools with Azure DevOps.

Devinar 2020

by Contributed | Nov 16, 2020 | Azure, Microsoft, Technology


What is covered in this blog post?

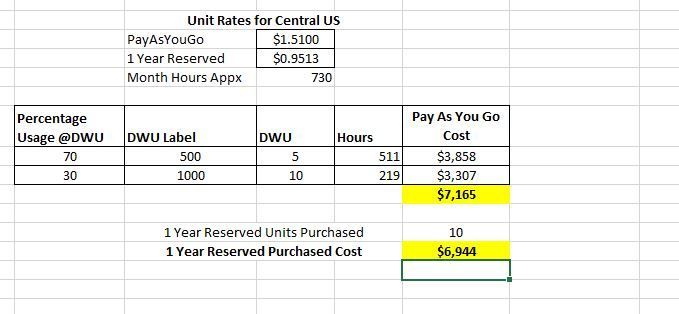

Recently I went through a cost optimization exercise with my customer, evaluating Azure Synapse Reserved Instance pricing. This blog post documents the learnings from that exercise, as I think they will be useful to others as well. The post covers the following main points:

- Shares an additional perspective, with examples, on cost optimization for Azure Synapse using Reserved Instance pricing when you plan to run an Azure Synapse instance at variable DWU levels.

- Shares an example Excel spreadsheet (attached to this post) that you can use to experiment with usage patterns (variable DWU levels) and cost estimates. Please refer to the pricing page for the most up-to-date pricing information; the spreadsheet uses Central US as an example.

- Summarizes the main aspects of how Azure Reserved Instance pricing works (with links to the public documentation) in case you are not familiar with it.

RI is the abbreviation I will use at times to refer to Reserved Instance pricing

Background

The main benefit of a cloud environment is its elastic scale: you can scale up and down as your needs change to save costs. My customer wanted to run a Synapse instance at DWU 7500 a third of the time, DWU 6000 a third of the time, and DWU 3000 a third of the time. So the question was whether it makes sense to purchase Synapse Reserved Instance pricing and, if so, how much to purchase: 3000, 6000, 7500, or something in between. Such requirements are not uncommon. In this example scenario:

- DWU 7500 (higher compute) may be needed during data loads

- 6000 DWU needed during peak usage hours

- 3000 DWU sufficient during off-peak usage hours

Before going into the cost analysis examples from the customer scenario, I will first summarize how Azure Synapse Reserved Instance pricing works.

Summary of how Reserved Instance pricing works

Azure Synapse RI pricing is very flexible and a very good cost-saving measure. I will be bold enough to say that if you are running a production workload that you don't plan to sunset in the near future, Synapse Reserved Instance pricing will most likely make sense for you.

I am summarizing a few important points about how Azure Synapse reserved pricing works, but you can read more in the official documentation pages: "Save costs for Azure Synapse Analytics charges with reserved capacity" and "How reservation discounts apply to Azure Synapse Analytics".

• The 1-year Reserved Instance pricing discount is approximately 37%.

• The 3-year Reserved Instance pricing discount is approximately 65%.

• Synapse charges are a combination of compute and storage. Reserved Instance pricing applies only to compute, not to storage.

• The compute charge is calculated in multiples of DWU 100, i.e. DWU 1000 is 10 units of DWU 100, DWU 2000 is 20 units of DWU 100, etc.

• When you purchase an Azure Synapse Reserved Instance, you are essentially purchasing a discounted rate for N units of DWU 100 for a 1- or 3-year commitment.

• The Azure Synapse Analytics reserved capacity discount is applied to running warehouses on an hourly basis. If you don't have a warehouse deployed for an hour, the reserved capacity is wasted for that hour; it doesn't carry over. However, the examples later in this post show that the discount is large enough that, even with some waste (an instance running at a lower SKU than the RI purchased), there is a good chance you will still come out ahead.

• The best part about the N units of RI purchased is that they can be shared between multiple instances within the same subscription or across subscriptions (customers need to decide on the scope of the RI). As an example, if you purchase 10 units, the discount can be applied to both your Dev and your Prod instances.

• Simple example for an RI purchased for DWU 1000:

- Say Prod runs at DWU 1000 during peak hours but is brought down to DWU 500 in off-peak hours.

- Say you have another instance that runs at DWU 500, but its future is not clear enough for you to commit to Reserved Instance pricing, or maybe it is a Dev instance that moves between DWU 500 and 200 or is even paused at times.

- When Prod runs at DWU 1000, you pay the discounted rate for Prod and the pay-as-you-go rate for the Dev instance. When Prod is at DWU 500, you pay the discounted rate for both of your Synapse instances.
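To make the sharing behaviour concrete, here is a minimal sketch of how one hour of usage could be split between the reserved and pay-as-you-go rates for the Prod and Dev example. The per-unit price is a hypothetical placeholder (not a real Azure price), the 37% figure is the approximate 1-year discount quoted above, and the order in which instances consume the reservation is an assumption made purely for illustration; the official documentation describes how the discount is actually applied.

```python
# Hypothetical hourly pay-as-you-go price for one DWU 100 unit (not a real price).
PAYG_RATE_PER_UNIT = 1.00
# Approximate 1-year discount quoted in this post.
RI_DISCOUNT = 0.37


def hourly_charges(reserved_units, running_dwu):
    """Split one hour of usage into reserved and pay-as-you-go units.

    reserved_units: number of DWU 100 units purchased as a reservation.
    running_dwu: mapping of instance name -> DWU level during this hour.
    Assumption: instances consume the reservation in the order given.
    """
    remaining = reserved_units
    covered_units = 0
    payg_units = 0
    for _name, dwu in running_dwu.items():
        units = dwu // 100                 # DWU 1000 -> 10 units of DWU 100
        covered = min(units, remaining)    # units billed at the reserved rate
        remaining -= covered
        covered_units += covered
        payg_units += units - covered      # overflow is billed pay-as-you-go
    return {
        "covered_units": covered_units,
        "payg_units": payg_units,
        "unused_reserved_units": remaining,
        # The reservation is paid for in full, whether it is used or not.
        "reservation_cost": reserved_units * PAYG_RATE_PER_UNIT * (1 - RI_DISCOUNT),
        "payg_cost": payg_units * PAYG_RATE_PER_UNIT,
    }


# Peak hour: Prod uses all 10 reserved units, Dev is billed pay-as-you-go.
peak = hourly_charges(10, {"prod": 1000, "dev": 500})
# Off-peak hour: Prod and Dev together fit inside the 10 reserved units.
off_peak = hourly_charges(10, {"prod": 500, "dev": 500})
print(peak)
print(off_peak)
```

In the off-peak hour nothing is billed at the pay-as-you-go rate, which is the "discounted rate for both instances" case described in the last bullet.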

Cost Analysis Discovery/Learnings

The main objective of the cost analysis exercise was to determine whether purchasing Reserved Instance pricing makes sense when Azure Synapse will be running at variable DWU levels.

In scenarios where you will be using Azure Synapse at different DWU levels, the bare minimum goal (or success criterion) is that the Reserved Instance should not cost more than what you would pay under the pay-as-you-go cost model.

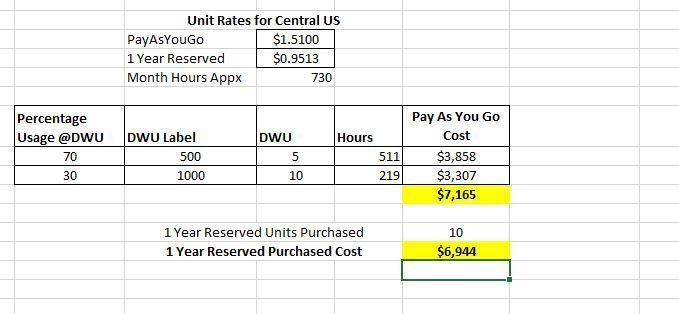

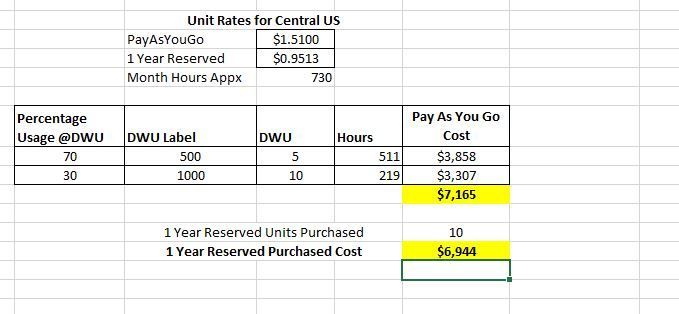

Example 1

1 Year RI, 10 Units

Low SKU – DWU 500

High SKU – DWU 1000

With a 1-year RI you will still come out ahead if you run at the purchased RI SKU (DWU 1000) at least 30% of the time and at 50% of the purchased RI SKU (DWU 500) the remaining 70% of the time.


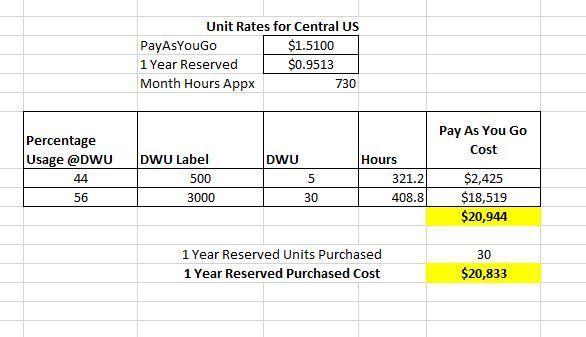

Example 2

1 Year RI, 30 Units

Low SKU – DWU 500

High SKU – DWU 3000

With a 1-year RI you will still come out ahead if you run at the purchased RI SKU (DWU 3000) at least 56% of the time and at 17% of the purchased RI SKU (DWU 500) the remaining 44% of the time.

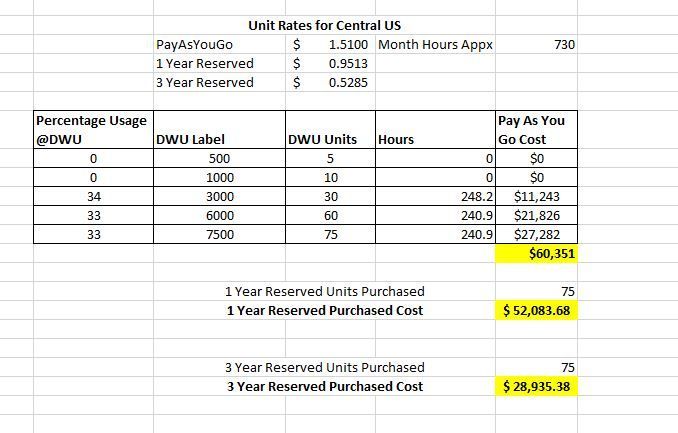

Example 3

3 Year RI, 75 Units

DWU 3000 34% of the time

DWU 6000 33% of the time

DWU 7500 33% of the time

- When 1- or 3-year Reserved Instance pricing is purchased for DWU 7500 (75 units), you will still come out ahead even when you run Synapse at a lower scale than the RI purchased, because the discount is so big.

- The 3-year RI is so much cheaper that you may not want to scale up and down at all, and instead run the Synapse instance at DWU 7500 all the time.
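The arithmetic behind the three examples can be sketched as follows. This is a rough illustration only: it uses the approximate 37% and 65% discounts quoted earlier rather than actual regional prices, expresses cost in "pay-as-you-go unit-hours" so that no real price is needed, and assumes the instance never runs above the reserved size. The function names are my own and do not come from the attached spreadsheet.

```python
ONE_YEAR_DISCOUNT = 0.37    # approximate figure quoted in this post
THREE_YEAR_DISCOUNT = 0.65  # approximate figure quoted in this post


def payg_units_per_hour(usage):
    """Average DWU 100 units billed per hour under pay-as-you-go.

    usage: list of (dwu_level, fraction_of_time) pairs; fractions sum to 1.
    """
    return sum(dwu / 100 * fraction for dwu, fraction in usage)


def ri_units_per_hour(reserved_units, discount):
    """Cost of the reservation per hour, in pay-as-you-go unit-hours."""
    return reserved_units * (1 - discount)


def break_even_fraction(high_dwu, low_dwu, discount):
    """Share of time at high_dwu (rest at low_dwu) where RI and PAYG cost the same.

    Assumes the reservation is sized for high_dwu.
    """
    high, low = high_dwu / 100, low_dwu / 100
    return (high * (1 - discount) - low) / (high - low)


examples = [
    ("Example 1", 10, ONE_YEAR_DISCOUNT, [(1000, 0.30), (500, 0.70)]),
    ("Example 2", 30, ONE_YEAR_DISCOUNT, [(3000, 0.56), (500, 0.44)]),
    ("Example 3", 75, THREE_YEAR_DISCOUNT,
     [(3000, 0.34), (6000, 0.33), (7500, 0.33)]),
]

for name, units, discount, usage in examples:
    payg = payg_units_per_hour(usage)
    ri = ri_units_per_hour(units, discount)
    verdict = "RI is cheaper" if ri < payg else "PAYG is cheaper"
    print(f"{name}: PAYG {payg:.2f} vs RI {ri:.2f} unit-hours per hour ({verdict})")

print(f"Example 1 break-even: {break_even_fraction(1000, 500, ONE_YEAR_DISCOUNT):.1%}")
print(f"Example 2 break-even: {break_even_fraction(3000, 500, ONE_YEAR_DISCOUNT):.1%}")
```

With these approximate discounts, the break-even share of time at the high SKU works out to about 26% for Example 1 and about 56% for Example 2, which is in line with the thresholds quoted in the examples.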

Conclusions

- The bottom line is that even if part of your reserved capacity goes unused in some hours, you can still come out ahead because the discount is so big (the 3-year RI discount is much bigger than the 1-year RI discount).

- Lastly, it's important to reiterate that, thanks to the flexible nature of Azure Reserved Instance pricing, other Azure Synapse instances running in the same or a different Azure subscription can make use of the discounted capacity that is unused on the main Azure Synapse instance for which the RI was purchased.

Azure Synapse Scaling Sample Script

In case you are planning to scale your Synapse instances, I am adding a link to this sample script for completeness: https://github.com/microsoft/sql-data-warehouse-samples/blob/master/samples/automation/ScaleAzureSQLDataWarehouse/ScaleAzureSQLDataWarehouse.ps1. When Azure Synapse is scaled, connections are dropped momentarily, so any running queries will fail. This sample scaling script accounts for active queries before performing the scaling operation. The sample is a little old and I have not verified it yet; I plan to validate it in a few days and update this post with the results, but it should give a good starting point regardless.

by Contributed | Nov 16, 2020 | Azure, Microsoft, Technology


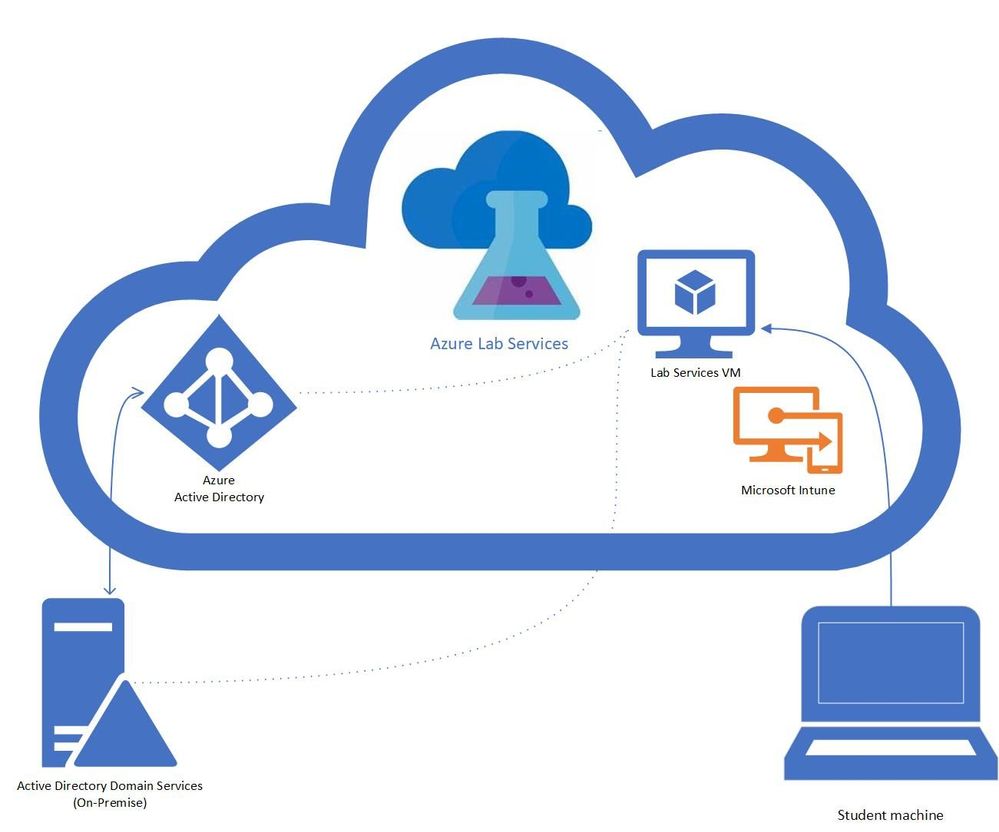

Intune + Azure Lab Services

A question that we get asked by IT departments is “can Intune be used to manage Windows 10 machines in a lab?” The answer is yes! In this blog post, we will show you how you can enable Intune on your lab’s VMs. This post will focus on getting lab VMs automatically domain joined, enrolled into Intune, and into a specific AD group at the initial student logon.

Benefits

There are several benefits to having the lab VMs managed by Intune. You can create profiles that configure the VM to allow or restrict capabilities, such as blocking different URLs, setting sites to open when the browser starts, blocking downloads, and managing BitLocker encryption. Microsoft Endpoint Manager provides a modern management tool for your lab VMs where you can create and customize these configuration profiles. For an education-focused management tool, Intune for Education is a portal that helps simplify Windows configuration, Take a Test, user management, group and sub-group inheritance, and app management.

Prerequisites

These steps assume the following prerequisites have been configured:

- Check with your account representative for the appropriate Intune licensing.

- Active Directory is set up with an MDM service that is configured for auto-enrollment.

- You have an Azure Lab Services account peered to a hybrid Azure Active Directory.

Here is more information on how to set up an Azure Lab Services account that is connected to your network.

Setting up the Lab

- Set up the template VM to join the domain.

Currently, there is a set of PowerShell scripts that are run on the template VM so that the lab VMs will be domain joined when they are initially started. This set of scripts also renames each lab VM to make its name unique, including setting a prefix for the VM name that can be used to put the VM in the appropriate AD group. I've included more details later in this blog.

- Set Group Policy to auto-enroll into Intune.

On the template VM, set up auto-enrollment for the lab VMs using the following steps:

- Open the local group policy editor (gpedit.msc).

- Go to Computer Configuration / Administrative Templates / Windows Components / MDM.

- Set "Enable automatic MDM enrollment using default Azure AD credentials" to Enabled, with the credential type set to User Credential.

- Confirm that "Disable MDM enrollment" is set to Disabled.

- More information is available in the device management documentation.

- Publish Template

Once the template is published, the machines for that class will be created. The student VMs will join the domain on first startup. When the student logs on with their account, the device will be Intune enabled.

You should start the VMs before the students do, so that the VMs are domain joined and set up for the students. The domain join and setting up student access may take some time. Once the domain join has completed, the VMs can be turned off; when the students start and log on to the VMs, the auto-enrollment will occur. In case you run into issues, I've included a section on troubleshooting.

Additional: Set up a dynamic AD group for the class

The lab VMs are Intune enabled, but an additional step is to have the VMs added to a specific Active Directory group. Profiles can be set for an AD group so that any VMs added to the group will be configured based on the profile information. A dynamic group allows you to set up rules for which machines are in the group. Each group corresponds to a class or, more specifically, to the machines within the class. A student could have multiple classes, where each class has a different set of requirements and machines that will need to be managed. Dynamic groups use rules to determine which AD group a VM should belong to. The simplest example is to use the VM name prefix (from the domain join script) as the rule for the group. An example rule would be: displayName -startsWith "Prefix"

Troubleshooting

In case the student VMs aren't working as expected, here are some troubleshooting tips.

- Start with the Domain join scripts.

- Check the Group Policy on the student VM

- Confirm that the group policy for auto-enrollment is set on the student VM. If it is not, check the template VM.

- Check Event viewer on the student VM for information on the auto-enrollment task.

Final thoughts

Given the complexity of Active Directory and network configurations, this is a specific example to help you understand how to get Azure Lab Services working with Intune, which opens up a whole world of capabilities for managing student VMs.

by Contributed | Nov 16, 2020 | Azure, Microsoft, Technology


If you prefer using a CLI—for one-time tasks as well as for more complicated, automated scripts—you can use the Azure CLI to take advantage of the improvements for provisioning and managing Flexible Server (in preview) in Azure Database for PostgreSQL. Flexible Server is a new deployment option (now in preview) for our fully-managed Postgres database service and is designed to give you more granular control and flexibility over database management functions and configuration settings.

The new Azure CLI experience with Flexible Server for Azure Database for PostgreSQL includes:

- Refined output to keep you informed about what’s going on behind the scenes.

- One command to create a secure server inside a virtual network.

- Ability to use contextual information between CLI commands, which helps reduce the number of keystrokes for each command.

Try Azure Database for PostgreSQL – Flexible Server CLI commands.

Screenshot of Azure CLI welcome message


Use local context to store common information

Flexible Server CLI commands support local context through the az config param-persist command, which stores information such as region, resource group, subscription ID, and resource name locally for every sequential CLI command you run. You can turn on local context to store information with az config param-persist on. If local context is turned on, you can see the contextual information using az config param-persist show. You can always turn it off using az config param-persist off.

Run the command to view what is in your local context.

az config param-persist show

The output as shown below will tell you the values stored in your local context.

Command group "config param-persist" is experimental and not covered by customer support.

{

"all":

{

"location":"eastus",

"resource_group_name":"mynewproject"

}

}
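As a rough illustration of what the stored values are used for, the sketch below reads the JSON body from the sample output and turns it into the command arguments that later commands pick up. The key-to-argument mapping covers only the two values shown in this post, the function name is hypothetical, and this is not how the CLI itself is implemented.

```python
import json

# JSON body copied from the sample output above (the warning line is not JSON).
SAMPLE_OUTPUT = """
{
  "all": {
    "location": "eastus",
    "resource_group_name": "mynewproject"
  }
}
"""

# Only the two keys shown in this post are mapped; others are ignored.
KEY_TO_ARGUMENT = {
    "location": "--location",
    "resource_group_name": "--resource-group",
}


def context_to_arguments(raw_json):
    """Return the stored local-context values as a flat list of CLI arguments."""
    stored = json.loads(raw_json).get("all", {})
    arguments = []
    for key, value in stored.items():
        flag = KEY_TO_ARGUMENT.get(key)
        if flag is not None:
            arguments.extend([flag, value])
    return arguments


print(context_to_arguments(SAMPLE_OUTPUT))
```

These are the same two values that the create command later reports as "Command argument values from local context".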

Ease of provisioning and deprovisioning

When creating a Postgres server using our managed database service on Azure, you probably want to get started quickly. Especially if you’re just trying things out. With the new and improved Azure CLI for Flexible Server on Azure Database for PostgreSQL, you can quickly create a Postgres server inside a virtual network. Or if you prefer, you can easily provision a server with firewall rules in one single step. You can also view the progress visually as CLI commands keep you informed about what’s going on behind the scenes.

Create a Postgres Flexible Server inside a new virtual network

Instead of creating a resource group, a virtual network, and a subnet with separate commands, you can use one command—az postgres flexible-server create as shown below—to create a secure PostgreSQL Flexible Server inside a new virtual network and have a new subnet delegated to the server.

Run the command to create a secure server inside a virtual network.

az postgres flexible-server create

The output shows you the steps taken to create this server. The virtual network, subnet, username, and password are all auto-generated.

Local context is turned on. Its information is saved in working directory /home/azuser. You can run az local-context off to turn it off.

Command argument values from local context: --resource-group: mynewproject, --location: eastus

Command group "postgres flexible-server" is in preview. It may be changed/removed in a future release.

Checking the existence of the resource group "mynewproject"...

Resource group "mynewproject" exists ? : True

Creating new vnet "VNET095447391" in resource group "mynewproject"...

Creating new subnet "Subnet095447391" in resource group "mynewproject" and delegating it to "Microsoft.DBforPostgreSQL/flexibleServers"...

Creating PostgreSQL Server "server095447391" in group "mynewproject"...

Your server "server095447391" is using sku "Standard_D2s_v3" (Paid Tier). Please refer to https://aka.ms/postgres-pricing for pricing details

Make a note of your password. If you forget, you would have to reset your password with "az postgres flexible-server update -n server095447391 -g mynewproject -p <new-password>".

{

"connectionString": "postgresql://username:your-password@server095447391.postgres.database.azure.com/postgres?sslmode=require",

"host": "server095447391.postgres.database.azure.com",

"id": "/subscriptions/your-subscription-id/resourceGroups/mynewproject/providers/Microsoft.DBforPostgreSQL/flexibleServers/server095447391",

"location": "East US",

"password": "your-password",

"resourceGroup": "mynewproject",

"skuname": "Standard_D2s_v3",

"subnetId": "/subscriptions/your-subscription-id/resourceGroups/mynewproject/providers/Microsoft.Network/virtualNetworks/VNET095447391/subnets/Subnet095447391",

"username": "your-username",

"version": "12"

}
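If you want to feed the generated connection details into an application, the connectionString field can be split into its parts with the Python standard library. The sketch below is a minimal example that uses the placeholder values from the sample output; the function name is hypothetical, and a real password containing special characters would need to be percent-encoded in the URL for this parsing to work.

```python
import json
from urllib.parse import parse_qs, urlsplit

# Trimmed copy of the sample output above, with its placeholder credentials.
SAMPLE_CREATE_OUTPUT = """
{
  "connectionString": "postgresql://username:your-password@server095447391.postgres.database.azure.com/postgres?sslmode=require",
  "host": "server095447391.postgres.database.azure.com"
}
"""


def connection_parts(create_output_json):
    """Split the connectionString from the create output into its components."""
    url = urlsplit(json.loads(create_output_json)["connectionString"])
    return {
        "user": url.username,
        "password": url.password,
        "host": url.hostname,
        "port": url.port,  # None when the string carries no explicit port
        "dbname": url.path.lstrip("/"),
        "sslmode": parse_qs(url.query).get("sslmode", [None])[0],
    }


parts = connection_parts(SAMPLE_CREATE_OUTPUT)
print(parts)
```

Note that sslmode=require is part of the generated string, so clients built from these parts should keep TLS enabled.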

Create a PostgreSQL Flexible Server with public access to all IPs

Use az postgres flexible-server create --public-access all if you want to create a publicly accessible Postgres server. A publicly accessible server can be accessed from any client machine as long as you have the correct username and password. If you want to restrict access to your IP only, set the --public-access argument to either your <IP address> or an IP address range such as <Start IP address-End IP address>.

Run the command to create a server with public access.

az postgres flexible-server create --public-access all

The output shows you a new server created with a firewall rule that allows IPs from 0.0.0.0 to 255.255.255.255:

Local context is turned on. Its information is saved in working directory /home/azuser. You can run az local-context off to turn it off.

Command argument values from local context: --resource-group: mynewproject, --location: eastus

Command group "postgres flexible-server" is in preview. It may be changed/removed in a future release.

Checking the existence of the resource group "mynewproject"...

Resource group "mynewproject" exists ? : True

Creating PostgreSQL Server "server184001358" in group "mynewproject"...

Your server "server184001358" is using sku "Standard_D2s_v3" (Paid Tier). Please refer to https://aka.ms/postgres-pricing for pricing details

Configuring server firewall rule to accept connections from "0.0.0.0" to "255.255.255.255"...

Make a note of your password. If you forget, you would have to reset your password with "az postgres flexible-server update -n server184001358 -g mynewproject -p <new-password>".

{

"connectionString": "postgresql://username:your-password@server184001358.postgres.database.azure.com/postgres?sslmode=require",

"firewallName": "AllowAll_2020-11-11_20-29-34",

"host": "server184001358.postgres.database.azure.com",

"id": "/subscriptions/your-subscription-id/resourceGroups/mynewproject/providers/Microsoft.DBforPostgreSQL/flexibleServers/server184001358",

"location": "East US",

"password": "your-password",

"resourceGroup": "mynewproject",

"skuname": "Standard_D2s_v3",

"username": "your-username",

"version": "12"

}
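To see why the "all" rule admits every client while a narrower range does not, here is a small sketch of the range check a start/end firewall rule implies. It uses only the Python standard library; the function name and the example addresses in the 203.0.113.0/24 documentation range are hypothetical, and this is not the service's actual implementation.

```python
import ipaddress


def is_client_allowed(client_ip, start_ip, end_ip):
    """Return True if client_ip falls inside the inclusive start/end range."""
    client = ipaddress.ip_address(client_ip)
    return ipaddress.ip_address(start_ip) <= client <= ipaddress.ip_address(end_ip)


# The rule created by --public-access all covers the whole IPv4 space.
print(is_client_allowed("203.0.113.25", "0.0.0.0", "255.255.255.255"))

# A narrower, hypothetical range admits only the addresses inside it.
print(is_client_allowed("203.0.113.25", "203.0.113.10", "203.0.113.20"))
```

The first check succeeds for any IPv4 address, which is why a server created this way is protected only by its username and password.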

Delete the server when using local context

Use az postgres flexible-server delete to delete the server. The command looks in local context to find a PostgreSQL flexible server. In the example below, it identified server184001358 in local context and hence tries to delete that server.

Run the command to delete your server.

az postgres flexible-server delete

The output prompts you to confirm if you want to delete the server.

Local context is turned on. Its information is saved in working directory /home/azuser. You can run az local-context off to turn it off.

Command argument values from local context: --resource-group: mynewproject, --name: server184001358

Command group "postgres flexible-server" is in preview. It may be changed/removed in a future release.

Are you sure you want to delete the server "server184001358" in resource group "mynewproject" (y/n): y

Tune your PostgreSQL server

Tuning PostgreSQL database parameters is important to configure your server to fit your application's needs or to optimize performance. You can use the az postgres flexible-server parameter set command to update server parameters. You can view all the server parameters with the parameter list command, and use the parameter show command to view the value of a specific server parameter.

Run the command to configure log_error_verbosity parameter:

az postgres flexible-server parameter set --name log_error_verbosity --value TERSE

The output shows you whether the value has changed to TERSE.

Local context is turned on. Its information is saved in working directory /home/azuser. You can run az local-context off to turn it off.

Command argument values from local context: --server-name: server184001358, --resource-group: sumuth-flexible-server

Command group "postgres flexible-server parameter" is in preview. It may be changed/removed in a future release.

{

"allowedValues": "terse,default,verbose",

"dataType": "Enumeration",

"defaultValue": "default",

"description": "Sets the verbosity of logged messages.",

"id": "/subscriptions/xxxxxxxxxxxxxxxxxxxxxxxxxxx/resourceGroups/sumuth-flexible-server/providers/Microsoft.DBforPostgreSQL/serversv2/server184001358/configurations/log_error_verbosity",

"name": "log_error_verbosity",

"resourceGroup": "sumuth-flexible-server",

"source": "user-override",

"type": "Microsoft.DBforPostgreSQL/flexibleServers/configurations",

"value": "TERSE"

}
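Before setting an enumeration parameter from a script, it can be useful to check the new value against the allowedValues field returned by the CLI. The sketch below does this for the sample output above. The case-insensitive comparison is an assumption based on the sample, where TERSE was accepted although the list shows terse; the function name is hypothetical.

```python
# Fields copied from the sample parameter output above.
SAMPLE_PARAMETER = {
    "name": "log_error_verbosity",
    "dataType": "Enumeration",
    "allowedValues": "terse,default,verbose",
    "defaultValue": "default",
    "value": "TERSE",
}


def is_allowed_value(parameter, candidate):
    """Check a candidate against the comma-separated allowedValues list.

    Assumption: values are compared case-insensitively.
    """
    allowed = {item.strip().lower() for item in parameter["allowedValues"].split(",")}
    return candidate.lower() in allowed


print(is_allowed_value(SAMPLE_PARAMETER, "TERSE"))
print(is_allowed_value(SAMPLE_PARAMETER, "debug"))
```

This check only makes sense for parameters whose dataType is Enumeration; other data types would need their own validation.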

Use powerful Azure CLI utilities with flexible server CLI

Azure CLI has powerful utilities that can be used with the PostgreSQL Flexible Server CLI commands, from finding the right commands and getting readable output to calling REST APIs.

- Use az find to find the command you are looking for.

- Use the --help argument to get a complete list of commands and subgroups of a group.

- Change the output formatting to table or tsv or yaml formats as you see fit.

- Use az interactive mode, which provides an interactive shell with auto-completion, command descriptions, and examples.

- Use az upgrade to update your CLI and extensions.

- Use the az rest command, which lets you call your service endpoints to run GET, PUT, and PATCH methods in a secure way.

The new experience has been designed to give developers the best possible experience when creating and managing PostgreSQL servers. We'd love for you to try out the Azure Database for PostgreSQL Flexible Server CLI commands and share your feedback on new CLI commands or issues with existing ones.
