by Contributed | Oct 9, 2020 | Azure, Technology

This article is contributed. See the original author and article here.

Some great new announcements and an important certificate change to tell you about this week.

Azure Communication Services (SMS and Telephony) is now available in public preview. There’s an important change to the root certificate authority of the TLS certificates used by Azure services. Conditional Access to the Office 365 Suite is now generally available. And we look at managing Azure Policies as code in GitHub. Let’s go!

https://youtube.com/watch?v=AvXw1380Vq4

Azure Communication Services SMS and Telephony now available in Public Preview

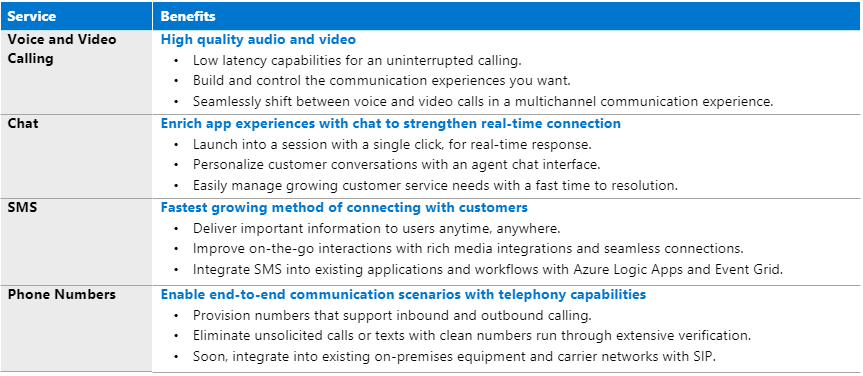

Azure Communication Services makes it easy to add voice and video calling, chat, and SMS text message capabilities to mobile apps, desktop applications, and websites with just a few lines of code. Developer-friendly APIs and SDKs make it easy to create personalized communication experiences quickly, without having to worry about complex integrations.

These capabilities can be used on virtually any platform and device. Build engaging communication experiences with the same secure platform used by Microsoft Teams.

View the announcement

Learn more about Azure Communication Services

IMPORTANT: Azure TLS certificate changes

Microsoft is updating Azure services to use TLS certificates from a different set of Root Certificate Authorities (CAs). This change is being made to comply with one of the CA/Browser Forum Baseline requirements.

This exercise will conclude on October 26, 2020. It will impact customers who use certificate pinning (explicitly specifying a list of acceptable CAs) and customers whose operating systems or runtimes talk to Azure services and may require extra steps to correctly build the certificate chain to the new roots (e.g. Linux, Java, Android).

For more information, see Azure TLS certificate changes

Conditional Access for the Office 365 Suite now in GA

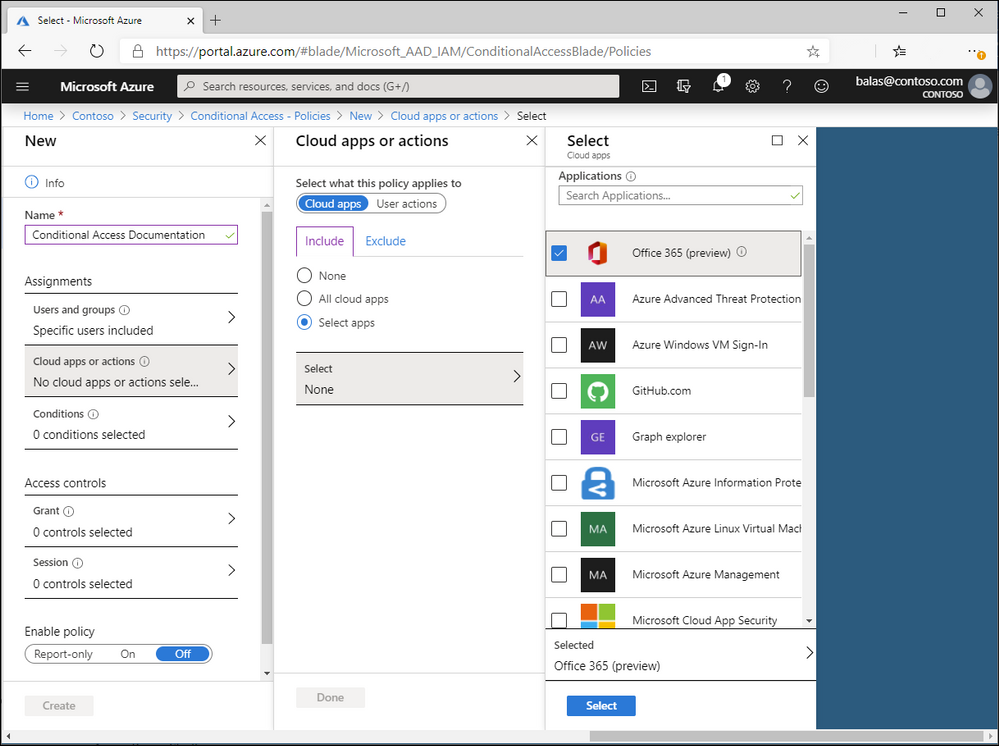

Conditional Access Policies give you a greater level of control over enforcing security requirements when accessing Office 365 applications, but with the apps sharing many underlying services, it can be hard to keep these policies consistent. You can now set conditional access policy requirements across the entire suite of Office 365 apps, including Exchange Online, SharePoint Online, and Microsoft Teams, as well as micro-services used by these well-known apps.

First released in public preview in February 2020, this capability is now generally available.

View the announcement

Learn more about Conditional Access for the Office 365 Suite

Enabling resilient DevOps practices with code to cloud automation

Thanks to Pixel Robots (Discord) for bringing this one to our attention.

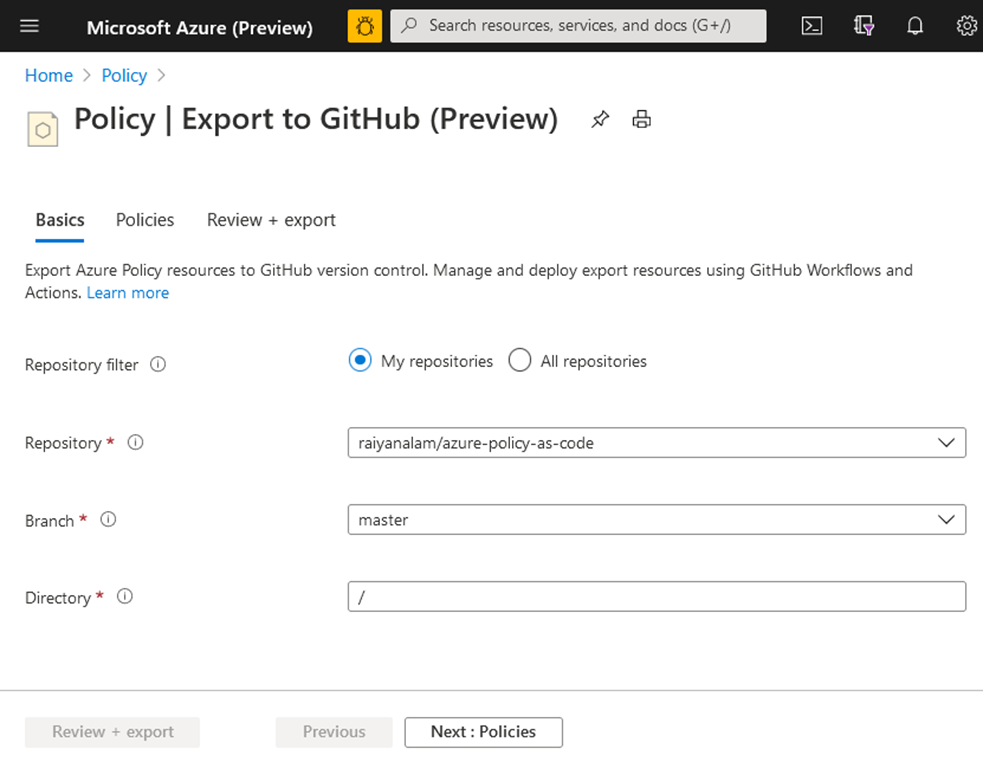

As you progress on your Cloud Governance journey, there is an increasing need to shift from manually managing each policy in the Azure portal to something more manageable, collaborative, and repeatable at enterprise scale. We are announcing that we made the integration between Azure Policy and GitHub even stronger to help you on this journey.

You can now easily export Azure policies to a GitHub repository in just a few clicks. All exported policies will be stored as files in GitHub. You can then collaborate and track changes using version control, and push policy file changes to Azure Policy using the Manage Azure Policy action. See Managing Azure Policy as Code with GitHub to learn more, and go to Azure Policy to access the feature.

View the full product group blog on enabling resilient DevOps practices with code to cloud automation.

MS Learn Module of the Week

Build a cloud governance strategy on Azure

Keeping a Cloud environment controlled and consistent can be challenging, as it expands and as more teams have access to deploy and change resources. Enable teams to have the control they need, within the boundaries you set, and avoid configuration drift by implementing a cloud governance strategy. This module is part of a newly updated set of Azure Fundamentals training, and includes exercises in the free Azure sandbox environment.

After completing this module, you’ll be able to:

– Make organizational decisions about your cloud environment by using the Cloud Adoption Framework for Azure.

– Define who can access cloud resources by using Azure role-based access control.

– Apply a resource lock to prevent accidental deletion of your Azure resources.

– Apply tags to your Azure resources to help describe their purpose.

– Control and audit how your resources are created by using Azure Policy.

– Enable governance at scale across multiple Azure subscriptions by using Azure Blueprints.

Let us know in the comments below if there are any news items you would like to see covered in next week’s show. Az Update streams live every Friday, so be sure to catch the next episode and join us in the live chat.

by Contributed | Oct 9, 2020 | Azure, Technology

On 13th October at 1PM PDT (9PM BST), Mustafa Saifee, a Microsoft Learn Student Ambassador from SVKM Institute of Technology, India, and Dave Glover, a Cloud Advocate from Microsoft, will livestream an in-depth walkthrough of how to develop a secure IoT solution with Azure Sphere and IoT Hub on Learn TV.

The content will be based on a module on Microsoft Learn, our hands-on, self-guided learning platform, and you can follow along at https://docs.microsoft.com/en-us/learn/modules/develop-secure-iot-solutions-azure-sphere-iot-hub/

You can follow along with us live on October 13, or join the Microsoft IoT Cloud Advocates in our IoT TechCommunity throughout October to ask your questions about IoT Edge development.

Meet the presenters

Mustafa Saifee

Microsoft Learn Student Ambassador

SVKM Institute of Technology

Dave Glover

Senior Cloud Advocate, Microsoft

IoT and Cloud Specialist

Session details

In this session Dave and Mustafa will deploy an Azure Sphere application to monitor ambient conditions in a laboratory. The application will monitor the room environment conditions, connect to IoT Hub, and send telemetry data from the device to the cloud. You’ll control cloud-to-device communications and undertake actions as needed.

Learning Objectives

In this session you will learn how to:

- Create an Azure IoT Hub and Device Provisioning Services

- Configure your Azure Sphere application to connect to Azure IoT Hub

- Build and deploy the Azure Sphere application

- View the environment telemetry from the Azure Cloud Shell

- Control an Azure Sphere application using Azure IoT Hub Direct Method

Ready to go

Our livestream will be shown live on this page and on Microsoft Learn TV on Tuesday 13th October 2020, or in the early morning of Wednesday 14th October in APAC time zones.

by Contributed | Oct 9, 2020 | Azure, Technology

Thriving on Azure SQL

Many thanks to our colleagues Mahesh Sreenivas, Pranab Mazumdar, Karthick Pakirisamy Krishnamurthy, Mayank Mehta and Shovan Kar from the Microsoft Dynamics team for their contributions to this article.

Overview

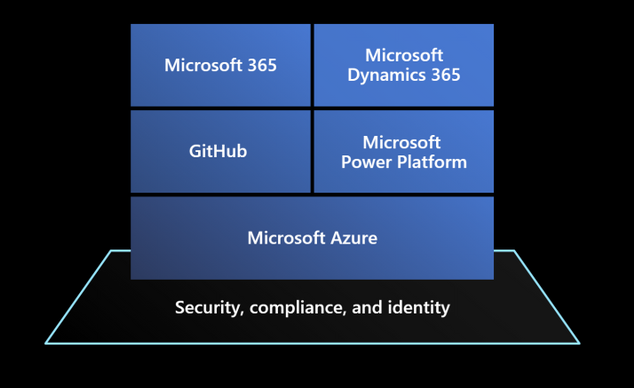

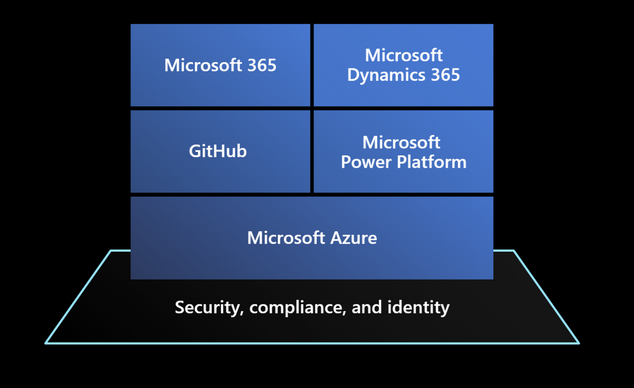

Dynamics 365 is a set of intelligent SaaS business applications that helps companies of all sizes, from all industries, to run their entire business and deliver greater results through predictive, AI-driven insights. Dynamics 365 applications are built on top of the Microsoft Power Platform, which provides a scalable foundation not just for running the Dynamics apps themselves, but also for letting customers, system integrators and ISVs create customizations for specific verticals and connect their business processes to other solutions and systems, leveraging hundreds of pre-existing connectors with a no-code / low-code approach.

Microsoft Power Platform (which also offers services like Power Apps, Power Automate, Power Virtual Agents and Power BI) has been built on top of Microsoft Azure services, like Azure SQL Database, which offer the scalable and reliable underlying compute, data, management and security services that power the entire stack represented in the picture above.

A bit of history

Microsoft Dynamics 365 has its roots in a suite of packaged business solutions, like Dynamics AX, NAV and CRM, running on several releases of Windows Server and SQL Server on customers’ datacenters around the world.

When the Software as a Service paradigm emerged to dominate the business applications industry, Dynamics CRM led the pack, becoming one of Microsoft’s first online services. At the beginning of the SaaS journey, Dynamics services ran on bare-metal servers in Microsoft’s on-premises datacenters. With usage growing every day, into millions of active users, the effort required to deploy and operate all those servers, manage capacity demands, and respond promptly to issues caused by continuously growing customer data volumes (with database sizes ranging from 100 MB to more than 4 TB for the largest tenants) would eventually become unmanageable.

Dynamics was one of the first adopters of Microsoft SQL Server 2012 AlwaysOn to achieve business continuity, but also to provide a flexible way to move customer databases to new clusters by creating additional replicas to rebalance utilization.

Managing so many databases at scale was clearly a complex task, involving the entire database lifecycle from initial provisioning to monitoring, patching and operating this large fleet while guaranteeing availability, and the team learned how to deal with issues like quorum losses and replicas not in a failover-ready state. From a performance perspective, running database instances on shared underlying nodes made it hard to isolate performance issues and provided limited options to scale up or accommodate workload bursts other than moving individual instances to new nodes.

As end customers can run multiple versions of (highly-customized) applications in their environments, generating significantly different workloads, it is no surprise to hear from Mahesh Sreenivas, Partner Group Engineering Manager on the Common Data Service team, that to manage and maintain all this on a traditional platform was “painful for both engineers and end customers”.

The move to Azure and Azure SQL Database

The Dynamics 365 team decided to move their platform to Microsoft Azure to solve these management and operational challenges while meeting customer requirements, ensuring platform fundamentals like availability and reliability, and letting the engineering team focus on adding innovative new features.

The engineering effort from initial designs to production took a couple of years, including migration of customers to the new Azure-based platform, moving from a monolithic code base running on-premises to a world-class, planet-scale service running on Azure.

Common Data Service on Azure SQL Database

The first key decision was to transition from a suite of heterogeneous applications, each one with its own history and technical peculiarities, to a common underlying platform where Dynamics applications would be regular applications, just like those that other ISV companies could build and run. Hence Microsoft Power Platform and its Common Data Service layer were introduced. Essentially, a new no-code, low-code platform built on top of underlying Azure capabilities like Compute, Storage, Network and other specialized services like Azure SQL Database was a way to abstract Dynamics applications from the underlying platform, letting Dynamics developers focus on transitioning to the platform without managing individual resources like database instances.

The same platform today also hosts other services like Power Apps, Power Automate, Power Virtual Agents and Power BI, and it is available for other companies to build their own SaaS applications on top of, from simple no-code solutions to specialized full-code ISV apps that don’t need to worry about how to manage underlying resources like compute and various storage facilities.

By moving a platform that manages around 1M database instances (as of July 2020) to Azure, the Dynamics team learned a lot about how the underlying services work, and also provided a great deal of feedback to other Microsoft teams to make their services better, in a mutually beneficial relationship.

From an architectural perspective, Common Data Service is organized in logical stamps (or scale groups) that have two tiers, compute and data, where the relational data store is built on Azure SQL Database given the team’s previous familiarity with SQL Server 2012 and 2016 on premises. This provides out-of-the-box, pre-configured high availability with an SLA of three (or more) nines, depending on the selected service tier. Business continuity is also supported through features like Geo-restore, Active Geo-replication and Auto Failover Groups.

Azure SQL Database also helped the team significantly by reducing database corruption episodes at the table, index or backup level compared to running many databases on a single, shared SQL Server instance on-premises. Similarly, the many hours of work previously required to patch firmware, operating systems and SQL Server on physical machines have been eliminated, leaving the team to manage only the application layer and its data.

Azure SQL Database Elastic Pools

Once the team had landed on Azure SQL Database, the second key decision was to adopt Elastic Pools to host their database fleet. Azure SQL Database Elastic Pools are a simple and cost-effective solution for managing and scaling multiple databases that may have varying and unpredictable usage demands. Databases in an elastic pool are on a single logical server, and share a given amount of resources at a set price. SaaS application developers can optimize the price-performance for hundreds of databases within a prescribed resource budget, while delivering performance elasticity for each database and controlling multi-tenancy by setting min-max utilization thresholds for each tenant. At the same time, they enforce security and isolation by providing separate access control policies for each database. “By moving to Azure SQL Database Elastic Pools, our team doesn’t need to over-invest in this aspect of managing replication because it’s handled by the Azure SQL Database service,” explains Mahesh.

Microsoft Power Platform uses a separate database for each tenant using a given service within its portfolio.

The “Spartan” resource management layer

Given the wide spectrum of customer industries, sizes and (customized) workloads, one of the key requirements is the ability to allocate and manage these databases across a fleet of elastic pools efficiently, maximizing resource utilization and manageability. To achieve this goal, three aspects are critical to master:

- Flexibility in sizing and capacity planning

- Agility in scaling resources for individual tenants

- Optimized price-performance

While the Azure SQL Database platform provides the foundations to fully manage these aspects (for example, online service tier scaling, the ability to move databases between pools, the ability to move from single databases to pools and vice versa, and a multitude of price-performance choices), the Dynamics team created a dedicated management layer to automate these operations based on application-defined conditions and policies. “Spartan” is that management layer. Designed to reduce manual effort to the bare minimum, it is a scalable micro-services platform (implemented as an ARM Resource Provider) that takes care of the entire lifecycle of their database fleet.

Spartan is an API layer that takes care of database CRUD operations (create, read, update, delete), as well as all other operations such as moving a database between elastic pools to maximize resource utilization, load balancing customer workloads across pools and logical servers, managing backup retention and restoring databases to a previous point in time. The underlying storage assigned to databases and pools is also managed automatically by the platform to avoid inefficiencies and maximize density. What may appear to be a rare operation, like shrinking a database in production, is a common task for a platform that needs to operate more than 1M databases and optimize costs.

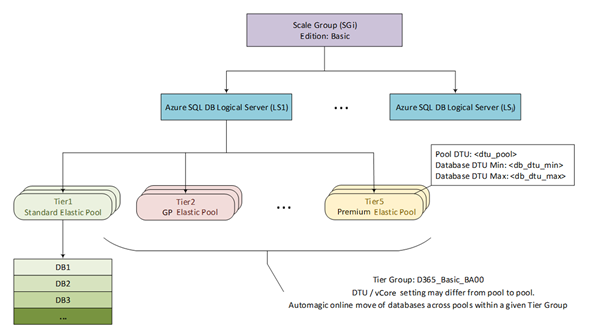

Elastic Pools are organized in “tiers”, where each tier provides a different configuration in terms of the underlying service tier used (General Purpose, Business Critical) and the compute size allocated, so that end customer databases always run at the optimal price-performance level. Each tier also controls min-max settings for its associated databases and defines the optimal density of databases per pool, in addition to other details such as firewall settings.

Figure 1 Azure SQL Database layout per Scale Group

The picture above represents this logical organization of Elastic Pools into tiers and shows the combination of DTU and vCore purchasing models that the Dynamics team is using to find the best tradeoff between granular resource allocation and cost optimization.

For very large customers, the platform can also move these databases out of a shared pool into a dedicated Azure SQL Single Database with the ability to scale to the largest compute sizes (e.g. M-Series with up to 128 vCore instances).

The efficiency of this platform is remarkable: the Dynamics team manages the entire platform with 2 dedicated engineers, who focus on operating and improving the Spartan platform rather than managing individual databases.

Dynamics 365 and Azure SQL Database: better together!

As mentioned, engineers from the Dynamics and Azure teams have worked hard together to improve the underlying platforms during this journey. Some platform-wide improvements like Accelerated Networking, introduced to significantly reduce network latency between compute nodes and other Azure services, have been heavily advocated by the Dynamics team, who benefitted greatly from them for their data-centric, data-intensive applications.

In Azure SQL Database, the Dynamics team influenced the introduction of the vCore model, which helps find the right ratio between compute cores and database storage; the two can now scale independently to optimize costs and performance.

To help get even more out of the relational database resources in Common Data Service, the team implemented Read Scale-Out, which helps boost performance by offloading part of the workload placed on primary read-write database replicas. Like most data-centric services, the workload in Common Data Service is read-heavy, meaning that it gets a lot more reads than writes. With Read Scale-Out, if a call into Common Data Service is going to result in a select rather than an update, we can route that request to a read-only replica and scale out the read workload.
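The routing idea described above can be sketched roughly as follows. This is a minimal illustration in Python, not the Common Data Service implementation: the function names, the example server and database names, and the naive "starts with SELECT" check are all assumptions made for the example. `ApplicationIntent=ReadOnly` is the connection-string keyword that directs a session to a read-only replica when Read Scale-Out is enabled on the database.

```python
# Hypothetical connection-string prefix; server and database names are placeholders.
BASE = "Server=tcp:example-server.database.windows.net,1433;Database=example_db;"


def is_read_only(sql: str) -> bool:
    """Naive classification (an assumption): statements that start with SELECT are reads.

    A real router would need more care, e.g. SELECT ... INTO writes data.
    """
    return sql.lstrip().upper().startswith("SELECT")


def choose_connection_string(sql: str, base: str = BASE) -> str:
    """Return a connection string that targets a read-only replica for reads."""
    intent = "ReadOnly" if is_read_only(sql) else "ReadWrite"
    return f"{base}ApplicationIntent={intent};"


if __name__ == "__main__":
    print(choose_connection_string("SELECT name FROM account"))
    print(choose_connection_string("UPDATE account SET name = 'x'"))
```

Keeping the decision in one small function means the rest of the application does not need to know which replica serves a request; only the connection string changes.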

Given the vast variety of customer workloads and scale, Dynamics 365 apps have also been a great sparring partner for tuning and improving features like Automatic Tuning with automatic plan correction, automatic index management and the intelligent query processing set of features.

Imagine having queries timing out in a 1 million database fleet: is it for lack of the right indexing strategy? A query plan regression? Something else?

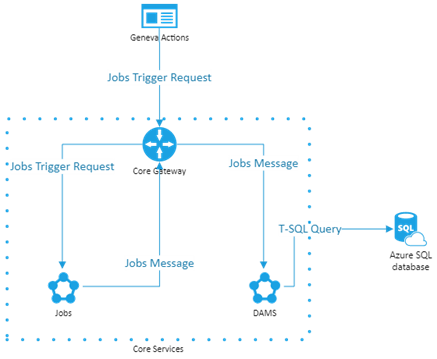

To help Dynamics engineers and the support organization during troubleshooting and maintenance events, another micro-service called Data Administration and Management Service (DAMS) has been developed to schedule and execute maintenance tasks and jobs, like creating or rebuilding indexes, to adapt dynamically to changes in customer workloads. These tasks can span areas like performance improvements, transaction management, diagnostic data collection and query plan management.

Figure 2 DAMS Architecture

With help from Microsoft Research (MSR), the Dynamics team has ported SQL Server’s Database Tuning Advisor (DTA) to Azure SQL and integrated it into the microservice. DTA is a platform for evaluating queries and generating index and statistics recommendations to tune the query performance of the most critical database workloads.

Like any other customer database in Azure SQL Database, Dynamics 365 databases have features like Query Store turned on by default. This feature provides insights into query plan choices and performance, and simplifies performance troubleshooting by helping engineers quickly find performance differences caused by query plan changes.

On top of these capabilities, the Dynamics team also created an optimization tool that they share with their end customers to validate whether their own customizations are implemented properly. It detects things like how many data controls are placed in their visualization forms and provides recommendations that adhere to best practices.

They also proactively monitor customer workloads to understand critical use cases and detect new patterns that customers may introduce, and make sure that the platform can run them efficiently.

Working side by side with Azure SQL Database engineers, the Dynamics team helped improve many areas of the database engine. One example is related to extremely large query plan caches (100k+ plans), a common problem for complex OLTP workloads, where spinlock contention on plan recompilations was creating high CPU utilization and inefficiencies. Solving this issue helped thousands of other Azure SQL Database customers running on the same platform.

Other areas they helped to improve were Constant Time Recovery, which makes the failover process much more efficient for millions of databases, and managed lock priority, which reduces blocking during automatic index creation.

In addition to what Azure SQL Database provides out of the box, the Dynamics team also developed specific troubleshooting workflows for customers escalating performance issues. As an example, Dynamics support engineers can run Database Tuning Advisor on a problematic customer workload and review specific recommendations that could be applied to mitigate customer issues.

A look into the future

Dynamics 365 was one of the prime influencers for increasing Azure SQL Database max instance size from 1 TB to 4 TB, given the scale of some of the largest end customers. That said, even 4 TB is now a limit on their ability to scale, so the Dynamics team is looking into Azure SQL Database Hyperscale as the next-generation relational storage tier for their service. Virtually unlimited database size, the separation between compute and storage sizing, and the ability to leverage read replicas to scale out customer workloads are the characteristics most critical to the team.

The Dynamics team is working side by side with the Azure SQL team to test and validate Azure SQL Database Hyperscale on all the challenging scenarios mentioned previously, and this collaboration will continue to benefit not only the two teams, but also all the other customers running on the platform.

by Contributed | Oct 8, 2020 | Azure, Technology

While the cloud provides a lot of agility, flexibility and ease in creating and managing your resources, it can be very costly if it’s not managed properly. Azure Advisor analyzes your configurations and usage telemetry and offers personalized, actionable recommendations to help you optimize your Azure resources for reliability, security, operational excellence, performance, and cost. The icing on the cake is that Azure Advisor is available at no additional cost.

It provides:

- Best practices to optimize your Azure workloads

- Step-by-step guidance and quick actions for fast remediation

- One place to review and act on recommendations from across Azure

- Alerts to notify you about new and available recommendations

Key things:

Optimize your deployments with personalized recommendations – Advisor provides relevant best practices to help you improve reliability, security, and performance, achieve operational excellence, and reduce costs. Configure Advisor to target specific subscriptions and resource groups, to focus on critical optimizations. Access Advisor through the Azure portal, the Azure Command Line Interface (CLI), or the Advisor API. Or configure alerts to notify you automatically about new recommendations.

Quickly and easily take action – Advisor is designed to help you save time on cloud optimization. The recommendation service includes suggested actions you can take right away, postpone, or dismiss. Advisor Quick Fix makes optimization at scale faster and easier by allowing users to remediate recommendations for multiple resources simultaneously and with only a few clicks. Recommendations are prioritized according to our best estimate of significance to your environment, and you can share them with your team or stakeholders.

Find all your optimization recommendations in one place – Azure offers many services that provide recommendations, including Azure Security Center, Azure Cost Management, Azure SQL DB Advisor, Azure App Service, and others. Advisor pulls in recommendations from all these services so you can more easily review them and take action from a single place.

by Contributed | Oct 8, 2020 | Azure, Technology

Replication in a MySQL setup copies data from a master server to one or more MySQL replica servers. There are several reasons for using MySQL replication:

- It spreads read load across replica servers to improve performance;

- It enhances business security, continuity and performance through cross-region read replicas: the replica can be in a different region from the master server;

- BI and analytical workloads can run on a replica server without affecting the performance of the master server.

Regardless of the scenario and the number of Azure Database for MySQL read replicas configured, replication is asynchronous, so there is always some latency between the master and replica servers.

Replication latency due to IO or SQL errors

When replication is started on a replica server, two threads run:

- IO thread that connects to the master server, reads the binary logs and copies them to a local file that is called relay log;

- SQL thread that reads the events from relay log and applies them to the database.

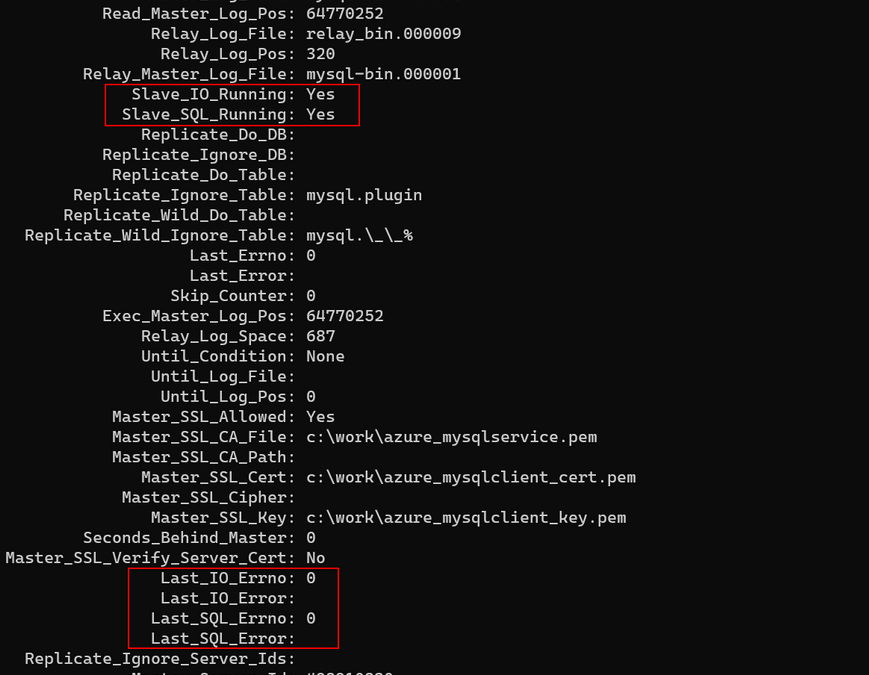

The first step in troubleshooting replication latency is to check whether the latency is due to the IO thread or the SQL thread. To do so, execute the following statement on the replica server:

SHOW SLAVE STATUS

A typical output will look like:

Figure 1. SHOW SLAVE STATUS output

In the output, if Slave_IO_Running: Yes and Slave_SQL_Running: Yes, then replication is running and is not broken. Check Last_IO_Errno/Last_IO_Error and Last_SQL_Errno/Last_SQL_Error, which hold the error number and error message of the most recent error that caused the IO thread or the SQL thread, respectively, to stop. An error number of 0 and an empty message mean there is no error. Any non-zero error number must be investigated; the MySQL server error message reference can be checked for the given error number.
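The checks described above can be expressed as a small helper. The sketch below is illustrative only: it assumes the output of SHOW SLAVE STATUS has already been fetched into a Python dictionary keyed by column name (how it is fetched is left out), and the function name `diagnose_replication` is hypothetical.

```python
from typing import Dict, List


def diagnose_replication(status: Dict[str, object]) -> List[str]:
    """Return human-readable findings for one row of SHOW SLAVE STATUS output.

    An empty list means both threads are running and no error is recorded.
    """
    findings: List[str] = []
    for thread in ("IO", "SQL"):
        running = status.get(f"Slave_{thread}_Running")
        # Errno may arrive as an int or a string depending on the client library.
        errno = int(status.get(f"Last_{thread}_Errno", 0) or 0)
        error = status.get(f"Last_{thread}_Error", "")
        if running != "Yes":
            findings.append(f"{thread} thread is not running")
        if errno != 0:
            findings.append(f"{thread} thread error {errno}: {error}")
    return findings


if __name__ == "__main__":
    sample = {
        "Slave_IO_Running": "Yes",
        "Slave_SQL_Running": "No",
        "Last_IO_Errno": 0,
        "Last_IO_Error": "",
        "Last_SQL_Errno": 1062,
        "Last_SQL_Error": "Duplicate entry",
    }
    for finding in diagnose_replication(sample):
        print(finding)
```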

Replication latency due to increased connections

A sudden spike in the number of connections increases CPU usage for the simple reason that connections must be processed. When there is already a workload on the Azure Database for MySQL replica server and a sudden spike of connections occurs, the CPU has to process the new connections and, as a result, replication latency can increase. In such a case, the first step is to optimize connectivity by using a connection pooler like ProxySQL.

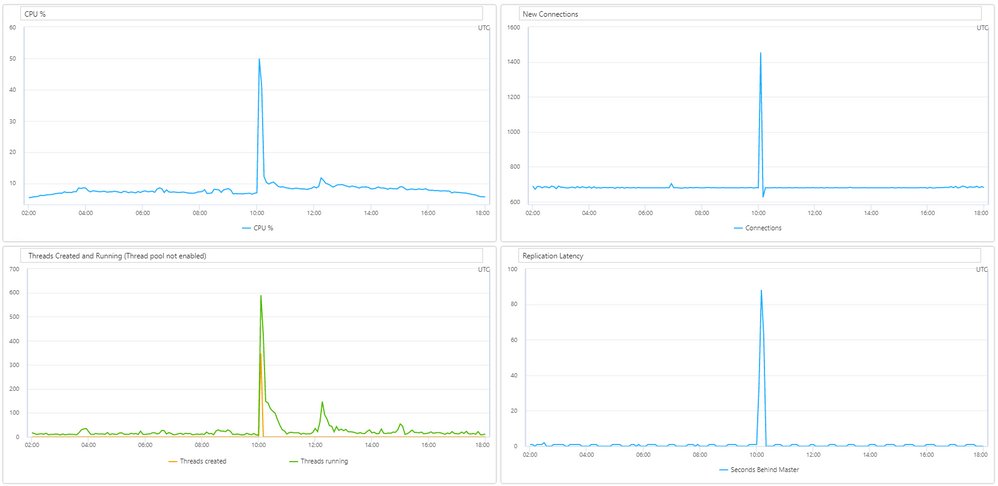

Figure 2. Replication latency is increasing on new connections spike

Because ProxySQL is in most cases deployed in an AKS cluster with multiple nodes, or in VM scale sets, to avoid a single point of failure, connection spikes can still sometimes occur. Out of the box, MySQL assigns a thread to each connection; thread pools, which are supported from MySQL version 5.7, can be used instead. We therefore suggest enabling thread pooling on each MySQL replica server and configuring the thread_pool_min_threads and thread_pool_max_threads parameters based on the workload.

By default, thread_pool_max_threads = 65535, a value that brings no improvement because it allows up to 65535 threads to be created, which is no different from the normal MySQL behavior. Instead, this parameter should be lowered to a value suitable for the workload. For example, if it is lowered to 200, the thread pool will process a maximum of 200 connection threads, even if a couple of thousand new connections arrive.
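The effect of the cap can be seen with a deliberately simplified model. The Python sketch below is a toy calculation, not a description of how the MySQL thread pool schedules work internally; the function name `worker_threads` is hypothetical, and only the parameter names and values come from the text.

```python
def worker_threads(
    connections: int,
    thread_handling: str,
    thread_pool_max_threads: int = 65535,
) -> int:
    """Toy model: how many threads may be processing connections at once."""
    if thread_handling == "one-thread-per-connection":
        # Every connection gets its own thread.
        return connections
    if thread_handling == "pool-of-threads":
        # The pool never grows beyond its configured maximum.
        return min(connections, thread_pool_max_threads)
    raise ValueError(f"unknown thread_handling value: {thread_handling}")


if __name__ == "__main__":
    spike = 3000
    print(worker_threads(spike, "one-thread-per-connection"))
    print(worker_threads(spike, "pool-of-threads"))
    print(worker_threads(spike, "pool-of-threads", 200))
```

In this model, a spike of 3000 connections produces 3000 threads with the default cap in both modes, which is why leaving thread_pool_max_threads at 65535 changes nothing in practice, while a cap of 200 bounds the work the CPU has to take on.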

Once thread pools are properly implemented on Azure Database for MySQL replica servers, the thread pool limits the number of threads processed during a connection spike, so CPU usage does not increase and replication is not affected by the high number of new connections. We have seen big improvements in replication latency after implementing thread pools.

Figure 3. CPU and Replication latency not increasing on new connections spike (thread pool enabled)

Another key point is that to enable thread pools in Azure Database for MySQL, the thread_handling parameter must be changed from “one-thread-per-connection” to “pool-of-threads”. Because this parameter is static, a server restart is required for the change to apply. Although thread_pool_max_threads is a dynamic parameter, reducing its value is a special case: when it is lowered, for example from 1000 to 200, the MySQL server will not enlarge the thread pool any further, but existing threads will not be terminated either. If the thread pool already contains 1000 threads, a server restart is needed for the new setting to take effect.

Replication latency due to no Primary or Unique key on a table

Azure Database for MySQL uses row-based replication, meaning that the master server writes events to the binary log about individual table row changes, while the SQL thread on the replica server applies those changes to the corresponding table rows. Having no primary or unique key on a table is also a common cause of replication latency, as some DML queries will need to scan all table rows to apply the changes from the SQL thread.

In MySQL, the primary key has an associated index that ensures fast query performance, and it cannot include NULL values. Also, for the InnoDB storage engine, the table data is physically organized to do ultra-fast lookups and sorts based on the primary key column or columns. Therefore, to ensure that the replica is able to keep up with changes from the source, we recommend adding a primary key to tables on the source server before creating the replica server, or re-creating the replica server if you already have one.
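One way to find the tables that need attention is to compare the list of tables with the list of key constraints. The sketch below assumes both lists have already been read from the server (for instance from the information_schema.TABLES and information_schema.TABLE_CONSTRAINTS views) into plain Python tuples; the function name `tables_without_key` and the sample schema are hypothetical.

```python
from typing import Iterable, List, Tuple

# Constraint types that let the replica locate a row without a full table scan.
KEY_TYPES = {"PRIMARY KEY", "UNIQUE"}


def tables_without_key(
    tables: Iterable[Tuple[str, str]],
    constraints: Iterable[Tuple[str, str, str]],
) -> List[Tuple[str, str]]:
    """Return (schema, table) pairs that have neither a primary nor a unique key.

    tables:      (schema, table) pairs
    constraints: (schema, table, constraint_type) triples
    """
    keyed = {(schema, table) for schema, table, ctype in constraints if ctype in KEY_TYPES}
    return sorted(t for t in tables if t not in keyed)


if __name__ == "__main__":
    tables = [("shop", "orders"), ("shop", "audit_log")]
    constraints = [
        ("shop", "orders", "PRIMARY KEY"),
        ("shop", "audit_log", "FOREIGN KEY"),
    ]
    print(tables_without_key(tables, constraints))
```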

Replication latency due to long running queries

It is possible for the workload on the replica server to be the cause of the latency, because the SQL thread cannot keep up with the IO thread. If this is the case, the slow query log should be enabled to help troubleshoot the issue. Slow queries can increase resource consumption or slow down the server, so the replica will not be able to catch up with the master.

On the other hand, slow queries on the master server can contribute equally to the latency. For instance, when a long-running transaction on the master completes, the data is propagated to the replica server, but because the transaction already took a long time to execute, there is already a delay. In such a case the latency is expected, so long-running queries should be identified and, where possible, their run time reduced, either by optimizing the query itself or by breaking it into smaller queries.

Replication latency due to DDL queries

Some DDL queries, especially ALTER TABLE can require a lock on a table, therefore should be used with caution in a replication environment. Depending on the ALTER TABLE statement executed, it might allow concurrent DML operations, for more details check MySQL Online DDL Operations. Also, some operations like CREATE INDEX will only finish when all transactions that accesses the table completed. In a replication scenario first the DDL command will be executed on the master server and only when completed will be propagated to the replica server(s).

Under a heavy workload on both the master and read replica servers, an ALTER TABLE statement on the master server can take time to complete, and the size of the table can be a contributing factor as well. When the operation completes, it is propagated to the read replica server, where, depending on the ALTER statement, it might require a lock on the table. If a lock is required, the binary log will not be written, so the replication lag starts to increase from the moment the ALTER TABLE statement is propagated to the replica until the lock is released. In such a case, the completion time also depends on the workload on the replica and on any long-running transactions on the table, which increase the time further.
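To see whether sessions on the replica are currently blocked on such a lock, a sketch like the following can help. It filters the process list for lock-wait states such as "Waiting for table metadata lock". The filter pattern is an illustrative choice rather than an exhaustive list of states.

```sql
-- Show sessions that are waiting on a lock, longest wait first.
SELECT id, user, db, command, time AS seconds_in_state, state, info
FROM information_schema.processlist
WHERE state LIKE 'Waiting for%lock%'
ORDER BY time DESC;
```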

Dropping, renaming, and creating indexes should use the INPLACE algorithm for ALTER TABLE, which avoids copying table data, although it may still rebuild the table. The INPLACE algorithm typically supports concurrent DML, but an exclusive metadata lock on the table may be taken briefly during the preparation and execution phases of the operation. For a CREATE INDEX statement, the ALGORITHM and LOCK clauses can be used to influence the table copying method and the level of concurrency for reading and writing. Adding a FULLTEXT or SPATIAL index, however, will still prevent DML operations. The following example creates an index with the ALGORITHM and LOCK clauses:

ALTER TABLE table_name ADD INDEX index_name (column), ALGORITHM=INPLACE, LOCK=NONE;

Unfortunately, for a DDL statement that requires a lock, replication latency cannot be avoided. Instead, perform these types of DDL operations when the load is lower, for instance during nighttime, to reduce potential customer impact.

Replication latency due to replica server lower SKU

In Azure Database for MySQL, read replicas are created with the same server configuration as the master. The replica server configuration can be changed after the replica has been created. However, if the replica server is downgraded, the workload can cause higher resource consumption, which in turn can lead to replication latency. Therefore, we recommend keeping the replica server’s configuration at values equal to or greater than those of the source to ensure that the replica can keep up with the master.

If you have any questions, please reach out to the Azure Database for MySQL team at AskAzureDBforMySQL@service.microsoft.com.

by Contributed | Oct 8, 2020 | Azure, Technology

Omar Khan – General Manager of Azure Customer Success Marketing

Microsoft Learn and Pluralsight are joining forces to help you unlock the power of Microsoft Azure and to help you think further outside the box than ever before. Join us at Pluralsight LIVE, an annual technology skills conference, which is going virtual this year. All you need is an internet connection and a drive to learn!

Register for Pluralsight LIVE and connect with us at the Microsoft Azure “virtual booth,” where you’ll find lots of resources, live chat, trivia games, and opportunities to engage with folks from Microsoft.

Plus, Microsoft is presenting an in-depth session at this year’s LIVE, Build your Azure skills with Microsoft Learn and Pluralsight. This session, on Tuesday, October 13, 2020, at 1 PM Mountain Time, explores how you can get the skills you need to meet the challenges of today’s cloud-based, digital world. You’ll learn what’s new in Azure and how building the right skills on Azure can help you to embrace digital transformation. We’ll explain how the Microsoft Learn offering works and how we partner with Pluralsight to support your skilling process. And we’ll help you get started identifying the right certifications for you or your team.

Decide what your skilling journey looks like

Before you explore how to use the resources of Microsoft Learn and Pluralsight together to learn and master Azure, first step back and examine your own goals and those of your organization. Each person, and each company, has a unique journey. Your organization’s digital transformation journey may define your own learning journey. Depending on your business or personal goals, you might identify different skilling needs, for example:

• You or members of your team might need foundational understanding of an Azure technology.

• You might have some roles on your team that need specific technical skills to help people succeed in their jobs.

• You might be starting a new project that requires you to use and combine multiple Azure technologies to deliver the best solution.

After you’ve assessed your learning and skilling needs, you can create a learning plan for yourself or your team that combines the learning experiences available to you through digital skilling, training events, and instructor-led training.

Microsoft Learn offers many training options so you can choose to learn in a style that fits you or your team best, as you identify roles, skills, and learning paths to support your complete learning journey—from building a foundation for skills validation to gaining recognition with Microsoft Certifications. Use free, self-paced learning or attend training events. Or you can attend in-depth instructor-led training, either virtually or in person, using Microsoft Official Courseware delivered by Microsoft Learning Partners. Combine these learning experiences to customize the best learning strategy for you.

Now let’s look at how you can use Microsoft Learn and Pluralsight together to make the most of your learning experience.

Combine Microsoft Learn and Pluralsight to intensify your tech skills on Azure

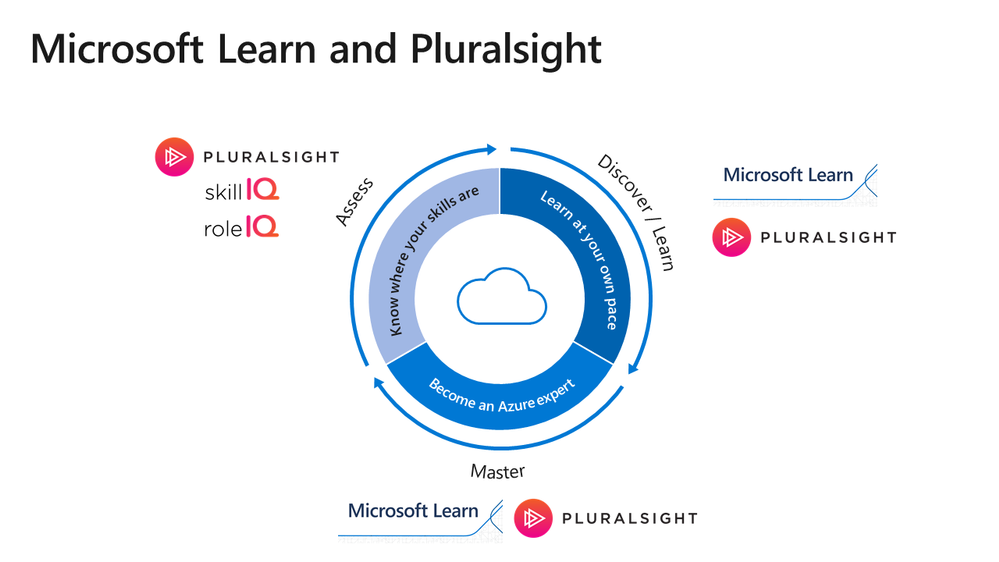

Microsoft Learn and Pluralsight offer complementary options to help you gain Azure expertise. Use them together for an integrated learning experience.

Start by determining where you are:

• To know where your Azure skills stand, use Pluralsight Skill IQ.

• Measure your proficiency in your role, with Pluralsight Role IQ.

After you identify any gaps in your knowledge, focus your time on discovering what you need to learn instead of searching for content or reviewing what you already know.

Combine features from both platforms to enhance your learning. Whether you learn better reading, watching videos, practicing in sandbox environments, or with instructor-led training, use Microsoft Learn and Pluralsight together, wherever you are.

Want to prove your skills by earning an Azure certification? Microsoft Learn and Pluralsight are there to support your journey.

• Prepare using learning paths on Microsoft Learn and Pluralsight.

• Use instructor-led training on Microsoft Learn and Pluralsight to build foundational skills and reinforce what you already know.

Already certified? Challenge yourself to earn another certification. Learning is an ongoing journey. Create your own learning strategy with instruction in both Microsoft Learn and Pluralsight. The following infographic shows you how you can assess, discover, and master the skills you need by combining these helpful resources.

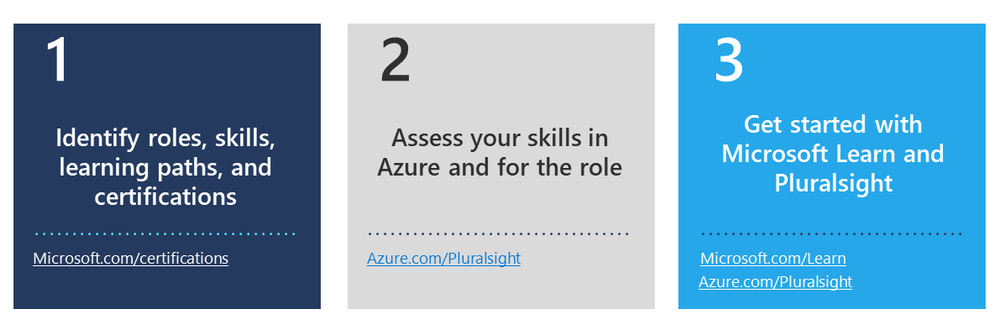

Time to start learning!

Microsoft Learn and Pluralsight are the leading complementary learning platforms to help you skill up on—and master—Azure and to help you get recognized and take the next step in your career. Check out Microsoft Learn for instructor-led or self-paced interactive training and for hands-on learning, and go to Pluralsight for videos, skills assessments, and more. Create a custom Azure learning path, learn at your own pace, and prepare for Azure certifications—all on your way to becoming an Azure expert. Are you ready? One, two, three—let’s go!
