by Contributed | Dec 2, 2020 | Azure, Microsoft, Technology

This article is contributed. See the original author and article here.

For many people, hands-on experience is often the best way to learn and evaluate data tools. I’ve been working with a colleague from our Azure team, Kunal Jain, to put together an end-to-end Azure Synapse and Power BI solution using 120+ million rows of real CMS Medicare Part D Data that is available for use in the public domain. If you’re not highly technical or have never used Azure or Power BI before, you can still deploy this solution in a few simple steps using an Azure ARM template. We also provide a video that walks you through a paint-by-numbers tutorial. The Azure ARM template will automatically:

- Create Azure Data Lake, Azure Data Factory, and Azure Synapse

- Pull the raw data from CMS into a Data Lake using Azure Data Factory

- Shape the data and create a dimensional model for Azure Synapse

- Deploy the solution to Azure Synapse, including performance tuning settings

The entire process takes about an hour and a half to run, with most of that time spent waiting for the scripts from the ARM template to finish. Once the solution is deployed, another video walks you through connecting it to a pre-built Power BI report template. The whole process should take about 1-2 hours with no code required, and at the end you can review and evaluate an end-to-end Azure and Power BI solution using real CMS Medicare Part D Data.

Here is a link to the GitHub site: https://github.com/kunal333/E2ESynapseDemo

While the source CMS data is real public healthcare data, the intent of this project is to provide you with a simple end-to-end solution for the purposes of learning, demos, and tool evaluation. We intend to enhance and build upon this solution in the future, but it is not a supported solution and is not intended for production use.

Below is a tutorial video for deploying the solution. Note that this is designed to be low code, with only a few things to cut and paste. All you need is an Azure account, and you can pause the Synapse instance or delete the entire Resource Group at any time. There are also simple instructions on the GitHub page.

Here is another tutorial video describing how to connect the Power BI template file containing the business logic:

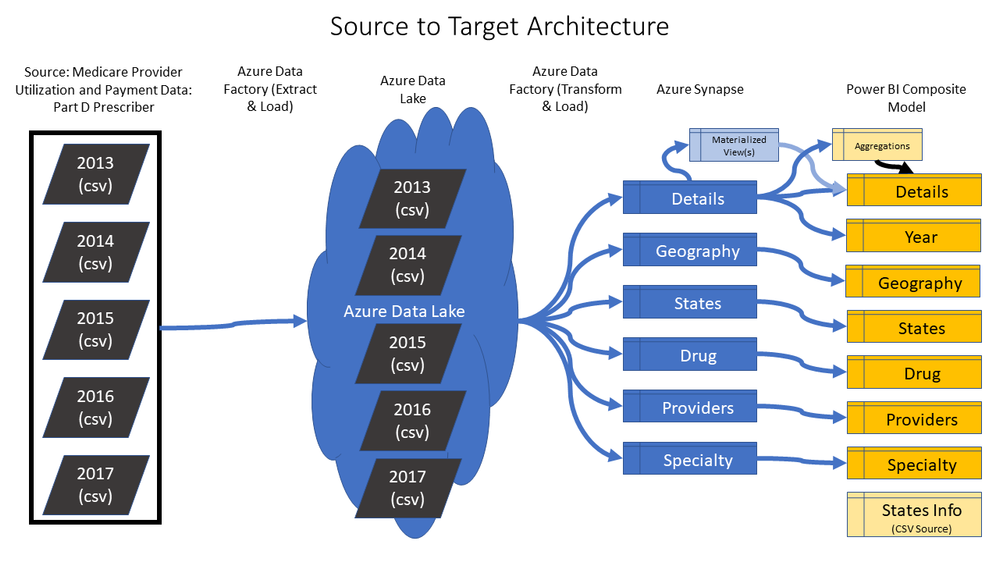

The following diagram summarizes the steps of the whole process:

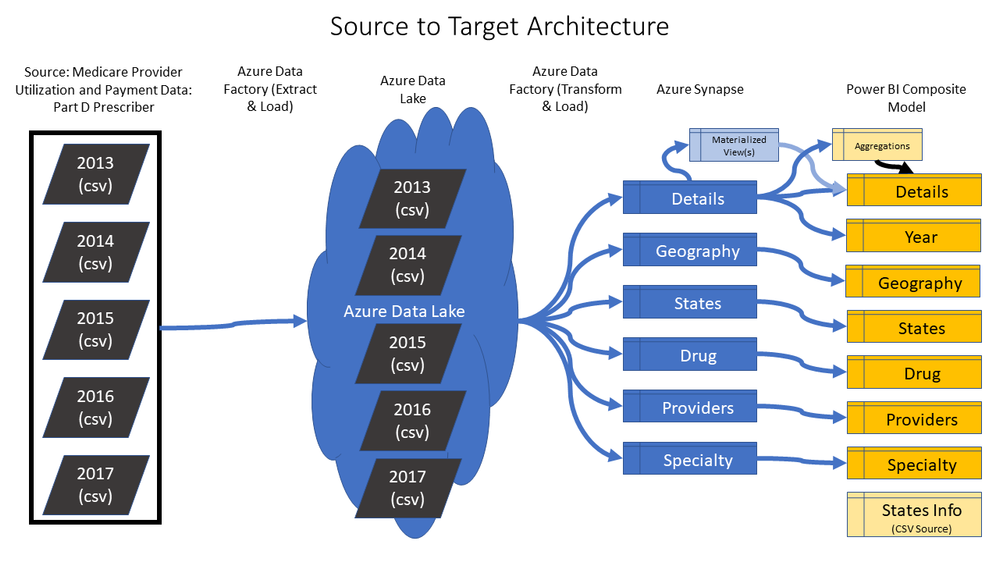

Azure Data Factory, along with Power BI, creates the following logical model that enables highly performant end-user queries for complicated questions about the data. Notice that a CSV file is also added to the Power BI layer to demonstrate that custom criteria from a business user can be used to query the Synapse data:

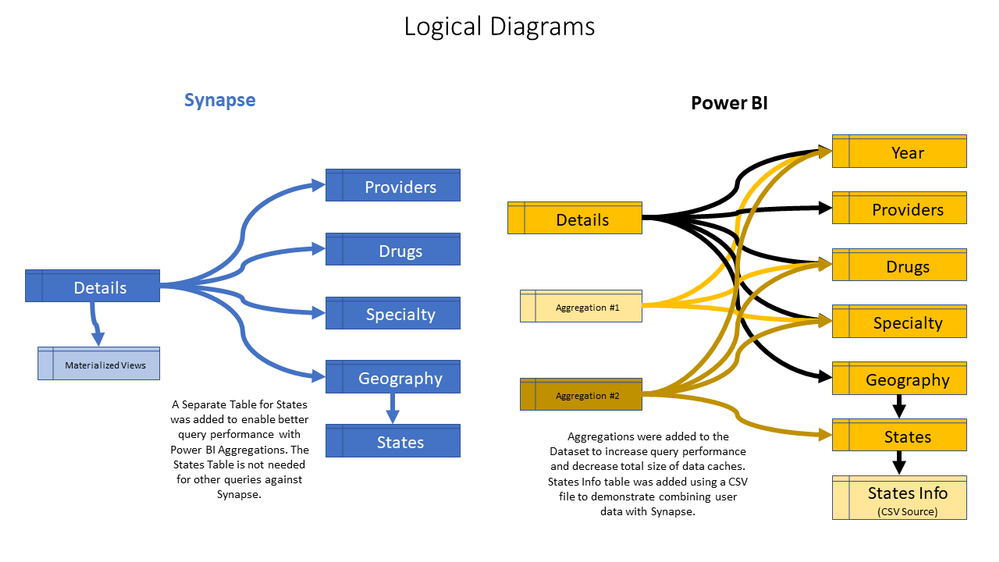
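To make the CSV-criteria idea concrete, here is a minimal Python sketch of what that filtering amounts to. It is not how the Power BI template implements it; the column name `drug_name`, the sample drug names, and the costs are hypothetical placeholders, and the in-memory rows stand in for data that would actually come from Synapse.

```python
import csv
import io

# Hypothetical criteria file a business user might maintain: one drug name per row.
CRITERIA_CSV = """drug_name
DrugA
DrugC
"""

# Hypothetical fact rows standing in for data queried from Synapse.
FACT_ROWS = [
    {"drug_name": "DrugA", "total_cost": 120.0},
    {"drug_name": "DrugB", "total_cost": 75.5},
    {"drug_name": "DrugC", "total_cost": 30.25},
]


def load_criteria(csv_text):
    """Read the user-supplied CSV and return the set of values to filter on."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["drug_name"].strip() for row in reader}


def filter_by_criteria(rows, criteria):
    """Keep only the fact rows whose drug_name appears in the criteria set."""
    return [row for row in rows if row["drug_name"] in criteria]


selected = filter_by_criteria(FACT_ROWS, load_criteria(CRITERIA_CSV))
print(sum(row["total_cost"] for row in selected))
```

In the report, a relationship between the CSV table and the fact table plays roughly the role of `filter_by_criteria`, so the business user only edits the CSV.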

Calculations have been added to the Power BI Semantic Layer to enable complex analytics such as Pareto Analysis.
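The idea behind a Pareto analysis can be sketched in a few lines of Python: rank items by their contribution, accumulate their share of the total, and find the smallest set of top items that reaches a threshold (80% by default). This illustrates the technique only and is not the calculation used in the report; the item names and values are hypothetical.

```python
def pareto(values_by_item, threshold=0.8):
    """Return (cumulative_shares, vital_few) for a dict of item -> value.

    cumulative_shares lists (item, running share of the total) from the largest
    contributor down; vital_few is the shortest prefix of that ranking whose
    cumulative share reaches the threshold.
    """
    total = sum(values_by_item.values())
    if total <= 0:
        raise ValueError("values must sum to a positive total")
    ranked = sorted(values_by_item.items(), key=lambda kv: kv[1], reverse=True)

    cumulative_shares, running = [], 0
    for item, value in ranked:
        running += value
        cumulative_shares.append((item, running / float(total)))

    vital_few = []
    for item, share in cumulative_shares:
        vital_few.append(item)
        if share >= threshold:
            break
    return cumulative_shares, vital_few


# Hypothetical total cost per drug, in arbitrary units.
costs = {"A": 50, "B": 30, "C": 10, "D": 6, "E": 4}
shares, top = pareto(costs)
print(top)  # → ['A', 'B']
```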

Below is a screenshot of the pre-built Power BI report:

More information about the solution is available at the GitHub site: https://github.com/kunal333/E2ESynapseDemo

If you deploy this solution, we’d appreciate it if you could take the time to provide some feedback. What was your experience with the ARM template? How do you plan to use this solution? What types of similar solutions could we provide in the future that would be valuable?

by Contributed | Dec 2, 2020 | Azure, Microsoft, Technology

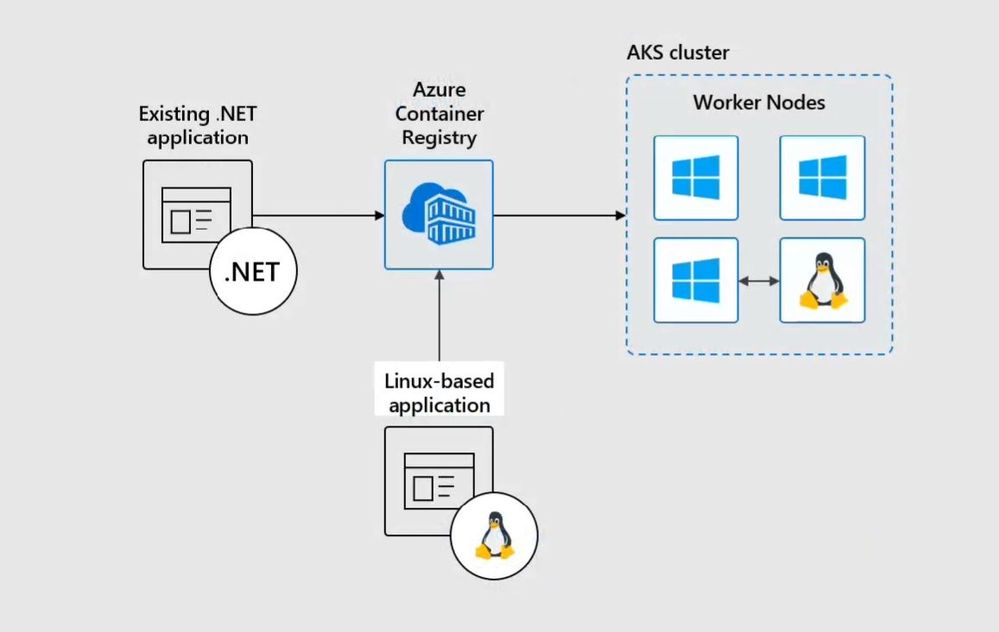

Today we are looking at how you can modernize Windows Server Apps on Microsoft Azure using Containers with Windows Admin Center and Azure Kubernetes Service (AKS). We will see how to create a new custom Docker container image using Windows Admin Center, upload it to an Azure Container Registry, and deploy it to our Azure Kubernetes Service cluster.

In the video, we give a quick intro to Windows and Hyper-V containers in general. After that, we use Windows Admin Center with the new Container Extension to manage our Windows Server container host and create a new Docker container image.

Windows Admin Center Container Extension and Windows Server Container Host

@Vinicius Apolinario and his team just released a new version of the container extension for Windows Admin Center in November 2020 that makes it simple to create a new Windows Server container host. You can find out more about the latest features here.

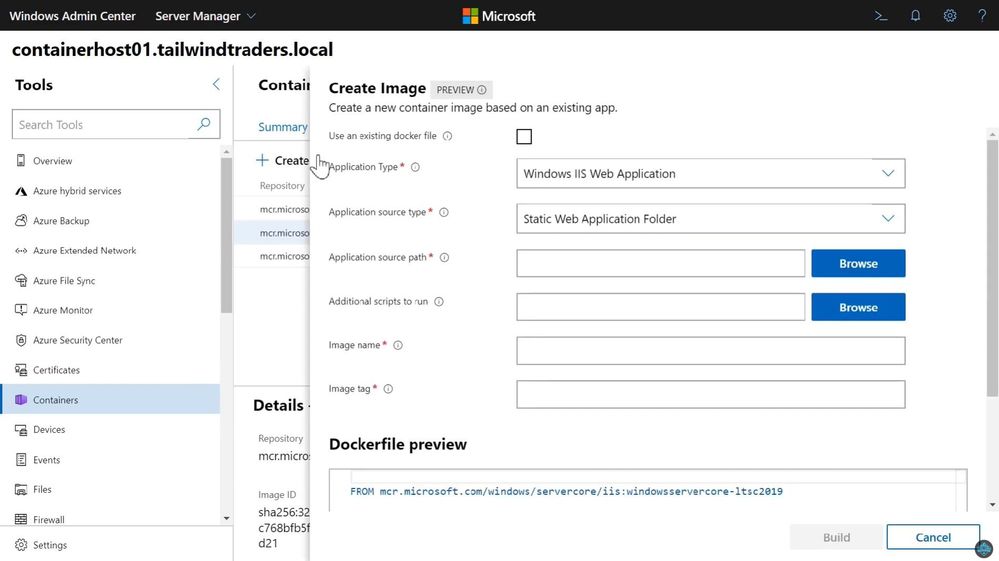

Create a new Docker Container Image using Windows Admin Center

You can use the Windows Admin Center Container extension to create a new Docker container image. It helps you easily create the basic Dockerfile your container image needs.

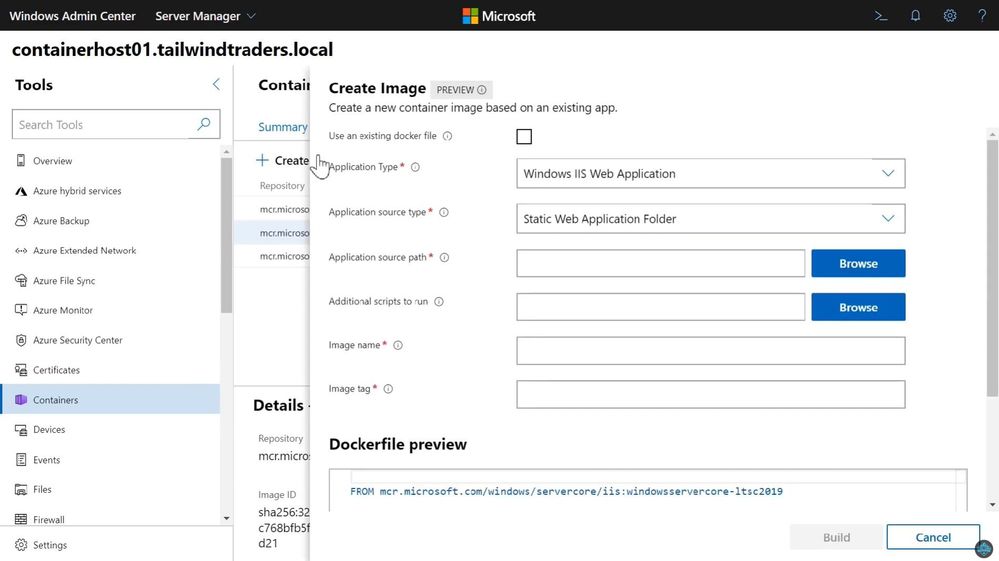

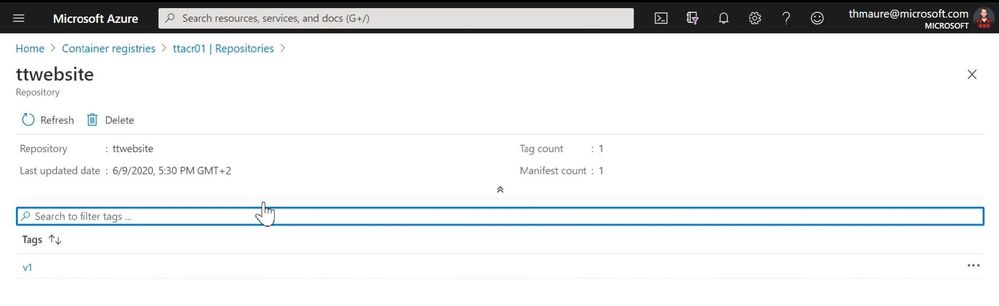

Push Windows Container Image to Azure Container Registry

After you have created your custom container image, you can now upload it to your container registry. This can be an Azure Container Registry (ACR) or another container registry you want to use.

Push Container image to ACR

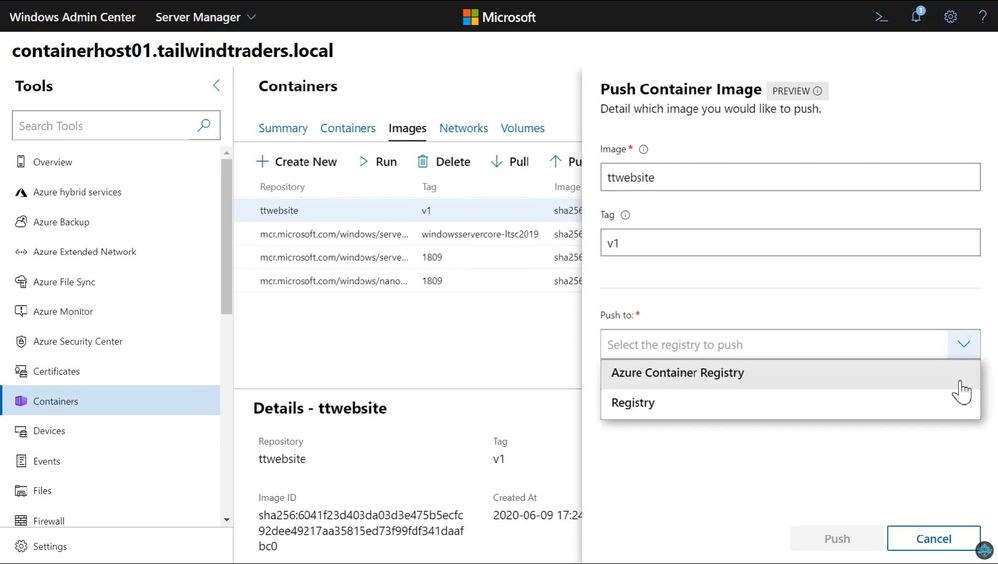
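Windows Admin Center performs this push for you. For readers who prefer scripting, the following Python sketch shows roughly equivalent command-line steps: sign in to the registry with `az acr login`, tag the local image with the registry's `<name>.azurecr.io` login server, and `docker push` it. The registry name, image name, and tag are hypothetical, and by default the helper only prints the commands rather than running them.

```python
import subprocess


def acr_push_commands(registry_name, local_image, repository, tag="latest"):
    """Build the commands to log in to an ACR instance, tag a local image with
    the registry's login server, and push it."""
    login_server = registry_name.lower() + ".azurecr.io"
    remote_image = "{}/{}:{}".format(login_server, repository, tag)
    return [
        ["az", "acr", "login", "--name", registry_name],
        ["docker", "tag", local_image, remote_image],
        ["docker", "push", remote_image],
    ]


def run(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for command in commands:
        print(" ".join(command))
        if not dry_run:
            subprocess.run(command, check=True)


# Hypothetical registry and image names.
run(acr_push_commands("myregistry", "iis-site:v1", "iis-site", "v1"))
```

Passing `dry_run=False` would execute the commands, which assumes the Azure CLI and Docker are installed on the container host.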

You can now find your container image on your container registry.

Azure Container Registry ACR

Now you can deploy your Windows Server container image to your Azure Kubernetes Service (AKS) cluster, to other container offerings on Azure, to AKS on Azure Stack HCI or Azure Stack Hub, or to any other container platform that has access to the ACR.
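On AKS, a Windows Server container image has to be scheduled onto a Windows node pool. The sketch below builds a minimal Kubernetes Deployment as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts. The `kubernetes.io/os: windows` node selector is the standard Kubernetes label for this; the deployment name, image reference, replica count, and port are hypothetical. The cluster also needs permission to pull from the registry, for example by attaching the ACR with `az aks update --attach-acr`.

```python
import json


def windows_deployment(name, image, replicas=1, port=80):
    """Return a Deployment manifest (as a dict) that pins pods to Windows nodes."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Only schedule these pods on Windows nodes.
                    "nodeSelector": {"kubernetes.io/os": "windows"},
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ],
                },
            },
        },
    }


# Hypothetical image pushed to ACR in the previous step.
manifest = windows_deployment("iis-site", "myregistry.azurecr.io/iis-site:v1")
print(json.dumps(manifest, indent=2))
```

Saving the output to a file such as `deployment.json` and running `kubectl apply -f deployment.json` would create the Deployment.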

Windows Server Containers on AKS

I hope this blog was helpful to show some of the tooling available to modernize Windows Server applications on Microsoft Azure using Containers with Windows Admin Center and AKS! If you have any questions, feel free to leave a comment.

by Contributed | Dec 2, 2020 | Azure, Microsoft, Technology

All Around Azure is the amazing show you may already know for learning everything about Azure services and how they can be used with different technologies, operating systems, and devices. Now, the show is expanding! All Around Azure: Developers Guide to IoT is the next event in our Worldwide Online Learning Days event series and will focus on topics ranging from IoT device connectivity, IoT data communication strategies, use of artificial intelligence at the edge, data processing considerations for IoT data, and IoT solutioning based on the Azure IoT reference architecture.

Join us on a guided journey into IoT learning and certification options. We will be hosting 2.5 hours of live content and Q&A sessions in your local time zone, so you can get all your questions answered in real time by our speakers. No matter where you are and what time zone you are streaming from, we will see you on January 19th.

Internet of Things Event Learning Path

This event follows the Internet of Things Event Learning Path. A learning path is a carefully curated set of technical sessions that provide a view into a particular area of Azure and often help you prepare for a certification. Each session in a learning path is business-scenario focused, so you can see how this technology could be used in the real world. Each session is independent; however, viewing them as a series builds a clearer picture of when and how to use which technology.

The Internet of Things Event Learning Path is designed for Solution Architects, Business Decision Makers, and Development teams that are interested in building IoT Solutions with Azure Services. The content comprises five video-based modules covering topics ranging from IoT device connectivity, IoT data communication strategies, use of artificial intelligence at the edge, data processing considerations for IoT data, and IoT solutioning based on the Azure IoT reference architecture.

Each session includes a curated selection of associated modules from Microsoft Learn that can provide an interactive learning experience for the topics covered and may also contribute toward preparedness for the official AZ-220 IoT Developer Certification.

The video resources and presentation decks are open-source and can be found within the associated module’s folder in this repository.

Session 1: Connecting Your Physical Environment to a Digital World – A Roadmap to IoT Solutioning

With 80% of the world’s data collected in the last two years and an estimated 32 billion connected devices currently generating that data, many organizations are looking to capitalize on it for automation or estimation and need a starting point to do so.

This session will share a real-world IoT adoption scenario and how the team went about incorporating Azure IoT services.

Session 2: Deciphering Data – Optimizing Data Communication to Maximize your ROI

Data collection by itself does not provide business value. IoT solutions must ingest, process, make decisions, and take actions to create value. This module focuses on data acquisition, data ingestion, and the data processing aspects of IoT solutions to maximize value from data.

As a device developer, you will learn about message types, approaches to serializing messages, the value of metadata and IoT Plug and Play to streamline data processing on the edge or in the cloud.
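To illustrate the serialization trade-off mentioned here, this small Python comparison encodes the same reading two ways: self-describing JSON, where field names travel with every message, and a fixed binary layout packed with `struct`, which is much smaller but can only be decoded if device and cloud agree on the layout in advance (which is where metadata describing the message format becomes important). The field names and the `<Iff` layout are hypothetical choices for the sketch.

```python
import json
import struct

# Hypothetical telemetry reading; field names are illustrative only.
reading = {"deviceId": 42, "temperature": 21.5, "humidity": 48.25}

# Binary layout both sides must share: little-endian uint32 id, two float32 values.
LAYOUT = struct.Struct("<Iff")


def to_json(msg):
    """Self-describing, human-readable encoding (compact separators)."""
    return json.dumps(msg, separators=(",", ":")).encode("utf-8")


def to_binary(msg):
    """Compact encoding; the field order and types live in LAYOUT, not the payload."""
    return LAYOUT.pack(msg["deviceId"], msg["temperature"], msg["humidity"])


def from_binary(payload):
    """Decode a payload produced by to_binary using the shared layout."""
    device_id, temperature, humidity = LAYOUT.unpack(payload)
    return {"deviceId": device_id, "temperature": temperature, "humidity": humidity}


print(len(to_json(reading)), len(to_binary(reading)))
```

Float32 values can lose precision for numbers that are not exactly representable; the sample values here were chosen to round-trip exactly.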

As a solution architect, you will learn about approaches to stream processing on the edge or in the cloud with Azure Stream Analytics, selecting the right storage based on the volume and value of data to balance performance and costs, as well as an introduction to IoT reporting with PowerBI.
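Stream processing commonly aggregates telemetry over time windows. As a rough illustration of the tumbling-window idea (fixed-size, non-overlapping windows, as offered by Azure Stream Analytics), this Python sketch averages timestamped readings per one-minute window. It runs over an in-memory list rather than a live stream, labels each window by its start time (an arbitrary choice for the sketch), and uses hypothetical sample values.

```python
from collections import defaultdict
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1)


def tumbling_window_avg(events, window=timedelta(minutes=1)):
    """Average (timestamp, value) events per fixed, non-overlapping window."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for timestamp, value in events:
        # Snap the timestamp down to the start of the window that contains it.
        window_start = EPOCH + ((timestamp - EPOCH) // window) * window
        sums[window_start] += value
        counts[window_start] += 1
    return {start: sums[start] / counts[start] for start in sorted(sums)}


# Hypothetical temperature readings from one device.
readings = [
    (datetime(2021, 1, 19, 12, 0, 10), 20.0),
    (datetime(2021, 1, 19, 12, 0, 50), 22.0),
    (datetime(2021, 1, 19, 12, 1, 5), 30.0),
]
for start, avg in tumbling_window_avg(readings).items():
    print(start.isoformat(), avg)
```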

Check out more on Learn!

Session 3: Adding Intelligence – Unlocking New Insights with AI & ML

For many scenarios, the cloud is used as a way to process data and apply business logic with nearly limitless scale. However, processing data in the cloud is not always the optimal way to run computational workloads: either because of connectivity issues, legal concerns, or because you need to respond in near-real time with processing at the Edge.

In this session we dive into how Azure IoT Edge can help in this scenario. We will train a machine learning model in the cloud using the Microsoft AI Platform and deploy this model to an IoT Edge device using Azure IoT Hub.

At the end, you will understand how to develop and deploy AI & Machine Learning workloads at the Edge. Check out more on Learn!

Session 4: Big Data 2.0 as your New Operational Data Source

A large part of the value provided by IoT deployments comes from data. However, getting this data into the existing data landscape is often overlooked. In this session, we will start by introducing the existing Big Data solutions that can be part of your data landscape.

We will then look at how you can easily ingest IoT data into traditional BI systems like data warehouses or into Big Data stores like data lakes. Once the data is ingested, we’ll see how your data analysts can gain new insights on your existing data by augmenting your PowerBI reports with IoT data. Looking back at historical data from a new angle is a common scenario. Finally, we’ll see how to run real-time analytics on IoT data to power real-time dashboards or take actions with Azure Stream Analytics and Logic Apps. By the end of the presentation, you’ll understand all the related data components of the IoT reference architecture. Check out more on Learn!

Session 5: Get to Solutioning – Strategy & Best Practices when Mapping Designs from Edge to Cloud

by Contributed | Dec 1, 2020 | Azure, Microsoft, Technology

Hi All,

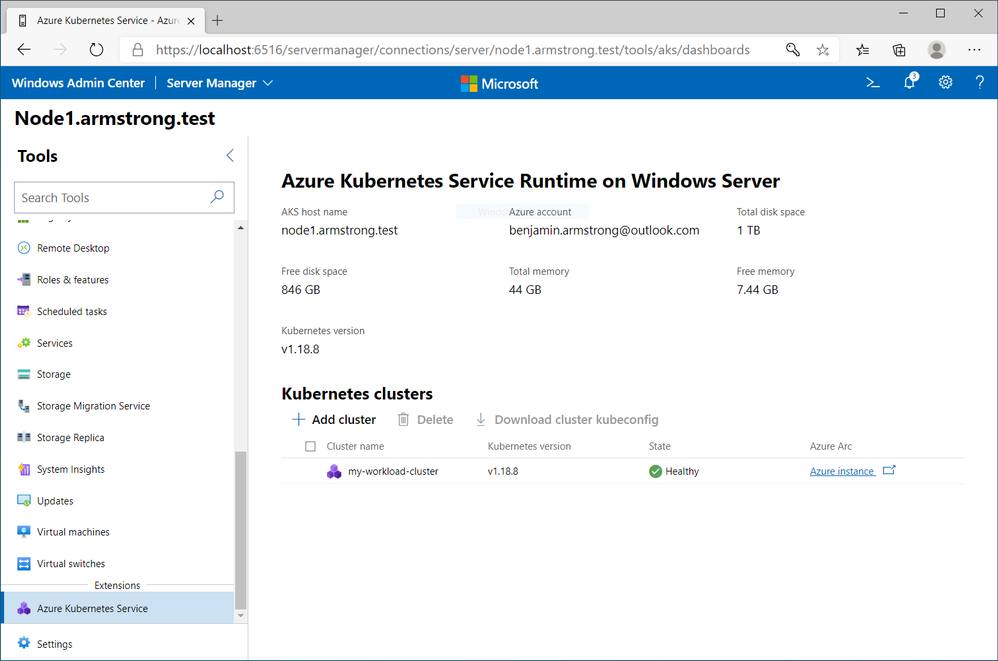

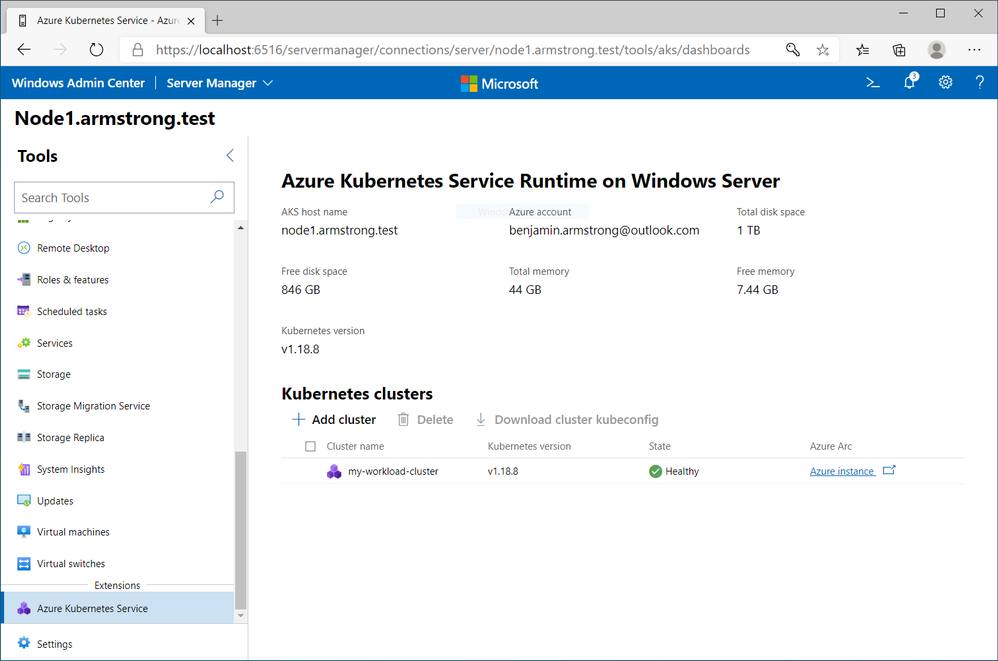

The AKS on Azure Stack HCI team has been hard at work responding to feedback from you all, and adding new features and functionality. Today we are releasing the AKS on Azure Stack HCI December Update.

You can evaluate the AKS on Azure Stack HCI December Update by registering for the Public Preview here: https://aka.ms/AKS-HCI-Evaluate (If you have already downloaded AKS on Azure Stack HCI – this evaluation link has now been updated with the December Update)

Some of the new changes in the AKS on Azure Stack HCI December Update include:

Workload Cluster Management Dashboard in Windows Admin Center

With the December update, AKS on Azure Stack HCI now provides you with a dashboard where you can:

- View any workload clusters you have deployed

- Connect to their Arc management pages

- Download the kubeconfig file for the cluster

- Create new workload clusters

- Delete existing workload clusters

We will be expanding the capabilities of this dashboard over time.

Naming Scheme Update for AKS on Azure Stack HCI worker nodes

As people have been integrating AKS on Azure Stack HCI into their environments, they encountered some challenges with our naming scheme for worker nodes, specifically when they needed to join the nodes to a domain to enable gMSA for Windows Containers. With the December Update, AKS on Azure Stack HCI worker node names are now more domain friendly.

Windows Server 2019 Host Support

When we launched the first public preview of AKS on Azure Stack HCI, we only supported deployment on top of new Azure Stack HCI systems. However, some users have been asking for the ability to deploy AKS on Azure Stack HCI on Windows Server 2019. With this release, we are adding support for running AKS on Azure Stack HCI on any Windows Server 2019 cluster that has Hyper-V enabled and a cluster shared volume configured for storage.

There have been several other changes and fixes that you can read about in the December 2020 Update release notes on GitHub (Azure/aks-hci).

Once you have downloaded and installed the AKS on Azure Stack HCI December Update – you can report any issues you encounter, and track future feature work on our GitHub Project at https://github.com/Azure/aks-hci

I look forward to hearing from you all!

Cheers,

Ben

by Contributed | Dec 1, 2020 | Azure, Microsoft, Technology

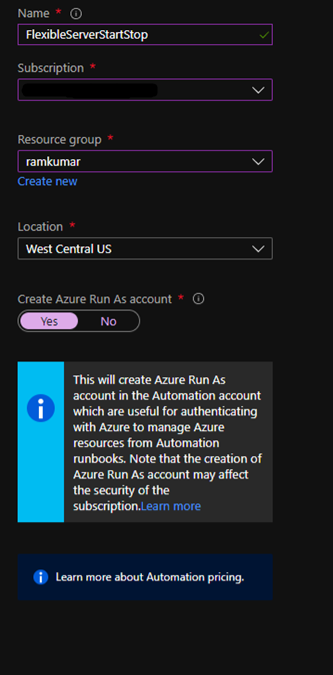

Flexible Server is a new deployment option for Azure Database for PostgreSQL that gives you the control you need with multiple configuration parameters for fine-grained database tuning along with a simpler developer experience to accelerate end-to-end deployment. With Flexible Server, you will also have a new way to optimize cost with stop/start capabilities. The ability to stop/start the Flexible Server when needed is ideal for development or test scenarios where it’s not necessary to run your database 24×7. When Flexible Server is stopped, you only pay for storage, and you can easily start it back up with just a click in the Azure portal.

Azure Automation delivers a cloud-based automation and configuration service that supports consistent management across your Azure and non-Azure environments. It comprises process automation, configuration management, update management, shared capabilities, and heterogeneous features. Automation gives you complete control during deployment, operations, and decommissioning of workloads and resources. The Azure Automation Process Automation feature supports several types of runbooks, such as Graphical, PowerShell, and Python. Other automation options include a PowerShell runbook, an Azure Functions timer trigger, or Azure Logic Apps. Here is a guide to choosing the right integration and automation services in Azure.

Runbooks support storing, editing, and testing scripts directly in the portal. Python is a general-purpose, versatile, and popular programming language. In this blog, we will see how to use an Azure Automation Python runbook to automatically stop a Flexible Server for the weekend (Saturdays and Sundays) and start it again on Monday.

Prerequisites

Steps

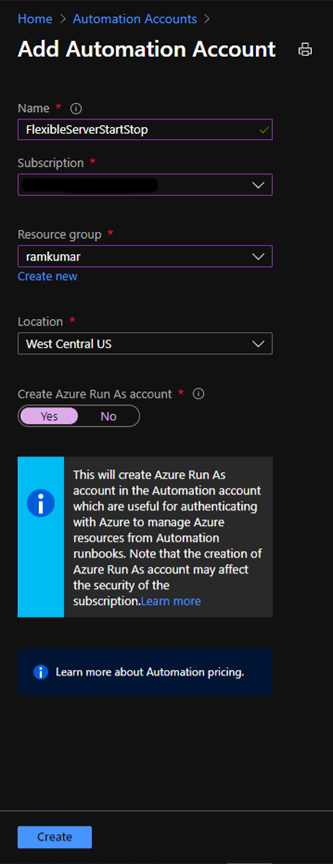

1. Create a new Azure Automation account with an Azure Run As account at:

https://ms.portal.azure.com/#create/Microsoft.AutomationAccount

NOTE: By default, an Azure Run As account has the Contributor role on your entire subscription. You can limit the Run As account’s permissions if required. Also, all users with access to the Automation account can use this Azure Run As account.

2. After you successfully create the Azure Automation account, navigate to Runbooks.

Here you can already see some sample runbooks.

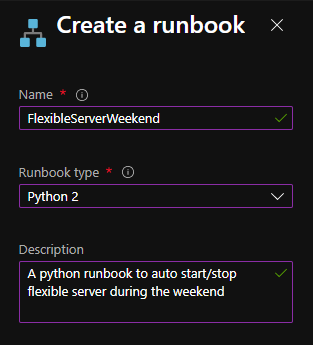

3. Let’s create a new Python runbook by selecting + Create a runbook.

4. Provide the runbook details, and then select Create.

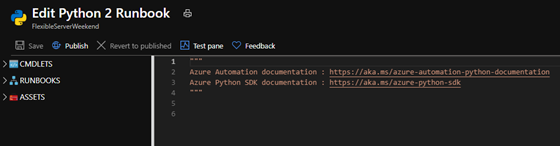

After the Python runbook is created successfully, an Edit screen appears, similar to the image below.

5. Copy and paste the Python script below. Fill in the appropriate values for your Flexible Server’s subscription_id, resource_group, and server_name, and then select Save.

```python
# Python runbook: stop the Flexible Server on Saturday and start it on Monday (UTC).
import azure.mgmt.resource
import requests
import automationassets
from msrestazure.azure_cloud import AZURE_PUBLIC_CLOUD
from datetime import datetime


def get_token(runas_connection, resource_url, authority_url):
    """ Returns credentials to authenticate against Azure Resource Manager """
    from OpenSSL import crypto
    from msrestazure import azure_active_directory
    import adal

    # Get the Azure Automation RunAs service principal certificate
    cert = automationassets.get_automation_certificate("AzureRunAsCertificate")
    pkcs12_cert = crypto.load_pkcs12(cert)
    pem_pkey = crypto.dump_privatekey(crypto.FILETYPE_PEM, pkcs12_cert.get_privatekey())

    # Get run as connection information for the Azure Automation service principal
    application_id = runas_connection["ApplicationId"]
    thumbprint = runas_connection["CertificateThumbprint"]
    tenant_id = runas_connection["TenantId"]

    # Authenticate with service principal certificate
    authority_full_url = (authority_url + '/' + tenant_id)
    context = adal.AuthenticationContext(authority_full_url)
    return context.acquire_token_with_client_certificate(
        resource_url,
        application_id,
        pem_pkey,
        thumbprint)['accessToken']


# Decide what to do based on the current day of the week
action = ''
day_of_week = datetime.today().strftime('%A')
if day_of_week == 'Saturday':
    action = 'stop'
elif day_of_week == 'Monday':
    action = 'start'

subscription_id = '<SUBSCRIPTION_ID>'
resource_group = '<RESOURCE_GROUP>'
server_name = '<SERVER_NAME>'

if action:
    print('Today is ' + day_of_week + '. Executing ' + action + ' server')
    runas_connection = automationassets.get_automation_connection("AzureRunAsConnection")
    resource_url = AZURE_PUBLIC_CLOUD.endpoints.active_directory_resource_id
    authority_url = AZURE_PUBLIC_CLOUD.endpoints.active_directory
    resourceManager_url = AZURE_PUBLIC_CLOUD.endpoints.resource_manager
    auth_token = get_token(runas_connection, resource_url, authority_url)

    url = (resourceManager_url.rstrip('/') + '/subscriptions/' + subscription_id +
           '/resourceGroups/' + resource_group +
           '/providers/Microsoft.DBforPostgreSQL/flexibleServers/' + server_name +
           '/' + action + '?api-version=2020-02-14-preview')
    response = requests.post(url, json={}, headers={'Authorization': 'Bearer ' + auth_token})
    # Start/stop are long-running operations whose success response may have an
    # empty body, so check and report the status code instead of parsing JSON.
    response.raise_for_status()
    print('Request accepted with HTTP status ' + str(response.status_code))
else:
    print('Today is ' + day_of_week + '. No action taken')
```

After you save the runbook, you can test the Python script using the Test pane. When the script works as expected, select Publish.
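If you adapt the script, it can help to build the Azure Resource Manager URL in one place and validate the action before sending a request. This hypothetical helper produces the same URL shape the runbook uses, URL-encodes the user-supplied segments, and rejects anything other than `start` or `stop`; the import fallback lets it run on Python 2 or 3. The API version is the preview version from the original script and may have been superseded.

```python
try:
    from urllib.parse import quote  # Python 3
except ImportError:
    from urllib import quote  # Python 2

ARM_ENDPOINT = "https://management.azure.com"
API_VERSION = "2020-02-14-preview"  # preview version used in the runbook; may be outdated
VALID_ACTIONS = ("start", "stop")


def flexible_server_action_url(subscription_id, resource_group, server_name, action):
    """Return the ARM URL for starting or stopping a Flexible Server."""
    if action not in VALID_ACTIONS:
        raise ValueError("action must be one of %s, got %r" % (VALID_ACTIONS, action))
    segments = [
        "subscriptions", quote(subscription_id, safe=""),
        "resourceGroups", quote(resource_group, safe=""),
        "providers", "Microsoft.DBforPostgreSQL", "flexibleServers",
        quote(server_name, safe=""), action,
    ]
    return ARM_ENDPOINT + "/" + "/".join(segments) + "?api-version=" + API_VERSION


# Hypothetical identifiers.
print(flexible_server_action_url("00000000-0000-0000-0000-000000000000", "my-rg", "my-pg-server", "stop"))
```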

Next, we need to schedule this runbook to run every day using Schedules.

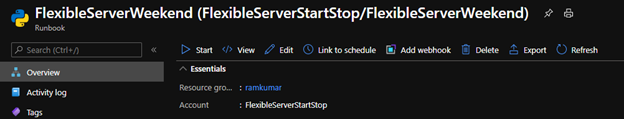

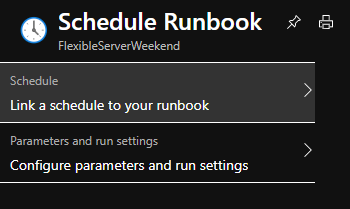

6. On the runbook Overview blade, select Link to schedule.

7. Select Link a schedule to your runbook.

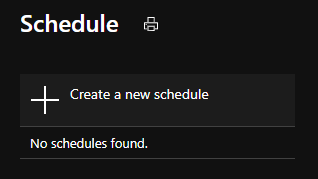

8. Select Create a new schedule.

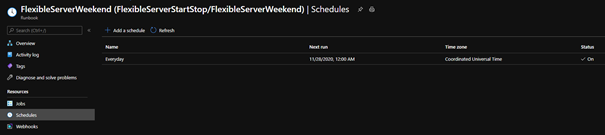

9. Create a schedule to run every day at 12:00 AM using the following parameters

10. Select Create, then verify that the schedule was created successfully and that its Status is “On”.

After following these steps, Azure Automation will run the Python runbook every day at 12:00 AM. The Python script will stop the Flexible Server if it’s a Saturday and start the server if it’s a Monday. This is all based on the UTC time zone, but you can easily modify it to fit the time zone of your choice. You can also use the holidays Python package to automatically stop and start the Flexible Server around holidays.
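The time-zone and holiday adjustments can be sketched as follows. This Python 3 sketch is not part of the original solution: the helper names are hypothetical, it uses a fixed UTC offset (so it ignores daylight saving time), and it treats holidays like weekend days, stopping the server on the first day off and starting it on the first working day afterwards. With no holidays it reproduces the runbook's Saturday-stop, Monday-start behavior.

```python
from datetime import date, datetime, timedelta, timezone


def local_date(now_utc, utc_offset_hours):
    """Convert an aware UTC datetime to the calendar date at a fixed UTC offset."""
    return now_utc.astimezone(timezone(timedelta(hours=utc_offset_hours))).date()


def decide_action(day, holiday_dates=frozenset()):
    """Return 'stop' on the first day off, 'start' on the first working day
    after time off, and '' otherwise. Weekends and holiday_dates count as off."""
    def is_day_off(d):
        return d.weekday() >= 5 or d in holiday_dates

    yesterday = day - timedelta(days=1)
    if is_day_off(day) and not is_day_off(yesterday):
        return "stop"
    if not is_day_off(day) and is_day_off(yesterday):
        return "start"
    return ""


# Hypothetical holiday list; a package such as holidays could supply real dates.
holiday_dates = {date(2020, 12, 25)}
now_utc = datetime(2020, 12, 5, 0, 0, tzinfo=timezone.utc)  # Saturday 12:00 AM UTC
print(local_date(now_utc, -8))  # → 2020-12-04
```

The example shows why the time zone matters: a schedule that fires at 12:00 AM UTC on Saturday runs on Friday afternoon at UTC-8, so in the runbook you would compute `local_date(datetime.now(timezone.utc), offset)` and pass that to `decide_action`.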

If you want to dive deeper, the new Flexible Server documentation is a great place to find out more. You can also visit our website to learn more about our Azure Database for PostgreSQL managed service. We’re always eager to hear your feedback, so please reach out via email using the Ask Azure DB for PostgreSQL alias.

by Contributed | Dec 1, 2020 | Azure, Microsoft, Technology

Learn how to leverage SQL Server on Azure virtual machine to improve your elasticity and business continuity for your on-premises SQL Server instances. Discover how the new benefits allow you to reduce your overall TCO while improving your uptime ONLY on Azure on this episode of Data Exposed with Amit Banerjee.
