by Contributed | Dec 1, 2020 | Azure, Microsoft, Technology

This article is contributed. See the original author and article here.

Monthly Webinar and Ask Me Anything on Azure Data Explorer register now

Azure Data Explorer is a fast, fully managed data analytics service for real-time analysis on large volumes of data streaming from applications, websites, IoT devices, and more. Ask questions and iteratively explore data on the fly to improve products, enhance customer experiences, monitor devices, and boost operations. Quickly identify patterns, anomalies, and trends in your data. Explore new questions and get answers in minutes. Run as many queries as you need, thanks to the optimized cost structure.


09:00-09:45 AM PST Azure Data Explorer overview by Uri Barash, Azure Data Explorer Group Program Manager

YouTube Live Stream

09:00-10:00 AM PST Ask Me Anything with the Azure Data Explorer product group.

Ask Me Anything

by Contributed | Nov 30, 2020 | Azure, Microsoft, Technology


Most organizations are now aware of how valuable the forms (PDFs, images, videos…) they keep in their closets are. They are looking for best practices and the most cost-effective ways and tools to digitize those assets. By extracting the data from those forms and combining it with existing operational systems and data warehouses, they can build powerful AI and ML models that turn it into insights and deliver value to their customers and business users.

With the Form Recognizer Cognitive Service, we help organizations to harness their data, automate processes (invoice payments, tax processing …), save money and time and get better accuracy.

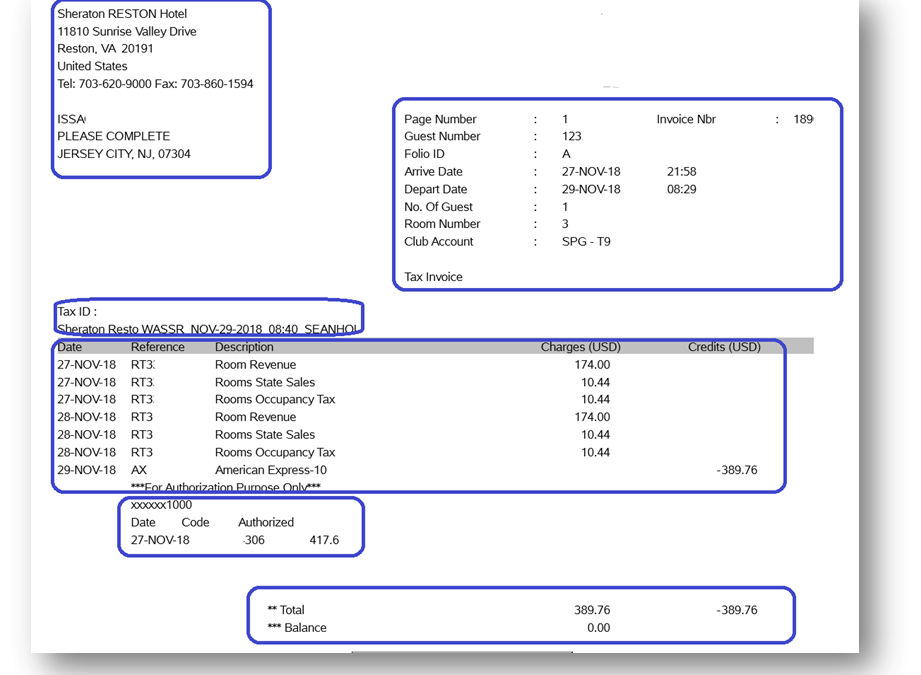

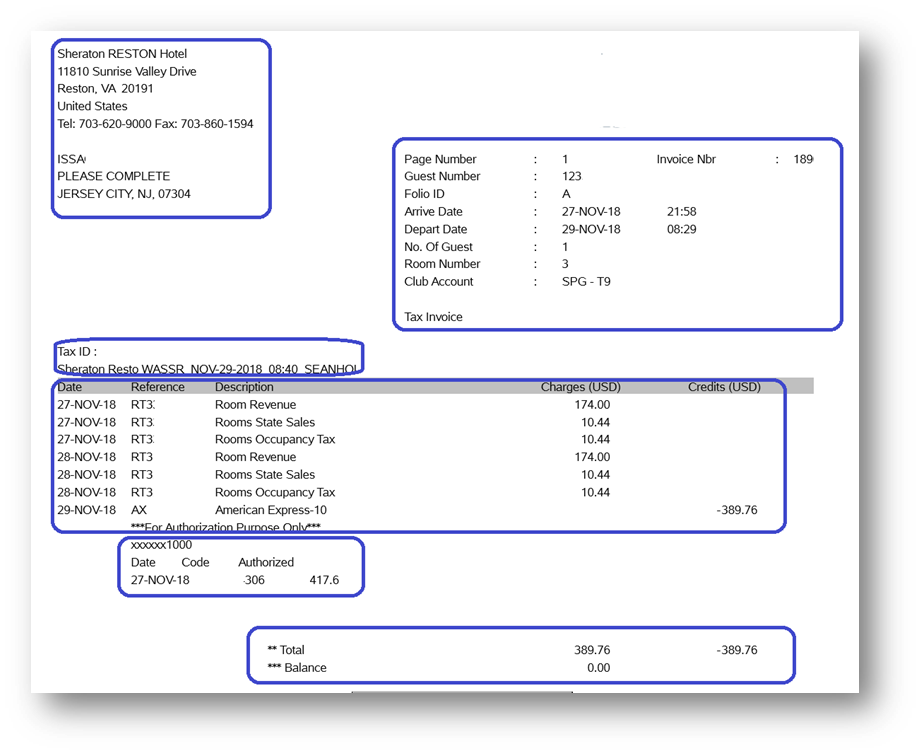

Figure 1: Typical form

In my first blog about the automated form processing, I described how you can extract key-value pairs from your forms in real-time using the Azure Form Recognizer cognitive service. We successfully implemented that solution for many customers.

Often, after a successful PoC or MVP, our customers realize that they need not only this real-time solution but also a way to ingest a huge backlog of forms into their relational or NoSQL databases or data lake, in a batch fashion. They have different types of forms and they don’t want to build a model for each type. They are also looking for an easy and quick way to ingest new types of forms.

In this blog, we’ll describe how to dynamically train a Form Recognizer model to extract the key-value pairs of different types of forms, at scale, using Azure services. We’ll also share a GitHub repository where you can download the code and implement the solution we describe in this post.

The backlog of forms may be in your on-premises environment or on an (S)FTP server. We assume that you were able to upload them into an Azure Data Lake Store Gen 2, using Azure Data Factory, Storage Explorer or AzCopy. Therefore, the solution we’ll describe here will focus on the data ingestion from the data lake to the (No)SQL database.

Our product team published a great tutorial on how to Train a Form Recognizer model and extract form data by using the REST API with Python. The solution described there demonstrates the approach for one model and one type of form and is ideal for real-time form processing.

The value-add of this post is to show how to automatically train a model with new and different types of forms using a meta-data driven approach, in batch mode.

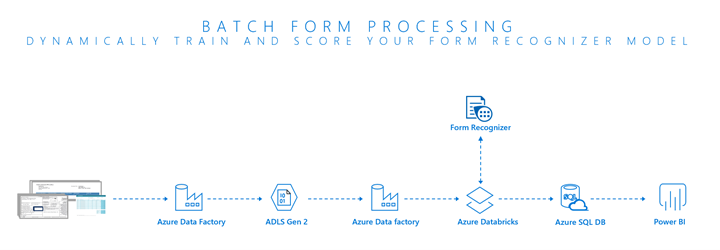

Below is the high-level architecture.

Figure 2: High Level Architecture

Azure services required to implement this solution

To implement this solution, you will need to create the below services:

Form Recognizer resource:

Form Recognizer resource to set up and configure the Form Recognizer cognitive service and get the API key and endpoint URI.

Azure SQL single database:

We will create a meta-data table in Azure SQL Database. This table will contain the non-sensitive data required by the Form Recognizer REST API. The idea is, whenever there is a new type of form, we just insert a new record in this table and trigger the training and scoring pipeline.

The required attributes of this table are:

- form_description: This field is not required for the training of the model or for the inference. It just provides a description of the type of forms we are training the model for (for example, client A forms, Hotel B forms, …).

- training_container_name: This is the storage account container name where we store the training dataset. It can be the same as scoring_container_name

- training_blob_root_folder: The folder in the storage account where we’ll store the files for the training of the model.

- scoring_container_name: This is the storage account container name where we store the files we want to extract the key-value pairs from. It can be the same as the training_container_name

- scoring_input_blob_folder: The folder in the storage account where we’ll store the files to extract key-value pairs from.

- model_id: The identifier of the model we want to retrain. For the first run, the value must be set to -1 to create a new custom model to train. The training notebook will return the newly created model id to the data factory and, using a stored procedure activity, we’ll update the meta-data table in the Azure SQL database.

Whenever you add a new form type, you need to reset the model id to -1 and retrain the model.

- file_type: The supported types are application/pdf, image/jpeg, image/png, image/tiff.

- form_batch_group_id: Over time, you might have multiple form types that you train against different models. The form_batch_group_id will allow you to specify all the form types that have been trained using a specific model.
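To make the shape of one meta-data row concrete, here is a minimal Python sketch of a record with the attributes listed above. The class name, the helper names and the column types (for example, treating form_batch_group_id as an integer and model_id as text) are assumptions for illustration; they are not taken from the repository.

```python
from dataclasses import dataclass
from typing import List

# Per the post, -1 means "no model yet: create and train a new custom model".
NEW_MODEL_SENTINEL = "-1"


@dataclass
class FormTypeRecord:
    """One row of the meta-data table (column types are assumed)."""
    form_description: str
    training_container_name: str
    training_blob_root_folder: str
    scoring_container_name: str
    scoring_input_blob_folder: str
    model_id: str
    file_type: str
    form_batch_group_id: int

    def needs_new_model(self) -> bool:
        # True on the first run, or after model_id was reset for a new form type.
        return self.model_id.strip() == NEW_MODEL_SENTINEL


def records_for_group(records: List[FormTypeRecord], group_id: int) -> List[FormTypeRecord]:
    """Return the form types that share one form_batch_group_id."""
    return [r for r in records if r.form_batch_group_id == group_id]
```

A pipeline could call needs_new_model() to decide whether to train a new model or retrain an existing one before scoring.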

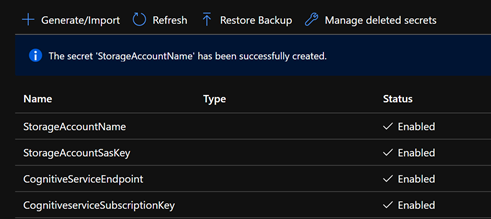

Azure Key Vault:

For security reasons, we don’t want to store certain sensitive information in the parametrization table in the Azure SQL database. We store those parameters in Azure Key Vault secrets.

Below are the parameters we store in the key vault:

- CognitiveServiceEndpoint: The endpoint of the form recognizer cognitive service. This value will be stored in Azure Key Vault for security reasons.

- CognitiveServiceSubscriptionKey: The access key of the cognitive service. This value will be stored in Azure Key Vault for security reasons. The screenshot below shows how to get the key and endpoint of the cognitive service.

Figure 3: Cognitive Service Keys and Endpoint

- StorageAccountName: The storage account where the training dataset and the forms we want to extract key-value pairs from are stored. The two storage accounts can be different. The training dataset must be in the same container for all form types, although it can be in different folders.

- StorageAccountSasKey: The shared access signature of the storage account.

The screenshot below shows the key vault after you create all the secrets.

Figure 4: Key Vault Secrets
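As a rough illustration of how a notebook might consume these four secrets, the Python sketch below loads them through a caller-supplied lookup function and builds a container URL with the SAS token appended. The function and class names are hypothetical, and the blob endpoint format is an assumption about how the storage account is addressed; the repository may do this differently.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class FormRecognizerSecrets:
    endpoint: str
    subscription_key: str
    storage_account_name: str
    storage_sas_token: str


def load_secrets(get_secret: Callable[[str], str]) -> FormRecognizerSecrets:
    """Read the secrets named in the post via any lookup function."""
    return FormRecognizerSecrets(
        # Drop a trailing slash so URL paths can be appended safely.
        endpoint=get_secret("CognitiveServiceEndpoint").rstrip("/"),
        subscription_key=get_secret("CognitiveServiceSubscriptionKey"),
        storage_account_name=get_secret("StorageAccountName"),
        storage_sas_token=get_secret("StorageAccountSasKey"),
    )


def container_sas_url(secrets: FormRecognizerSecrets, container_name: str) -> str:
    """Build a container URL with the SAS token (assumed blob endpoint format)."""
    token = secrets.storage_sas_token.lstrip("?")
    account = secrets.storage_account_name
    return f"https://{account}.blob.core.windows.net/{container_name}?{token}"
```

In a Databricks notebook backed by a Key Vault secret scope, the lookup could be something like `lambda name: dbutils.secrets.get(scope="my-scope", key=name)`, where the scope name is a placeholder.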

Azure Data Factory:

To orchestrate the training and scoring of the model. Using a Lookup activity, we’ll retrieve the parameters from the Azure SQL Database and orchestrate the training and scoring of the model using Databricks notebooks. All the sensitive parameters stored in Key Vault will be retrieved in the notebooks.

Azure Data Lake Gen 2:

To store the training dataset and the forms we want to extract the key-value pairs from. The training and the scoring datasets can be in different containers but, as mentioned above, the training dataset must be in the same container for all form types.

Azure Databricks:

To implement the Python script to train and score the model. Note that we could have used Azure Functions.
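For orientation, here is a minimal sketch of the two REST calls such a notebook would make: one to train a custom model from a folder of forms, and one to analyze (score) a single form. It assumes the v2.0 Form Recognizer REST paths and unlabeled training; the function names are hypothetical and this is not the code from the repository. The requests are only built here, not sent.

```python
import json
from urllib import request


def build_train_request(endpoint: str, subscription_key: str,
                        container_sas_url: str, folder_prefix: str) -> request.Request:
    """Build the 'train custom model' call (v2.0 path is an assumption)."""
    body = {
        "source": container_sas_url,  # container URL including the SAS token
        "sourceFilter": {"prefix": folder_prefix, "includeSubFolders": False},
        "useLabelFile": False,  # unlabeled training
    }
    return request.Request(
        url=f"{endpoint.rstrip('/')}/formrecognizer/v2.0/custom/models",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Ocp-Apim-Subscription-Key": subscription_key,
        },
        method="POST",
    )


def build_analyze_request(endpoint: str, subscription_key: str, model_id: str,
                          file_bytes: bytes, file_type: str) -> request.Request:
    """Build the 'analyze form' call for one file of the given MIME type."""
    return request.Request(
        url=f"{endpoint.rstrip('/')}/formrecognizer/v2.0/custom/models/{model_id}/analyze",
        data=file_bytes,
        headers={
            "Content-Type": file_type,  # e.g. the file_type column of the meta-data table
            "Ocp-Apim-Subscription-Key": subscription_key,
        },
        method="POST",
    )
```

Sending either request with `request.urlopen(...)` starts an asynchronous operation; the service returns a header pointing at the new model or at the analysis result, which the caller then polls until it completes.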

Azure Key Vault:

To store the sensitive parameters required by the Form Recognizer REST API.

The code to implement this solution is available in the following GitHub repository.

Additional Resources

Get started with deploying Form Recognizer –

- Custom Model – extract text, tables and key value pairs

- Prebuilt receipts – extract data from USA sales receipts

- Layout – extract text and table structure (row and column numbers) from your documents

- See What’s New

by Contributed | Nov 30, 2020 | Azure, Microsoft, Technology


The Azure Sphere 20.11 SDK feature release includes the following features:

- Updated Azure Sphere SDK for both Windows and Linux

- Updated Azure Sphere extension for Visual Studio Code

- New and updated samples

The 20.11 release does not contain an updated extension for Visual Studio or an updated OS.

To install the 20.11 SDK and Visual Studio Code extension, see the installation Quickstart for Windows or Linux.

New features in the 20.11 SDK

The 20.11 SDK introduces the first Beta release of the azsphere command line interface (CLI) v2. The v2 CLI is designed to match the Azure CLI more closely in functionality and usage. On Windows, you can run it in PowerShell or in a standard Windows command prompt; the Azure Sphere Developer Command Prompt is not required.

The Azure Sphere CLI v2 Beta supports all the commands that the original azsphere CLI supports, along with additional features:

- Tab completion for commands.

- Additional and configurable output formats, including JSON.

- Clear separation between stdout for command output and stderr for messages.

- Additional support for long item lists without truncation.

- Simplified object identification so that you can use either a name or an ID (a GUID) to identify tenants, products, and device groups.
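If you script around the CLI, you may want to know whether a stored identifier is a name or a GUID before logging or validating it. The Python sketch below is one way a caller could make that distinction; it is an illustration only and says nothing about how the azsphere CLI itself resolves identifiers. The function names are hypothetical.

```python
import uuid


def is_guid(value: str) -> bool:
    """Return True if the string parses as a GUID/UUID."""
    try:
        uuid.UUID(value)
    except ValueError:
        return False
    return True


def identifier_kind(value: str) -> str:
    """Classify a tenant, product, or device group identifier."""
    return "id" if is_guid(value) else "name"
```

Note that `uuid.UUID` also accepts 32 hexadecimal digits without hyphens, so a name made up only of such digits would be classified as an ID.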

For a complete list of additional features, see Azure Sphere CLI v2 Beta.

The CLI v2 Beta is installed alongside the existing CLI on both Windows and Linux, so you have access to either interface. We encourage you to use CLI v2 and to report any problems by using the v2 Beta CLI azsphere feedback command.

IMPORTANT: The azsphere reference documentation has been updated to note the differences between the two versions and to include examples of both. However, examples elsewhere in the documentation still reflect the original azsphere CLI v1. We will update those examples when CLI v2 is promoted out of the Beta phase.

We do not yet have a target date for promotion of the CLI v2 Beta or the deprecation of the v1 CLI. However, we expect to support both versions for at least two feature releases.

New and updated samples for 20.11

The 20.11 release includes the following new and updated sample hardware designs and applications:

In addition to these changes, we have begun making Azure Sphere samples available for download through the Microsoft Samples Browser. The selection is currently limited but will expand over time. To find Azure Sphere samples, search for Azure Sphere on the Browse code samples page.

Updated extension for Visual Studio Code

The 20.11 Azure Sphere extension for Visual Studio Code supports the new Tenant Explorer, which displays information about devices and tenants. See view device and tenant information in the Azure Sphere Explorer for details.

If you encounter problems

For self-help technical inquiries, please visit Microsoft Q&A or Stack Overflow. If you require technical support and have a support plan, please submit a support ticket in Microsoft Azure Support or work with your Microsoft Technical Account Manager. If you would like to purchase a support plan, please explore the Azure support plans.

by Contributed | Nov 30, 2020 | Azure, Microsoft, Technology


One of the most important questions customers ask when deploying Azure DDoS Protection Standard for the first time is how to manage the deployment at scale. A DDoS Protection Plan represents an investment in protecting the availability of resources, and this investment must be applied intentionally across an Azure environment.

Creating a DDoS Protection Plan and associating a few virtual networks using the Azure portal takes a single administrator just minutes, making it one of the easiest resources to deploy in Azure. However, in larger environments this can be a more difficult task, especially when it comes to managing the deployment as network assets multiply.

Azure DDoS Protection Standard is deployed by creating a DDoS Protection Plan and associating VNets to that plan. The VNets can be in any subscription in the same tenant as the plan. While the deployment is done at the VNet level, the protection and the billing are both based on the public IP address resources associated to the VNets. For instance, if an Application Gateway is deployed in a certain VNet, its public IP becomes a protected resource, even though the virtual network itself only directly contains private addresses.

Cost is a significant consideration: a DDoS Protection Plan starts at $3,000 USD per month for up to 100 protected IPs, plus $30 per public IP beyond 100. Once you have committed to this investment, it is very important to ensure that the protection is applied across all required assets.
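The pricing above is simple enough to work out by hand, but a small Python sketch makes the break point explicit. It uses only the figures quoted in this post ($3,000 base, 100 included IPs, $30 per additional IP); the function name is hypothetical and actual billing may include details not covered here.

```python
BASE_MONTHLY_USD = 3000   # plan price quoted in the post
INCLUDED_IPS = 100        # protected public IPs covered by the base price
OVERAGE_USD_PER_IP = 30   # price per protected public IP beyond the first 100


def monthly_plan_cost(protected_public_ips: int) -> int:
    """Estimate the monthly cost of one DDoS Protection Plan in USD."""
    if protected_public_ips < 0:
        raise ValueError("protected_public_ips must be non-negative")
    extra_ips = max(0, protected_public_ips - INCLUDED_IPS)
    return BASE_MONTHLY_USD + extra_ips * OVERAGE_USD_PER_IP
```

For example, 150 protected public IPs would come to 3000 + 50 × 30 = 4500 USD per month, while anything up to 100 IPs stays at the base price.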

Azure Policy to Audit and Deploy

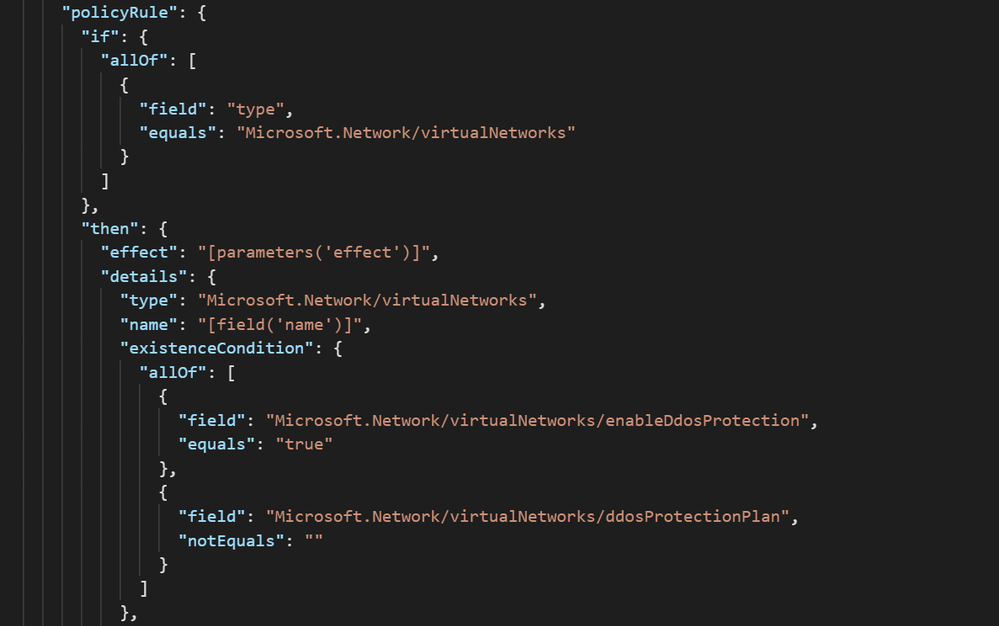

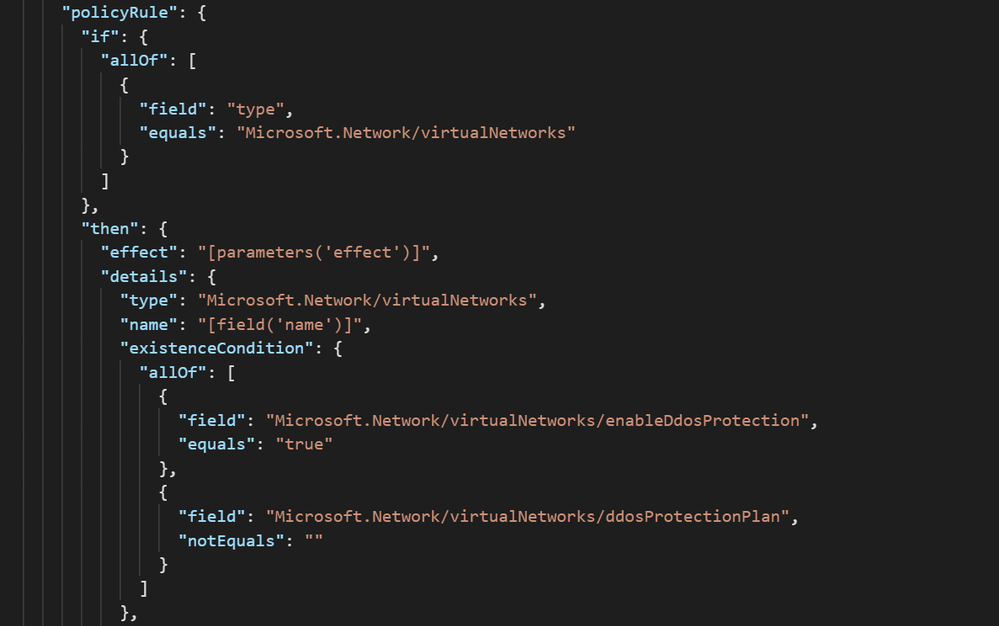

We just posted an Azure Policy sample to the Azure network security GitHub repository that will audit whether a DDoS Protection Plan is associated to VNets, then optionally create a remediation task that will create the association to protect the VNet.

The logic of the policy can be seen in the screenshot below. All virtual networks in the assignment scope are evaluated against the criteria of whether DDoS Protection is enabled and has a plan attached:

Further down in the definition, there is a template that creates the association of the DDoS Protection Plan to the VNets in scope. Let’s look at what it takes to use this sample in a real environment.
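To make the audit condition easier to reason about, here is a Python sketch of the same check applied to VNet resources represented as dictionaries. It is not the policy definition itself, which is JSON; it only mirrors the described logic, assuming the VNet resource exposes `enableDdosProtection` and `ddosProtectionPlan.id` under `properties`. The function names are hypothetical.

```python
from typing import Dict, List


def has_ddos_plan(vnet: Dict) -> bool:
    """True when DDoS Protection is enabled and a plan is attached."""
    props = vnet.get("properties") or {}
    plan_id = (props.get("ddosProtectionPlan") or {}).get("id")
    return props.get("enableDdosProtection") is True and bool(plan_id)


def non_compliant_vnets(vnets: List[Dict]) -> List[str]:
    """Names of the VNets the audit would flag."""
    return [v["name"] for v in vnets if not has_ddos_plan(v)]
```

A VNet with the flag enabled but no plan attached, or with no DDoS settings at all, would be flagged and become a candidate for the remediation task.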

Creating a Definition

To create an Azure Policy Definition:

- Navigate to Azure Policy –> Definitions and select ‘+ Policy Definition.’

- For the Definition Location field, select a subscription. This policy will still be able to be assigned to other subscriptions via Management Groups.

- Define an appropriate Name, Description, and Category for the Policy.

- In the Policy Rule box, replace the example text with the contents of VNet-EnableDDoS.json.

- Save.

Assigning the Definition

Once the Policy Definition has been created, it must be assigned to a scope. This gives you the ability to either deploy the policy to everything, using either Management Group or Subscription as the scope, or select which resources get DDoS Protection Standard protection based on Resource Group.

To assign the definition:

- From the Policy Definition, click Assign.

- On the Basics tab, choose a scope and exclude resources if necessary.

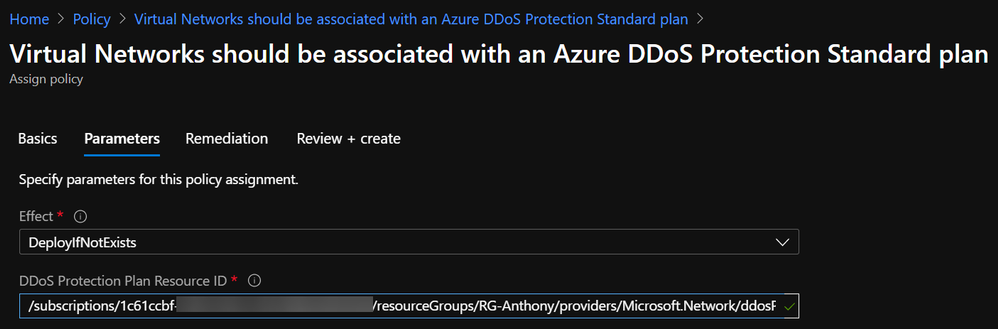

- On the Parameters tab, choose the Effect (DeployIfNotExists if you want to remediate) and paste in the Resource ID of the DDoS Protection Plan in the tenant:

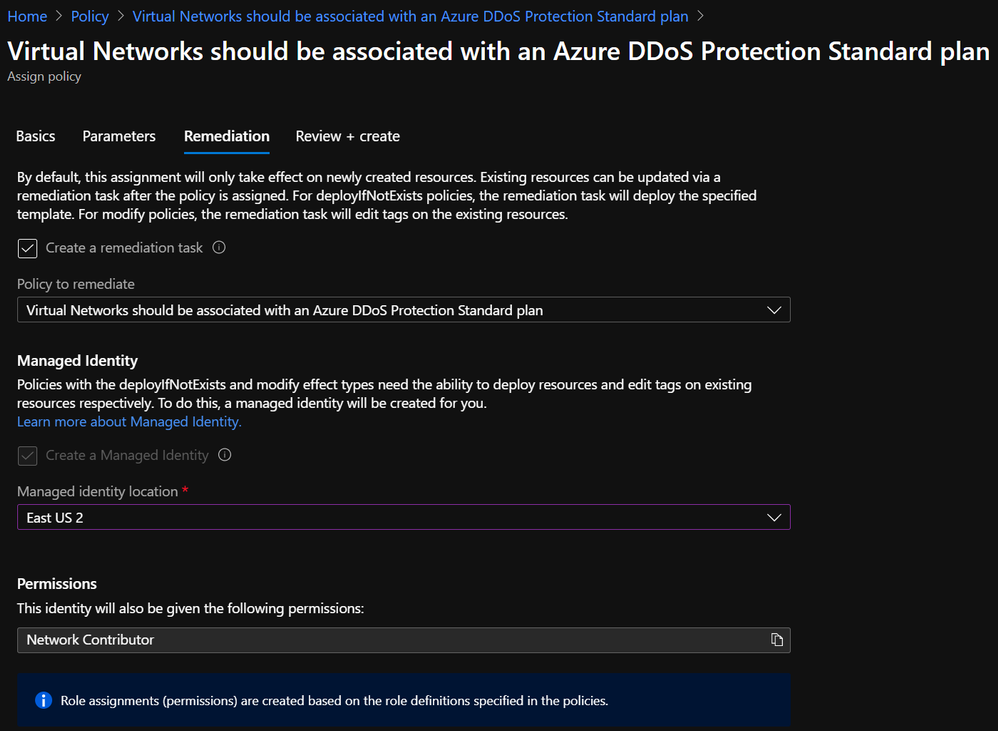

- On the Remediation tab, check the box to create a remediation task and choose a location for the managed identity to be created. Network Contributor is an appropriate role:

- Create.

Modifying the Policy Definition

The process outlined above can be used to apply DDoS Protection to collections of resources as defined by the boundaries of management groups, subscriptions, and resource groups. However, these boundaries do not always represent an exhaustive list of where DDoS Protection should or should not be applied. Sure, some customers want to attach a DDoS Protection Plan to every VNet, but most will want to be more selective.

Even if resource groups are granular enough to determine whether DDoS Protection should be applied, Policy Assignments are limited to a single resource group per assignment, so the process of creating an assignment for every resource group is prohibitively tedious.

One solution to the problem of policy scoping is to modify the definition rather than the assignment. Let’s use the example of an environment where DDoS Protection is required for all production resources. Production environments could exist in many different subscriptions and resource groups, and this could change as new environments are stood up.

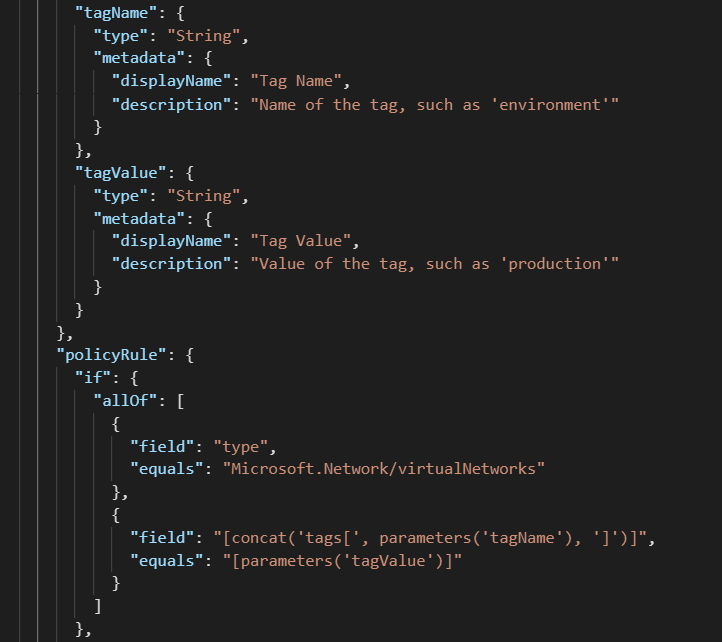

The solution here is to use tags as the identifier of production resources. In order to use this as a way to scope Azure Policy Assignments, you must modify the definition. To do this, add a short snippet to the policy rule, along with corresponding parameters (or copy them from VNet-EnableDDoS-Tags.json).

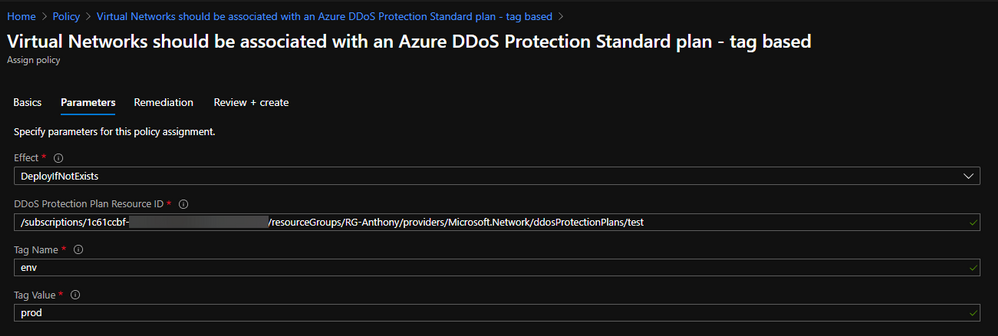

After modifying a definition to look for tag values, the corresponding assignment will look slightly different:

In this configuration, a single Policy Definition can be assigned to a wide scope, such as a Management Group, and every tagged resource within will be in scope.
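The effect of the tag condition can be sketched in Python as a filter applied before the DDoS check. The tag name and value used in the usage note (`Environment` / `Production`) are placeholders, the function names are hypothetical, and the comparison here is a plain string match, which may not reproduce every detail of how Azure Policy compares tag values.

```python
from typing import Dict, List


def in_tag_scope(resource: Dict, tag_name: str, tag_value: str) -> bool:
    """True when the resource carries the tag that marks it as in scope."""
    return (resource.get("tags") or {}).get(tag_name) == tag_value


def vnets_needing_remediation(vnets: List[Dict], tag_name: str, tag_value: str) -> List[str]:
    """Tagged VNets that do not yet have a DDoS Protection Plan attached."""
    flagged = []
    for vnet in vnets:
        if not in_tag_scope(vnet, tag_name, tag_value):
            continue  # untagged VNets are ignored by the policy
        props = vnet.get("properties") or {}
        plan_id = (props.get("ddosProtectionPlan") or {}).get("id")
        if not (props.get("enableDdosProtection") is True and plan_id):
            flagged.append(vnet["name"])
    return flagged
```

Calling `vnets_needing_remediation(vnets, "Environment", "Production")` would return only the production-tagged VNets that still lack a plan, regardless of which subscription or resource group they live in.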

Verifying Compliance

When a Policy Assignment is created using a remediation action, the effect of the policy should guarantee compliance with requirements. To gain visibility into the auditing and remediation done by the policy, you can go to Azure Policy –> Compliance and select the assignment to monitor:

A successful remediation task denotes that the VNet is now protected by Azure DDoS Protection Standard.

End-to-End Management with Azure Policy

Moving beyond plan association to VNets, there are some other requirements of DDoS Protection that Azure Policy can help with.

On the Azure network security GitHub repo, you can find a policy to restrict creation of more than one DDoS Protection Plan per tenant, which helps to ensure that those with access cannot inadvertently drive up costs.

Another sample is available to keep diagnostic logs enabled across all Public IP Addresses, which keeps valuable data flowing to the teams that care about such data.

The point that should be taken from this post is that Azure Policy is a great mechanism to audit and enforce compliance with DDoS Protection requirements, and it has the power to control most other aspects of Azure security and compliance.

by Contributed | Nov 30, 2020 | Azure, Microsoft, Technology


Today, I worked on a service request in which our customer reported that, depending on the driver they were using, they were not able to see data in a specific column.

Sometimes, it is hard to debug the application or to specify the driver used to extract this data. With this PowerShell command, you can specify the driver and the provider used to obtain this data.

Basically, you need to define the connection parameters and the provider to connect with. In terms of provider, you could use:

- 1 – Driver: OLEDB – Provider: SQLOLEDB

- 2 – Driver: OLEDB – Provider: MSOLEDBSQL

- 3 – .Net SQL Client

- 4 – Driver: ADO – Provider: SQLOLEDB

- 5 – Driver: ADO – Provider: MSOLEDBSQL
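The original script is not reproduced here, so as a rough stand-in, the Python sketch below shows how the five options could map to connection strings. The option numbers follow the list above; the function name, the use of SQL authentication, and the exact connection-string keywords are assumptions for illustration.

```python
# Option number -> (driver, provider); provider is None for the .NET SQL Client.
OPTIONS = {
    1: ("OLEDB", "SQLOLEDB"),
    2: ("OLEDB", "MSOLEDBSQL"),
    3: (".NET SQL Client", None),
    4: ("ADO", "SQLOLEDB"),
    5: ("ADO", "MSOLEDBSQL"),
}


def build_connection_string(option: int, server: str, database: str,
                            user: str, password: str) -> str:
    """Return a connection string for the chosen driver/provider option."""
    if option not in OPTIONS:
        raise ValueError(f"Unknown option {option}; expected one of {sorted(OPTIONS)}")
    _driver, provider = OPTIONS[option]
    if provider is None:
        # .NET SQL Client style keywords (no Provider entry).
        return f"Server={server};Database={database};User ID={user};Password={password};"
    # OLE DB style keywords, used by both the OLEDB and ADO options.
    return (f"Provider={provider};Data Source={server};Initial Catalog={database};"
            f"User ID={user};Password={password};")
```

Running the same query once per option and comparing the column values is then a quick way to see which driver and provider combination returns the data.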

Enjoy!

by Contributed | Nov 30, 2020 | Azure, Microsoft, Technology


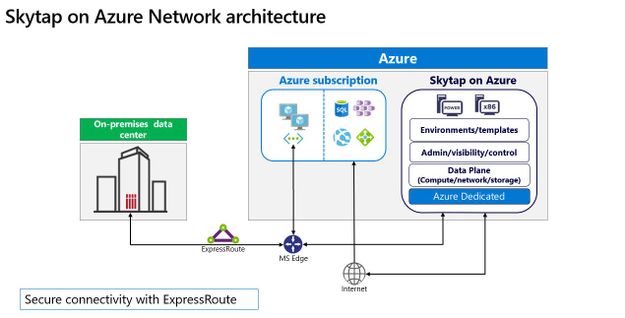

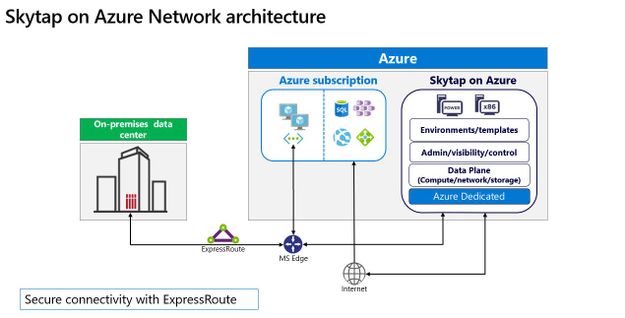

Cloud migration remains a crucial component for any organization in the transformation of their business, and Microsoft continues to focus on how best to support customers wherever they are in that journey. Microsoft works with partners like Skytap to unlock the power of Azure for customers relying on traditional on-premises application platforms.

Skytap on Azure is a cloud service purpose-built to natively run traditional IBM Power workloads in Azure. And we are excited to share that Skytap on Azure is available for purchase and provisioning directly through Azure Marketplace, further streamlining the experience for customers.

The migration of applications running on IBM Power to the cloud is often seen as a difficult and challenging move involving re-platforming. With Skytap on Azure, Microsoft brings the unique capabilities of IBM Power9 servers to Azure, directly integrating with Azure networking enabling Skytap to provide its platform with minimal connectivity latency to Azure native services.

Skytap has more than a decade of experience working with customers, such as Delphix, Schneider Electric, and Okta, and offering extensible application environments that are compatible with on-premises data centers; Skytap’s environments simplify migration and provide self-service access to develop, deploy, and accelerate innovation for complex applications.

“Until we started working with Skytap, we did not have a public cloud option for our IBM Power Systems customers that provided real value over their on-premise systems. Now, with Skytap on Azure we’re excited to offer true cloud capabilities like instant cloning, capacity on demand and pay-as-you-go options in the highly secure Azure environment,” said Daniel Magid, CEO of Eradani.

Skytap on Azure offers consumption-based pricing, on-demand access to compute and storage resources, and extensibility through RESTful APIs. With Skytap available on Azure Marketplace, customers can get started quickly and at a low cost. Learn more about Skytap on Azure here. Additionally, take a look at the latest video from our Microsoft Product team here.

Skytap on Azure is available in the East US Azure region. Given the high level of interest we have seen already, we intend to expand availability to additional regions across Europe, the United States, and Asia Pacific. Stay tuned for more details on specific regional rollout availability.

Try Skytap on Azure today, available through the Azure Marketplace. Skytap on Azure is a Skytap first-party service delivered on Microsoft Azure’s global cloud infrastructure.

Published on behalf of the Microsoft Azure Dedicated and Skytap on Azure Team
