by Contributed | Nov 17, 2020 | Azure, Microsoft, Technology

This article is contributed. See the original author and article here.

Final Update: Wednesday, 18 November 2020 01:36 UTC

We’ve confirmed that all systems are back to normal, with no customer impact, as of 11/18, 01:15 UTC. Our logs show that the incident started on 11/18, 00:20 UTC, and that during the 55 minutes it took to resolve the issue, some customers may have experienced missed or delayed Log Search Alerts, or difficulties accessing data for resources hosted in West US 2 and North Europe.

- Root Cause: The failure was due to an issue in one of our backend services.

- Incident Timeline: 55 minutes – 11/18, 00:20 UTC through 11/18, 01:15 UTC

We understand that customers rely on Azure Log Analytics as a critical service and apologize for any impact this incident caused.

-Saika

by Contributed | Nov 17, 2020 | Azure, Microsoft, Technology

This article is contributed. See the original author and article here.

As customers progress and mature in managing Azure Policy definitions and assignments, we have found it important to ease the management of these artifacts at scale. Azure Policy as code embodies this idea and focuses on managing the lifecycle of definitions and assignments in a repeatable and controlled manner. New integrations between GitHub and Azure Policy allow customers to better manage policy definitions and assignments using an “as code” approach.

More information on Azure Policy as Code workflows here.

Export Azure Policy Definitions and Assignments to GitHub directly from the Azure Portal

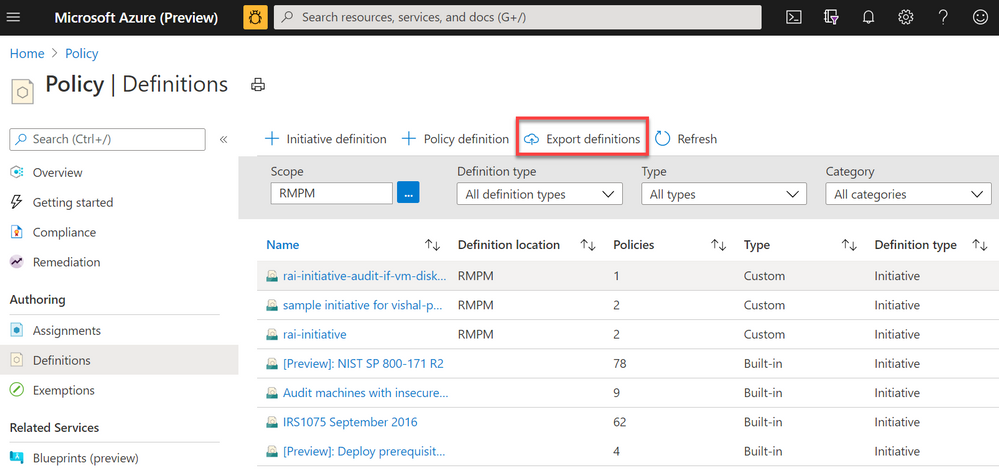

Screenshot of Azure Policy definition view blade from the Azure Portal with a red box highlighting the Export definitions button

The Azure Policy as Code and GitHub integration begins with the export function: the ability to export policy definitions and assignments from the Azure Portal to GitHub repositories. The Export definitions button, now available in the definitions view, lets you select your GitHub repository, branch, and directory. It then prompts you to select the policy definitions and assignments you want to export. The selected artifacts are exported to GitHub in the following recommended format:

|- <root level folder>/                  # Root level folder set by Directory property
|  |- policies/                          # Subfolder for policy objects
|     |- <displayName>_<name>/           # Subfolder based on policy displayName and name properties
|        |- policy.json                  # Policy definition
|        |- assign.<displayName>_<name>  # Each assignment (if selected) based on displayName and name properties

GitHub tracks the changes committed to each file, which helps with versioning policy definitions and assignments as conditions and business requirements change. GitHub also helps organize all Azure Policy artifacts in a central source control repository for easy management and scalability.
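The export produces a predictable folder layout, so tooling in the repository can discover definitions and assignments by walking the tree. The following Python sketch assumes the layout shown above. It expects each definition to be in a policy.json file and each assignment to be a JSON file whose name starts with assign. The function name load_exported_policies is hypothetical and is not part of any Azure or GitHub tooling.

```python
import json
from pathlib import Path


def load_exported_policies(root):
    """Map each exported policy folder to its definition and assignments.

    Hypothetical helper. Assumes the layout <root>/policies/<folder>/policy.json,
    plus zero or more JSON assignment files whose names start with "assign.".
    """
    policies = {}
    for folder in sorted((Path(root) / "policies").iterdir()):
        definition_file = folder / "policy.json"
        if not folder.is_dir() or not definition_file.is_file():
            continue  # skip stray files and folders without a definition
        assignments = [
            json.loads(path.read_text(encoding="utf-8"))
            for path in sorted(folder.glob("assign.*"))
        ]
        policies[folder.name] = {
            "definition": json.loads(definition_file.read_text(encoding="utf-8")),
            "assignments": assignments,
        }
    return policies
```

A CI job could, for example, use a loader like this to validate every exported definition before the sync workflow runs.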

Leverage GitHub workflows to sync changes from GitHub to Azure

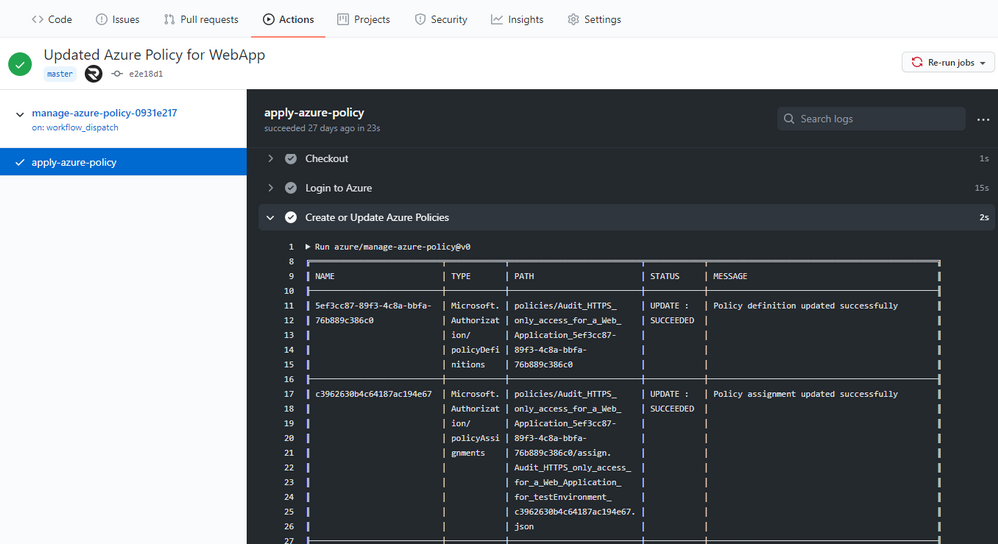

Screenshot of the Manage Azure Policy action within GitHub

An added feature of exporting is the creation of a GitHub workflow file in the repository. This workflow leverages the Manage Azure Policy action to aid in syncing changes from your source control repository to Azure. The workflow makes it quick and easy for customers to iterate on their policies and to deploy them to Azure. Since workflows are customizable, this workflow can be modified to control the deployment of those policies following safe deployment best practices.

The workflow also adds traceability URLs to the definition metadata, so you can easily track down the GitHub workflow run that updated a policy.

More information on Manage Azure Policy GitHub Action here.

Trigger Azure Policy Compliance Scans in a GitHub workflow

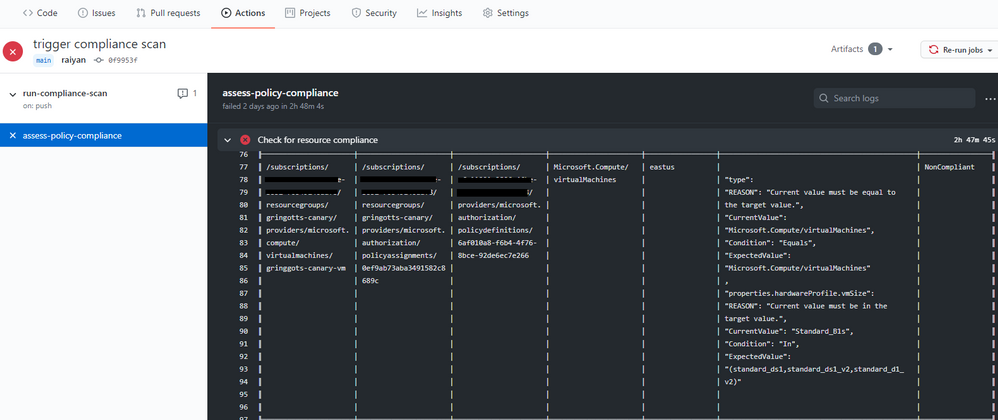

Screenshot of the Azure Policy compliance scan on GitHub Actions

We also rolled out the Azure Policy Compliance Scan action, which triggers an on-demand compliance evaluation scan. You can run it from a GitHub workflow to test and verify policy compliance during deployments. The scan can be targeted at specific scopes and resources through the scopes and scopes-ignore inputs. After the scan completes, the workflow can also upload a CSV file listing the resources that are non-compliant. This is useful for rolling out new policies at scale and for verifying that your environment's compliance is as expected.

More information on the Azure Policy Compliance Scan here.
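The following minimal Python sketch illustrates how the scopes and scopes-ignore filtering and the CSV report work. It is not the action's implementation. The input tuples, the prefix-based scope matching, and the CSV column names are all assumptions made for illustration.

```python
import csv
import io


def under(resource_id, scope):
    """True if resource_id equals scope or sits beneath it (case-insensitive)."""
    rid, sc = resource_id.lower().rstrip("/"), scope.lower().rstrip("/")
    return rid == sc or rid.startswith(sc + "/")


def noncompliant_csv(results, scopes, scopes_ignore=()):
    """Return CSV text listing non-compliant resources that are in scope and not ignored.

    `results` is a hypothetical list of (resource_id, policy_name, is_compliant) tuples.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["resource_id", "policy_name"])
    for resource_id, policy_name, is_compliant in results:
        in_scope = any(under(resource_id, s) for s in scopes)
        ignored = any(under(resource_id, s) for s in scopes_ignore)
        if in_scope and not ignored and not is_compliant:
            writer.writerow([resource_id, policy_name])
    return buffer.getvalue()
```

The sketch matches on a full path segment (scope + "/"). This prevents a scope such as .../resourceGroups/app from also matching .../resourceGroups/app-old.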

These three integration points between GitHub and Azure Policy create a strong foundation for managing policies with an "as code" approach. As always, we look forward to your feedback and to adding more capabilities.

by Contributed | Nov 17, 2020 | Azure, Microsoft, Technology

This article is contributed. See the original author and article here.

As Azure continues to deliver new H-series and N-series virtual machines that match the capabilities of supercomputing facilities around the world, we have at the same time made significant updates to Azure CycleCloud — the orchestration engine that enables our customers to build HPC environments using these virtual machine families.

We are pleased to announce the general availability of CycleCloud 8.1, the first release of CycleCloud on the 8.x platform. Here are some of the new features in this release that we are excited to share with you.

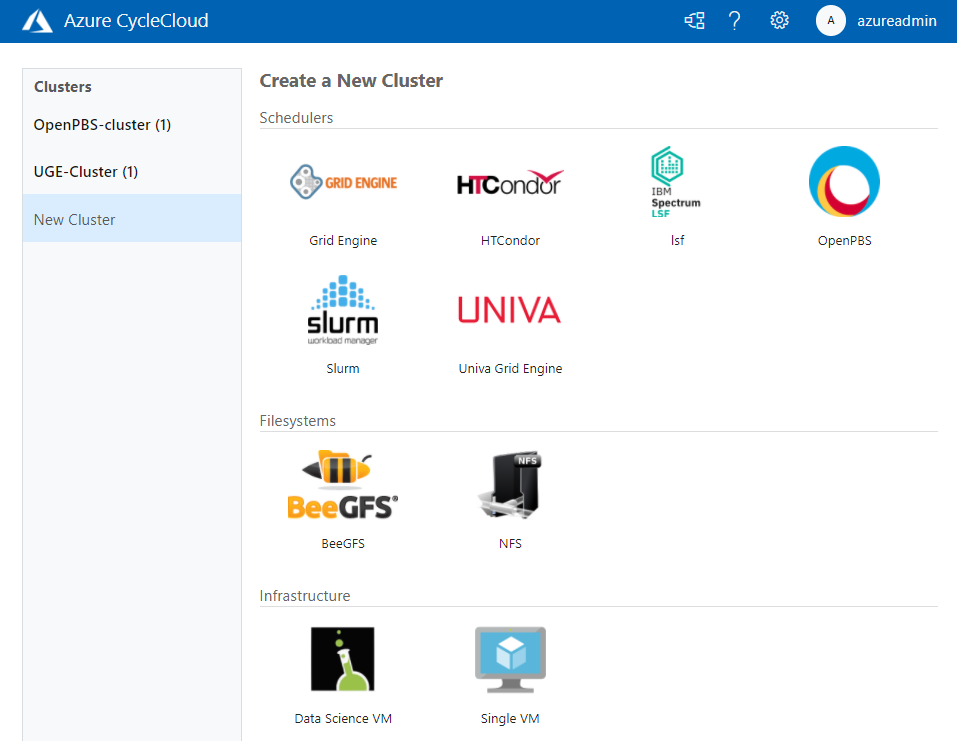

Welcome Univa Grid Engine

Univa Grid Engine, the enterprise-grade version of the beloved Grid Engine scheduler, joins IBM Spectrum LSF, OpenPBS, Slurm, and HTCondor as supported schedulers in CycleCloud.

Whether your jobs are tightly coupled or high-throughput, submit them to your scheduler of choice. CycleCloud will allocate the compute infrastructure on Azure needed to meet resource demands, and it will automatically scale that infrastructure down after the jobs complete. With this addition, CycleCloud is the only cloud HPC orchestration engine that supports all the major HPC batch schedulers, and one you can rely on to run your production workloads.
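The following small Python sketch illustrates scale-up and scale-down behavior. It describes a hypothetical policy, not CycleCloud's autoscaler. It assumes three things:

- pending work can be expressed as a list of requested cores;
- cores pack freely across identical VMs;
- idle nodes are released after a fixed timeout.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    busy: bool
    idle_seconds: float = 0.0


def scale_up_count(pending_job_cores, cores_per_vm, current_nodes, max_nodes):
    """Nodes to add so the pending cores fit, capped at max_nodes (hypothetical policy)."""
    needed = math.ceil(sum(pending_job_cores) / cores_per_vm)
    return max(0, min(needed, max_nodes - current_nodes))


def nodes_to_terminate(nodes, idle_timeout):
    """Nodes with no running jobs that have been idle at least idle_timeout seconds."""
    return [n.name for n in nodes if not n.busy and n.idle_seconds >= idle_timeout]
```

Real schedulers also consider per-job constraints, such as tightly coupled jobs that must land on nodes in the same placement group. This sketch ignores those constraints.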

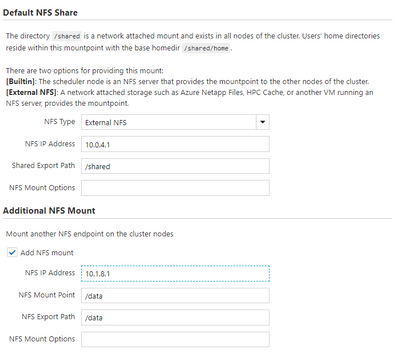

Configure Shared Filesystems Easily in the UI

In CycleCloud 8.1, it is simple to attach and mount network-attached storage file systems to your HPC cluster. High-performance storage is a crucial component of HPC systems. Azure provides several storage services that you can use as part of your HPC deployment, such as Azure HPC Cache, Azure NetApp Files, and Azure Blob Storage, each optimized for different workloads.

Most workloads require access to one or more shared file systems for common directory or file access. You can now configure your cluster to mount NFS shares through the CycleCloud web user interface without modifying configuration files.

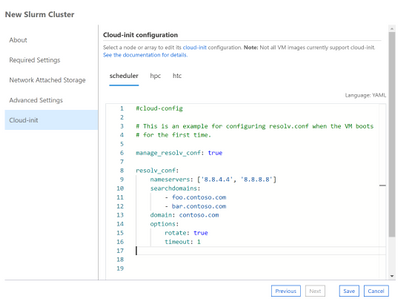

Perform Last-Mile VM Customization with Cloud-Init

CycleCloud Projects is a powerful tooling system for customizing your cluster nodes as they boot up. CycleCloud 8 adds support for a complementary approach using cloud-init, a widely used initialization system for customizing virtual machines.

You can now use the CycleCloud UI or a cluster template to specify a cloud-init config, shell script, or Python script that configures a VM to your requirements before any of CycleCloud’s preparation steps run.
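As an illustration of last-mile customization: cloud-init runs user data that begins with a shebang line as a script at first boot, so a Python script can be supplied this way. The sketch below is hypothetical. The profile path, the scratch directory, and the choice to set OMP_NUM_THREADS are example settings, not CycleCloud defaults.

```python
#!/usr/bin/env python3
"""Hypothetical last-mile customization script supplied to cloud-init as user data.

The paths and environment variables below are illustrative, not CycleCloud defaults.
"""
import os
from pathlib import Path


def render_profile(cores, scratch_dir):
    # Shell snippet that login shells on the node will source.
    return (
        f"export OMP_NUM_THREADS={cores}\n"
        f"export SCRATCH={scratch_dir}\n"
    )


def customize(profile_path="/etc/profile.d/hpc-env.sh", scratch_dir="/mnt/scratch"):
    """Create the scratch directory and write the profile; returns the core count used."""
    Path(scratch_dir).mkdir(parents=True, exist_ok=True)
    # Use every logical CPU the VM reports; adjust if hyperthreading is enabled.
    cores = os.cpu_count() or 1
    Path(profile_path).write_text(render_profile(cores, scratch_dir))
    return cores


# When supplying this file as user data, end it with a call to: customize()
```

The final call is left as a comment so the file can be imported and tested without writing to /etc.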

Trigger Actions through Azure Event Grid

CycleCloud 8 generates events when certain node or cluster changes occur, and you can configure your CycleCloud installation to publish these events to Azure Event Grid. You can then create triggers for events such as Spot VM evictions or node allocation failures, and automatically drive downstream actions or alerts. For example, you could track Spot eviction rates for your clusters by VM SKU and region, and use those rates to decide when to switch to pay-as-you-go VMs.
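A downstream consumer of these events could compute eviction rates along the lines of the Python sketch below. The event shape is an assumption made for illustration: a type field with NodeStarted or SpotEvicted, plus sku and region fields. The 20% threshold is also an assumption. Check the actual CycleCloud Event Grid schema before building on this.

```python
from collections import Counter


def eviction_rates(events):
    """Spot eviction rate per (VM SKU, region).

    Each event is a hypothetical dict such as
    {"type": "NodeStarted" or "SpotEvicted", "sku": ..., "region": ...};
    the real CycleCloud Event Grid payload may differ.
    """
    started, evicted = Counter(), Counter()
    for event in events:
        key = (event["sku"], event["region"])
        if event["type"] == "NodeStarted":
            started[key] += 1
        elif event["type"] == "SpotEvicted":
            evicted[key] += 1
    return {key: evicted[key] / count for key, count in started.items() if count}


def switch_to_pay_as_you_go(rates, threshold=0.2):
    """(SKU, region) pairs whose eviction rate exceeds the (assumed) threshold."""
    return sorted(key for key, rate in rates.items() if rate > threshold)
```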

Azure is the Best Place to Run HPC Workloads

Over the past year, we have been privileged to help our partners run their mission-critical HPC workloads on Azure. CycleCloud has been used:

- by the U.S. Navy to run weather simulations for forecasting cyclone paths and intensities;
- by structural biologists to obtain one of the first glimpses of the crucial SARS-CoV-2 spike protein;
- by biomedical engineers to perform ventilator airflow simulations ahead of an FDA Emergency Use Authorization (EUA) submission.

With the CycleCloud 8.1 release, we look forward to continuing our support of the HPC community and partners by giving them familiar and easy access to scalable, true supercomputing infrastructure on Azure.

Learn More

All the new features in Azure CycleCloud 8.1

Azure CycleCloud Documentation

Azure HPC

by Contributed | Nov 17, 2020 | Azure, Microsoft, Technology

This article is contributed. See the original author and article here.

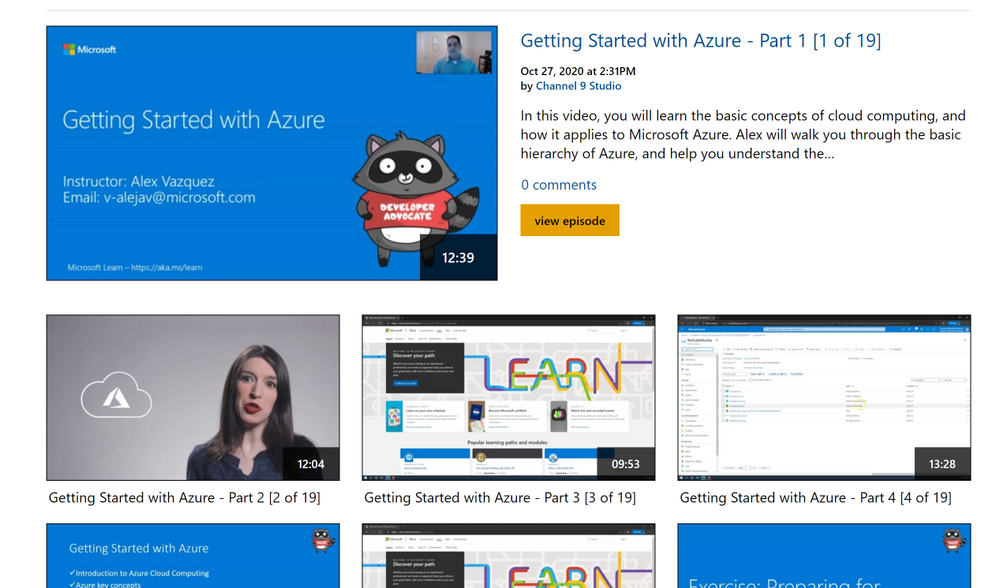

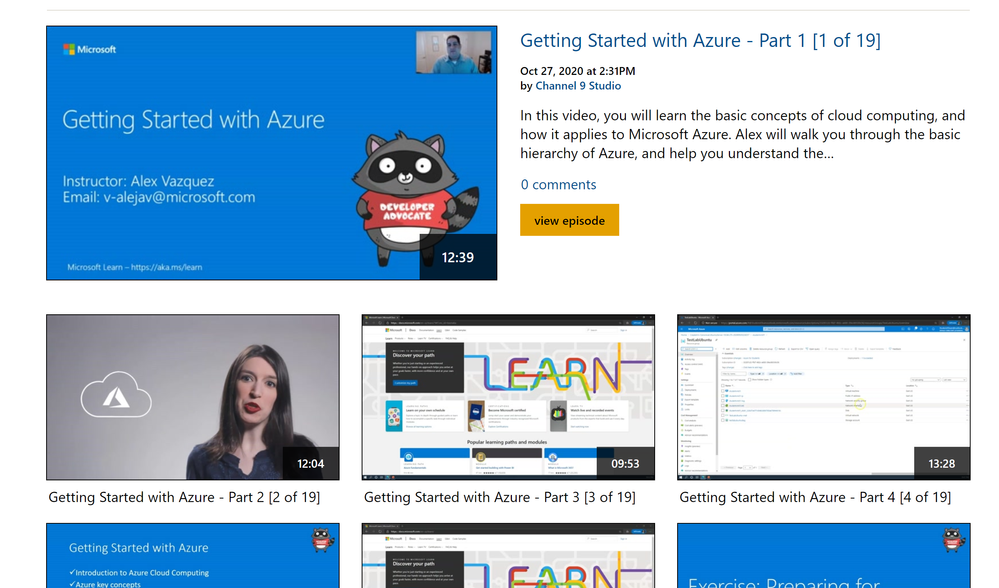

This is a series of applied Azure workshops. Participants start with the basics of the cloud and getting started on Azure, and then move on to Azure’s data and machine learning tools. The series is intended for a broad audience, including students, researchers, faculty, postdocs, staff, and anyone interested in getting started with Azure.

This series will get you started on Azure and give you hands-on experience managing data and using Azure’s data science and machine learning tools.

The Azure workshop series will focus on the following topics:

1. Getting Started with Azure – Part 1 [1 of 19]

2. Getting Started with Azure – Part 2 [2 of 19]

3. Getting Started with Azure – Part 3 [3 of 19]

4. Getting Started with Azure – Part 4 [4 of 19]

5. Getting Started with Azure – Part 5 [5 of 19]

6. Exercise – Setting up first Subscription [6 of 19]

7. Exercise – Preparing for Machine Learning [7 of 19]

8. Exercise – Preparing for Working with Data [8 of 19]

9. What is Machine Learning? [9 of 19]

10. Azure ML Tools [10 of 19]

11. Exercise – Build a ML Model with Azure [11 of 19]

12. Exercise – Deploy and Consume a ML Model [12 of 19]

13. Using Automated ML [13 of 19]

14. Exercise – Deploy your ML Model on Azure [14 of 19]

15. Benefits Working with Data [15 of 19]

16. Databases [16 of 19]

17. Unstructured Data [17 of 19]

18. Exercise: How to Transform Data [18 of 19]

19. Tools and Integrations [19 of 19]

Additional resources and links:

by Contributed | Nov 17, 2020 | Azure, Microsoft, Technology

This article is contributed. See the original author and article here.

Azure Data Explorer offers ingestion (data loading) from Event Hubs, a big-data streaming platform and event ingestion service. Event Hubs can process millions of events per second in near real-time.

The “1-click” tool now suggests the relevant table mapping definitions based on the Event Hub source, in just four clicks.

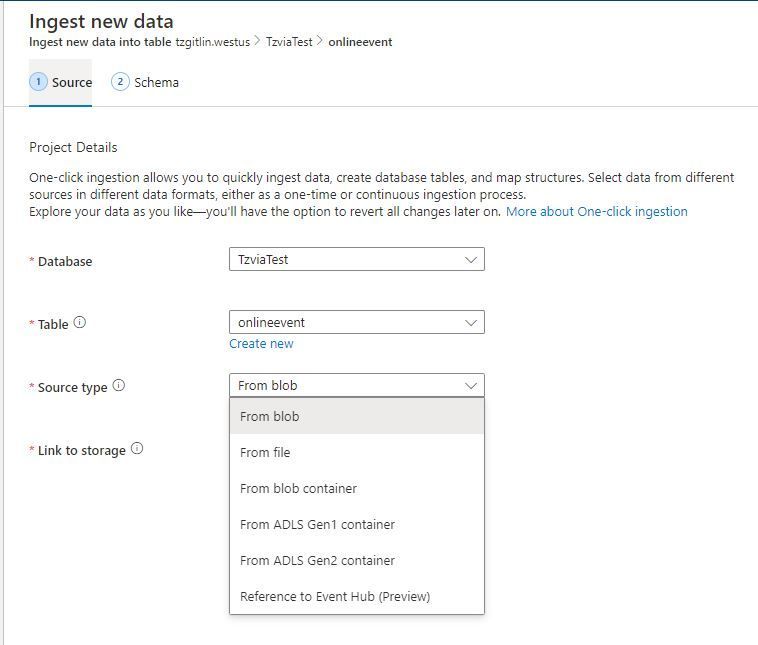

Click 1:

Click “Ingest new data” in Kusto Web Explorer (KWE) and choose Event Hub.

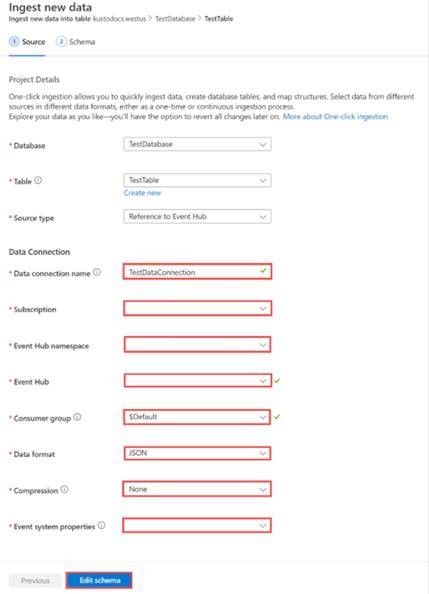

Click 2:

Connect the Event Hub as a source for continuous event ingestion into a new or existing table.

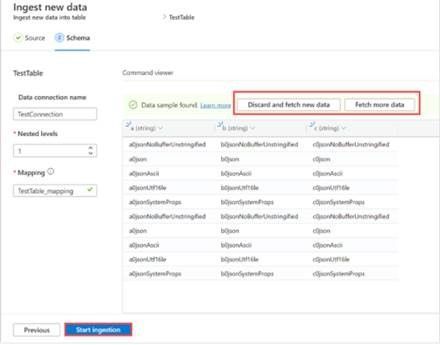

Click 3:

The 1-click tool fetches events from the Event Hub and infers their schema from the event structure. You only need to verify the inferred schema and make any necessary adjustments.
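The inference step can be approximated in a few lines of Python. This sketch uses our own heuristic, not the 1-click tool's logic:

- it maps top-level JSON values to Kusto types;
- it widens long to real when both types appear for the same column;
- it falls back to string on any other type conflict;
- it emits a .create table command and a JSON ingestion mapping.

The sketch assumes column names are simple identifiers. Names containing spaces or special characters would need quoting.

```python
import json
from datetime import datetime


def kusto_type(value):
    """Guess a Kusto column type for one non-null JSON value (heuristic only)."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "bool"
    if isinstance(value, int):
        return "long"
    if isinstance(value, float):
        return "real"
    if isinstance(value, (dict, list)):
        return "dynamic"
    try:
        # Treat ISO 8601 strings as datetimes; "Z" is rewritten for older Pythons.
        datetime.fromisoformat(str(value).replace("Z", "+00:00"))
        return "datetime"
    except ValueError:
        return "string"


def merge_types(a, b):
    """Combine the types observed for one column across events."""
    if a == b:
        return a
    if {a, b} == {"long", "real"}:
        return "real"
    return "string"  # other conflicts fall back to string in this sketch


def infer_schema(events):
    schema = {}  # column -> type, in first-seen order
    for event in events:
        for name, value in event.items():
            observed = None if value is None else kusto_type(value)
            current = schema.get(name)
            if current is None:
                schema[name] = observed
            elif observed is not None:
                schema[name] = merge_types(current, observed)
    # Columns that were only ever null default to string.
    return {name: t or "string" for name, t in schema.items()}


def create_table_command(table, schema):
    columns = ", ".join(f"{name}:{t}" for name, t in schema.items())
    return f".create table {table} ({columns})"


def json_mapping(schema):
    return json.dumps([{"column": name, "Properties": {"Path": f"$.{name}"}} for name in schema])
```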

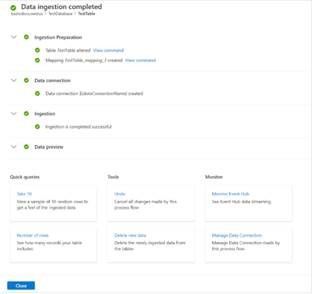

Click 4:

Click the “Start Ingestion” button to begin ingesting incoming events in either streaming or batching mode, depending on your needs.
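The difference between the two modes can be pictured as a batcher that seals a batch when an item-count, size, or time limit is reached. Streaming behaves roughly like a batch size of one. The Python below is loosely modeled on the Azure Data Explorer IngestionBatching policy (MaximumNumberOfItems, MaximumRawDataSizeMB, MaximumBatchingTimeSpan). The class names and the simplified logic are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class BatchingLimits:
    max_items: int      # compare MaximumNumberOfItems
    max_bytes: int      # compare MaximumRawDataSizeMB (bytes here for simplicity)
    max_seconds: float  # compare MaximumBatchingTimeSpan


@dataclass
class Batcher:
    limits: BatchingLimits
    pending: List[bytes] = field(default_factory=list)
    pending_bytes: int = 0
    opened_at: Optional[float] = None
    sealed: List[List[bytes]] = field(default_factory=list)

    def add(self, event: bytes, now: float) -> None:
        if self.opened_at is None:
            self.opened_at = now  # the batch window opens with its first event
        self.pending.append(event)
        self.pending_bytes += len(event)
        if (
            len(self.pending) >= self.limits.max_items
            or self.pending_bytes >= self.limits.max_bytes
            or now - self.opened_at >= self.limits.max_seconds
        ):
            self.seal()

    def seal(self) -> None:
        # Hand the current batch off for ingestion and start a new, empty one.
        if self.pending:
            self.sealed.append(self.pending)
        self.pending, self.pending_bytes, self.opened_at = [], 0, None
```

This sketch checks the time limit only when a new event arrives. A real service would also seal open batches on a timer.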

The next time you need to connect an Event Hub to Azure Data Explorer, use 1-click for an easy and accurate end-to-end ingestion definition, from table to query.

** All actions are revertible by the service (Tools)

by Contributed | Nov 17, 2020 | Azure, Microsoft, Technology

This article is contributed. See the original author and article here.

Azure Data Studio, a cross-platform client tool with hybrid and poly-cloud capabilities, now brings KQL experiences to modern data professionals. In this episode, Julie Koesmarno shows KQL magic in notebooks and the native KQL experiences, all in Azure Data Studio. You’ll also learn about the use cases for KQL experiences in Azure Data Studio and about our roadmap.

Watch on Data Exposed

Resources:

View/share our latest episodes on Channel 9 and YouTube!
