Reimagine Project Quotes with Enhanced Experience and Time Phased Estimates in Dynamics 365 Project Operations

Project quotations in Dynamics 365 Project Operations help you create and manage detailed estimates for your customers before a project begins. A project quotation includes the estimated scope of work, roles, effort, timelines, pricing, and terms, providing a clear understanding of the proposed services and associated costs.

Within a quote, quote lines represent the high-level components of work being delivered, while quote line details capture granular estimates that tie together scheduling, cost, and revenue. Each quote line is also linked to contracting models and chargeable components, so you can accurately model revenue spread, project spending, and profitability, both at the quote level and for each individual component.

We designed the recent update around three key goals:

- Elevating the overall user experience

- Leveraging new platform capabilities

- Simplifying user interactions when creating and managing quotes

A Closer Look: Quote Form Improvements in Dynamics 365 Project Operations

A significant change is the reduction of tabs within the Quote form, centralizing your quote management experience. Let’s explore the enhanced tabs:

Summary Tab: One View for All Critical Financial Insights

All related tabs for quotes are now consolidated under the Summary tab. This tab gives you a comprehensive view of your quote’s financial health, including:

- Customer budget

- Profitability

- Scheduled delivery date

- Detailed cost and revenue breakdowns over time

With this unified view, you can confidently track and manage quote details to ensure alignment with your project’s financial goals and customer commitments.

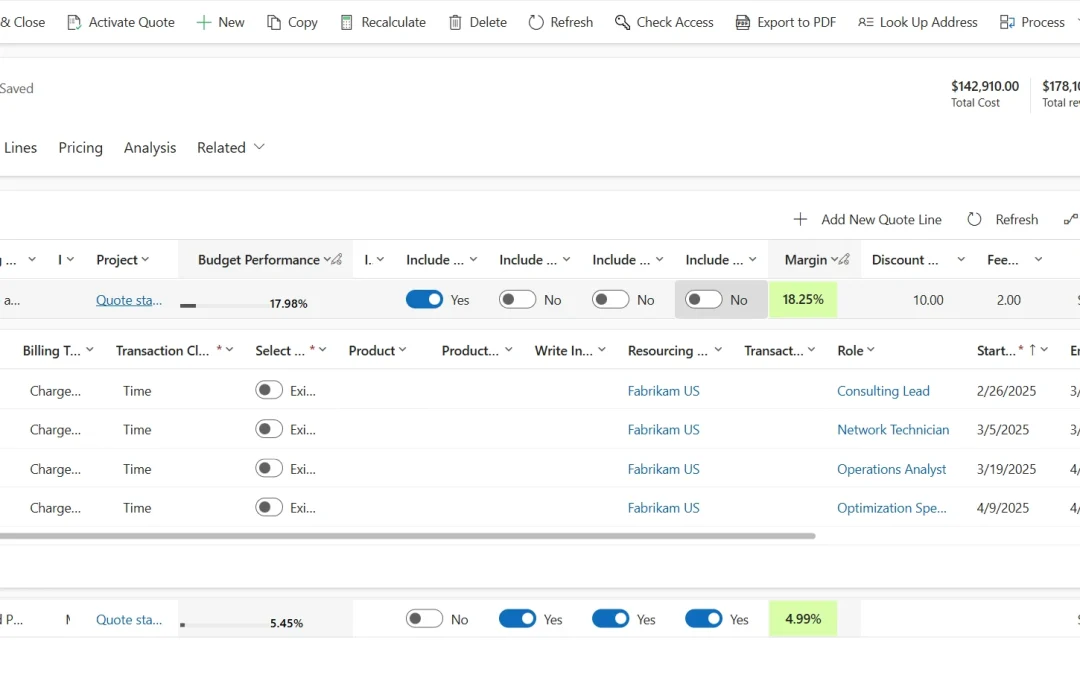

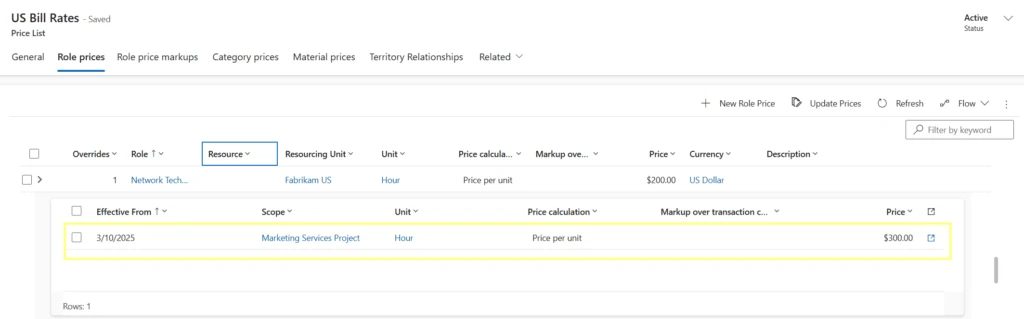

Project Lines Tab: Detailed Control Over Quote Lines and Estimates

The Project Lines tab has seen a substantial transformation and deserves special attention. This workspace now lets you manage both project quote lines and their detailed estimates within a single, easy-to-use grid view. Here’s everything you can do:

- Consolidated View with Nested Grids: View project quote lines at a glance and expand each line to see its associated quote line details in a nested, expandable grid format.

- Comprehensive Details for Every Quote Line: For each quote line, you can view critical information such as billing method, invoice schedule, associated project, and budget performance metrics (transaction classes, discounts, fees, margin percentage, and extended amount).

- Drill-Down into Quote Line Details: Expanding a quote line reveals all underlying quote line details, allowing you to closely monitor and manage granular estimates tied to the high-level component.

- Direct Actions from the Grid:

  - Create new quote lines or delete existing ones without navigating away.

  - Import project estimates to automatically generate quote line details.

  - Manually create quote line details for custom estimates right within the tab.

- Full Editing Capabilities: Select any quote line detail to edit or delete it as needed. For deeper financial insights, use the Open cost detail action to view the specific cost breakdown of each line.

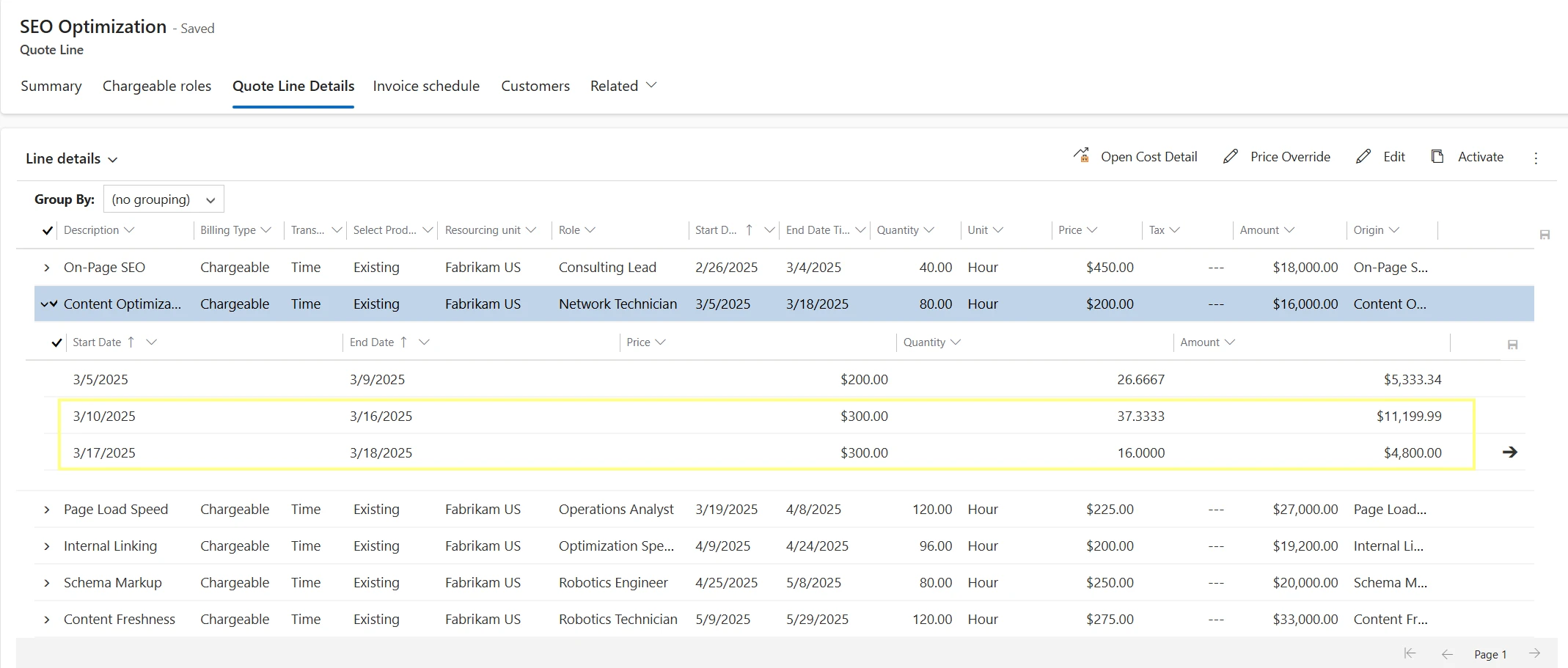

- Apply Price Overrides with Ease: Need to adjust pricing for time transaction line details? Simply:

  - Select the desired line detail.

  - Use the Price override button to change the price, then save and accept the recalculation.

  - Apply price overrides within both the Quote and Quote Line forms, ensuring flexibility and control.

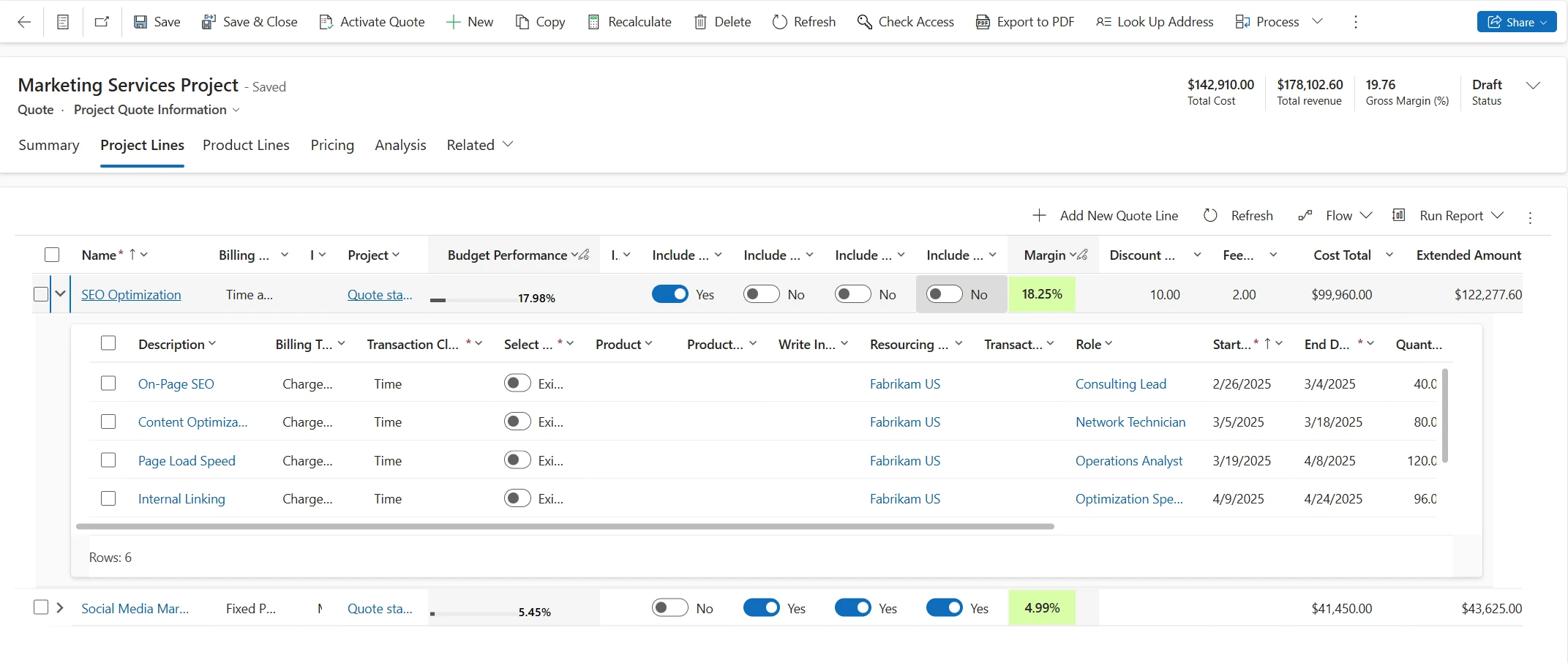

- Access Quote Line Details for Sales and Cost Prices:

  - The Quote Line Detail tab now provides two form views, Line details and Line details: Cost, through which you can analyze both sales and cost prices over time.

  - Line details: This view displays each quote line detail along with its associated sales price, with time-phased sales prices broken down by period. When you select a quote line detail, the Open cost detail button remains available and shows the related cost rate and amount in an expanded view.

  - Line details: Cost: This view gives you insight into the cost price associated with each quote line detail. The subgrid displays time-phased cost prices, helping you easily assess the cost structure of each quote line.

These enhancements provide greater visibility into both sales and cost prices, enabling more accurate pricing assessments for each quote line.

This comprehensive functionality empowers your sales and delivery teams to build accurate, competitive quotes faster while maintaining visibility into the financial levers of every project component.

Time Phasing of Project Estimates

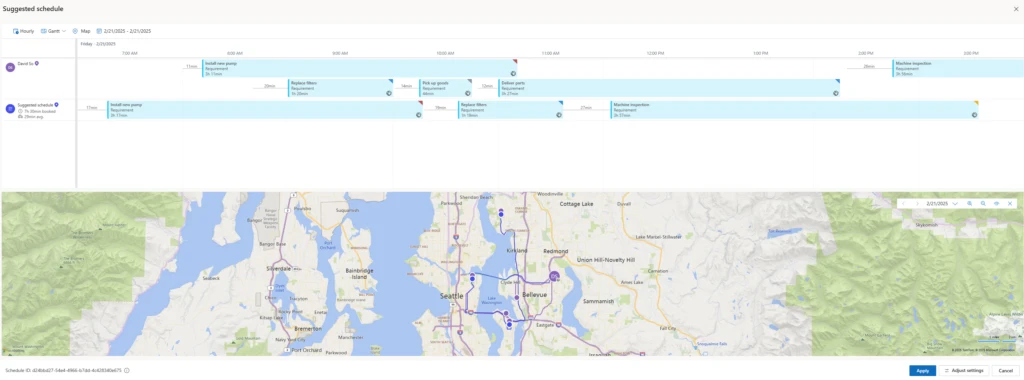

Complementing the quoting enhancements, we are also introducing Time Phasing of Project Estimates. This critical feature allows you to spread estimated costs and revenue over time, reflecting project duration and delivery milestones.

The Time phasing of prices feature provides visibility into price fluctuations over time within project quote lines. Nested quote line details now display prices broken down week by week, so you can track adjustments caused by price overrides or multiple price lists.

Across the duration of a quote line detail, the system distributes the quantities and prices by week, making it easier to identify pricing adjustments. The parent quote line detail reflects the weighted average price of all its child time-phased lines. You can create role price overrides on a quote line detail, and they are then reflected in the nested time-phased lines. Each weekly line nested under a quote line detail retains the price applicable on the first day of that week.
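To make the weekly logic concrete, here is a minimal Python sketch of how time phasing could be modeled. It is an illustration under stated assumptions, not the Project Operations implementation: the names `WeekSlice`, `price_on`, `time_phase`, and `weighted_average_price` are hypothetical, weeks are assumed to start on Monday, quantity is assumed to be spread evenly over weekdays, and the parent price is assumed to be weighted by quantity.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class WeekSlice:
    week_start: date   # first day of the weekly slice
    quantity: float    # hours allotted to this week
    unit_price: float  # price in effect on week_start


def price_on(day, price_periods):
    """Return the price from the latest period that starts on or before `day`."""
    applicable = [(start, price) for start, price in price_periods if start <= day]
    if not applicable:
        raise ValueError(f"no price is effective on {day}")
    return max(applicable, key=lambda period: period[0])[1]


def time_phase(start, end, total_quantity, price_periods):
    """Split one quote line detail into weekly slices.

    Assumptions (not confirmed by the product documentation): quantity is
    spread evenly over Monday-Friday, and weeks begin on Monday.
    """
    workdays = [
        start + timedelta(days=offset)
        for offset in range((end - start).days + 1)
        if (start + timedelta(days=offset)).weekday() < 5
    ]
    if not workdays:
        raise ValueError("the date range contains no working days")
    per_day = total_quantity / len(workdays)

    quantity_by_week = {}
    for day in workdays:
        monday = day - timedelta(days=day.weekday())
        week_start = max(start, monday)  # a partial first week starts on the line's start date
        quantity_by_week[week_start] = quantity_by_week.get(week_start, 0.0) + per_day

    # Each week keeps the price that applies on its first day.
    return [
        WeekSlice(week_start, quantity, price_on(week_start, price_periods))
        for week_start, quantity in sorted(quantity_by_week.items())
    ]


def weighted_average_price(slices):
    """Quantity-weighted average price shown on the parent line (assumed weighting)."""
    total_quantity = sum(s.quantity for s in slices)
    total_amount = sum(s.quantity * s.unit_price for s in slices)
    return total_amount / total_quantity


# Illustrative data: the price rises from 100 to 120 on Wednesday, March 19, 2025.
price_periods = [(date(2025, 1, 1), 100.0), (date(2025, 3, 19), 120.0)]
slices = time_phase(date(2025, 3, 3), date(2025, 3, 28), 160.0, price_periods)
parent_price = weighted_average_price(slices)

for s in slices:
    print(s.week_start, s.quantity, s.unit_price)
print("parent price:", parent_price)
```

In this example the price changes midweek, but the week starting March 17 keeps 100.0 because that was the price on its first day. Only the week of March 24 picks up 120.0, and the parent line's weighted average comes out to 105.0.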

What Does This Mean for You?

With these enhancements, Dynamics 365 Project Operations offers:

- Simplified workflows for faster quote creation

- Full visibility into financial details at every stage

- Better forecasting with time-phased estimates, enabling accurate tracking of sales and cost price fluctuations and role price adjustments over time

- Increased efficiency with fewer clicks and a modern UI

- Smarter decision-making with real-time KPIs

These improvements to project quotes in Dynamics 365 are designed to help you win more deals, deliver more predictable project outcomes, and maximize profitability.

Call to Action

We encourage you to explore these enhancements and see firsthand how they can transform your project sales and estimation process. Experience the streamlined quoting process in Dynamics 365 Project Operations and unlock better control over your project estimates. Start building detailed, accurate, and customer-ready quotes with ease. Dive into the enhanced Quote form, explore time-phased estimates, and empower your teams to make informed, confident decisions. Ready to elevate your quoting experience? Explore the new capabilities today!

Learn More

We are continually enhancing our features. To learn more about project quotations, visit Project quotations new experience. To learn more about time phasing of estimates, visit Estimate a project quote line.

The post Reimagine Project Quotes with Enhanced Experience and Time Phased Estimates in Dynamics 365 Project Operations appeared first on Microsoft Dynamics 365 Blog.
