by Contributed | Jun 7, 2024 | Technology

This article is contributed. See the original author and article here.

Navigating the intricate world of technology requires more than just expertise; it demands the ability to share that knowledge effectively. This article embarks on a journey to uncover the most effective strategies for crafting presentations tailored for the tech-savvy audience. We will dissect the elements that make a presentation not just informative, but memorable and engaging. From leveraging the latest tools to understanding the nuances of audience engagement, we aim to provide a comprehensive guide that empowers tech professionals to deliver their message with precision and impact. We’ll discuss these strategies with U.S. M365 MVP Melissa Marshall.

MVP Melissa Marshall

Share with us your journey into becoming a top-level presenter.

I started my career as a professor at Penn State University, where I taught public speaking courses for engineering students. While I was there, I had the good fortune to give a TED Talk entitled “Talk Nerdy to Me” about the importance of science communication, and that really launched my ideas on scientific presentations into global prominence. I began to receive additional invitations to speak at conferences and provide training workshops at companies and institutions. In 2015, I went full-time into my speaking, training, and consulting business, Present Your Science. I now help the leading tech professionals and companies in the world present their work in a meaningful and compelling way that inspires stakeholders to take action.

Could you please share with us the three main tools to improve presentations?

- Be audience-centric. Your ability to be successful as a speaker depends upon your ability to make your audience successful.

TIP: Be an interpreter of your work, not a reporter. Always connect each piece of technical info to a “So what?” point.

- Filter and Focus. When you try to share everything, you share nothing.

TIP: Start your planning with the ‘view’ you want your audience to have at the end of the talk. Then ask yourself “What would they need to know in order to get there?”

- Show Your Science. Your slides should do something for you that your words cannot. This means make your slides VISUAL not VERBAL.

TIP: Avoid bullet points (seriously!). Have a brief take-away message at the top of each slide and support it with visual evidence.

What are the main challenges that presenters face during a presentation?

Presenters often allow the “status quo” of how slides are typically designed in their industry or at their company to dictate their choices. Unfortunately, this status quo is often rooted in text-heavy, bulleted slides, which are not successful for an audience. Instead of designing slides the way they have always seen it done, presenters need to use a more strategic, evidence-based approach to slide design. That’s why I worked with the MS PowerPoint team to create a slide design template for technical presenters. The template is fully customizable, but it leads the presenter toward a design strategy that focuses on takeaway messages supported by visual evidence, which is a big step in the right direction for technical slides that are more successful for an audience.

Also, presenters often struggle to filter their details in presentations, and they overwhelm the audience with too much information. You can improve this by beginning your preparation with the single most critical message you must convey, and then including only information that relates to that message.

When sharing data, it’s important to be very descriptive about not just what the data is, but why the data is significant. It is easy to get in the habit of simply sharing the information, without providing context for it.

How are you using AI today to help you with presentations?

I love sharing PowerPoint Speaker Coach with my clients. This is an awesome AI-Driven tool that provides the speaker with private feedback on presentation elements like rate of speaking, emphasis, verbal fillers, and inclusive language. It’s a great way to add some structure and purpose to practicing a presentation.

What advice would you have for tech professionals beginning their journey presenting?

Look for more opportunities to present! Most people have some anxiety associated with speaking in front of others, which causes them to avoid those situations as much as possible. However, the answer to becoming more comfortable speaking is to simply DO IT MORE. It’s counterintuitive to what we feel like we want to do, but if you embrace the discomfort of presenting more often, you will find quite quickly that you are all of a sudden becoming more comfortable and confident.

In summary, the journey through the landscape of technical presentations is one of continuous learning and adaptation. The strategies discussed here provide a roadmap for creating presentations that not only convey complex information but also engage and inspire the tech community. By weaving together a narrative that resonates with the audience, utilizing visual aids to clarify and emphasize key points, and delivering with confidence and passion, presenters can leave a lasting impact. As technology continues to advance, so must our approach to sharing it. Let this article serve as a catalyst for innovation in your presentation techniques, empowering you to illuminate the path forward in the ever-changing world of technology.

by Contributed | Jun 6, 2024 | Technology

Hi!

I’ve written several posts about how to use local Large Language Models with C#.

But what about Small Language Models?

Well, today’s demo is an example of how to use SLMs with ONNX. So let’s start with a quick intro to Phi-3, ONNX, and why use ONNX. And then, let’s showcase some interesting code samples and resources.

In the Phi-3 C# Labs sample repo we can find several samples, including a console application that loads a Phi-3 Vision model with ONNX, then analyzes and describes an image.

Introduction to Phi-3 Small Language Model

The Phi-3 Small Language Model (SLM) represents a groundbreaking advancement in AI, developed by Microsoft. It’s part of the Phi-3 family, which includes the most capable and cost-effective SLMs available today. These models outperform others of similar or even larger sizes across various benchmarks, including language, reasoning, coding, and math tasks. The Phi-3 models, including the Phi-3-mini, Phi-3-small, and Phi-3-medium, are designed to be instruction-tuned and optimized for ONNX Runtime, ensuring broad compatibility and high performance.

You can learn more about Phi-3 in:

Introduction to ONNX

ONNX, or Open Neural Network Exchange, is an open-source format that allows AI models to be portable and interoperable across different frameworks and hardware. It enables developers to use the same model with various tools, runtimes, and compilers, making it a cornerstone for AI development. ONNX supports a wide range of operators and offers extensibility, which is crucial for evolving AI needs.

Why Use ONNX for Local AI Development

Local AI development benefits significantly from ONNX due to its ability to streamline model deployment and enhance performance. ONNX provides a common format for machine learning models, facilitating the exchange between different frameworks and optimizing for various hardware environments.

For C# developers, this is particularly useful because we have a set of libraries specifically created to work with ONNX models. For example:

C# ONNX and Phi-3 and Phi-3 Vision

| Project | Description | Location |
|---|---|---|
| LabsPhi301 | A sample project that uses a local Phi-3 model to answer a question. The project loads a local ONNX Phi-3 model using the Microsoft.ML.OnnxRuntime libraries. | .\src\LabsPhi301 |
| LabsPhi302 | A sample project that implements a console chat using Semantic Kernel. | .\src\LabsPhi302 |
| LabsPhi303 | A sample project that uses a local Phi-3 Vision model to analyze images. The project loads a local ONNX Phi-3 Vision model using the Microsoft.ML.OnnxRuntime libraries. | .\src\LabsPhi303 |
| LabsPhi304 | A sample project that uses a local Phi-3 Vision model to analyze images. The project loads a local ONNX Phi-3 Vision model using the Microsoft.ML.OnnxRuntime libraries. The project also presents a menu with different options to interact with the user. | .\src\LabsPhi304 |

To run the projects, follow these steps:

Clone the repository to your local machine.

Open a terminal and navigate to the desired project. For example, let’s run LabsPhi301.

Run the project with the command

The sample project asks for user input and replies using the local model.

The running demo is similar to this one:

Sample Console Application to use an ONNX model

Let’s take a look at the first demo application. The following code snippet is from /src/LabsPhi301/Program.cs. The main steps to use a model with ONNX are:

- The Phi-3 model, stored in the `modelPath`, is loaded into a `Model` object.

- This model is then used to create a `Tokenizer`, which will be responsible for converting our text inputs into a format that the model can understand.

And this is the chatbot implementation.

- The chatbot operates in a continuous loop, waiting for user input.

- When a user types a question, the question is combined with a system prompt to form a full prompt.

- The full prompt is then tokenized and passed to a `Generator` object.

- The generator, configured with specific parameters, generates a response one token at a time.

- Each token is decoded back into text and printed to the console, forming the chatbot’s response.

- The loop continues until the user decides to exit by entering an empty string.

```csharp
using System;
using Microsoft.ML.OnnxRuntimeGenAI;

// Folder that contains the local Phi-3 ONNX model files
var modelPath = @"D:\phi3\models\Phi-3-mini-4k-instruct-onnx\cpu_and_mobile\cpu-int4-rtn-block-32";

// Load the model and create the tokenizer that converts text to and from tokens
using var model = new Model(modelPath);
using var tokenizer = new Tokenizer(model);

var systemPrompt = "You are an AI assistant that helps people find information. Answer questions using a direct style. Do not share more information than requested by the user.";

// chat start
Console.WriteLine("Ask your question. Type an empty string to exit.");

// chat loop
while (true)
{
    // Get user question
    Console.WriteLine();
    Console.Write("Q: ");
    var userQ = Console.ReadLine();
    if (string.IsNullOrEmpty(userQ))
    {
        break;
    }

    // show phi3 response
    Console.Write("Phi3: ");

    // Wrap the system prompt and the question in the Phi-3 chat markers
    var fullPrompt = $"<|system|>{systemPrompt}<|end|><|user|>{userQ}<|end|><|assistant|>";
    var tokens = tokenizer.Encode(fullPrompt);

    // Configure the generation for this question
    using var generatorParams = new GeneratorParams(model);
    generatorParams.SetSearchOption("max_length", 2048);
    generatorParams.SetSearchOption("past_present_share_buffer", false);
    generatorParams.SetInputSequences(tokens);

    // Generate the answer one token at a time and print each token as it arrives
    using var generator = new Generator(model, generatorParams);
    while (!generator.IsDone())
    {
        generator.ComputeLogits();
        generator.GenerateNextToken();
        var outputTokens = generator.GetSequence(0);
        var newToken = outputTokens.Slice(outputTokens.Length - 1, 1);
        var output = tokenizer.Decode(newToken);
        Console.Write(output);
    }
    Console.WriteLine();
}
```
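
The step that combines the user question with the system prompt can be isolated into a small helper. The following is a minimal sketch, not code from the sample repo: `BuildPhi3Prompt` is a hypothetical name, and the sketch assumes the Phi-3 instruct chat markers (`<|system|>`, `<|user|>`, `<|assistant|>`, and `<|end|>`) described on the Phi-3 model card.

```csharp
using System;

// Hypothetical helper (not part of the sample repo).
// Assumption: the model expects the Phi-3 instruct chat markers.
string BuildPhi3Prompt(string systemText, string userQuestion) =>
    $"<|system|>{systemText}<|end|><|user|>{userQuestion}<|end|><|assistant|>";

var prompt = BuildPhi3Prompt(
    "You are an AI assistant that helps people find information.",
    "What is ONNX?");

// The prompt ends with the assistant marker, so the model continues with its answer.
Console.WriteLine(prompt);
```

Keeping the prompt format in one place makes it easier to adjust if a different model or chat template is used later.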

This is a great example of how you can leverage the power of Phi-3 and ONNX in a C# application to create an interactive AI experience. Please take a look at the other scenarios and if you have any questions, we are happy to receive your feedback!

Best

Bruno Capuano

Note: Part of the content of this post was generated by Microsoft Copilot, an AI assistant.

by Contributed | Jun 5, 2024 | Dynamics 365, Microsoft 365, Technology

Azure Application Insights is now our comprehensive solution for end-to-end conversation diagnostics. As part of this advancement, we are phasing out unified routing diagnostics and integrating its capabilities into Application Insights.

Key dates

- Disclosure date: May 9, 2024

We sent communications to affected customers that we are deprecating unified routing diagnostics in Dynamics 365 Customer Service.

- End of support: July 1, 2024

After this date, no new diagnostics records will be generated for routed conversations or records.

- End of life: July 15, 2024

After this date, unified routing diagnostics will be taken out of service.

Next steps

We strongly encourage customers to leverage Azure Application Insights, which will be enriched with all the conversation and routing diagnostics related events. Application Insights offers a flexible and cost-effective alternative with the added advantage of customization to meet business needs. We aim to iteratively improve diagnostic capabilities of conversation lifecycle events. This ensures reliability and alignment with our commitment to cost-efficiency and user-centric innovation. Learn more about conversation diagnostics in Azure Application Insights.

Please contact your Success Manager, FastTrack representative, or Microsoft Support if you have any additional questions.

The post Transition from unified routing diagnostics to Azure Application Insights appeared first on Microsoft Dynamics 365 Blog.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.

by Contributed | Jun 5, 2024 | Technology

There’s no question about it: People are the make-or-break element of a high-performing IT organization. To stay competitive, organizations must ensure that they have the right people with the right skills in the right positions. But with accelerating tech advancements, doing so has never been tougher.

IDC data reveals that IT skills shortages are widening in both scope and severity. According to 811 IT leaders responding to the IDC 2024 IT Skills Survey (January 2024), organizations are experiencing a wide range of negative impacts relating to a dearth of enterprise skills. Nearly two-thirds (62%) of IT leaders tell IDC that a lack of skills has resulted in missed revenue growth objectives. More than 60% say that it has led to quality problems and a loss of customer satisfaction overall.

As a result, IDC now predicts that by 2026, more than 90% of organizations worldwide will feel similar pain, amounting to some $5.5 trillion in losses caused by product delays, stalled digital transformation journeys, impaired competitiveness, missed revenue goals, and product quality issues.

To maintain competitiveness, organizations everywhere must improve IT training. They must go beyond just putting more modern IT training platforms and learning systems in place, however. Rather, they must invest in instilling and promoting a culture of learning within the organization — one that values ongoing, continuous learning and rewards the accomplishments of learners. Vendor- and technology-based credentials have long been core to organizational training efforts, and for good reason: They provide organizations with a way to ascertain important technical skills. And full-vendor credentials still matter, of course. But IDC data shows growing excitement about badges and micro-credentials as a means of verifying more specific scenario- or project-based skills.

When less is more

Without a doubt, vendor certifications remain a critical tool for hiring new professionals and upskilling existing ones. IDC data reveals that they can enhance career mobility, engagement, and salary. In fact, according to IDC’s 2024 Full and Micro Credential Survey (March 2024), some 70% of IT leaders say that certifications are important when hiring, regardless of how experienced candidates are in their careers. But organizations these days also find specific scenario- or project-based micro-credentials to be increasingly appealing as a complement to full-fledged vendor credentials.

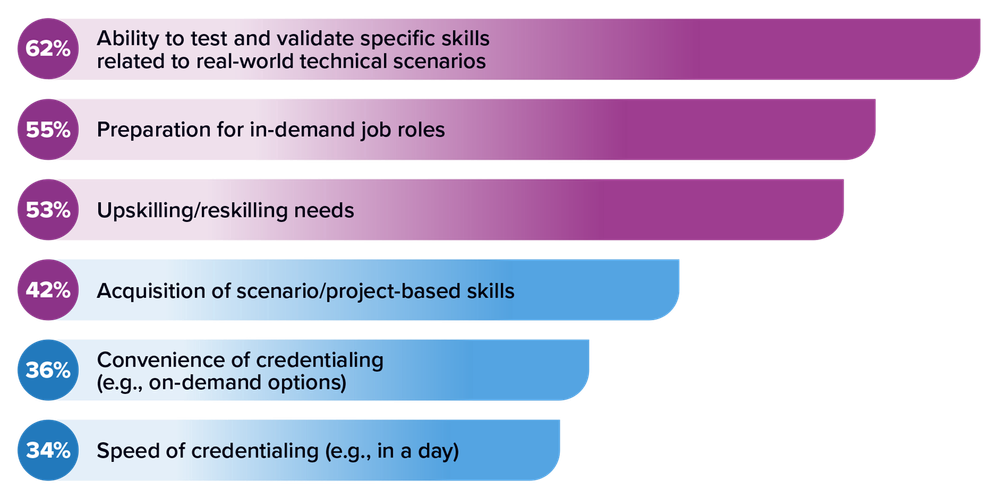

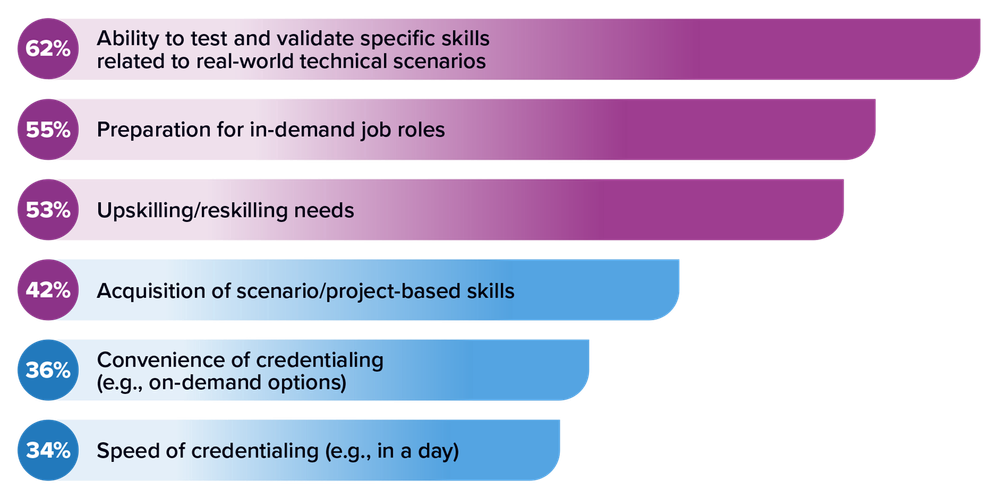

To this point, more than 60% of organization leaders tell IDC that they use micro-credentials to test and validate specific skills related to real-world technical scenarios. They say that it also helps them to prepare themselves and their employees for in-demand job roles (55%) and to fulfill upskilling and reskilling needs (53%).

Q. What are the main factors driving the consideration of the use of micro-credentials?

Source: IDC 2024 Digital Skilling Survey, March 2024

Moving forward

As the IT skills shortage continues to expand and worsen, IDC predicts that global IT leaders will face increasing pressure to get the right people with the right skills into the right roles. Adding to the pressure is the rapidly growing demand for AI skills. According to IDC’s Future Enterprise and Resiliency Survey, 58% of CEOs worldwide are deeply concerned about their ability to deliver on planned AI initiatives over the next 12 months.

A full-featured IT training program that lets organizations and employees hone the skills they have makes all the difference. Credentials, whether full or project-based, are a key part of any thoughtful IT training and continuous skilling initiative. Full credentials and micro-credentials together allow organizations to determine where skill gaps lie and create a plan of action to skill up employees on the technologies and projects on which they rely.

Organizations should focus on involving key stakeholders across departments to get their buy-in and partner with established credentialing organizations to add credibility. They should design clear career pathways that show how reskilling with micro-credentials and certifications can align with company goals and lead to faster promotions. They should foster a culture of continuous learning to keep skills current as well as update the program with industry trends and emerging technologies to maintain its relevance. Finally, they should regularly assess the program’s effectiveness in closing the skills gap and adjust as necessary.

Investing in continuing education for your employees just makes plain sense. IDC’s research has long shown that companies that invest in training are better able to retain their best, most talented employees.

For more information, read IDC InfoBrief, Skilling Up! Leveraging Full and Micro-Credentials for Optimal Skilling Solutions

by Contributed | Jun 5, 2024 | AI, Business, Microsoft 365, Technology, Work Trend Index

Discover some key insights from the Work Trend Index report that can impact small and medium-sized business leaders, as well as the actions you can take to prepare your organization for AI and better leverage its benefits so you can maintain your competitive edge.

The post Workers worldwide are embracing AI, especially in small and medium-size businesses appeared first on Microsoft 365 Blog.

by Contributed | Jun 4, 2024 | Technology

More often than we want to admit, customers come to us with cases where a Consumption logic app was unintentionally deleted. Although you can somewhat easily recover a deleted Standard logic app, you can’t get the run history back, and the triggers don’t use the same URL. For more information, see GitHub – Logic-App-STD-Advanced Tools.

However, for a Consumption logic app, this process is much more difficult and might not always work correctly. The definition for a Consumption logic app isn’t stored in any accessible Azure storage account, nor can you run PowerShell cmdlets for recovery. So, we highly recommend that you have a repository or backup to store your current work before you continue. By using Visual Studio, DevOps repos, and CI/CD, you have the best tools to keep your code updated and your development work secure for a disaster recovery scenario. For more information, see Create Consumption workflows in multitenant Azure Logic Apps with Visual Studio Code.

Despite these challenges, one possibility exists for you to retrieve the definition, but you can’t recover the workflow run history nor the trigger URL. A few years ago, the following technique was documented by one of our partners, but was described as a “recovery” method:

Recovering a deleted Logic App with Azure Portal – SANDRO PEREIRA BIZTALK BLOG (sandro-pereira.com)

We’re publishing the approach now as a blog post, but with the disclaimer that this method doesn’t completely recover your Consumption logic app. It only retrieves the definition of the lost or deleted resource. The associated records aren’t restored because they are permanently destroyed, as the warnings describe when you delete a Consumption logic app in the Azure portal.

Recommendations

We recommend applying locks to your Azure resources and having some form of Continuous Integration/Continuous Deployment (CI/CD) solution in place. Locking your resources is extremely important and easy, not only to limit user access, but also to protect resources from accidental deletion.

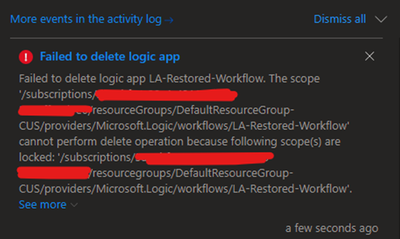

To lock a logic app, on the resource menu, under Settings, select Locks. Create a new lock, and select either Read-only or Delete to prevent edit or delete operations. If anyone tries to delete the logic app, either accidentally or on purpose, they get the following error:

For more information, see Protect your Azure resources with a lock – Azure Resource Manager.

Limitations

- If the Azure resource group is deleted, the activity log is also deleted, which means that no recovery is possible for the logic app definition.

- Run history won’t be available.

- The trigger URL will change.

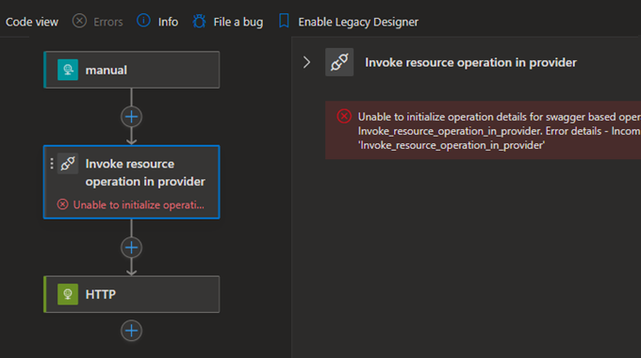

- Not all API connections are restored, so you might have to recreate them in the workflow designer.

- If API connections are deleted, you must recreate new API connections.

- If a certain amount of time has passed, it’s possible that changes are no longer available.

Procedure

- In the Azure portal, browse to the resource group that contained your deleted logic app.

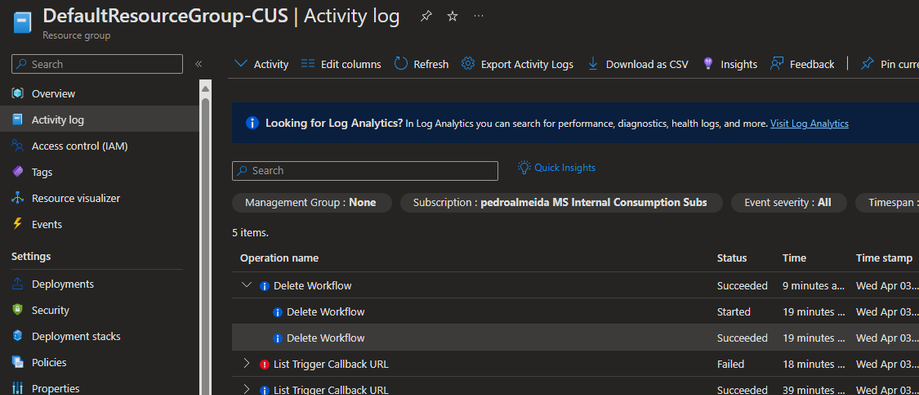

- On the resource group menu, select Activity log.

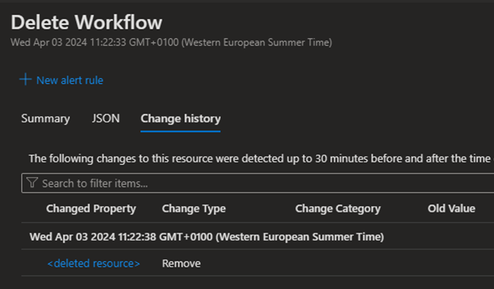

- In the operations table, in the Operation name column, find the operation named Delete Workflow, for example:

- Select the Delete Workflow operation. On the pane that opens, select the Change history tab. This tab shows what was modified, for example, versioning in your logic app.

As previously mentioned, if the Changed Property column doesn’t contain any values, retrieving the workflow definition is no longer possible.

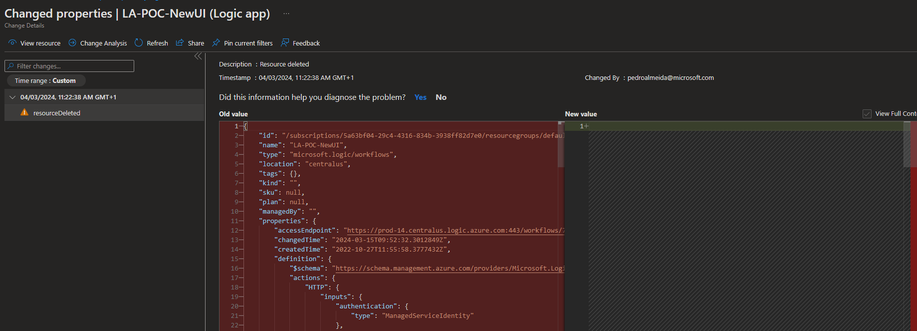

- In the Changed Property column, select .

You can now view your logic app workflow’s JSON definition.

- Copy this JSON definition into a new logic app resource.

- No button exists to restore this definition, so you have to copy it manually. The workflow should then load without problems.

- You can also use this JSON workflow definition to create a new ARM template and deploy the logic app to an Azure resource group with the new connections or by referencing the previous API connections.

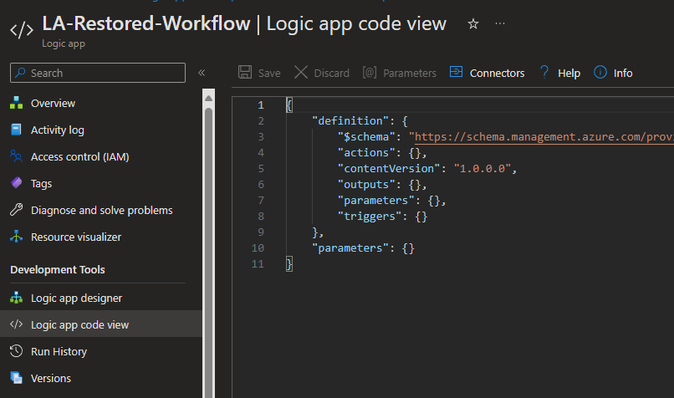

- If you’re restoring this definition in the Azure portal, you must go to the logic app’s code view and paste your definition there.

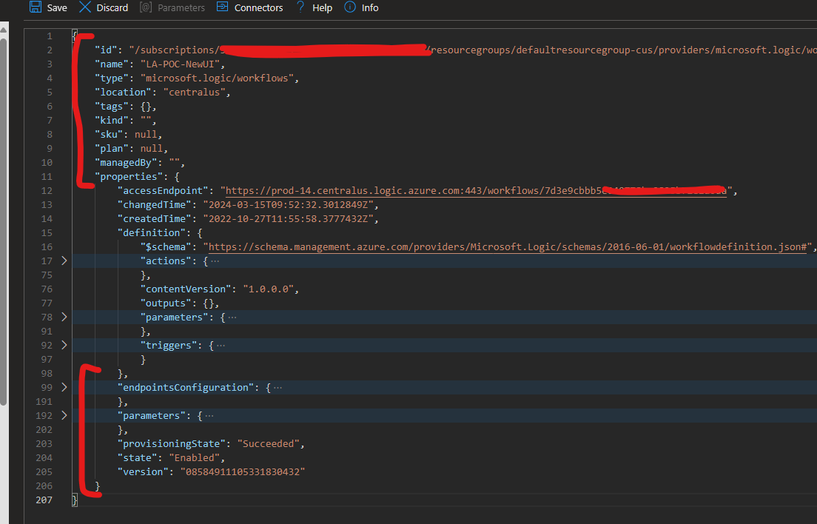

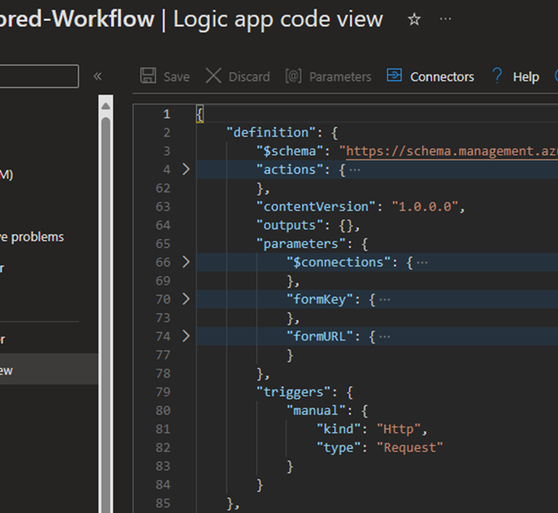
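
If you choose the ARM template route, the recovered JSON can be wrapped in a template programmatically. The following is a minimal sketch under stated assumptions: `BuildLogicAppTemplate`, the resource name, and the region are hypothetical, the sample inputs are placeholders, and the template uses a single `Microsoft.Logic/workflows` resource with API version `2019-05-01`. Review the generated template before you deploy it.

```csharp
using System;
using System.Text.Json;
using System.Text.Json.Nodes;

// Hypothetical helper: wraps a recovered workflow definition and its parameters
// in a minimal ARM template that contains one Microsoft.Logic/workflows resource.
JsonObject BuildLogicAppTemplate(
    string logicAppName, string location, JsonNode workflowDefinition, JsonNode workflowParameters)
{
    return new JsonObject
    {
        ["$schema"] = "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        ["contentVersion"] = "1.0.0.0",
        ["resources"] = new JsonArray(
            new JsonObject
            {
                ["type"] = "Microsoft.Logic/workflows",
                ["apiVersion"] = "2019-05-01",
                ["name"] = logicAppName,
                ["location"] = location,
                ["properties"] = new JsonObject
                {
                    ["state"] = "Enabled",
                    ["definition"] = workflowDefinition,
                    ["parameters"] = workflowParameters
                }
            })
    };
}

// Placeholder inputs; replace them with the objects recovered from the activity log.
var sampleDefinition = JsonNode.Parse(@"{ ""triggers"": {}, ""actions"": {} }")!;
var sampleParameters = JsonNode.Parse(@"{ ""$connections"": { ""value"": {} } }")!;

var template = BuildLogicAppTemplate(
    "recovered-logic-app", "westeurope", sampleDefinition, sampleParameters);

Console.WriteLine(template.ToJsonString(new JsonSerializerOptions { WriteIndented = true }));
```

The `$connections` value is left empty here on purpose. Fill it with references to new or existing API connections, depending on which ones survived the deletion.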

The complete JSON definition contains all the workflow’s properties, so if you directly copy and paste everything into code view, the portal shows an error because you’re copying the entire resource definition. However, in code view, you only need the workflow definition, which is the same JSON that you’d find on the Export template page.

So, you must copy the definition JSON object’s contents and the parameters object’s contents, paste them into the corresponding objects in your new logic app, and save your changes.
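
The copy step above can also be scripted. The following is a minimal sketch, assuming that the JSON copied from the Change history tab is the full resource and that the workflow sits under `properties.definition` and `properties.parameters`. The helper names and the sample JSON are hypothetical and serve only as an illustration.

```csharp
using System;
using System.Text.Json;
using System.Text.Json.Nodes;

// Returns a detached copy of a node, or an empty object when the node is missing.
JsonNode CloneOrEmpty(JsonNode? node) =>
    node is null ? new JsonObject() : JsonNode.Parse(node.ToJsonString())!;

// Hypothetical helper: keeps only the two objects that code view expects.
// Assumption: they are stored under the "properties" object of the resource JSON.
JsonObject ExtractCodeViewJson(string resourceJson)
{
    JsonNode? properties = JsonNode.Parse(resourceJson)?["properties"];
    if (properties is null)
    {
        throw new ArgumentException("The JSON has no 'properties' object.", nameof(resourceJson));
    }

    return new JsonObject
    {
        ["definition"] = CloneOrEmpty(properties["definition"]),
        ["parameters"] = CloneOrEmpty(properties["parameters"])
    };
}

// Placeholder for the JSON copied from the activity log.
var fullResourceJson = @"{
  ""name"": ""my-logic-app"",
  ""type"": ""Microsoft.Logic/workflows"",
  ""properties"": {
    ""state"": ""Enabled"",
    ""definition"": { ""triggers"": {}, ""actions"": {} },
    ""parameters"": { ""$connections"": { ""value"": {} } }
  }
}";

var codeView = ExtractCodeViewJson(fullResourceJson);
Console.WriteLine(codeView.ToJsonString(new JsonSerializerOptions { WriteIndented = true }));
```

The output contains only the `definition` and `parameters` objects, which is the shape that you paste into code view. Resource-level properties such as `name` and `type` are dropped.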

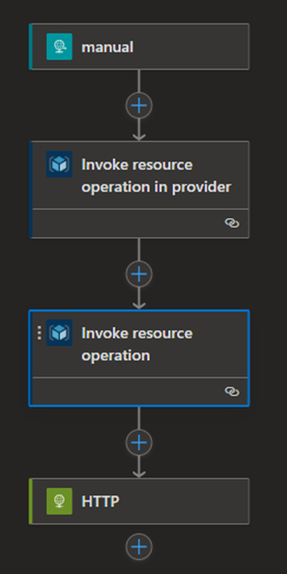

In this scenario, the API connection for the Azure Resource Manager connector was lost, so we have to recreate the connection by adding a new action. If the connection ID is the same, the action should re-reference the connection.

After we save and refresh the designer, the previous operation loads successfully, and nothing is lost. Now you can delete the actions that you created to reprovision the connections, and you’re all set to go.

We hope that this guidance helps you mitigate such occurrences and speeds up your work.
