This article is contributed. See the original author and article here.

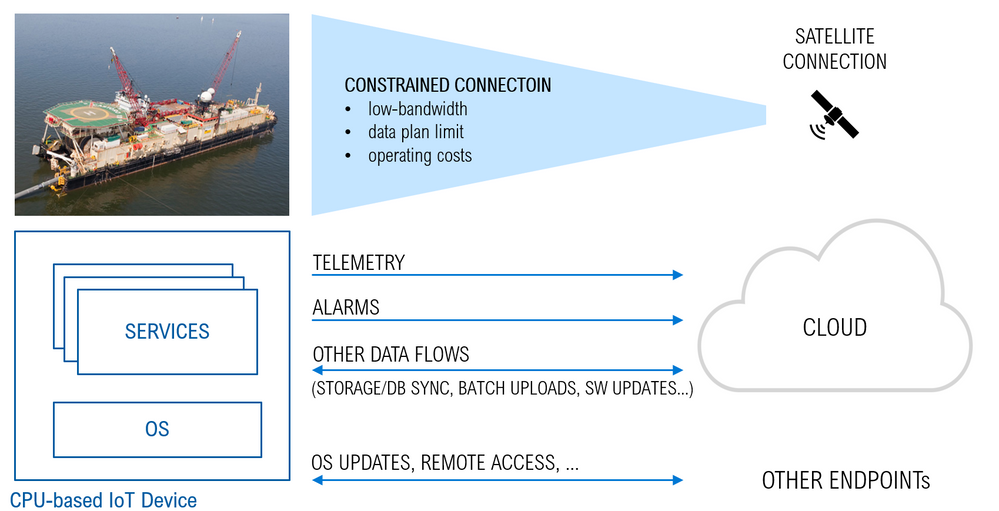

In some deployments, IoT devices connect to the cloud over constrained, metered links such as LPWAN, narrowband cellular, or satellite connections.

Although such connections are sometimes the only viable option (think of a satellite link for an offshore vessel), they have drawbacks:

- lower bandwidth, which also increases latency

- higher operating costs

- data plans with tighter limits on traffic volume

These constraints are rarely an issue for simple MCU-based devices that send a few data points per day. They can be a major concern, or even a showstopper, for CPU-based devices running a full-fledged operating system (OS) along with the services and workloads the use case requires. Such devices generate significantly more traffic, made up of several data flows with different requirements for frequency, volume, and latency. For example:

- telemetry: a continuous stream whose volume ranges from low to high depending on the use case; latency matters but is usually not critical

- high-priority events such as alarms: sporadic and low-volume, but latency-critical

- bulk transfers such as file uploads, database syncs, OS and software updates, and container image pulls: periodic or on-demand, usually very high volume, with latency largely irrelevant

Carrying these data flows over a constrained, metered connection poses several risks. The traffic volume can easily exceed the plan's limit and drive up operating costs, and the low bandwidth makes it hard to meet the latency requirements of high-priority messages such as alarms.
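To put these risks into numbers, the Python sketch below estimates two things: how long a bulk transfer ties up a link, and how much monthly volume a modest telemetry stream consumes. The link rate, update size, message size, and reporting interval are hypothetical values chosen for illustration. Protocol overhead is ignored, so real figures would be higher.

```python
"""Back-of-the-envelope link budget for a constrained connection.

All figures are hypothetical examples; protocol overhead (TCP/IP, TLS,
MQTT/AMQP framing) is ignored, so real numbers will be higher.
"""


def transfer_seconds(size_bytes: int, link_bps: int) -> float:
    """Time needed to push `size_bytes` through a link of `link_bps` bits/s."""
    return size_bytes * 8 / link_bps


def monthly_volume_bytes(msg_bytes: int, interval_s: float, days: int = 30) -> float:
    """Traffic generated by one `msg_bytes` message every `interval_s` seconds."""
    messages = days * 24 * 3600 / interval_s
    return messages * msg_bytes


if __name__ == "__main__":
    link_bps = 256_000          # hypothetical 256 kbit/s link
    update_bytes = 50_000_000   # hypothetical 50 MB OS update

    # Without prioritization, an alarm queued behind the update waits this long.
    wait = transfer_seconds(update_bytes, link_bps)
    print(f"alarm delayed by ~{wait / 60:.0f} min")

    # A 1 kB telemetry message every 10 s over a 30-day month.
    volume = monthly_volume_bytes(1_000, 10)
    print(f"telemetry volume ~{volume / 1e6:.0f} MB/month")
```

With these inputs, the script reports an alarm delay of about 26 minutes and roughly 259 MB of telemetry per month.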

Two mitigations address these risks:

- reducing the traffic volume, to stay within data plan limits, lower operating costs, and shrink the bandwidth footprint

- prioritizing data flows, to meet latency requirements

Let’s see how to implement such mitigation strategies.

Reducing the traffic volume

Edge computing is an effective way to reduce the overall traffic volume. Raw data is processed and analyzed close to its source, and only the resulting insights are sent over the connection. Edge computing brings other benefits as well, such as low-latency control at the edge, better security and reliability through decentralization, and easier privacy and regulatory compliance.
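To illustrate sending insights instead of raw data, the sketch below collapses one hour of 1 Hz readings into per-minute min/mean/max summaries and compares the JSON payload sizes. The sample rate, window length, summary fields, and JSON encoding are all assumptions. A real edge workload would apply whatever analysis its use case calls for.

```python
"""Edge-side aggregation: send per-window summaries instead of raw samples.

Sample rate, window length, and summary fields are hypothetical choices.
"""
from __future__ import annotations

import json
import statistics


def summarize(samples: list[float], window: int) -> list[dict]:
    """Collapse each run of `window` consecutive samples into min/mean/max."""
    summaries = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        summaries.append({
            "min": min(chunk),
            "mean": round(statistics.fmean(chunk), 3),
            "max": max(chunk),
            "count": len(chunk),
        })
    return summaries


if __name__ == "__main__":
    # One hour of hypothetical 1 Hz readings.
    raw = [20.0 + (i % 60) / 100 for i in range(3600)]
    per_minute = summarize(raw, window=60)

    raw_bytes = len(json.dumps(raw).encode())
    summary_bytes = len(json.dumps(per_minute).encode())
    print(f"raw: {raw_bytes} B, summaries: {summary_bytes} B, "
          f"about {raw_bytes / summary_bytes:.0f}x smaller")
```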

Several platforms implement edge computing securely while letting you manage the workloads from the cloud. Azure IoT Edge is a good example.

Prioritizing data-flows

In addition to reducing the traffic volume, we also need a way to prioritize data flows and control latency.

Some edge computing platforms include a mechanism for assigning priorities to the data flows they process. A good example is the “Priority Queues” mechanism implemented in IoT Edge v1.0.10 and announced in this blog post. Each stream gets a dedicated queue with its own time-to-live and priority settings. This can be used, for instance, to ensure that an alarm is delivered before telemetry messages already waiting in the queue.
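The ordering rules behind priority queues can be shown with a small in-memory model. Each message carries a priority (a lower number means higher priority, as with the 0 to 9 route priorities in IoT Edge) and a time-to-live. The sender always takes the highest-priority unexpired message, in FIFO order within a priority. This is a conceptual sketch, not edgeHub's implementation. In IoT Edge the settings are declared per route (the `priority` and `timeToLiveSecs` route properties) rather than written in code. The priority values and TTLs used here are hypothetical.

```python
"""Conceptual model of priority queues with time-to-live.

Not the IoT Edge implementation: it only illustrates the ordering rules
(lower number = higher priority, FIFO within a priority, expired messages
dropped). Priorities, TTLs, and payloads below are hypothetical.
"""
from __future__ import annotations

import heapq
import itertools
from dataclasses import dataclass, field


@dataclass(order=True)
class _Entry:
    priority: int
    seq: int                                  # tie-breaker: FIFO within a priority
    expires_at: float = field(compare=False)
    payload: str = field(compare=False)


class PriorityOutbox:
    def __init__(self) -> None:
        self._heap: list[_Entry] = []
        self._seq = itertools.count()

    def put(self, payload: str, priority: int, ttl_s: float, now: float) -> None:
        entry = _Entry(priority, next(self._seq), now + ttl_s, payload)
        heapq.heappush(self._heap, entry)

    def get(self, now: float) -> str | None:
        """Pop the next message to send, discarding any that have expired."""
        while self._heap:
            entry = heapq.heappop(self._heap)
            if entry.expires_at > now:
                return entry.payload
        return None


if __name__ == "__main__":
    outbox = PriorityOutbox()
    outbox.put("telemetry #1", priority=5, ttl_s=3600, now=0.0)
    outbox.put("telemetry #2", priority=5, ttl_s=3600, now=0.0)
    outbox.put("ALARM: pump failure", priority=0, ttl_s=60, now=0.0)
    print([outbox.get(now=0.0) for _ in range(3)])
```

Running it prints `['ALARM: pump failure', 'telemetry #1', 'telemetry #2']`. The alarm overtakes telemetry that was queued before it.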

However, this mechanism does not apply to bulk uploads of raw data or to any other data flow outside the scope of edge computing (such as OS/software updates, container image pulls, …). Prioritizing those flows requires a different approach.

A simple but effective solution is to cap the bandwidth those flows may use. This prevents an OS update or a file upload from saturating the link and delaying, or even starving, other streams such as telemetry and alarms.

Bandwidth Shaping via Linux Traffic Control

On a Linux system, the kernel’s traffic control subsystem is an effective and versatile way to shape any TCP/IP data flow.

This subsystem includes a packet scheduler and configurable “queueing disciplines” (qdiscs), which allow for:

- limiting the bandwidth (with advanced filtering on networks, hosts, ports, directions, …)

- simulating delays, packet loss, corruption, and duplicates (useful for investigating how a connection’s constraints affect performance and reliability)
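For the emulation use case, the kernel provides the netem queueing discipline. The Python sketch below assembles a tc/netem command that approximates a high-latency, lossy, satellite-like link, so its effect on workloads can be tested before deployment. The interface name, delay, jitter, loss, corruption, and rate values are hypothetical placeholders, not measurements of a real link. Actually running the command (`dry_run=False`) requires root privileges.

```python
"""Build, and optionally run, a tc/netem command that emulates a poor link.

All values are hypothetical placeholders. Running the command needs root
(or CAP_NET_ADMIN) and the iproute2 package.
"""
from __future__ import annotations

import shlex
import subprocess


def netem_command(dev: str, delay_ms: int, jitter_ms: int = 0,
                  loss_pct: float = 0, corrupt_pct: float = 0,
                  duplicate_pct: float = 0, rate: str | None = None) -> list[str]:
    # "replace" creates the root qdisc, or swaps out an existing one.
    cmd = ["tc", "qdisc", "replace", "dev", dev, "root", "netem",
           "delay", f"{delay_ms}ms"]
    if jitter_ms:
        cmd.append(f"{jitter_ms}ms")          # jitter directly follows the delay
    if loss_pct:
        cmd += ["loss", f"{loss_pct}%"]
    if corrupt_pct:
        cmd += ["corrupt", f"{corrupt_pct}%"]
    if duplicate_pct:
        cmd += ["duplicate", f"{duplicate_pct}%"]
    if rate:
        cmd += ["rate", rate]                 # e.g. "256kbit"
    return cmd


def apply(cmd: list[str], dry_run: bool = True) -> None:
    """Print the command; execute it only when dry_run is False."""
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical satellite-like profile on eth0.
    apply(netem_command("eth0", delay_ms=600, jitter_ms=50, loss_pct=1,
                        corrupt_pct=0.1, rate="256kbit"))
```

The generated command is `tc qdisc replace dev eth0 root netem delay 600ms 50ms loss 1% corrupt 0.1% rate 256kbit`. Running `tc qdisc del dev eth0 root` removes the emulation again.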

The traffic control utility, aka ‘tc’, is a command-line tool that gives you full access to the kernel packet scheduler. It comes pre-installed on many distributions (e.g., Ubuntu) as part of the iproute2 package, and is easy to install if missing. On Debian, for instance:

```shell
sudo apt-get install iproute2
```

You can start experimenting with tc from a shell, creating and applying rules that limit the bandwidth of a service or simulate packet loss, corruption, and network delays. For instance, the following command limits the egress bandwidth of the network interface ‘eth0’ to 1 Mbit/s:

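```shell
# tbf needs "latency" (or "limit"); without one of them tc rejects the command
sudo tc qdisc add dev eth0 root tbf rate 1mbit burst 32kbit latency 400ms
```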
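The tbf (token bucket filter) qdisc shapes traffic with tokens. Tokens accumulate at `rate`, at most `burst` worth can be stored, and a packet leaves only when enough tokens are available. Short bursts therefore pass at line speed while the long-run average stays at `rate`. The Python model below illustrates this under simplifying assumptions: all packets are already queued at time zero, the bucket starts full, the queue is unbounded, and sizes are in bytes. It is not the kernel implementation.

```python
"""Simplified token-bucket model of tbf's `rate` and `burst`.

Assumptions: all packets are queued at t=0, the bucket starts full, and the
queue is unbounded (real tbf drops packets that would wait longer than its
`latency`/`limit` allows). Sizes are in bytes, rates in bits per second.
"""
from __future__ import annotations


def departure_times(packet_sizes: list[int], rate_bps: float,
                    burst_bytes: int) -> list[float]:
    """Return the time (s) at which each packet leaves the shaper."""
    bytes_per_s = rate_bps / 8        # token refill speed
    tokens = float(burst_bytes)       # bucket starts full
    t = 0.0
    times = []
    for size in packet_sizes:
        if size > burst_bytes:
            raise ValueError("a packet larger than the bucket can never be sent")
        if tokens < size:
            # Wait until the bucket has refilled enough for this packet.
            t += (size - tokens) / bytes_per_s
            tokens = float(size)
        tokens -= size
        times.append(t)
    return times


if __name__ == "__main__":
    # 1 Mbit/s, 4000-byte bucket, six back-to-back 1500-byte packets.
    for i, t in enumerate(departure_times([1500] * 6, 1_000_000, 4000)):
        print(f"packet {i}: {t * 1000:.1f} ms")
```

The example sends six 1500-byte packets at 1 Mbit/s with a 4000-byte bucket, which is of the same order as a 32 kbit burst. The first two packets leave at 0 ms from the stored burst and the third at 4 ms. The rest follow 12 ms apart, exactly the time 1500 bytes take at 1 Mbit/s.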

You can find more examples here.
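A tbf qdisc on the root of `eth0` caps all egress traffic. To cap only a bulk flow while leaving telemetry and alarms unrestricted, a common tc pattern combines three pieces:

- an HTB (hierarchy token bucket) root qdisc with an unrestricted default class
- a separate rate-limited class
- a u32 filter that steers matching packets into the limited class

The Python sketch below generates those commands. The interface, the 50 kbit cap, and the default rate are hypothetical. So is the assumption that bulk uploads can be recognized by destination port (and optionally address). The sketch is not taken from the traffic-control-edge-module.

```python
"""Generate tc commands that cap one class of egress traffic with HTB.

Hypothetical setup: bulk uploads go to a known destination port (and
optionally address); all other traffic stays in the uncapped default class.
"""
from __future__ import annotations

import shlex
import subprocess


def htb_cap_commands(dev: str, cap: str, dst_port: int,
                     dst_ip: str | None = None,
                     default_rate: str = "100mbit") -> list[list[str]]:
    cmds = [
        # Root HTB qdisc; unclassified packets fall into class 1:10.
        ["tc", "qdisc", "add", "dev", dev, "root", "handle", "1:",
         "htb", "default", "10"],
        # Default class for telemetry, alarms, and everything else.
        ["tc", "class", "add", "dev", dev, "parent", "1:", "classid", "1:10",
         "htb", "rate", default_rate],
        # Capped class: ceil equal to rate, so it never exceeds `cap`.
        ["tc", "class", "add", "dev", dev, "parent", "1:", "classid", "1:20",
         "htb", "rate", cap, "ceil", cap],
    ]
    # u32 filter: match the destination port (and address, if given).
    match = ["match", "ip", "dport", str(dst_port), "0xffff"]
    if dst_ip:
        match += ["match", "ip", "dst", dst_ip]
    cmds.append(["tc", "filter", "add", "dev", dev, "protocol", "ip",
                 "parent", "1:", "prio", "1", "u32", *match, "flowid", "1:20"])
    return cmds


def run(cmds: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute them only when dry_run is False."""
    for cmd in cmds:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical: cap uploads to a documentation-range address at 50 kbit/s.
    run(htb_cap_commands("eth0", "50kbit", 443, dst_ip="203.0.113.10/32"))
```

The commands use `add`, so applying them a second time makes tc report an error. Remove the existing setup first with `tc qdisc del dev eth0 root`. Matching on the destination rather than on a container's source address is deliberate. With Docker's default bridge networking, outbound container traffic is source-NATed before it reaches the egress qdisc on `eth0`.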

You can also try tc-config, a tc command wrapper written in Python with a simpler, more intuitive syntax and set of parameters.

On a single Linux device you could SSH in and configure tc from the command line, but that approach does not scale when you manage many devices in production. If you use Azure IoT Edge for edge computing, we have created an open-source module to help. It lets you configure traffic control through the module twin, just as you would configure any other IoT Edge module.

The traffic-control-edge-module (github here) is a sample IoT Edge module. It wraps the tc-config command-line tool to apply bandwidth limits and traffic shaping on an IoT Edge device, configured through module twins.

Here’s an example showing how you would apply a 50 Kbps bandwidth limit to the data flow associated with a bulk upload originating from the edge Blob Storage module named “myBlobStorage” running on the IoT Edge device:

The module also implements simple logic that listens for container lifecycle events. It detects when a container has been (re)started and (re)applies the related rules, if any.
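Here is a rough Python sketch of that reapply-on-restart logic, assuming the `docker` CLI is available on the device. `docker events` streams container lifecycle events as JSON. Whenever a container listed in a rule table starts, the sketch runs that container's tc commands again. The `RULES` table, its placeholder command, and the `--watch` switch are hypothetical. The actual module may implement this differently (for instance, through the Docker Engine API).

```python
"""Re-apply tc rules whenever a container (re)starts.

Hypothetical sketch: RULES, its placeholder command, and the --watch switch
are illustrative; the real traffic-control-edge-module may differ.
"""
from __future__ import annotations

import json
import subprocess
import sys
from collections.abc import Callable, Iterable

# Hypothetical rule table: container name -> tc commands to (re)apply.
RULES: dict[str, list[list[str]]] = {
    "myBlobStorage": [
        # Placeholder rule; replace with the commands your flow actually needs.
        ["tc", "qdisc", "replace", "dev", "eth0", "root",
         "tbf", "rate", "50kbit", "burst", "32kbit", "latency", "400ms"],
    ],
}


def started_container(event_line: str) -> str | None:
    """Return the container name if the JSON event is a container start."""
    event = json.loads(event_line)
    if event.get("Type") == "container" and event.get("Action") == "start":
        return event.get("Actor", {}).get("Attributes", {}).get("name")
    return None


def watch(lines: Iterable[str],
          apply_rules: Callable[[list[list[str]]], None]) -> None:
    """Call `apply_rules` for every start event of a container in RULES."""
    for line in lines:
        name = started_container(line)
        if name in RULES:
            apply_rules(RULES[name])


def run_commands(cmds: list[list[str]]) -> None:
    # check=False: a failing rule is reported by tc but does not stop the watcher.
    for cmd in cmds:
        subprocess.run(cmd, check=False)


if __name__ == "__main__" and "--watch" in sys.argv:
    events = subprocess.Popen(
        ["docker", "events", "--filter", "type=container",
         "--filter", "event=start", "--format", "{{json .}}"],
        stdout=subprocess.PIPE, text=True)
    watch(events.stdout, run_commands)
```

Run it with `--watch` on the device; the loop blocks until `docker events` exits.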

Check out the repository for the traffic-control-edge-module (github here), and let us know what you think, file issues, and contribute.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.
