This article is contributed. See the original author and article here.

This post was authored by @Pawel Partyka from the Office 365 ATP Customer Experience Engineering team, and Vipul Pandey from the Office 365 ATP PM team.

1 – Introduction

Office 365 Advanced Threat Protection provides several built-in security alerts to help customers detect and mitigate potential threats. In many large enterprises there is often a need to integrate these alerts with a SIEM platform or other case management tools to enable a Security Operations Center (SOC) team to monitor the alerts. The Office 365 Management Activity API provides these SOC teams the ability to integrate O365 ATP alerts with other platforms.

One of the challenges that organizations often face, particularly large enterprises, is the ever-increasing volume of alerts that the SOC needs to monitor. As a result, it is often important to onboard only specific alerts to the monitoring and case management platforms or SIEM.

Let’s take an integration scenario that we worked on recently. As part of an effort to deal with phishing-related threats, one of our large enterprise customers wanted to fetch and integrate “user-reported phishing alerts”. However, they wanted their SOC to receive only those alerts that had already been processed by an Automated Investigation and Response (AIR) playbook, to reduce false positives and focus on real threats.

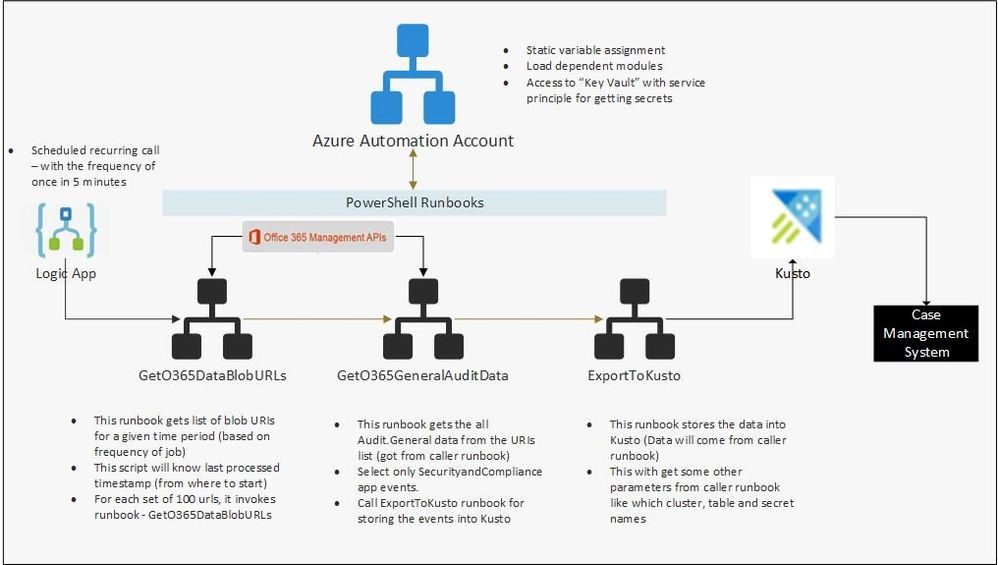

Our engineering team built a solution that efficiently fetches only the relevant alerts using the Office 365 Management Activity API and integrates them with the SIEM and case management platform. The solution and its reference architecture are described below. The same approach could be used to fetch and integrate other relevant alerts from Office 365 ATP.

2 – Azure Components

We used Azure to set up end-to-end infrastructure for retrieving O365 audit events and storing the required filtered data for near real-time security monitoring and historical analysis. After evaluating various combinations of Azure services, we decided to use the following Azure components.

- Azure Data Explorer (Kusto) – For final storage, with near real-time querying capabilities for data analysis.

- Azure blob containers – As a staging area and for data archival.

- Azure automation account – For deploying and automating the PowerShell scripts we used to fetch audit data using the Office 365 Management API.

- Azure Logic app – For scheduling and calling the PowerShell runbooks.

- Azure Key Vault – For storing the secrets required for accessing the Management API.

The architecture diagram below depicts the end-to-end setup.

3 – Setting up the Azure Active Directory application

To access audit data from the O365 Management Activity API, we’ll configure access through an Azure Active Directory (AAD) application. Create an AAD application using the steps below, and have a tenant admin grant consent to it. Once you have access, note the application’s client ID and client secret, because we will need them later.
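With a client ID and secret, a script obtains an access token through the OAuth 2.0 client credentials grant before calling the Management Activity API. The runbooks do this in PowerShell; the Python sketch below only illustrates the shape of that request. It assumes the Azure AD v1.0 token endpoint (`https://login.microsoftonline.com/<tenant>/oauth2/token`) and the resource `https://manage.office.com`, which are presumably what the MicrosoftLoginURL and O365ResourceUrl variables in chapter 12 hold; the function names are hypothetical.

```python
import json
import urllib.request
from urllib.parse import urlencode

# Assumed values: the Azure AD v1.0 token endpoint host and the Office 365
# Management Activity API resource. In this solution they would presumably
# come from the MicrosoftLoginURL and O365ResourceUrl variables.
LOGIN_URL = "https://login.microsoftonline.com"
RESOURCE_URL = "https://manage.office.com"


def build_token_request(tenant_id: str, client_id: str, client_secret: str,
                        login_url: str = LOGIN_URL,
                        resource: str = RESOURCE_URL) -> tuple:
    """Return (url, form_body) for an OAuth 2.0 client credentials grant."""
    url = f"{login_url.rstrip('/')}/{tenant_id}/oauth2/token"
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "resource": resource,
    })
    return url, body


def fetch_access_token(tenant_id: str, client_id: str, client_secret: str) -> str:
    """POST the token request and return the bearer token (needs network access)."""
    url, body = build_token_request(tenant_id, client_id, client_secret)
    request = urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        # The JSON response carries the token in "access_token".
        return json.load(response)["access_token"]
```

The returned token is sent as an `Authorization: Bearer <token>` header on every Management Activity API call.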

3.1 – Azure AD app registration

- Navigate to the Azure AD admin portal and select “App registrations”.

- Click “New registration”.

- Enter a name for your app (for example “Management Activity API”). Leave the “Accounts in this organizational directory only” option selected.

- Select “Web” and click “Register”.

Figure 2: Registering an Azure AD application

- Click “API permissions” in the left navigation menu.

- Click “Add a permission” and then “Office 365 Management APIs”.

Figure 3: Requesting API permissions

- Click “Application permissions”. Expand “ActivityFeed” and select “ActivityFeed.Read”, expand “ServiceHealth” and select “ServiceHealth.Read”.

Figure 4: Configuration of API permissions

- Click “Add permissions”.

- Refresh the list of permissions. Click “Grant admin consent for <your organization’s name>”. Click Yes.

- On the App screen click “Overview” and copy “Application (client) ID” to the clipboard. You will need this later when configuring the ClientIDtoAccessO365 Automation Account variable value.

- Click “Certificates & secrets”. Click “New client secret”. Assign a name to the secret. Click Add.

Figure 5: Certificates & secrets

- After the secret is generated, copy it to the clipboard by clicking the “copy” icon next to the secret value. The value is displayed only once, so copy it before leaving the page. You will need it later when configuring the ClientSecrettoAccessO365 Automation Account variable value.

Figure 6: Client secrets

4 – Set up the storage account

We need a storage account to serve as a staging area. Data can also be retained there longer term for historical analysis. Below is the step-by-step process for setting up the storage account.

- Note: Use the same “Resource Group Name” for all the resources we are setting up, so that we can track all the resources easily.

- Make a note of the name of the blob storage account and the name of the container, because we will need to assign them to variables in the Automation Account.

After the container is created, it appears in the “Containers” section of the storage account.

Steps to create the storage account

- Navigate to “Storage account” service in the Azure portal and select “Add new” to create a new storage account.

Figure 7: Storage accounts

Figure 8: Create storage account

- Once the storage account is created, click “Containers”.

Figure 9: Create a container

- Click “Container” to add a new container.

- Provide a name for the container. Make sure that the “Private” access level is selected.

- For more details, refer to these steps to create a blob storage account.

5 – Create a file share in the storage account

We will use an Azure file share to store the .dll files that the runbooks need to ingest data into Azure Data Explorer. Follow the steps below to create the file share. It can be created in the same storage account that we created in the previous step.

- Navigate to the storage account created above and select “File shares”.

Figure 10: File shares

- Click “File share” to create a new file share. Provide a name for the file share and allocate a 1 GB quota.

Figure 11: Creating a file share

- Note the storage account and file share names; you will need them when configuring the Automation Account.

6 – Setting up Azure Data Explorer (Kusto) Data store

Create an Azure Data Explorer cluster and database by following this step-by-step guide or the steps given below. Once the cluster is created, note the cluster and database names; you will need them when entering the Azure Automation Account variables.

To write data into Azure Data Explorer, we will use “Service Principal / AAD application” access (authenticating with the service principal secret). The service principal needs “Admin” permissions during the first run of the script, because the script creates a table in the Kusto database. After the table is successfully created, its permissions can be reduced to “Ingestor”.
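The permission change can also be made with Kusto management commands in the cluster's query window instead of the portal. The Python sketch below only builds the command text, assuming the documented `.add database <db> <role> ('aadapp=<app id>;<tenant>')` and `.drop database ...` forms; the helper names, the database name `AuditDB` used later, and the decision to reject names that would need bracket quoting are illustrative assumptions.

```python
import re

# Database-level roles accepted by ".add/.drop database <db> <role> (...)".
DATABASE_ROLES = {"admins", "users", "viewers", "unrestrictedviewers", "ingestors", "monitors"}
# Plain identifiers only; names needing ['...'] quoting are rejected to keep this simple.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def _principal(app_id: str, tenant: str) -> str:
    """AAD application principal in Kusto's 'aadapp=<app id>;<tenant>' form."""
    return f"'aadapp={app_id};{tenant}'"


def _check(database: str, role: str) -> None:
    if not _IDENTIFIER.match(database):
        raise ValueError(f"database name would need quoting: {database!r}")
    if role not in DATABASE_ROLES:
        raise ValueError(f"unknown database role: {role!r}")


def add_principal_command(database: str, role: str, app_id: str,
                          tenant: str, note: str = "") -> str:
    """Command granting `role` on `database` to an AAD application."""
    _check(database, role)
    if "'" in note:
        raise ValueError("note must not contain single quotes")
    command = f".add database {database} {role} ({_principal(app_id, tenant)})"
    return f"{command} '{note}'" if note else command


def drop_principal_command(database: str, role: str, app_id: str, tenant: str) -> str:
    """Command removing `role` on `database` from an AAD application."""
    _check(database, role)
    return f".drop database {database} {role} ({_principal(app_id, tenant)})"
```

Running the `ingestors` grant before dropping `admins` keeps the service principal from losing access in between.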

- Log in to the Azure portal. Search for the service “Azure Data Explorer”. Click “Add” to create a new cluster.

Figure 12: Azure Data Explorer Clusters

- Provide the required details and create the cluster.

Figure 13: Create an Azure Data Explorer Cluster

- Once we have the Azure Data Explorer Cluster created, we need to create a database under this cluster. Navigate to the cluster we have created and click “Add database”.

Figure 14: Create new database

6.1 – Add Azure Active Directory app / Service principal for write access in Azure Data Explorer

- Navigate to Azure Data Explorer Clusters.

- Open the cluster that you created in the previous step.

- Open the database created under the cluster. Click “Permissions”.

Figure 15: Database Permissions

- Click “Add”. Make sure the “Admin” role is selected. Click “Select principals”. In the “New principals” fly-out, paste the Application ID of the Automation Account’s Run As account (the Automation Account is created in chapter 8, so you may need to complete that chapter first). Select the matching entity, click “Select”, and then click “Save”.

Figure 16: Add Permissions

- To find the Azure Run As Account Application ID follow these steps: On the Automation Accounts page, select your Automation account from the list. In the left pane, select Run As Accounts in the account settings section. Click Azure Run As Account. Copy the Application ID.

Figure 17: AzureRunAs account

7 – Upload Kusto library to file share

We need to upload the Kusto client libraries to the file share in the Azure storage account.

- Download the Microsoft.Azure.Kusto.Tools NuGet package from https://www.nuget.org/packages/Microsoft.Azure.Kusto.Tools/

- The downloaded .nupkg file is a ZIP archive. Extract the files from the package’s “tools” folder to any folder on your computer.

- In the Azure portal, navigate to the file share created in chapter 5 and open it.

Figure 18: File Shares

- Click “Add directory”. Enter a name for the directory: “Microsoft.Azure.Kusto.Tools”

- Open the newly created directory and click “Upload”. Select all the files extracted from the package’s “tools” folder in step 2 and upload them. After the upload completes, you should see the following list of files in the folder:

Figure 19: Uploaded files
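Because a .nupkg file is an ordinary ZIP archive, the extraction in step 2 can be scripted. The Python sketch below uses only the standard `zipfile` module; the function name is hypothetical, and it assumes you want the contents of `tools/` copied with their subfolder layout preserved (some package versions may nest files in framework-specific subfolders under `tools/`).

```python
import shutil
import zipfile
from pathlib import Path


def extract_tools_folder(nupkg, dest_dir) -> list:
    """Copy every file under 'tools/' in a .nupkg (ZIP) archive into dest_dir.

    `nupkg` may be a path or a binary file object. Returns the extracted paths
    relative to dest_dir, using forward slashes as stored in the archive.
    """
    dest = Path(dest_dir).resolve()
    extracted = []
    with zipfile.ZipFile(nupkg) as archive:
        for info in archive.infolist():
            if info.is_dir() or not info.filename.startswith("tools/"):
                continue
            relative = info.filename[len("tools/"):]
            target = (dest / relative).resolve()
            # Refuse entries that would escape the destination folder.
            if not target.is_relative_to(dest):
                raise ValueError(f"unsafe path in archive: {info.filename!r}")
            target.parent.mkdir(parents=True, exist_ok=True)
            with archive.open(info) as source, open(target, "wb") as output:
                shutil.copyfileobj(source, output)
            extracted.append(relative)
    return extracted
```

The files it writes are the ones to upload into the “Microsoft.Azure.Kusto.Tools” directory.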

8 – Set up the Automation Account

- In the Azure portal, navigate to the Automation Accounts service and create a new account.

Figure 20: Automation Accounts

- Provide an Automation Account name, Azure subscription, and Resource group.

Figure 21: Add Automation Account

- After the account is created, it appears in the Azure portal.

Figure 22: Created Automation account

- For more details, refer to this document on creating an Automation Account.

9 – Set up Azure Key Vault and store secrets

Set up an Azure Key Vault and store the following secrets in it. The PowerShell runbooks refer to the Key Vault name and to these secret names, so use the names exactly as listed.

- StorageAccountforBlobstorageAccessKey

- StorageAccountforFileShareAccessKey

- ClientSecrettoAccessO365

- KustoAccessAppKey

Make sure that the Automation Account’s Run As account can read secrets from the Key Vault (“Get” and “List” secret permissions).

- In the portal, navigate to the Key Vault, select “Access policies”, and add a new access policy.

Figure 23: Key Vault Access Policies

- Select “Get” and “List” secret permissions.

Figure 24: Add Access policy

- In the “Select principal” field, enter the name of your Azure Automation Account’s Run As account. To find it, navigate to your Automation Accounts. On the Automation Accounts page, select your Automation account from the list. In the left pane, select Run As Accounts in the account settings section. Click Azure Run As Account.

Figure 25: Azure Run As Account

10 – Import dependent modules into the Automation Account

- We require the following modules. If they are not available, we need to import them into the Automation Account.

- For importing a new module, navigate to automation account and select “Modules” from the menu.

Figure 26: Modules

- Select “Browse gallery” and search for the required modules.

Figure 27: Browse Gallery

- Click “Import” and then “OK” to import the module into the Automation Account.

Figure 28: Import module

- Repeat the same steps for all required modules.

11 – Adding Runbooks in automation account (PowerShell Runbooks)

We have split our PowerShell scripts into three runbooks to achieve the following:

- Segregation of duties

- Parallelism

- Plug-and-play capability
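The segregation-of-duties and parallelism goals above can be pictured as a fan-out: one stage lists the content blob URLs, and each URL is then fetched and exported independently. The Python sketch below is a local analogy only, not the runbooks’ code. The three callables are hypothetical stand-ins for GetO365DataBlobURLs, GetO365GeneralAuditData and ExporttoKusto_O365AuditGeneralData, and a thread pool stands in for the separate runbook jobs the Automation Account would start.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


def run_pipeline(list_uris: Callable[[], Iterable[str]],
                 fetch_records: Callable[[str], List[dict]],
                 export_records: Callable[[List[dict]], int],
                 max_workers: int = 4) -> int:
    """List content URIs once, then fetch and export each URI in parallel.

    Each stage only talks to the next through its inputs and outputs, so a
    stage can be swapped out (plug-and-play) without touching the others.
    Returns the total number of records reported as exported.
    """
    uris = list(list_uris())
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # One unit of work per URI, like one child runbook job per blob URL.
        exported_counts = pool.map(lambda uri: export_records(fetch_records(uri)), uris)
        return sum(exported_counts)
```

For example, `run_pipeline(lambda: ["u1", "u2"], lambda uri: [{"uri": uri}], len)` treats each URI as yielding one record and returns 2.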

Deploy the three runbooks into the Automation Account by following the steps below.

- Navigate to the Automation account we have created above and select “Runbooks”.

Figure 29: Runbooks

- Download the zip file from here. Once you unzip it, you will find the three PowerShell scripts listed in Table 1.

- Click “Import a runbook”, choose a script file from your local system, and set the runbook type to “PowerShell”.

- Import all three scripts. Name each runbook exactly as specified in Table 1; otherwise, the scripts may fail to run.

Table 1: Runbook scripts

| Name of the runbook (provide exactly as below while importing) | Type of runbook | PowerShell script to import |
|---|---|---|
| GetO365DataBlobURLs | PowerShell | GetO365DataBlobURLs.ps1 |
| GetO365GeneralAuditData | PowerShell | GetO365GeneralAuditData.ps1 |
| ExporttoKusto_O365AuditGeneralData | PowerShell | ExporttoKusto_O365AuditGeneralData.ps1 |
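Judging by its name, GetO365DataBlobURLs retrieves the list of content blob URLs from the Management Activity API. The Python sketch below shows how such list-content requests are shaped. It assumes the `Audit.General` content type (which the runbook names suggest) and the API's documented rules: a subscription is started once per content type, `startTime` and `endTime` may be at most 24 hours apart, and `startTime` may be at most 7 days in the past. The function names are hypothetical, and the sketch is not the runbook's actual logic.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional
from urllib.parse import urlencode

API_ROOT = "https://manage.office.com/api/v1.0"
MAX_WINDOW = timedelta(hours=24)   # startTime and endTime at most 24 hours apart
MAX_LOOKBACK = timedelta(days=7)   # startTime at most 7 days in the past
TIME_FORMAT = "%Y-%m-%dT%H:%M:%S"


def subscription_start_url(tenant_id: str, content_type: str = "Audit.General") -> str:
    """URL to POST once per content type to start the subscription."""
    query = urlencode({"contentType": content_type})
    return f"{API_ROOT}/{tenant_id}/activity/feed/subscriptions/start?{query}"


def content_list_urls(tenant_id: str, start: datetime, end: datetime,
                      content_type: str = "Audit.General",
                      now: Optional[datetime] = None) -> List[str]:
    """Split [start, end) into windows of at most 24 hours, one list-content URL each.

    start, end and now must be timezone-aware datetimes; times are sent in UTC.
    """
    now = now or datetime.now(timezone.utc)
    if end <= start:
        raise ValueError("end must be after start")
    if start < now - MAX_LOOKBACK:
        raise ValueError("start is more than 7 days in the past")
    urls = []
    cursor = start
    while cursor < end:
        window_end = min(cursor + MAX_WINDOW, end)
        query = urlencode({
            "contentType": content_type,
            "startTime": cursor.astimezone(timezone.utc).strftime(TIME_FORMAT),
            "endTime": window_end.astimezone(timezone.utc).strftime(TIME_FORMAT),
        })
        urls.append(f"{API_ROOT}/{tenant_id}/activity/feed/subscriptions/content?{query}")
        cursor = window_end
    return urls
```

Each GET on these URLs returns a JSON array of content blobs whose `contentUri` values point to the actual audit records. If a response carries a `NextPageUri` header, that URL must be requested as well to get the remaining blobs.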

12 – Adding variables in the automation account

- Navigate to the Automation Account and select “Variables” from the menu.

Figure 30: Variables

- The following table lists the variables to be added. Add each variable by selecting “Add a variable”. For secrets, set “Encrypted” to “Yes”.

Table 2: Variables

| Variable name | Data type | Value description |
|---|---|---|
| AutomationAccountName | String | Name of the Automation Account created in chapter 8 |
| AutomationAccountResourceGroupName | String | Name of the resource group in which the Automation Account was created |
| BlobStorageContainerName | String | Name of the container created in chapter 4 |
| ClientIDtoAccessO365 | String | Application (client) ID of the Azure AD application created in chapter 3.1 |
| ClientSecrettoAccessO365 | String (encrypted or from Key Vault) | Client secret of the Azure AD application created in chapter 3.1 |
| FileShareNameinStorageAccount | String | Name of the file share created in chapter 5 |
| KeyVaultName | String | Name of the Azure Key Vault created in chapter 9 |
| KustoAccessAppId | String | Azure Run As Account application ID. Steps to find it are described in chapter 12.1 |
| KustoAccessAppKey (optional) | String (encrypted or from Key Vault) | Not required if the key is stored in Azure Key Vault as KustoAccessAppKey |
| KustoClusterName | String | Name of the Azure Data Explorer cluster created in chapter 6 |
| KustoDatabaseName | String | Name of the Azure Data Explorer database created in chapter 6 |
| KustoIngestionURI | String | |
| KustoTableName | String | Name of the Azure Data Explorer table that the PowerShell script will create |
| MicrosoftLoginURL | String | |
| O365ResourceUrl | String | |
| O365TenantDomain | String | Default domain name of the tenant |
| O365TenantGUID | String | ID of the Office 365 tenant from which alerts and investigations will be pulled. Follow this article to locate the tenant ID: https://docs.microsoft.com/en-us/onedrive/find-your-office-365-tenant-id |
| PathforKustoExportDlls | String | Name of the folder created in the file share in chapter 7 |
| RunbookNameforExportDatatoKusto | String | ExporttoKusto_O365AuditGeneralData |
| RunbookNameforGetAuditDataBlobURIs | String | GetO365DataBlobURLs |
| RunbookNameforGetAuditDataFromURIs | String | GetO365GeneralAuditData |
| StorageAccountforBlobstorage | String | Name of the storage account created in chapter 4 |
| StorageAccountforBlobstorageAccessKey (optional) | String (encrypted or from Key Vault) | Not required if the access key is stored in Azure Key Vault as StorageAccountforBlobstorageAccessKey (default configuration) |
| StorageAccountforFileShare | String | Name of the storage account created in chapter 4 |
| StorageAccountforFileShareAccessKey (optional) | String (encrypted or from Key Vault) | Not required if the access key is stored in Azure Key Vault as StorageAccountforFileShareAccessKey (default configuration) |
| TenantIdforKustoAccessApp | String | ID of the Azure AD tenant where the Azure Run As Account is provisioned. Follow this article to locate the tenant ID: https://docs.microsoft.com/en-us/onedrive/find-your-office-365-tenant-id |
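The “optional” rows imply a lookup rule: a secret may come either from Key Vault (the default configuration) or from an encrypted Automation variable. The Python sketch below shows one way to express that rule, plus a pre-flight check for unset variables. It assumes Key Vault takes precedence when both are set; the runbooks may resolve this differently. The plain dictionaries are hypothetical stand-ins for the Automation variables and Key Vault secrets, and the function names are also hypothetical.

```python
from typing import Iterable, List, Mapping

# Secret names from chapter 9 that may live in Key Vault instead of an
# Automation variable.
KEY_VAULT_SECRETS = frozenset({
    "ClientSecrettoAccessO365",
    "KustoAccessAppKey",
    "StorageAccountforBlobstorageAccessKey",
    "StorageAccountforFileShareAccessKey",
})


def resolve_setting(name: str, automation_vars: Mapping[str, str],
                    key_vault: Mapping[str, str]) -> str:
    """Return a setting's value, preferring Key Vault for known secrets (assumption)."""
    if name in KEY_VAULT_SECRETS and key_vault.get(name):
        return key_vault[name]
    value = automation_vars.get(name)
    if value:
        return value
    raise KeyError(f"setting {name!r} is not configured")


def missing_settings(required: Iterable[str], automation_vars: Mapping[str, str],
                     key_vault: Mapping[str, str]) -> List[str]:
    """List the required settings that neither source provides."""
    missing = []
    for name in required:
        try:
            resolve_setting(name, automation_vars, key_vault)
        except KeyError:
            missing.append(name)
    return missing
```

Running a check like `missing_settings` before the first scheduled run can surface a missing or misspelled variable name early.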

12.1 – Finding the Azure Run As Account application ID

You can find the KustoAccessAppId by navigating to your Automation Accounts. On the Automation Accounts page, select your Automation account from the list. In the left pane, select Run As Accounts in the account settings section. Click Azure Run As Account. Copy the Application ID and paste it as the KustoAccessAppId variable value.

Figure 31: Copy the Application ID

13 – Querying the data

After data is successfully imported by the scripts you can query it using KQL.

In the Azure Portal navigate to Azure Data Explorer Clusters. Click on the cluster name. Click on Query.

Figure 32: Query the data

Example query to verify that data is ingested (replace KustoAuditTable with the value of your KustoTableName variable):

```kusto
KustoAuditTable
| extend IngestionTime = ingestion_time()
| order by IngestionTime desc
| project Name, Severity, InvestigationType, InvestigationName, InvestigationId, CreationTime, StartTimeUtc, LastUpdateTimeUtc, EndTimeUtc, Operation, ResultStatus, UserKey, ObjectId, Data, Actions, Source, Comments, Status
```

Finally, here is an example of the output:

Figure 33: Data Output

14 – Final Remarks

- Use this article to create a schedule that runs the scripts periodically (for example, every hour). Only the GetO365DataBlobURLs runbook needs a schedule.

- During the first execution of the PowerShell scripts, the Kusto table is created with its entire schema. Afterwards, the permissions of the Azure Run As Account can be lowered from “Admin” to “Ingestor”.

- Data ingestion is delayed by approximately 5 minutes. Even after the script successfully completes, data may not show up immediately in the Azure Data Explorer cluster. The delay comes from the IngestFromStreamAsync method used to ingest the data, which queues it for batched ingestion rather than writing it to the table directly.

- After alerts and investigations data is ingested into the Azure Data Explorer cluster, you will notice some empty columns in the table. This is by design: these columns accommodate data from other workloads, if you want to ingest those as well.
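The first-run table creation can be illustrated with Kusto’s `.create-merge table` command, which creates a table if it does not exist and otherwise adds any columns that are missing. Whether the scripts use this exact command is not stated here, so treat the Python sketch below as an illustration only. It builds the command text from a column-to-type mapping; the helper name is hypothetical, it accepts only plain identifiers, and any schema you pass (for example, column types for Name, CreationTime or Data) is an assumption, not the scripts’ real schema.

```python
import re
from typing import Mapping

_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")
# Kusto scalar types accepted by this sketch.
_TYPES = {"bool", "datetime", "decimal", "dynamic", "guid",
          "int", "long", "real", "string", "timespan"}


def create_merge_table_command(table: str, columns: Mapping[str, str]) -> str:
    """Build '.create-merge table T (col:type, ...)' from an ordered column mapping."""
    if not columns:
        raise ValueError("at least one column is required")
    for name in [table, *columns]:
        if not _NAME.match(name):
            raise ValueError(f"name would need quoting: {name!r}")
    for name, column_type in columns.items():
        if column_type not in _TYPES:
            raise ValueError(f"unsupported type for {name}: {column_type!r}")
    column_list = ", ".join(f"{name}:{column_type}" for name, column_type in columns.items())
    return f".create-merge table {table} ({column_list})"
```

Columns reserved for other workloads simply stay empty for alert and investigation rows, which matches the empty columns described above.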

Special thanks to @Satyajit Dash, Anki Narravula, and Sidhartha Bose for their contributions.

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.
