
Authors: Lu Han, Emil Almazov, Dr Dean Mohamedally, University College London (Lead Academic Supervisor) and Lee Stott, Microsoft (Mentor)

Lu Han and Emil Almazov are the current UCL student team working on the first version of the MotionInput supporting DirectX project, in partnership with UCL and the Microsoft UCL Industry Exchange Network (UCL IXN).

Examples of MotionInput

Running on the spot

Cycling on an exercise bike

Introduction

This is a work-in-progress preview; the intent is for this solution to become an open-source, community-based project.

During COVID-19 it has become increasingly important for the general population’s wellbeing to keep active at home, especially in regions with lockdowns such as the UK. Over the years, we have all been adjusting to new ways of working and managing time, with tools like MS Teams. This is especially true for presenters, such as teachers and clinicians, who have to give audiences instructions and need to do so with regular breaks.

UCL’s MotionInput supporting DirectX is a modular framework that brings together catalogues of gesture inputs for Windows-based interactions. This preview shows several Visual Studio based modules that use a regular webcam (e.g. on a laptop) and open-source computer vision libraries to deliver low-latency input to existing DirectX-accelerated games and applications on Windows 10.

The current preview focuses on two MotionInput catalogues: gestures from at-home exercises, and desk-based gestures with in-air pen navigation. For desk-based gestures, in addition to making them operable with as many Windows-based games as possible, preliminary work has been done towards control in Windows apps such as PowerPoint, Teams and the Edge browser, focusing on the work-from-home era that users are currently in.

The key ideas behind the prototype projects are to “use the tech and tools you have already” and “keep active”, providing touchless interactive interfaces to existing Windows software with a webcam. Of course, Sony’s EyeToy and Microsoft Kinect for Xbox have done this before, and there are other dedicated applications that have gesture technologies embedded. However, many of these are no longer available or supported on the market, and they previously worked only with the dedicated software titles they were intended for. The general population’s fitness, the potential for physiotherapy and rehabilitation, and the use of motion gestures for teaching purposes are things we intend to explore with these works. We also hope the open-source community will revisit older software titles and make selections of them more “actionable” with further catalogue entries of gestures to control games and other software. Waving your outstretched arms in front of your laptop to fly in Microsoft Flight Simulator is now possible!

The key investigation is the creation of catalogues of motion-based gesture styles for interfacing with Windows 10, and potentially catalogues for different games and interaction genres for use in industries such as teaching, healthcare and manufacturing.

The team’s and project’s development roadmap includes trials at Great Ormond Street’s DRIVE unit and with several clinical teams who have expressed interest in rehabilitation and healthcare systems interaction.

Key technical advantages

- Computer vision on RGB cameras on Windows 10 based laptops and tablets is now efficient enough to replace previous depth-camera-only gestures for the specific user tasks we are examining.

- A library of gesture categories will allow many existing software titles to be controlled through gesture catalogue profiles.

- Bringing it as close as possible to the Windows 10 interface layer via DirectX, and making it as efficient as possible with multi-threaded processes, reduces latency so that gestures are responsive replacements for their assigned interaction events.

Architecture

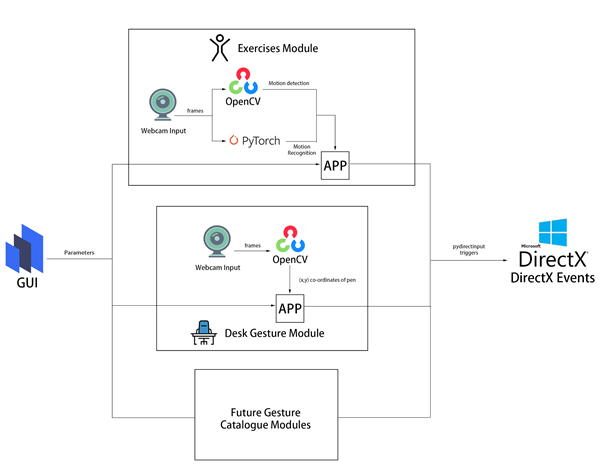

All modules are connected by a common Windows-based GUI configuration panel that exposes the parameters available to each gesture catalogue module. This allows a user to set the gesture types and customise the responses.

The Exercise module in this preview examines repetitive at-home exercises, such as running on the spot, squatting, cycling on an exercise bike, rowing on a rowing machine etc. It uses the OpenCV library to decide whether the user is moving by calculating the pixel difference between two frames.

The PyTorch exercise recognition model is responsible for checking the status of the user every 8 frames. DirectX events (e.g. a keypress of “W”, which moves forward in many PC games) are triggered via PyDirectInput’s functions only when the module decides the user is moving and the exercise they are performing is recognized as the exercise chosen in the GUI.

The Desk Gestures module tracks the x and y coordinates of the pen each frame, using the parameters from the GUI. These coordinates are then mapped to the user’s screen resolution and fed into several of PyDirectInput’s functions that trigger DirectX events, depending on whether we want to move the mouse, press keys on the keyboard or click with the mouse.
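The mapping from camera coordinates to screen coordinates can be sketched as a simple proportional scaling. This is a minimal illustration, not the project's code: the function name `map_to_screen`, the `mirror` option and the example resolutions are all assumptions made for the sketch.

```python
def map_to_screen(x, y, frame_size, screen_size, mirror=False):
    """Scale a pen position in camera pixels to a position in screen pixels.

    All names and defaults here are hypothetical, chosen for illustration.
    """
    frame_w, frame_h = frame_size
    screen_w, screen_h = screen_size
    if mirror:
        # Assumption: flip x so that moving the pen to the user's right
        # moves the cursor to the right when the camera faces the user.
        x = (frame_w - 1) - x
    # Proportional scaling from camera resolution to screen resolution.
    sx = int(x * screen_w / frame_w)
    sy = int(y * screen_h / frame_h)
    # Clamp so the result is always a valid screen coordinate.
    sx = min(max(sx, 0), screen_w - 1)
    sy = min(max(sy, 0), screen_h - 1)
    return sx, sy


# Example: the centre of a 640x480 frame lands at the centre of a 1920x1080 screen.
centre = map_to_screen(320, 240, (640, 480), (1920, 1080))
```

The resulting pair is the kind of value that would then be handed to a mouse-move call.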

Fig 1 – HSV colour range values for the blue of the pen

For pen tracking, the current challenge and limitation is having other objects with the same colour range in the camera frame. When this happens, the program detects the wrong objects and therefore produces inaccurate tracking results. The only viable solution is to make sure that no objects with a similar colour range are present in the camera view. This is usually easy to achieve and, if not, a simple green screen (or another screen of a single colour) can be used to replace the background.

In the exercises module, we use OpenCV to do motion detection. This involves subtracting the current frame from the last frame and taking the absolute value to get the pixel intensity difference. Regions of high pixel intensity difference indicate motion is taking place. We then do a contour detection to find the outlines of the region with motion detected. Fig 2 shows how it looks in the module.
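The frame-differencing idea can be illustrated without any libraries on small grayscale frames stored as nested lists. This is a sketch under stated assumptions: the threshold of 25 and the `min_pixels` cut-off are made-up values, and a plain bounding box stands in for the contour detection step. On real webcam frames, OpenCV functions such as `cv2.absdiff` and `cv2.findContours` would typically do this work.

```python
def motion_mask(prev_frame, curr_frame, threshold=25):
    """Mark pixels whose absolute intensity difference exceeds the threshold.

    The threshold value is an assumption for this sketch.
    """
    return [
        [1 if abs(curr - prev) > threshold else 0
         for prev, curr in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev_frame, curr_frame)
    ]


def is_moving(mask, min_pixels=2):
    """Treat the user as moving when enough pixels changed (hypothetical cut-off)."""
    return sum(sum(row) for row in mask) >= min_pixels


def bounding_box(mask):
    """Return (x, y, w, h) around all changed pixels, or None if nothing changed.

    A simplified stand-in for contour detection.
    """
    points = [(x, y) for y, row in enumerate(mask)
              for x, value in enumerate(row) if value]
    if not points:
        return None
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1


# Two 4x4 grayscale frames: two neighbouring pixels brighten between them.
previous = [[10] * 4 for _ in range(4)]
current = [row[:] for row in previous]
current[1][1] = 200
current[1][2] = 200

mask = motion_mask(previous, current)
```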

Technical challenges

OpenCV

In the desk gestures module, to track the pen, we had to provide an HSV (Hue, Saturation, Value) colour range to OpenCV so that it only detected the blue part of the pen. We needed to find a way to calculate this range as accurately as possible.

The solution involved running a program in which the hue, saturation and value channels of the image could be adjusted until only the blue of the pen was visible (see Fig 1). Those values were then stored in a .npy file and loaded into the main program.
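To show what applying an HSV range means, here is a library-free sketch that keeps only in-range pixels and takes their centroid as the pen position. The bounds `LOWER_BLUE` and `UPPER_BLUE` are hypothetical values, not the ones the team calibrated, and the centroid step is an assumption about how a position could be derived. The sketch uses OpenCV's 8-bit convention of hue in 0 to 179 and saturation and value in 0 to 255; with OpenCV itself, `cv2.inRange` performs the range test over a whole image.

```python
# Hypothetical calibrated bounds for "blue", as (hue, saturation, value).
LOWER_BLUE = (100, 150, 50)
UPPER_BLUE = (130, 255, 255)


def in_hsv_range(pixel, lower, upper):
    """True when every channel of the HSV pixel lies inside [lower, upper]."""
    return all(lo <= channel <= hi
               for channel, lo, hi in zip(pixel, lower, upper))


def pen_centre(hsv_frame, lower=LOWER_BLUE, upper=UPPER_BLUE):
    """Return the (x, y) centroid of in-range pixels, or None if there are none."""
    hits = [(x, y) for y, row in enumerate(hsv_frame)
            for x, pixel in enumerate(row)
            if in_hsv_range(pixel, lower, upper)]
    if not hits:
        return None
    count = len(hits)
    return (sum(x for x, _ in hits) / count,
            sum(y for _, y in hits) / count)


# A 3x3 HSV frame: pale background with two blue "pen" pixels in the middle row.
background = (0, 0, 200)
blue = (110, 200, 200)
frame = [
    [background, background, background],
    [background, blue, blue],
    [background, background, background],
]
position = pen_centre(frame)
```

This also makes the limitation concrete: any other pixel that falls inside the same bounds pulls the centroid away from the pen.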

Fig 2 – Contour of the motion detected

Multithreading

Videos captured by the webcam can be seen as a sequence of images. In the program, OpenCV keeps reading the input from the webcam frame by frame, then each frame is processed to get the data used to categorize the user into a status (exercising or not exercising in the exercise module, moving the pen in different directions in the desk gesture module). A status change then triggers different DirectX events.

Initially, we tried to check the status of the user every time new data was ready. This proved impractical: most webcams provide a frame rate of 30 frames per second, which means the data processing is performed 30 times every second. Checking the status of the user and triggering DirectX events at this rate caused the program to run slowly.

The solution to this problem is multithreading, which allows multiple tasks to be executed at the same time. In our program, the main thread handles the work of reading input from the webcam and data processing, and the status check is executed every 0.1 seconds in another thread, that is, 10 times per second rather than 30. This reduces the execution time of the program and keeps motion tracking real-time.
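The split between a frame-processing loop and a periodic status check might look roughly like the following. This is a minimal sketch using Python's standard `threading` module; the class and function names, and the way frames are simulated with a list of booleans, are assumptions for illustration only.

```python
import threading
import time


class SharedStatus:
    """Latest per-frame result, shared between the main loop and the checker."""

    def __init__(self):
        self._lock = threading.Lock()
        self._moving = False

    def set(self, moving):
        with self._lock:
            self._moving = moving

    def get(self):
        with self._lock:
            return self._moving


def status_checker(status, stop, on_check, interval=0.1):
    """Call on_check with the latest status every `interval` seconds until stopped."""
    # Event.wait returns False on timeout and True once stop is set.
    while not stop.wait(interval):
        on_check(status.get())


def run_demo(frames, interval=0.1, frame_delay=0.03):
    """Process simulated frames on the main thread while a worker polls the status."""
    status = SharedStatus()
    stop = threading.Event()
    checks = []
    worker = threading.Thread(
        target=status_checker, args=(status, stop, checks.append, interval))
    worker.start()
    for moving in frames:       # stands in for reading and processing webcam frames
        status.set(moving)
        time.sleep(frame_delay)
    stop.set()
    worker.join()
    return checks
```

In a real program `on_check` would be the place to trigger input events; here it only records what the checker saw, for example `run_demo([True] * 30)`.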

Human Activity Recognition

In the exercise module, DirectX events are only triggered if the module decides the user is doing a particular exercise. Our program therefore needs to be able to classify the input video frames into an exercise category. This task belongs to a broader field of study called Human Activity Recognition, or HAR for short.

Recognizing human activities from video frames is a challenging task because the input videos differ widely in aspects like viewpoint, lighting and background. Machine learning is the most widely used solution to this task because it is an effective technique for extracting and learning knowledge from given activity datasets. Transfer learning also makes it easy to increase the number of recognized activity types starting from a pre-trained model. Because the input video can be viewed as a sequence of images, we used deep learning, convolutional neural networks and PyTorch to train a Human Activity Recognition model that outputs the action category for a given input image. Fig 3 shows the change of loss and accuracy during the training process. In the end, the accuracy of the prediction reached over 90% on the validation dataset.

Fig 3 – Loss and accuracy diagram of the training

Besides training the model, we used additional methods to increase the accuracy of exercise classification. For example, rather than changing the user status right after the model gives a prediction for the current frame, the status is decided based on 8 frames. This ensures the overall recognition accuracy won’t be influenced by one or two incorrect model predictions [Fig 4].

Fig 4 – Exercise recognition process
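One way to decide a status from 8 frames is a majority vote over the per-frame predictions. The text does not state the exact rule used, so the strict-majority rule below is an assumption, as are the function name and the example labels.

```python
from collections import Counter

WINDOW = 8  # frames per decision, as described in the text


def decide_status(predictions, target, window=WINDOW):
    """Return one True/False decision per full window of per-frame predictions.

    Assumption: the user counts as doing `target` only when it is the
    strict majority label within the window.
    """
    decisions = []
    for start in range(0, len(predictions) - window + 1, window):
        chunk = predictions[start:start + window]
        label, count = Counter(chunk).most_common(1)[0]
        decisions.append(label == target and count > window // 2)
    return decisions


# Two misclassified frames in the first window do not change the outcome.
frames = ["running"] * 6 + ["squatting"] * 2 + ["idle"] * 8
result = decide_status(frames, target="running")
```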

Another method we use to improve the accuracy is to ensure the shot size is similar in each input image. An image is a matrix of pixels: the closer the subject is to the webcam, the greater the number of pixels representing the user. That is why recognition is sensitive to how much of the subject is displayed within the frame.

To resolve this problem, in the exercise module, we ask the user to select the region of interest in advance. The images are then cropped to fit the selection [Fig 5]. The selection is stored as a config file and can be reused in the future.

Fig 5 – Region of interest selection
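Cropping to a stored region of interest can be sketched as follows. The config format is not specified in the text, so JSON with `x`, `y`, `w`, `h` keys is an assumption, as are the function names. Frames are nested lists here to keep the sketch dependency-free; with OpenCV, a selection could be obtained interactively with `cv2.selectROI`.

```python
import json


def crop(frame, roi):
    """Crop a frame (list of pixel rows) to the region (x, y, w, h)."""
    x, y, w, h = roi
    return [row[x:x + w] for row in frame[y:y + h]]


def save_roi(path, roi):
    """Store the selection so it can be reused (JSON layout is an assumption)."""
    x, y, w, h = roi
    with open(path, "w") as handle:
        json.dump({"x": x, "y": y, "w": w, "h": h}, handle)


def load_roi(path):
    """Load a previously saved selection as (x, y, w, h)."""
    with open(path) as handle:
        config = json.load(handle)
    return config["x"], config["y"], config["w"], config["h"]


# A 4x5 frame whose pixel values encode their position (row * 10 + column).
frame = [[row * 10 + col for col in range(5)] for row in range(4)]
cropped = crop(frame, (1, 1, 3, 2))
```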

DirectX

The open-source libraries used for computer vision are all in Python, so the ‘PyDirectInput’ library was found to be the most suitable for passing the data stream on as DirectX input events. PyDirectInput is highly efficient at translating these calls to DirectX.
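A status change could be turned into key events along these lines. This is a sketch, not the project's implementation: holding the key while exercising and releasing it on stopping is an assumed behaviour, and `KeyHold` and `RecordingBackend` are hypothetical names. The backend is passed in so the logic can be tried without Windows; on Windows the `pydirectinput` module itself, which provides `keyDown` and `keyUp`, could be passed as the backend.

```python
class RecordingBackend:
    """Stand-in for pydirectinput that records calls instead of sending input."""

    def __init__(self):
        self.events = []

    def keyDown(self, key):
        self.events.append(("keyDown", key))

    def keyUp(self, key):
        self.events.append(("keyUp", key))


class KeyHold:
    """Hold a key while the user is exercising and release it when they stop."""

    def __init__(self, backend, key="w"):
        self.backend = backend
        self.key = key
        self.held = False

    def update(self, exercising):
        # Only send an event when the status actually changes.
        if exercising and not self.held:
            self.backend.keyDown(self.key)
            self.held = True
        elif not exercising and self.held:
            self.backend.keyUp(self.key)
            self.held = False


backend = RecordingBackend()
hold = KeyHold(backend)          # on Windows: KeyHold(pydirectinput)
for status in [False, True, True, False]:
    hold.update(status)
```

Sending events only on a change of status keeps the number of input calls small, which fits the aim of not triggering DirectX events on every frame.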

Our Future Plan

For the future, we plan to add a way for the user to record gestures to a profile and store it in a catalogue. From there, on the configuration panel, they will be able to map mouse clicks, keyboard button presses and sequences of button presses to their specific gesture. These mappings will be saved as gesture catalogue files and can be reused on different devices.

We are also benchmarking and testing the latency between gestures performed and DirectX events triggered, to further evaluate efficiency markers and hardware limits and to expose timing figures in the user’s configuration panel.

We will be posting more videos on the progress of this work on our YouTube channels (so stay tuned!), and we look forward to submitting our final year dissertation project work, at which point we will have our open-source release candidate published for users to try out.

We would like to build a community interest group around this. If you would like to know more and join our MotionInput supporting DirectX community effort, please get in touch at d.mohamedally@ucl.ac.uk

Bonus clip for fun – Goat Simulator
