by Scott Muniz | Aug 4, 2020 | Alerts, Microsoft, Technology, Uncategorized

This article is contributed. See the original author and article here.

There are many benefits to having domain-joined lab VMs in Azure Lab Services, including allowing students to connect to a VM using their domain credentials. Each VM can be joined to the domain manually, but this is tedious and doesn’t scale when domain joining multiple VMs. To help with this, we have put together some Windows 10 PowerShell scripts that a lab owner can execute on the template VM so that every VM started in the lab is automatically joined to the domain and the student is added as a Remote Desktop user.

The scripts use the Windows Task Scheduler to automatically run a script when the student VM starts up. The first script, which the lab owner executes on the template VM, registers a scheduled task that will run another script at VM startup. The script then publishes the template VM to create the students’ lab VMs. When a student’s lab VM is started for the first time, the script that executes the domain join runs automatically. I recommend that the VM start and domain join occur before the students need to log in, as this may take several minutes. If you increase the lab capacity later, the new VMs will use the same configuration from the template VM and will be domain joined when they are started for the first time. However, if you change the template VM, such as by adding more software or changing the configuration, the first script will need to be run again to set up the scheduled tasks.

The script needs a user account, with its password, that has permission to join machines to the domain; you may need to work with your IT department to get the necessary information.

The Azure Lab Services team will be building this functionality directly into the product. In the meantime, these scripts will allow you to move forward with Lab Services.

Environments

These scripts work on the following configurations:

- On-premises Active Directory domain

- Hybrid Active Directory domain – An on-premises AD that is connected to Azure Active Directory through Azure AD Connect. AD Domain Services is installed on an on-premises server (see the diagram below). This also applies to federated domains.

- Azure AD DS Domains – For full-cloud AD (Azure AD + Azure AD DS) or Hybrid AD with secondary Domain Services on Azure.

Lab account and Lab setup

This section focuses on configuring your lab account and lab so that they are connected to your on-premises domain controller. You may need to work with your IT department to get the necessary information and permissions to set up the configuration properly.

1.) Either option will work:

– Connect your on-premises Domain Controller network to an Azure VNet, either with a site-to-site VPN gateway or with ExpressRoute.

– Create a secondary managed domain on top of your on-prem one with Azure AD DS (PaaS).

2.) Peer the Lab Account with the connected Virtual Network (VNet).

WARNING: The lab account must be peered to a virtual network before the lab is created.

3.) Create a new lab, with the option enabled to use the same password for all virtual machines.

Where are the scripts

The scripts are available on GitHub, along with a readme that has all the details about running them. The scripts require a domain user that can add VMs to the domain; you may need to contact your IT department to get the necessary information.

The scripts are designed to be modular. The first script, which is run on the template VM, is Join-AzLabADTemplate. It sets up the following scripts to run as scheduled tasks on each student VM:

- Join-AzLabADStudent_RenameVm which renames the VM to a unique name.

- Join-AzLabADStudent_JoinVM which joins the VM to the appropriate domain and, optionally, to an organizational unit.

- Join-AzLabADStudent_AddStudent which adds the student that the VM is registered to, to the Remote Desktop Users group so they can log in. If the VM isn’t registered to a user, the task is skipped.

Here are two additional scripts that aren’t part of the domain-join process that will help manage the VMs.

- Set-AzLabCapacity, which allows you to change the capacity of the lab from the template VM.

- Set-AzLabADVms, which starts all the VMs from the template VM. This script can be run to get all the VMs domain-joined instead of having the domain-join occur when the students start the VM.

If you have any questions, feel free to post them at the community forum. For issues with the scripts, add an issue to the GitHub repository.

Thanks

Roger Best

by Scott Muniz | Aug 4, 2020 | Alerts, Microsoft, Technology, Uncategorized


For organizations that operate a hybrid environment with a mix of on-premises and cloud apps, shifting to remote work in response to COVID-19 has not been easy. VPN solutions can be clumsy and slow, making it difficult for users to access legacy apps based on-premises or in private clouds. For today’s “Voice of the Customer” post, Nitin Aggarwal, Global Identity Security Engineer at Johnson Controls, describes how his organization overcame these challenges using the rich integration between Azure Active Directory (Azure AD) and F5 BIG-IP Access Policy Manager (F5 BIG-IP APM).

Enabling remote work in a hybrid environment

By Nitin Aggarwal, Global Identity Security Engineer, Johnson Controls

Johnson Controls is the world’s largest supplier of building products, technologies, and services. For more than 130 years, we’ve been making buildings smarter and transforming the environments where people live, work, learn and play. In response to COVID-19, Johnson Controls moved 50,000 non-essential employees to remote work in three weeks. As a result, VPN access increased by over 200 percent and usage spiked to 100 percent throughout the day. People had trouble sharing and were forced to sign in multiple times. To address this challenge, we enabled capabilities in F5 and Azure AD to simplify access to our on-premises apps and implement better security controls.

Securing a hybrid infrastructure

Our organization relies on a combination of hybrid and software-as-a-service (SaaS) apps, such as Zscaler and Workday, to conduct business-critical work. Our hybrid application set contains some legacy apps that are built on a code base that can’t be updated. One example is a directory access app that we use to look up employee information like first name, last name, global ID, and phone number. It’s critical that we keep this data protected, yet we also need to make our apps available to employees working offsite.

Johnson Controls uses Azure AD to make over 150 Microsoft and non-Microsoft SaaS apps accessible from anywhere. Many of our legacy apps, however, use header-based authentication, which does not easily integrate with modern authentication standards. To enable single sign-on (SSO) to legacy apps for workers inside the network, we used a Web Access Management (WAM) solution. Remote workers used a VPN. The long-term strategy is to modernize these apps, eliminate them, or migrate them to Azure. In the meantime, we need to make them more accessible.

About five months ago we began an initiative to enable authentication to our legacy apps using Azure AD. We wanted to make access easier and apply security controls, including conditional access. Initially we planned to rewrite the authentication model to support Azure AD, but all these apps use different code. Some were built with .NET. Others were written in Java or ran on Linux. It wasn’t possible to apply a single approach and quickly modernize authentication.

Migrating legacy apps to Azure AD in less than one hour

When our Microsoft team learned about our issues with our on-premises apps, they suggested we talk to F5. Johnson Controls uses F5 for load balancing, and F5 offers a product, F5 BIG-IP Access Policy Manager (F5 BIG-IP APM), that leverages the load-balancing solution to easily integrate with Azure AD. It requires no time-consuming development work, which was exactly what we were looking for.

If an app is already behind the F5 load balancer and the right team is in place, it can take as little as one hour to migrate apps to Azure AD authentication using F5 BIG-IP APM. We just needed to create the appropriate configurations in F5 and Azure AD. Once the apps are onboarded, whenever a user signs in, they are redirected to Azure AD. Azure AD authenticates the user, sends the attributes back to the legacy app and inserts them in the header. For users, the experience is the same whether they are accessing an on-premises app or a cloud app. They sign in once using SSO and gain access to both cloud and legacy apps. It’s completely seamless.

We started the onboarding process in November. After we moved to remote work in response to the epidemic, we accelerated the schedule. So far, we’ve migrated about 30 apps. We have 15 remaining.

Implementing a Zero Trust security strategy

With authentication for our apps handled by Azure AD, we can put in place the right security controls. Our security strategy is driven by a Zero Trust model. We don’t automatically trust anything that tries to access the network. As we move workloads to the cloud and enable remote work, it’s important to verify the identity of devices, users and services that try to connect to our resources.

To protect our identities, we’ve enabled a conditional access policy in conjunction with multi-factor authentication (MFA). When users are inside the network on a domain-joined device or connected via VPN, they can access with just a password. Anybody outside the network must use MFA to gain access. We are also using Azure AD Privileged Identity Management to protect global administrators. With Privileged Identity Management, users who want to access sensitive resources sign in using a different set of credentials from the ones they use for routine work. This makes it less likely that those credentials will be compromised.
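The access rule described above can be written down as a small decision function. This is only an illustrative sketch of one reading of that policy, not how Azure AD conditional access is actually configured; the function name `auth_requirement` and its yes/no arguments are hypothetical.

```shell
#!/bin/sh
# Illustrative only: models the policy as described in the text.
# Usage: auth_requirement INSIDE_NETWORK DOMAIN_JOINED ON_VPN   (each "yes" or "no")
auth_requirement() {
  # Password alone is enough when the user is inside the network on a
  # domain-joined device, or when the user is connected via VPN.
  if { [ "$1" = "yes" ] && [ "$2" = "yes" ]; } || [ "$3" = "yes" ]; then
    echo "password"
  else
    # Everyone else is treated as outside the network and must use MFA.
    echo "password+mfa"
  fi
}

auth_requirement yes yes no   # office user on a domain-joined device
auth_requirement no no yes    # remote user on VPN
auth_requirement no no no     # remote user without VPN
```

In a real deployment these conditions are expressed as named locations and device state in the conditional access policy itself rather than in a script.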

With Azure AD, we also benefit from Microsoft’s scale and availability. Before we migrated our apps from the WAM to Azure AD, there were frequently problems with access related to the WAM. With Azure AD we no longer worry about downtime. Remote work is easier for employees, and we feel more secure.

Support enabling remote work

If your organization relies on legacy apps for business-critical work, I hope you’ve found this blog useful. In the coming months, as you continue to support employees working from home, refer to the following resources for tips on improving the experience for you and your employees.

Top 5 ways Azure AD can help you enable remote work

Developing applications for secure remote work with Azure AD

Microsoft’s COVID-19 response

by Scott Muniz | Aug 4, 2020 | Alerts, Microsoft, Technology, Uncategorized


Final Update: Tuesday, 04 August 2020 07:38 UTC

We’ve confirmed that all systems are back to normal with no customer impact as of 08/04, 02:42 UTC. Our logs show the incident started on 08/04, 00:35 UTC and that during the 2 hours and 7 minutes that it took to resolve the issue some of the customers might have experienced delayed alerts. Alerts would have eventually fired.

- Root Cause: The failure was due to an issue in one of our back-end services.

- Incident Timeline: 2 Hours & 7 minutes – 08/04, 00:35 UTC through 08/04, 02:42 UTC

We understand that customers rely on Azure Monitor as a critical service and apologize for any impact this incident caused.

-Saika

by Scott Muniz | Aug 4, 2020 | Alerts, Microsoft, Technology, Uncategorized


Hello Folks,

As we announced last month (Announcing the general availability of Azure shared disks and new Azure Disk Storage enhancements) Azure shared disks are now generally available.

Azure shared disks is the only shared block storage in the cloud that supports both Windows and Linux-based clustered or high-availability applications. It allows a single disk to be attached to multiple VMs, which lets you run applications such as SQL Server Failover Cluster Instances (FCI), Scale-out File Servers (SoFS), Remote Desktop Servers (RDS), and SAP ASCS/SCS on Windows Server. This makes it easier to migrate applications that currently run on-premises on Storage Area Networks (SANs) to Azure.

Shared disks are available on both Ultra Disks and Premium SSDs.


Note that only basic disks can be used with some versions of Windows Server Failover Cluster; for details, see Failover clustering hardware requirements and storage options.

For this post we’ll deploy a 2-node Windows Server Failover Cluster (WSFC) using Cluster Shared Volumes. That way both VMs will have simultaneous write access to the disk, which results in the ReadWrite throttle being split across the two VMs and the ReadOnly throttle not being used. And we’ll do it using the new Windows Admin Center failover clustering experience.

Azure shared disks are supported on Windows Server 2008 and newer, and on the following Linux distros:

Currently, only ultra disks and premium SSDs can enable shared disks. Each managed disk that has shared disks enabled is subject to the following limitations, organized by disk type:

Ultra disks

Ultra disks have their own separate list of limitations, unrelated to shared disks. For ultra disk limitations, refer to Using Azure ultra disks.

When sharing ultra disks, they have the following additional limitations:

Shared ultra disks are available in all regions that support ultra disks by default, and do not require you to sign up for access to use them.

Premium SSDs

- Currently only supported in the West Central US region.

- Currently limited to Azure Resource Manager or SDK support.

- Can only be enabled on data disks, not OS disks.

- ReadOnly host caching is not available for premium SSDs with maxShares>1.

- Disk bursting is not available for premium SSDs with maxShares>1.

- When using Availability sets and virtual machine scale sets with Azure shared disks, storage fault domain alignment with virtual machine fault domain is not enforced for the shared data disk.

- When using proximity placement groups (PPG), all virtual machines sharing a disk must be part of the same PPG.

Let’s get on with the creation of our cluster. In my test environment I have two Windows Server 2019 VMs that will be used as our cluster nodes. They are joined to a domain through a DC in the same virtual network on Azure. Windows Admin Center (WAC) is running on a separate VM, and all these machines are accessed using an Azure Bastion server.

When creating the VMs, you need to ensure that you enable Ultra Disk compatibility in the Disk section. If your shared Ultra Disk is already created, you can attach it as you create the VM. In my case, I will attach it to the existing VMs in the next step.

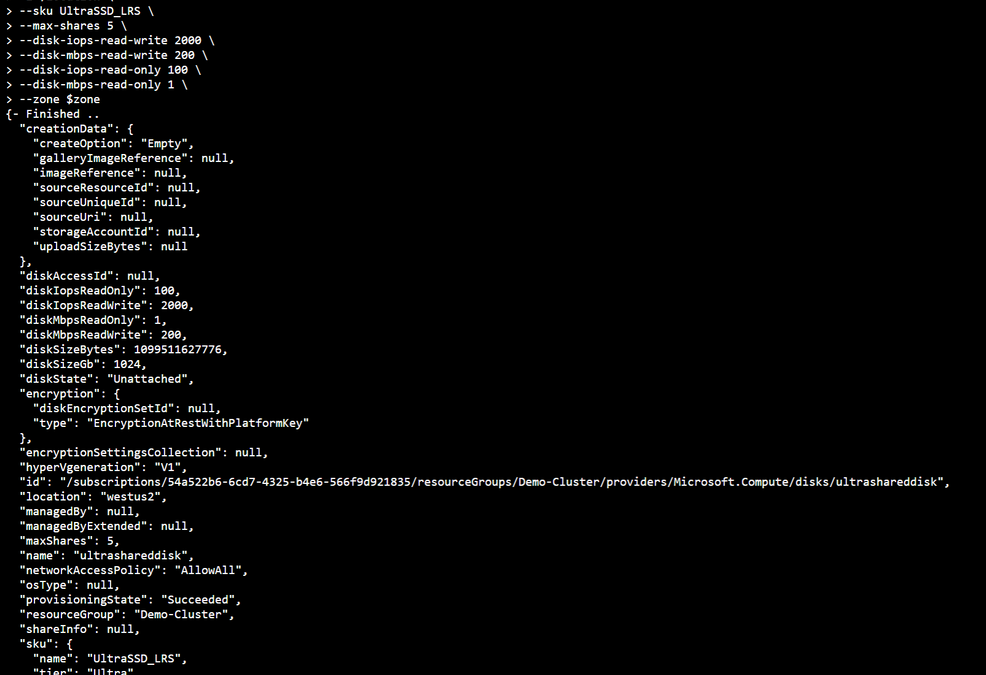

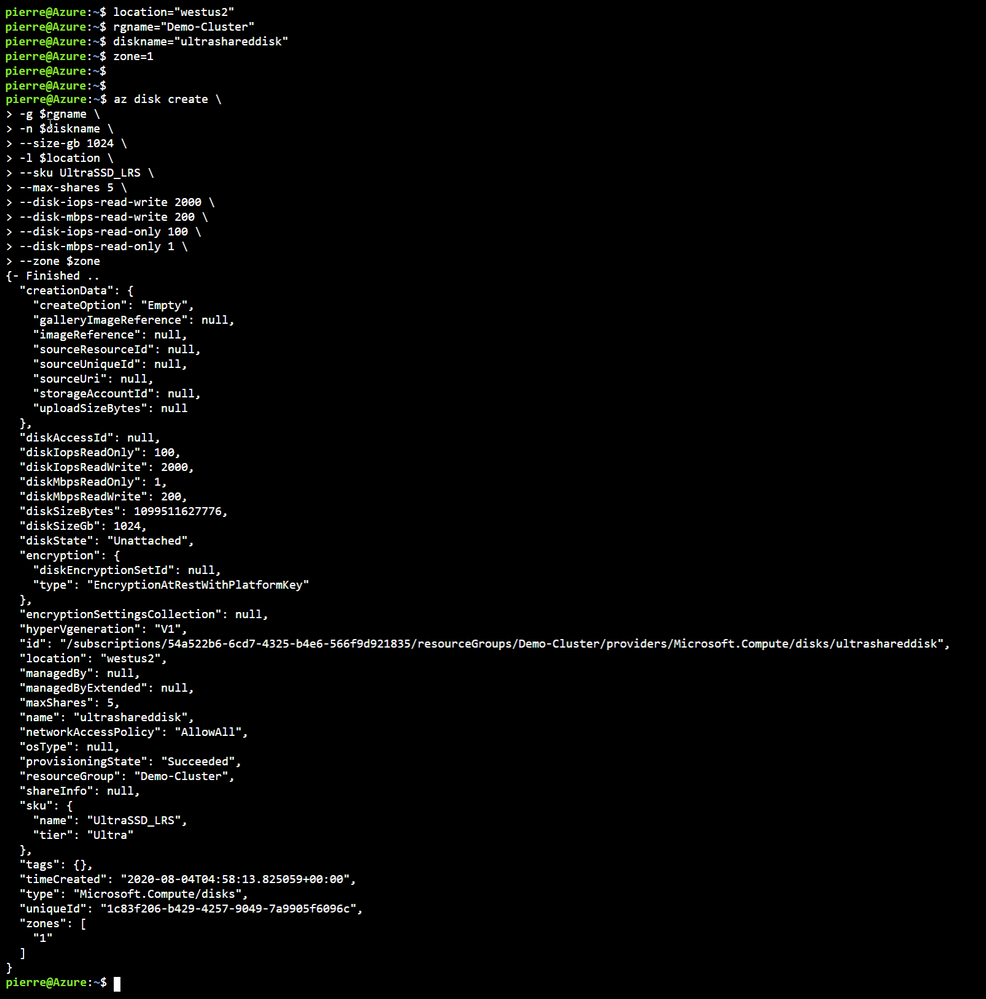

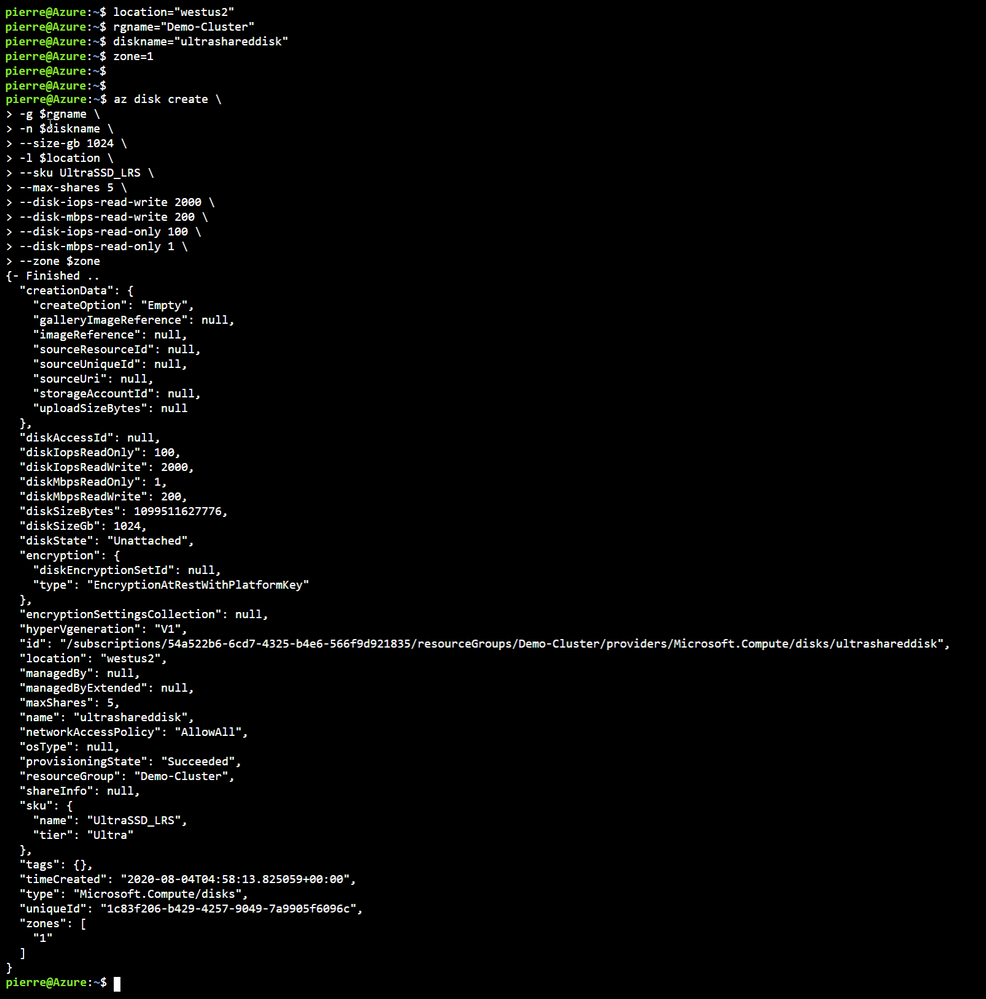
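For VMs that already exist without this setting, the Azure CLI can also switch it on. The following is a minimal sketch under some assumptions: the VM names `node1` and `node2` are hypothetical, the resource group is the `Demo-Cluster` group used later in this post, and a VM generally has to be stopped (deallocated) before the setting can be changed. By default the script only prints the commands; set `DRY_RUN=0` to execute them.

```shell
#!/bin/sh
# Sketch: enable Ultra Disk compatibility on existing VMs.
# Assumptions: VM names are hypothetical placeholders.
rgname="Demo-Cluster"
vms="node1 node2"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands only, 0 = run them

run() {
  # Print the command in dry-run mode, otherwise execute it.
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

enable_ultra_compat() {
  for vm in $vms; do
    run az vm deallocate -g "$rgname" -n "$vm"
    run az vm update -g "$rgname" -n "$vm" --ultra-ssd-enabled true
    run az vm start -g "$rgname" -n "$vm"
  done
}

enable_ultra_compat
```

Deallocating cluster nodes one at a time rather than in a loop may be preferable once the cluster is in use; the loop is kept simple here because the VMs are not yet clustered.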

First, we need to deploy an ultra disk as a shared disk. To deploy a managed disk with the shared disk feature enabled, you must ensure that the “maxShares” parameter is set to a value greater than 1. This makes the disk shareable across multiple VMs. I used Cloud Shell through the portal and the following Azure CLI commands to perform that operation. Notice that we also need to set the zone parameter to the same zone where the VMs are located (Azure shared disks across availability zones are not yet supported).

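    # Variables for the shared disk (bash syntax: no spaces around "=")
    location="westus2"
    rgname="Demo-Cluster"
    diskname="ultrashareddisk"
    zone=1

    # Create an ultra disk that up to 5 VMs can attach at the same time
    az disk create \
      -g "$rgname" \
      -n "$diskname" \
      --size-gb 1024 \
      -l "$location" \
      --sku UltraSSD_LRS \
      --max-shares 5 \
      --disk-iops-read-write 2000 \
      --disk-mbps-read-write 200 \
      --disk-iops-read-only 100 \
      --disk-mbps-read-only 1 \
      --zone "$zone"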

We end up with the following result:
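One way to confirm the result from the command line is to query the disk after it has been created. This is a small sketch that reuses the resource group and disk name from the commands above; the `run` helper and the `DRY_RUN` switch are my own additions so that the script prints the command by default instead of calling Azure.

```shell
#!/bin/sh
# Sketch: inspect the shared disk that was just created.
rgname="Demo-Cluster"
diskname="ultrashareddisk"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the command only, 0 = run it

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

check_shared_disk() {
  # maxShares should be greater than 1, and zones should match the VM zone.
  run az disk show -g "$rgname" -n "$diskname" \
    --query "{name:name, sku:sku.name, maxShares:maxShares, zones:zones}" \
    -o json
}

check_shared_disk
```

With `DRY_RUN=0`, the JSON output should show `maxShares` as 5 for the disk created in this post.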

Once the Shared Disk is created, we can attach it to BOTH VMs that will be our clustered nodes. I’ve attached the disk to the VMs through the Azure portal by navigating to the VM, and in the Disk management pane, clicking on the “+ Add data disk” and selecting the disk I created above.

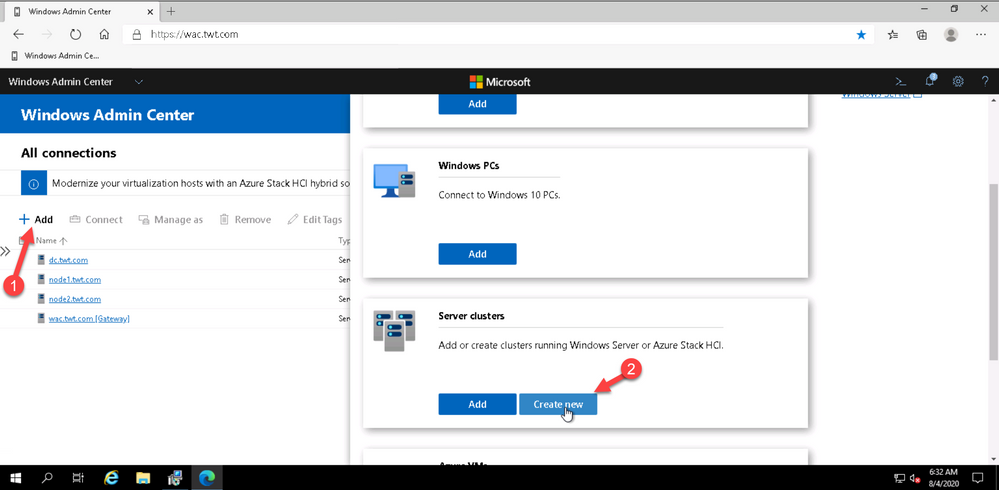
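The same attachment can be scripted instead of clicking through the portal. The sketch below assumes two hypothetical VM names (`node1` and `node2`) and reuses the resource group and disk name from earlier; as before, it only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: attach the shared disk to every cluster node.
# Assumptions: VM names are hypothetical placeholders.
rgname="Demo-Cluster"
diskname="ultrashareddisk"
vms="node1 node2"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands only, 0 = run them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

attach_shared_disk() {
  # The disk was created with maxShares > 1, so it can be attached
  # to more than one VM at the same time.
  for vm in $vms; do
    run az vm disk attach -g "$rgname" --vm-name "$vm" --name "$diskname"
  done
}

attach_shared_disk
```

The number of VMs in the list must not exceed the `--max-shares` value set when the disk was created.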

Now that the shared disk is attached to both VMs, I use the WAC cluster deployment workflow to create the cluster.

To launch the workflow, from the All Connections page, click on “+Add” and select “Create new” on the server clusters tile.

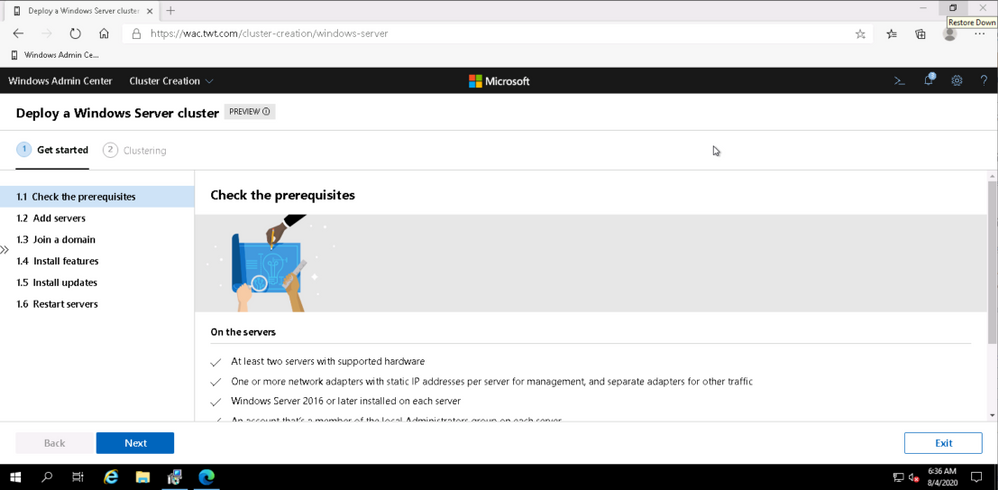

You can create hyperconverged clusters running Azure Stack HCI, or classic failover clusters running Windows Server (in one site or across two sites). In my case I’m deploying a traditional cluster in one site.

The cluster deployment workflow is included in Windows Admin Center without needing to install an extension.

At this point, just follow the prompts and walk through the workflow. Just remember that whenever the workflow asks for an account name and password, the username MUST be in the DOMAIN\USERNAME format.

Once I had walked through the workflow, I connected to Node 1 and added the disk to my Cluster Shared Volumes.

I then verified on the other node that I could see the Cluster Shared Volume.

That’s it!! My traditional WSFC is up and running and ready to host whatever application I need to migrate to Azure.

I hope this helped. Let me know in the comments if there are any specific scenarios you would like us to review.

Cheers!

Pierre

by Scott Muniz | Aug 4, 2020 | Alerts, Microsoft, Technology, Uncategorized


Today I am happy to announce an expansion of our longstanding partnership with Bitnami (now part of VMware) to deliver a collection of production-ready templates on the Azure marketplace for our open source database services—namely, MySQL, MariaDB, and Postgres.

In this blog post you can learn about the Bitnami Certified Apps (what many of us call “templates”) for our Azure Database for PostgreSQL services, which are available on the Azure Marketplace and are production-ready. You can also find Bitnami templates using Azure Database for MySQL and MariaDB.

More importantly, these Bitnami templates make it easy for you to manage the complexity of modern software deployments.

And when we say the Bitnami templates make it easy, we mean easy: these templates for our Azure open source databases give you a one-click solution to deploy your applications for production workloads. Bitnami packages the templates following industry standards—and continuously monitors all components and libraries for vulnerabilities and application updates.

Invent with purpose on Azure with Bitnami templates!

Bitnami templates available with PostgreSQL on Azure Marketplace

The current collection of Bitnami production-ready templates on the Azure Marketplace covers these applications:

- Airflow for PostgreSQL

- CKAN for Postgres—with Hyperscale (Citus)

The Bitnami Community Catalog on the Azure Marketplace gives you certified applications that are always up-to-date, highly secure, and built to work right out of the box.

In these solution templates for the Azure open source database services, our Azure and Bitnami engineering teams have worked together to incorporate all the best practices for performance, scale, and security—to make the Bitnami templates ready for you to consume, with no additional integration work necessary.

Airflow with PostgreSQL on Azure

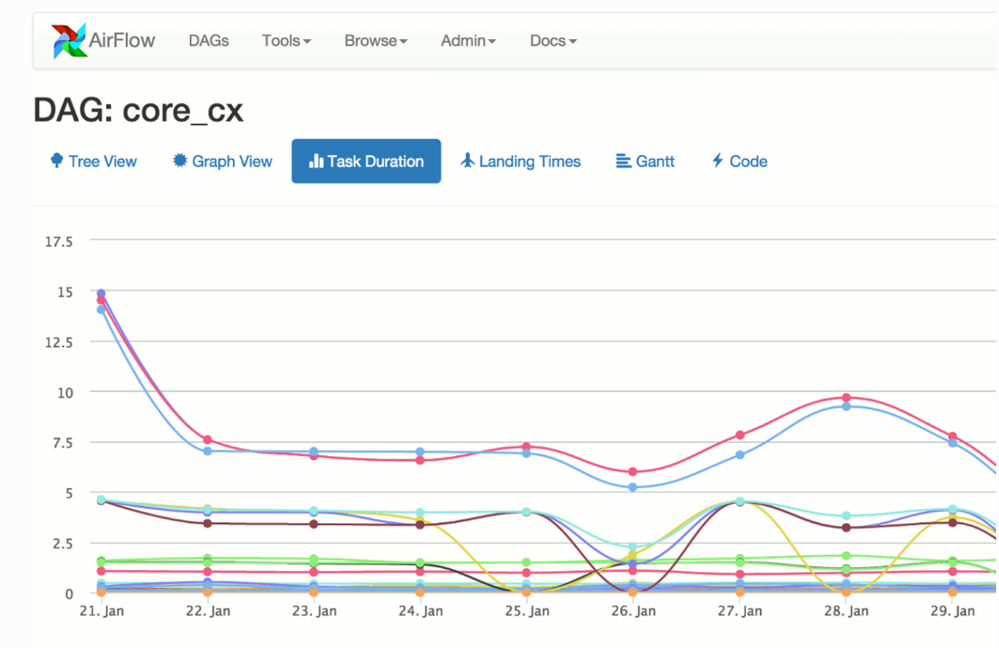

Caption: Airflow with Azure Database for PostgreSQL

You can build and manage your company’s workflows using an Apache Airflow solution that gives you high availability, better performance, and scalability. This Bitnami template for Airflow uses two virtual machines for the application front-end and scheduler, plus a configurable number of worker virtual machines. It also uses Azure Database for PostgreSQL and Azure Cache for Redis to store application data and queue tasks.

Try Airflow with Azure Database for PostgreSQL

CKAN with PostgreSQL & Hyperscale (Citus)

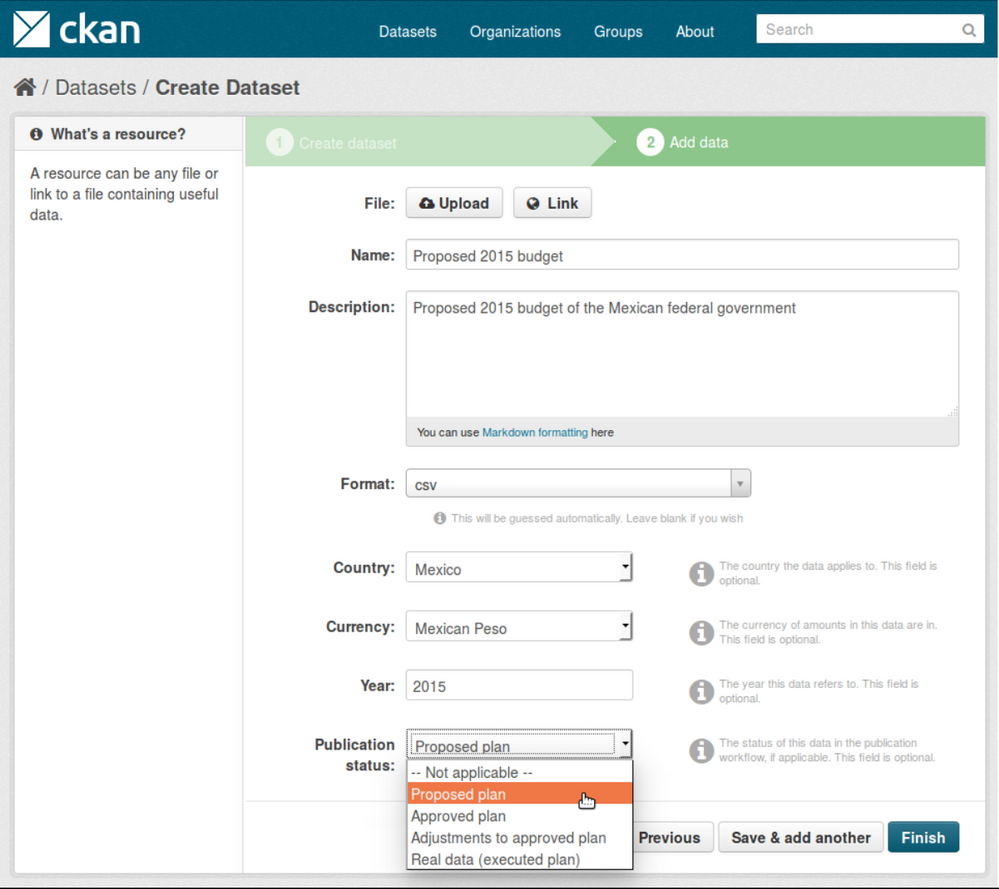

Caption: CKAN with Hyperscale PostgreSQL (Citus)

With this Bitnami template for CKAN, you can now build an open data management system on Azure that is based on CKAN. CKAN is used by various governments, organizations, and communities around the world when they need to store and process large amounts of data, from census data to scientific data. This is a scalable solution that uses several virtual machine instances to host the applications, which also include virtual machines for Solr and Memcached, and managed databases using Hyperscale (Citus) on Azure Database for PostgreSQL.

Try CKAN with Hyperscale Citus PostgreSQL

If you’re building an application on Azure using CKAN or Airflow with Azure Database for PostgreSQL or Hyperscale Citus, here are a few of the reasons I recommend you consider taking advantage of the Bitnami templates on the Azure Marketplace.

Bitnami templates lower your TCO

These Bitnami production-ready templates are available to you at no additional cost beyond the underlying Azure services being used to host the application. These services are already optimized to reduce the total cost of ownership using the elastic cloud infrastructure.

You also get Azure Advisor recommendations for your managed database service, so you can scale up or down based on your usage telemetry.

Managed database services on Azure enable you to focus on your application—not your database

Azure managed database services provide high availability with a 99.9% SLA and make it easy to scale your servers up or down based on what your application needs. You can easily back up and perform point-in-time restore for business continuity and disaster recovery. Using Hyperscale (Citus) on Azure Database for PostgreSQL, you can scale out your multi-tenant app horizontally on Azure, or build a real-time operational analytics app that gives your customers sub-second performance even with billions of rows.

Security benefits that are built into Azure

With these Bitnami templates on Azure, you can use built-in security features such as SSL connectivity to the database server and Azure role-based access control (RBAC) to control who has access to the server.
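As an illustration of the SSL point, a client can insist on an encrypted connection by setting `sslmode=require` in its PostgreSQL connection string. The sketch below only builds and prints such a string. The server, database, and user names are hypothetical, and the `user@servername` form and the `postgres.database.azure.com` host suffix assume an Azure Database for PostgreSQL single server; a Hyperscale (Citus) server group may use different values.

```shell
#!/bin/sh
# Sketch: build a libpq connection string that requires SSL.
# Assumptions: server, database, and user names are hypothetical.
server="mydemoserver"
database="airflow"
user="airflowadmin"

build_conninfo() {
  # $1 = server name, $2 = database, $3 = user
  # sslmode=require makes the client refuse unencrypted connections.
  printf 'host=%s.postgres.database.azure.com port=5432 dbname=%s user=%s@%s sslmode=require' \
    "$1" "$2" "$3" "$1"
}

conninfo="$(build_conninfo "$server" "$database" "$user")"
echo "$conninfo"
# To connect (prompts for the password): psql "$conninfo"
```

Keeping the password out of the connection string, as here, avoids leaving it in shell history or process listings.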

You can also use our Advanced Threat Protection feature for the managed databases to detect anomalous activities indicating unusual and potentially harmful attempts to access or exploit databases.

You can also read this blog post from Bitnami (now part of VMware). We are thrilled to work with Bitnami and focus on simplifying the experience for developers to build solutions using community-based applications like CKAN and Airflow.

What our leaders are saying about the Bitnami & Azure collaboration

Sunil Kamath is Director of Product Management for OSS databases at Microsoft Azure Data—here is Sunil’s take on the importance of our partnership:

“Developers want simple, fast ways to deploy production-ready solutions on the cloud. We have been excited about our partnership with Bitnami, now part of VMware, to deliver what customers say they care about the most. Today, together with Bitnami, we are thrilled to launch new production-ready and enterprise-grade Bitnami templates for WordPress, Drupal, Magento, and more, making it easier than ever for developers to run these solutions on the Azure cloud. These solutions are built to fully utilize the best-in-class intelligence, enterprise security, and scalability offered by Azure database services for MySQL, MariaDB, and PostgreSQL.”

Daniel Lopez is the former CEO/Founder of Bitnami and is now Sr. Director R&D at VMware. When asked for his perspective on the partnership with Microsoft Azure, Daniel said:

“Bitnami has worked closely with Microsoft for many years to provide Azure customers with a wide array of ready to deploy open source software in a variety of formats including virtual machines, containers, Helm Charts, and ARM Templates; and across environments including Azure and Azure Stack. Our recent expansion of this partnership with the Azure Data team is a particularly exciting area of development as we’re bringing together the convenience and simplicity of Bitnami applications with the power and scalability of Azure Data services; creating a low-friction and high-value win for customers. We’re also proud of this collaboration in highlighting the type of innovation and benefits the cloud operating model allows us to unlock.”

Want to learn more about Azure open source databases & Bitnami?

Below are some resources if you want to dig in further and try out some of these Bitnami production-ready templates with our Azure open source databases.

Oh and if you have ideas for more Bitnami templates we should create that you think you and other developers would benefit from on the Azure Marketplace, please provide feedback on UserVoice. We would love your input.
