This article is contributed. See the original author and article here.

This is a step-by-step example, based on a support case, of how to use MSI when connecting from a Spark notebook. It is aimed at beginners in Synapse who have some knowledge of workspace configuration, such as linked services.

Scenario: The customer wants to configure the notebook to authenticate without AAD passthrough, using only the workspace managed identity (MSI).

By default, Synapse uses Azure Active Directory (AAD) passthrough for authentication between resources. The idea here is to use the Synapse linked service configuration inside the notebook instead.

_When the linked service authentication method is set to Managed Identity or Service Principal, the linked service will use the Managed Identity or Service Principal token with the LinkedServiceBasedTokenProvider provider._
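In practice, the notebook passes the linked service to Spark through two configuration keys, the same ones set in Step 2. The sketch below groups them in a small helper so they are applied in one place. `LinkedServiceAuth` and `settings` are hypothetical names for illustration and are not part of any Synapse API; only the key names and the provider class come from this post.

```scala
// Hypothetical helper (not a Synapse API): collects the two Spark settings used in
// Step 2 so a notebook can apply them together. Key names and provider class are
// taken from this post.
object LinkedServiceAuth {
  val LinkedServiceKey = "spark.storage.synapse.linkedServiceName"
  val ProviderTypeKey  = "fs.azure.account.oauth.provider.type"
  val TokenProvider    =
    "com.microsoft.azure.synapse.tokenlibrary.LinkedServiceBasedTokenProvider"

  // Returns the settings for the given linked service name.
  // A blank name is rejected early instead of failing later at read time.
  def settings(linkedServiceName: String): Map[String, String] = {
    require(linkedServiceName.trim.nonEmpty, "linked service name must not be blank")
    Map(
      LinkedServiceKey -> linkedServiceName,
      ProviderTypeKey  -> TokenProvider
    )
  }
}
```

In a notebook cell you could then apply them with `LinkedServiceAuth.settings("LinkedServerName").foreach { case (k, v) => spark.conf.set(k, v) }`, where `spark` is the session that Synapse provides.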

The purpose of this post is to walk through this configuration step by step:

Prerequisites:

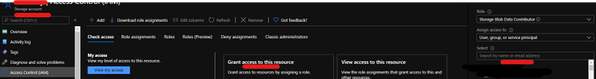

- Permissions: the Synapse workspace managed identity (MSI) must be assigned the Storage Blob Data Contributor RBAC role on the storage account.

- Firewall: this works with or without the firewall enabled on the storage account; enabling the firewall is not mandatory.

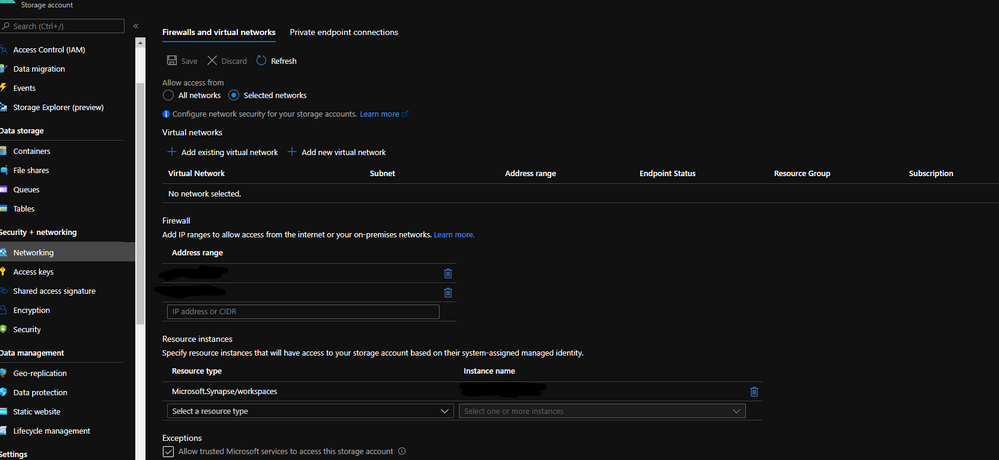

- However, if you have enabled the firewall on the storage account for security reasons, make sure it is configured as described in:

- Configure Azure Storage firewalls and virtual networks | Microsoft Docs

My example uses a storage account with the firewall enabled.

When you allow trusted Azure services in the storage account's networking settings, you grant the following types of access:

- Trusted access for select operations to resources that are registered in your subscription.

- Trusted access to resources based on system-assigned managed identity.

- For more information on this subject, see: Connect to a secure storage account from your Azure Synapse workspace – Azure Synapse Analytics | Microsoft Docs

Step 1:

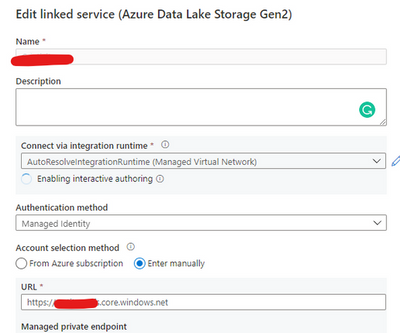

Open Synapse Studio and configure a linked service to this storage account, using Managed Identity (MSI) as the authentication method:

Test the connection and confirm that it succeeds.

Step 2:

Use spark.conf.set to point the notebook to the linked service, as documented:

```scala
// Replace with your linked service name
val linked_service_name = "LinkedServerName"

// Allow Spark to access the storage account remotely through the linked service
val sc = spark.sparkContext
spark.conf.set("spark.storage.synapse.linkedServiceName", linked_service_name)
spark.conf.set("fs.azure.account.oauth.provider.type", "com.microsoft.azure.synapse.tokenlibrary.LinkedServiceBasedTokenProvider")

// Replace the container and storage account names
val path = "abfss://Container@StorageAccount.dfs.core.windows.net/"
println("Remote blob path: " + path)

// List the contents of the container with the Microsoft Spark utilities
mssparkutils.fs.ls(path)
```

In my example, I use mssparkutils to list the contents of the container.
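If you work with several containers, assembling the `abfss://` path by hand is easy to get wrong. Below is a minimal sketch, assuming the standard ADLS Gen2 URI format `abfss://<container>@<account>.dfs.core.windows.net/<path>` shown in the code above. `AbfssPath` is a hypothetical helper for illustration, not part of mssparkutils or Synapse.

```scala
// Hypothetical helper (not part of mssparkutils): builds an ADLS Gen2 URI of the form
// abfss://<container>@<account>.dfs.core.windows.net/<optional relative path>
object AbfssPath {
  def apply(container: String, account: String, path: String = ""): String = {
    require(container.nonEmpty && account.nonEmpty, "container and account are required")
    // Strip leading slashes so the result never has "//" right after the host name.
    val relative = path.dropWhile(_ == '/')
    s"abfss://$container@$account.dfs.core.windows.net/$relative"
  }
}
```

In the notebook this would replace the hard-coded string, for example `mssparkutils.fs.ls(AbfssPath("container", "storageaccount"))`.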

You can read more about mssparkutils here: Introduction to Microsoft Spark utilities – Azure Synapse Analytics | Microsoft Docs

Additionally:

The following references cover granting permissions to the Synapse workspace:

- Step 1: Managed identities for Azure resource authentication

- Step 2: ADLS Gen2 storage with linked services

That is it!

Liliam, UK Engineer

Brought to you by Dr. Ware, Microsoft Office 365 Silver Partner, Charleston SC.
