
It’s been an eventful time for Hyperscale (Citus) lately. If you’re interested in Postgres, distributed databases, and how to handle the ever-growing needs of your Postgres application, or if you simply use Hyperscale (Citus), keep reading.

Citus is an open source extension to Postgres that enables horizontal scaling of your Postgres database. Citus distributes your Postgres tables, writes, and SQL queries across multiple nodes—parallelizing your workload and enabling you to use the memory, compute, and disk of a multi-node cluster. And Citus is available on Azure: Hyperscale (Citus) is a deployment option in Azure Database for PostgreSQL.

What’s really exciting to me is that we’ve made it easier and cheaper than ever to try and use Hyperscale (Citus). With Basic tier, you can now use Hyperscale (Citus) on a single node, parallelizing your operations and adopting a distributed database model from the very beginning. And you can now try Citus open source with a single docker run command—boom!

And Hyperscale (Citus) can scale to serve some big applications: it’s used to manage public transport in a large European capital, to handle ongoing market analysis in one of the biggest banks in the world, and to power the UK Coronavirus Dashboard. Lots of use cases can benefit from scaling out Postgres.

So what’s new with Hyperscale (Citus)? Lots. In the last month we launched these new features in preview:

- Basic tier: with Basic tier, you can now run Hyperscale (Citus) on a single node for as little as $0.27 USD/hour[1]

- Postgres 12 & Postgres 13: for the latest developments in Postgres

- Citus 10: The latest version of Citus with all the new capabilities—including columnar compression

- Read replicas in the same region for unlimited read scalability

- Managed PgBouncer: so you no longer need to set up and maintain your own PgBouncer

And there’s more! We have also rolled out:

- Custom schedules for maintenance

- Shard rebalancing features in the Azure portal

You can go ahead and try the new Hyperscale (Citus) features right now—whether they are still in preview or have already GA’d. This post will walk you through the new features that were recently added to Hyperscale (Citus) and how you can benefit. Ready? Let’s dive in.

What is the new Basic tier for Hyperscale (Citus)?

Some of you gave us feedback that you wanted us to create a smaller Hyperscale (Citus) cluster, to make it easier to get started and to try out Hyperscale (Citus). We heard you loud and clear.

Think about it: 20 worker nodes with 64 vCores in each node would give you 1,280 vCores with 8 TB+ of RAM to run your Postgres database. That is a lot of power. And in many cases, you don’t need it (yet). Or you need something smaller than even a 2-node cluster for a development, test, or staging environment.

So in Preview, we are now introducing a Basic tier.

The new Basic tier in Hyperscale (Citus) allows you to shard Postgres on a single node, so you are “scale-out ready” and can use a distributed data model from the very start, even while you are still running on a single-node database. And it’s easy to add worker nodes to your Hyperscale (Citus) Basic tier when you need to. When you do, you’re effectively converting your Basic tier configuration to a Standard tier.

And the configuration with 1 coordinator and 2 or more worker nodes that you used to know is now called “Standard tier”.

Some of you who have been using Citus for a while told us that if you could rewind the clock, you would have started using Citus earlier, even when your Postgres database was smaller. Now you can, by using Basic tier!

And you can select the Postgres version of your choice (11, 12, or 13) for your Basic and Standard tiers. Which brings me to my next point.

Postgres 12 and 13

One of the tough challenges a PM faces with a popular cloud database service like Postgres is prioritization. You keep talking to your customers and you feel how much they need this new functionality. And that one. And another one. It is great to see how many customers are asking for so many things: there is definitely a lot of interest in your service! But it also means that some much-needed capabilities will have to wait until the team delivers others. No matter how big (or small) the team is, you can’t get it all at the same time.

One of the tradeoffs we previously made for Hyperscale (Citus) was to delay support for the latest Postgres versions. The good news is, now we are catching up and are extremely happy to offer Postgres 12 and Postgres 13 support in Hyperscale (Citus).

With the addition of Postgres 12 and Postgres 13, you may ask: how can I upgrade my Hyperscale (Citus) cluster to the latest Postgres version? You can initiate a major Postgres version upgrade for your cluster with a few clicks in the Azure portal. The service performs the upgrade on all nodes in your Hyperscale (Citus) cluster and keeps all configuration, including the server group name and connection string, the same.

One of the advantages of having the latest Postgres versions, in addition to the new capabilities in these major Postgres versions, is the ability to use the latest Citus version! Let’s take a closer look at why you could be interested in the latest Citus version.

Almighty Citus 10

OK, maybe not almighty, but look at what the Citus database team delivered this time!

In case you didn’t know, we have a dedicated team in Azure Data that is working full time on …the open source Citus extension! That’s right. You can run a Citus cluster on your own anywhere if you don’t need any of the advantages provided by a managed database service. No strings attached and we love our Citus open source community. However, many customers would like us, Azure Data, to run their databases for them and take care of updates, security, backups, BCDR, and many other important things that frankly you can spend a lot of time setting up and maintaining as your databases grow. This way you can focus on what matters most to you: your application. And we love to help you with it.

But let’s get back to Citus 10 in Hyperscale (Citus). With Citus 10 support in Hyperscale (Citus), you can:

- Compress your tables to reduce storage cost and speed up your analytical queries using columnar storage.

- Use joins and foreign keys between local PostgreSQL tables and distributed tables.

- Use the new alter_distributed_table function to change your distribution key, shard count, colocation properties and more.

- And there’s more: more DDL commands supported, better SQL support, and new views to see the state of your cluster with citus_tables and citus_shards.

Let’s see what these new capabilities are.

Columnar compression with Citus 10

Postgres typically stores data using the heap access method, which is row-based storage. Row-based tables are good for transactional workloads but can cause excessive IO for some analytic queries.

Columnar storage provides another way to store data in a Postgres table, by grouping data by column instead of by row.

So what are some of the benefits of columnar?

- Compression reduces storage requirements.

- Compression reduces IO needed to scan the table.

- Performance: Queries can skip over the columns that they don’t need, further reducing IO.

All of these together mean faster queries and lower costs!

To use the new columnar feature with Hyperscale (Citus), you just need to create tables with the new USING columnar syntax, and you’re ready to go (of course, read the docs, too!).

And finally, you can mix and match columnar and row tables and partitions; you can also mix and match local and distributed columnar tables; and you can use columnar with Basic tier on a single node as well as on a distributed Citus cluster in Standard tier. There are lots more details in Jeff’s “Quickstart” blog posts about using Columnar in Hyperscale (Citus)—as well as using columnar with Citus open source. Oh, and Jeff made a video demo about Citus Columnar too.
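To make the USING columnar syntax a little more concrete, here is a minimal Python sketch that builds the DDL for a row-based table and a columnar table side by side. The table and column names are hypothetical, and the helper is only an illustration: it interpolates identifiers directly, so it should only ever see trusted names.

```python
from typing import Mapping


def create_table_sql(table: str, columns: Mapping[str, str], columnar: bool = False) -> str:
    """Return a CREATE TABLE statement, optionally with the columnar access method."""
    column_list = ", ".join(f"{name} {sql_type}" for name, sql_type in columns.items())
    # Without a USING clause, Postgres falls back to its default row-based heap storage.
    access_method = " USING columnar" if columnar else ""
    return f"CREATE TABLE {table} ({column_list}){access_method};"


# Hypothetical schema, for illustration only.
EVENT_COLUMNS = {"event_id": "bigint", "event_time": "timestamptz", "payload": "text"}

ROW_TABLE_SQL = create_table_sql("events_row", EVENT_COLUMNS)
COLUMNAR_TABLE_SQL = create_table_sql("events_columnar", EVENT_COLUMNS, columnar=True)

print(ROW_TABLE_SQL)
print(COLUMNAR_TABLE_SQL)
```

The only difference between the two statements is the trailing USING columnar clause, which is what makes it easy to try columnar on a copy of an existing table.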

Use joins and foreign keys between local and distributed tables

If you have a very large Postgres table and a data-intensive workload (e.g. the frequently-queried part of the table exceeds memory), then the performance gains from distributing the table over multiple nodes with Citus will vastly outweigh any downsides. However, if most of your other Postgres tables are small, then you may not get much additional benefit by distributing them.

A simple solution for you would be to not distribute the smaller Postgres tables at all!

Because the Citus coordinator is just a regular Postgres server, you can keep some of your tables as local, regular Postgres tables that live on the Citus coordinator. That’s right, you don’t need to distribute all of your tables with Citus.

Here’s an example of how you could organize your database:

- take your large tables and distribute them across a cluster with Citus,

- convert smaller tables that frequently JOIN with distributed tables into reference tables,

- convert smaller tables that have foreign keys from distributed tables into reference tables,

- keep all other tables as local PostgreSQL tables that stay on the coordinator.

That way, you can scale out compute, memory, and IO where you need it—and minimize application changes and other trade-offs where you don’t.

To make this model work seamlessly, Citus 10 adds support for 2 important features:

- foreign keys between local Postgres tables and reference tables

- direct joins between local Postgres tables and distributed tables

With these new features, you can use Postgres tables and Citus distributed tables in combination to get the best of both worlds.
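As a rough sketch of the mixed layout described above, the Python snippet below lists the SQL you might run on the coordinator: one reference table, one distributed table, and one plain local table, followed by a join between the local and the distributed table. The schema is hypothetical; create_reference_table and create_distributed_table are the Citus functions for converting tables. The cursor argument is assumed to be any DB-API style cursor with an execute method.

```python
# Hypothetical schema: all table and column names are illustrative only.
SETUP_STATEMENTS = [
    # Small lookup table, converted into a reference table.
    "CREATE TABLE countries (code text PRIMARY KEY, name text);",
    "SELECT create_reference_table('countries');",
    # Large table with a foreign key to the reference table, sharded by tenant_id.
    "CREATE TABLE events ("
    "tenant_id bigint, event_id bigint, "
    "country_code text REFERENCES countries (code), "
    "PRIMARY KEY (tenant_id, event_id));",
    "SELECT create_distributed_table('events', 'tenant_id');",
    # Regular Postgres table that is never distributed and stays on the coordinator.
    "CREATE TABLE tenant_notes (tenant_id bigint PRIMARY KEY, note text);",
]

# Join between the local table and the distributed table.
LOCAL_DISTRIBUTED_JOIN = (
    "SELECT n.note, count(*) "
    "FROM tenant_notes n JOIN events e ON e.tenant_id = n.tenant_id "
    "GROUP BY n.note;"
)


def apply_statements(cursor, statements):
    """Run each statement in order on a DB-API style cursor (assumed interface)."""
    for statement in statements:
        cursor.execute(statement)
```

The ordering matters in this sketch: the reference table exists before the distributed table that points at it, and only the tables that need to scale are converted.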

Change your distribution key if you need to

When you distribute a table, choosing your distribution column is an important step, since the distribution column determines which constraints you can create, how (fast) you can join tables, and more.

With Citus 10 you can change the distribution column, shard count, and co-location of a distributed table using the new alter_distributed_table function.

Internally, alter_distributed_table reshuffles the data between the worker nodes, which means it is fast and works well on very large tables. For instance, using this capability makes it much easier to experiment with distributing your tables without having to reload your data.

You can also use the function in production (it’s fully transactional!), but you do need to:

(1) make sure that you have enough disk space to store the table several times, and

(2) make sure that your application can tolerate blocking all writes to the table for a while.
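Here is a small, hedged Python sketch of how a call to alter_distributed_table could be assembled. It only builds the statement and its parameters; the %s placeholders assume a psycopg2-style driver, and the table and column names in the usage line are hypothetical. The named arguments (distribution_column, shard_count, colocate_with) follow the Citus function's parameters, but check the docs for your version before relying on them.

```python
from typing import Optional, Tuple


def alter_distributed_table_call(
    table: str,
    distribution_column: Optional[str] = None,
    shard_count: Optional[int] = None,
    colocate_with: Optional[str] = None,
) -> Tuple[str, tuple]:
    """Return (sql, params) for a cursor.execute() call; nothing is executed here."""
    args = ["%s"]
    params = [table]
    options = (
        ("distribution_column", distribution_column),
        ("shard_count", shard_count),
        ("colocate_with", colocate_with),
    )
    for name, value in options:
        if value is not None:
            # Named-argument notation keeps each change explicit in the SQL.
            args.append(f"{name} := %s")
            params.append(value)
    if len(params) == 1:
        raise ValueError("specify at least one property to change")
    return f"SELECT alter_distributed_table({', '.join(args)});", tuple(params)


# Hypothetical usage: re-distribute "events" by customer_id with 64 shards.
sql, params = alter_distributed_table_call(
    "events", distribution_column="customer_id", shard_count=64
)
print(sql, params)
```

Because the data is rewritten during the change, the two caveats above (disk space and blocked writes) apply to whatever statement a helper like this produces.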

Read scalability via read replicas

Some of you might have sizable read needs that are hard to satisfy with just one database. For instance, dozens or hundreds of business analysts across your company might hit your database hard with queries but are not going to write to your database. That is when a read replica of your Hyperscale (Citus) server group, running alongside the primary cluster, can help.

You can now create one or more read-only replicas of a Hyperscale (Citus) server group.

Any changes that happen to the original server group get promptly reflected in its read replicas via asynchronous replication, and queries against the read replicas cause no extra load on the original. The replica is a safe place for you to run big report queries.

The replica cluster is distinct from the original and has its own database connection string. You can also change the compute configuration separately on each replica. You can create an unlimited number of read replicas without a performance penalty on the primary cluster.
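Since each replica has its own connection string, the application decides where each query goes. The sketch below shows one possible way to do that in Python: writes (and reads that must see the latest data) go to the primary, while report-style reads rotate across the replicas. The class name and the connection strings are hypothetical, and because replication is asynchronous, a replica may briefly lag behind the primary.

```python
from itertools import cycle
from typing import Sequence


class ConnectionRouter:
    """Pick a connection string per query; a sketch, not a full connection pool."""

    def __init__(self, primary_dsn: str, replica_dsns: Sequence[str] = ()):
        self.primary_dsn = primary_dsn
        # Rotate through the replicas so read traffic is spread across them.
        self._replicas = cycle(replica_dsns) if replica_dsns else None

    def dsn_for(self, read_only: bool) -> str:
        if read_only and self._replicas is not None:
            return next(self._replicas)
        # Writes, and reads when no replica exists, go to the primary.
        return self.primary_dsn


# Hypothetical connection strings, for illustration only.
router = ConnectionRouter(
    "postgres://primary.example.com:5432/citus",
    ["postgres://replica-1.example.com:5432/citus",
     "postgres://replica-2.example.com:5432/citus"],
)
print(router.dsn_for(read_only=False))
print(router.dsn_for(read_only=True))
```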

Managed PgBouncer

Each client connection to PostgreSQL consumes a noticeable amount of resources. To protect resource usage, Hyperscale (Citus) enforces a hard limit of 300 concurrent connections to the coordinator.

What if you require more client connections for some reason? While you can always set up your preferred connection pooler in front of the Hyperscale (Citus) coordinator, doing so takes additional effort to set up and maintain.

To improve connection scaling, Hyperscale (Citus) now comes with PgBouncer. If your application requires more than 300 connections, change the port in the connection URL from 5432 to 6432. This will connect to PgBouncer rather than directly to the coordinator, allowing up to roughly 2,000 simultaneous connections.

This new Managed PgBouncer capability in Hyperscale (Citus) will give you all the capabilities of your self-managed PgBouncer—combined with managed service benefits such as automatic updates without connection interruption. And if HA is enabled for your Hyperscale (Citus) cluster, managed PgBouncer is going to be highly available too.
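The port switch described above is small enough to sketch in a few lines of Python. The helper below rewrites a connection URL so that it points at port 6432 instead of 5432, leaving the user, host, database, and query string untouched. The function name and the example host are hypothetical; only the two port numbers come from this post.

```python
from urllib.parse import urlsplit, urlunsplit

DIRECT_PORT = 5432      # coordinator, as described in the post
PGBOUNCER_PORT = 6432   # managed PgBouncer, as described in the post


def use_managed_pgbouncer(url: str) -> str:
    """Return the same connection URL, pointed at the PgBouncer port."""
    parts = urlsplit(url)
    if parts.port == PGBOUNCER_PORT:
        return url  # already going through PgBouncer
    if parts.port not in (None, DIRECT_PORT):
        raise ValueError(f"unexpected port {parts.port}, refusing to rewrite")
    # Split off "user:password@" so that only the host:port part is edited.
    userinfo, at_sign, hostport = parts.netloc.rpartition("@")
    host = hostport.rsplit(":", 1)[0] if parts.port is not None else hostport
    netloc = f"{userinfo}{at_sign}{host}:{PGBOUNCER_PORT}"
    return urlunsplit(parts._replace(netloc=netloc))


# Hypothetical connection URL, for illustration only.
direct_url = "postgres://citus:secret@c.mygroup.example.com:5432/citus?sslmode=require"
print(use_managed_pgbouncer(direct_url))
```

Refusing to rewrite unexpected ports is a deliberate choice in this sketch: it avoids silently redirecting a URL that was never pointing at the coordinator in the first place.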

More scheduling choices for maintenance windows

Having an up-to-date database engine (Postgres), operating system (Linux), and other service components is one of the big benefits of any managed database service. Updates, however, come at the price of the downtime required to apply them to your system.

For a while now, Hyperscale (Citus) has posted notifications about scheduled maintenance events 5 days before the actual update—plus we’ve had a policy of doing maintenance at least 30 days after the last successful update.

Now you have even more control over planned maintenance events: you can define your preferred day of the week and time window on that day when maintenance for your Hyperscale (Citus) cluster should be scheduled. So you now get to choose between 2 different types of scheduling options for each of your Hyperscale (Citus) clusters:

- System managed schedule: The default maintenance scheduling option is to let the system pick a day and a 30-minute time window between 11pm and 7am in the time zone of your Azure region geography.

- Custom maintenance schedule: You can select a day of the week and a 30-minute time window, e.g. Sunday 01:00-01:30 am, when maintenance events should be scheduled for that cluster.

You will get notifications about scheduled maintenance 5 days in advance regardless of what schedule your cluster is on.
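To illustrate what a custom schedule pins down, the Python sketch below computes the next 30-minute slot for a chosen weekday and start time, plus the point 5 days earlier when a notification would be due. It is only a calendar calculation under stated assumptions: the function names are hypothetical, times are naive (no time zone handling), and the service itself decides in which week maintenance actually takes place.

```python
from datetime import datetime, time, timedelta
from typing import Tuple

WINDOW_LENGTH = timedelta(minutes=30)  # window size, from the post
NOTICE_PERIOD = timedelta(days=5)      # advance notice, from the post
SUNDAY = 6                             # datetime.weekday(): Monday is 0, Sunday is 6


def next_custom_window(now: datetime, weekday: int, start: time) -> Tuple[datetime, datetime]:
    """Return (start, end) of the next slot on the given weekday after `now`."""
    days_ahead = (weekday - now.weekday()) % 7
    window_start = datetime.combine(now.date() + timedelta(days=days_ahead), start)
    if window_start <= now:
        # This week's slot has already begun or passed, so use next week's.
        window_start += timedelta(days=7)
    return window_start, window_start + WINDOW_LENGTH


def notification_due(window_start: datetime) -> datetime:
    """Point in time 5 days before a scheduled maintenance window."""
    return window_start - NOTICE_PERIOD


# Example: the "Sunday 01:00-01:30 am" schedule, evaluated on a Wednesday at noon.
window_start, window_end = next_custom_window(datetime(2021, 6, 2, 12, 0), SUNDAY, time(1, 0))
print(window_start, window_end)
```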

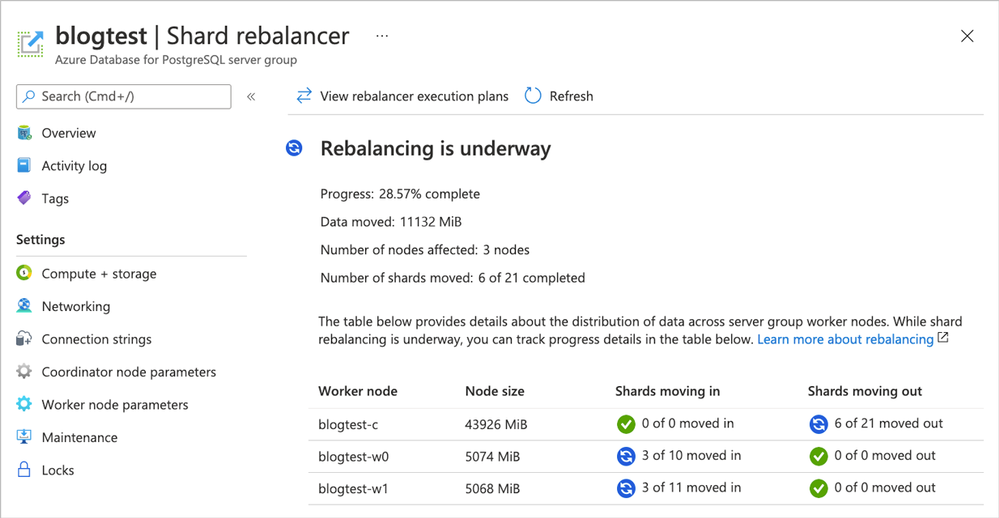

Take advantage of shard rebalancer recommendations & progress monitoring in the Azure portal

When you add a new node to your Hyperscale (Citus) cluster—or when your database has grown and the data distribution across nodes has become uneven—you will want to rebalance your shards. Shard rebalancing is the movement of shards between nodes in your Citus cluster, to make sure your database is spread evenly across all nodes.

Hyperscale (Citus) has had the shard rebalancer as one of its core features from the very beginning. Recently, we’ve added both shard rebalancing recommendations and progress tracking to the Azure portal.

Figure 1. Screenshot of the Azure portal and the Shard rebalancer screen for Hyperscale (Citus).

Figure 1. Screenshot of the Azure portal and the Shard rebalancer screen for Hyperscale (Citus).
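If you have not seen shard rebalancing before, the toy Python model below may help build intuition. It is not the algorithm the Citus shard rebalancer uses: it simply moves one shard at a time from the node with the most shards to the node with the fewest until the counts differ by at most one. Node names and shard ids are hypothetical.

```python
from typing import Dict, List, Tuple


def plan_rebalance(placements: Dict[str, List[int]]) -> List[Tuple[int, str, str]]:
    """Return (shard_id, source_node, target_node) moves that even out shard counts."""
    # Work on a copy so the caller's view of the cluster is left untouched.
    nodes = {node: list(shards) for node, shards in placements.items()}
    moves: List[Tuple[int, str, str]] = []
    if not nodes:
        return moves
    while True:
        busiest = max(nodes, key=lambda n: len(nodes[n]))
        idlest = min(nodes, key=lambda n: len(nodes[n]))
        if len(nodes[busiest]) - len(nodes[idlest]) <= 1:
            return moves  # as even as shard counts can get
        shard = nodes[busiest].pop()
        nodes[idlest].append(shard)
        moves.append((shard, busiest, idlest))


# Two full workers plus a newly added, empty third worker.
cluster = {"worker-1": [1, 2, 3, 4], "worker-2": [5, 6, 7, 8], "worker-3": []}
for shard_id, source, target in plan_rebalance(cluster):
    print(f"move shard {shard_id} from {source} to {target}")
```

In this toy run, the new worker ends up with two shards and the other two keep three each, which is the kind of outcome the recommendations in the portal are meant to help you reach.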

Ways to learn more about Hyperscale (Citus) and to try all of these new things

To figure out if Azure Database for PostgreSQL – Hyperscale (Citus) is right for you and your app, here are some ways to roll up your sleeves and get started. Pick what works best for you!

- Create a Hyperscale (Citus) server group on Azure to try it out – Basic or Standard tier.

- And if you don’t yet have an Azure subscription, just create a free Azure account first.

- If you don’t have a distributed dataset to play with, you can start with our step-by-step guide.

- Try some tutorials for multi-tenant SaaS and real-time analytics dashboards.

- Read the Hyperscale (Citus) docs on distributed data concepts, determining application type, or selecting the initial Hyperscale (Citus) server group size.

- Watch this ~15 min Hyperscale (Citus) demo from SIGMOD about scaling out Postgres to achieve high performance transactions & analytics.

If you need help figuring out whether Hyperscale (Citus) is a good fit for your workload, you can always reach out to us—the team that created Hyperscale (Citus)—via email at Ask AzureDB for PostgreSQL.

Oh, and if you want to stay connected, you can follow our @AzureDBPostgres account on Twitter. Plus, we ship a monthly technical Citus newsletter to our open source community.

Footnotes

[1] In the East US region on Azure, the cost of a Hyperscale (Citus) Basic tier with 2 vCores, 8 GiB total memory, and 128 GiB of storage on the coordinator node is $0.27/hour or ~$200/month. At $0.27 USD/hour, you can try it for ~8 hours and you’ll only pay $2 to $3 USD.
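As a quick sanity check on those numbers, here is the arithmetic in Python. The hourly rate comes from the footnote; the 730 hours per month figure is an assumption (8,760 hours in a year divided by 12), not something stated in this post.

```python
from decimal import Decimal

HOURLY_RATE_USD = Decimal("0.27")  # Basic tier rate quoted in the footnote
HOURS_PER_MONTH = 730              # assumption: average month, 8,760 hours / 12


def cost_usd(hours: int) -> Decimal:
    """Cost of running the quoted Basic tier configuration for a number of hours."""
    return HOURLY_RATE_USD * hours


print(cost_usd(8))                # an ~8 hour trial
print(cost_usd(HOURS_PER_MONTH))  # a full month
```

Eight hours comes to a little over $2, and a full month under the 730-hour assumption comes to roughly $197, which lines up with the ~$200/month figure.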
